It was AWS’ flagship conference and it didn’t disappoint, offering a sprawling slate of launches that ranged from new chips and autonomous agents to model tooling, cost controls, and even on‑premises systems. The through line: give customers control, performance, and choice rather than locking them into one way to build.

AI Agents Enter the Enterprise with New Autonomy

AWS went big on “agentic AI,” unveiling Frontier agents such as the autonomous coding agent Kiro, a security agent for code reviews, and a DevOps agent to prevent incidents downstream, along with a new collection of outbound command placement systems. The pitch is that such agents can learn team norms and run autonomously for hours or days, which puts them well beyond chat assistants and closer to hands‑off office automation.

AgentCore, AWS’s agent‑building platform, gained three additions: Policy, to enforce guardrails; verbose memory, so agents can log and remember user context; and 13 prebuilt evaluation systems that pressure‑test agent behavior.

That focus on evaluation and control is what enterprise CIOs have been demanding in analyst briefings from Gartner and IDC: predictable, auditable AI that can be governed.

Custom Silicon and Systems for AI Scale and Speed

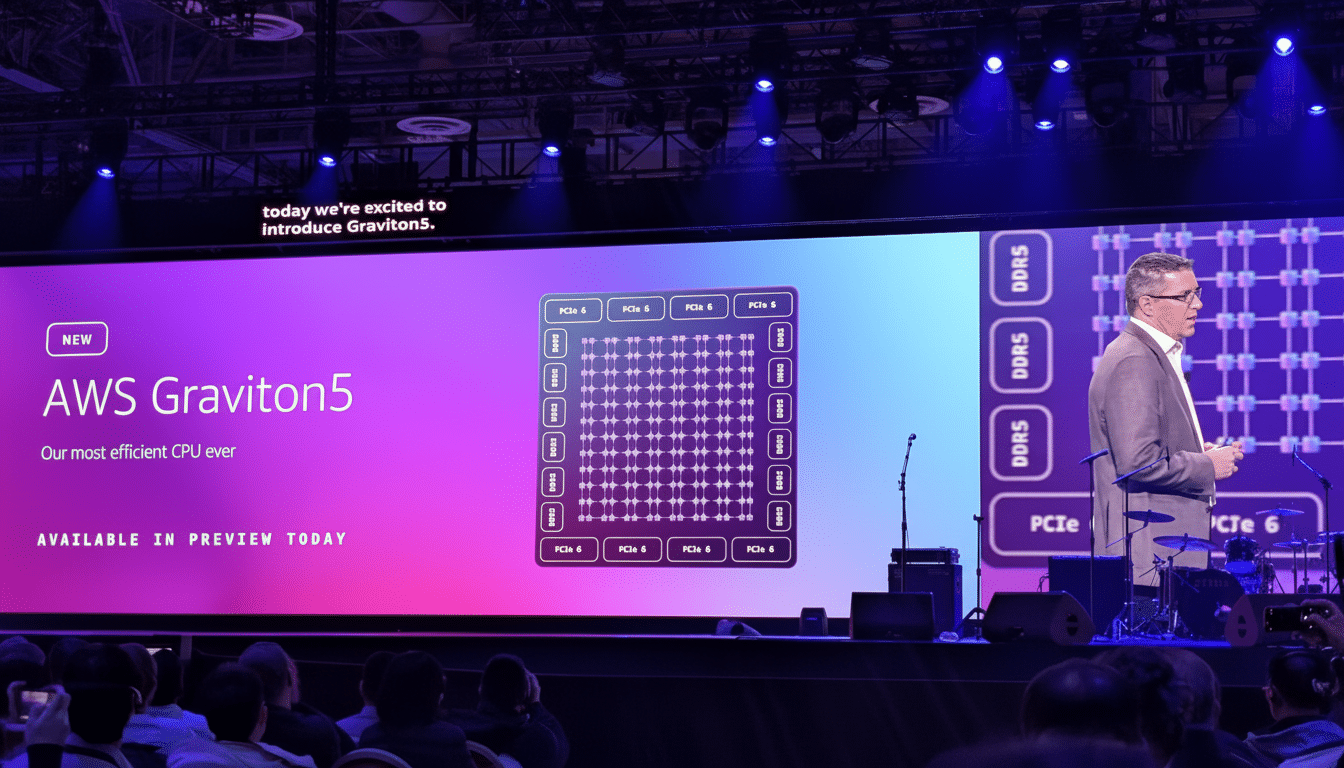

On the infrastructure side, AWS announced Graviton5, a 192‑core CPU designed to deliver higher performance per watt. AWS claimed that its denser layout cuts inter‑core communication latency by up to 33% and that it boosts bandwidth, targeting both general‑purpose compute and AI‑adjacent workloads that depend on fast data movement.

For accelerators, the new Trainium3 chip arrived alongside an UltraServer system that chains devices together for large‑scale training and high‑throughput inference. AWS quoted up to 4× performance gains for both training and inference and, compared with the previous generation, a claimed 40% reduction in energy use. Trainium4 is already in development and, notably, is being designed to interoperate with Nvidia hardware, a recognition that most customers will be running mixed fleets for a long time to come. Management also noted strong momentum in Trainium2 revenue, suggesting that custom silicon is becoming a real business rather than a side bet.

Models and Tooling Designed for Choice and Control

Four new models were added to the Nova model family, three text models and one text‑and‑image multimodal model, widening the range of latency, cost, and accuracy trade‑offs available to customers.

Nova Forge was introduced as a service that gives customers access to pre‑trained, mid‑trained, or post‑trained models, which they can then top off by training on their own proprietary data to close the gap between baseline and business‑specific performance.

For builders, Bedrock introduced Reinforcement Fine‑Tuning, in which teams select from a library of predetermined workflows or reward templates and leave it to Bedrock to orchestrate the end‑to‑end process. SageMaker got serverless model customization, so teams can begin tailoring models without choosing instance types or pre‑provisioning clusters, a not‑so‑subtle play for developer velocity and cost hygiene.

AI Factories and the Sovereignty of Data

AWS announced AI Factories to run its AI stack on‑premises within customer data centers. Designed with Nvidia and compatible with Nvidia GPUs as well as Trainium3, the systems are aimed at governments and regulated industries that need to process sensitive data securely in their own facilities. The takeaway here is simple: if data won’t go to the cloud, AWS will take the cloud to the data.

Savings Plans and the Startup Sweeteners

Amid the marquee features came a cost cut that drew applause: Database Savings Plans that can reduce eligible database bills by up to 35% in exchange for a one‑year commitment to an hourly spend level. The discount is applied automatically for supported services, and usage beyond the commitment is charged at on‑demand rates. It’s the type of pragmatism finance leaders have pushed for; as one popular cloud economist joked, “relentless customer pressure finally worked.”
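To make the commitment mechanics concrete, here is a rough sketch of how an hourly bill might work out under a plan like this. It is a simplified model, not AWS’s billing logic: it assumes a single flat discount rate (the article only says “up to 35%”), a commitment expressed in discounted dollars per hour, and a commitment that is paid whether or not it is fully used. The function names and dollar figures are hypothetical.

```python
def hourly_bill(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Estimate one hour's bill under a commitment-based discount.

    Simplified, assumed model (not an official AWS formula):
    - on_demand_usage: what the hour's usage would cost at on-demand rates
    - commitment: committed spend per hour, in discounted dollars
    - discount: flat discount rate on covered usage, e.g. 0.35 for 35%
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    if on_demand_usage < 0 or commitment < 0:
        raise ValueError("usage and commitment must be non-negative")

    # On-demand-equivalent usage that the commitment pays for.
    covered = commitment / (1 - discount)
    # Anything above the covered amount is billed at on-demand rates.
    overage = max(0.0, on_demand_usage - covered)
    # The commitment is owed even when usage falls short of it.
    return commitment + overage


def effective_savings(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Fraction saved versus paying on-demand for everything (negative if over-committed)."""
    if on_demand_usage <= 0:
        raise ValueError("on_demand_usage must be positive")
    return 1 - hourly_bill(on_demand_usage, commitment, discount) / on_demand_usage


if __name__ == "__main__":
    # Hypothetical figures: a $6.50/hour commitment at a 35% discount.
    for usage in (5.0, 10.0, 12.0):
        bill = hourly_bill(usage, 6.5, 0.35)
        saved = effective_savings(usage, 6.5, 0.35)
        print(f"usage ${usage:.2f}/h -> bill ${bill:.2f}/h, savings {saved:.1%}")
```

The point of the sketch is that the headline discount is only reached when usage matches the commitment. Under-use means paying for capacity that sits idle, and overage dilutes the savings because it is billed at full on‑demand rates.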

To capture developers’ attention, AWS will offer a year’s worth of Kiro Pro+ credits to eligible early‑stage startups in certain countries. It’s a straightforward gambit in a crowded AI coding market, where the first tool a founding team adopts often becomes the incumbent.

Customer Proof and Cultural Signals from re:Invent

Lyft was one of the clearest signs of business impact. Using Anthropic’s Claude via Bedrock to drive a customer‑support agent, the company saw average resolution time decrease by 87%, and driver use of the agent nearly doubled this year. That sort of lift, if repeatable at scale, is the ROI that CFOs need to see before greenlighting broader rollouts.

On stage, AWS executives painted agents as the next evolution of assistants, noting that natural language can now be used to specify goals, after which systems plan, call tools, write code, and act. The closing keynote also served as a cultural moment: the longtime technologist Werner Vogels gave his last re:Invent keynote, telling developers not to be scared of becoming obsolete but to transform themselves as tasks are automated. The subtext for enterprises is straightforward: get your teams ready, encode guardrails, and measure performance, because agentic systems are moving from demos to production.

Why It Matters Now for Enterprises and Developers

From silicon to software to services, the 2025 lineup from AWS is a long‑term bet that tries to meet customers where they are: a mix of GPUs and custom chips, off‑the‑shelf models with some domain tuning, and governance woven through every layer. With cloud and AI investment still accelerating, per recent studies from Gartner and IDC, the winners will be platforms that enable demonstrable progress without requiring trade‑offs in existing IT control, cost discipline, or pace of innovation. This re:Invent makes AWS’ bet clear: autonomous agents and flexible infrastructure are how enterprises get there.