Anthropic has released Claude Haiku 4.5, the newest version of its smallest model, which the company says delivers Sonnet-class performance at a fraction of the cost and latency. According to Anthropic, Haiku 4.5 costs roughly one-eighth as much as a typical mid-tier model and, in internal tests, runs more than twice as fast. It’s rolling out widely across Anthropic’s free and paid tiers.

Why small, fast models matter for real-time AI

Small models are the backbone of responsive chat, live coding, and cheap automation. They keep server loads in check, power free product tiers, and support parallel “agent swarms” that split work into fast, specialized steps. Anthropic is betting on that pattern: pair a larger planner such as Sonnet with multiple Haiku 4.5 sub-agents that complete tasks in parallel, then fold the results back together.

That’s a pattern we see in many AI teams: reserve heavyweight reasoning for thorny problems and hand parsing, retrieval, or UI control to lightweight executors. Done well, this lowers total cost of ownership and improves real-time performance without sacrificing output quality.
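
To make the cost argument concrete, here is a minimal Python sketch of routing work by difficulty. Everything in it is hypothetical: the tier names, the set of “light” task kinds, and the 0.125 relative cost, which loosely borrows the one-eighth figure Anthropic cites purely for illustration. It is not Anthropic’s API or pricing.

```python
from dataclasses import dataclass


# Hypothetical model tiers; names and relative costs are illustrative only.
@dataclass(frozen=True)
class Tier:
    name: str
    relative_cost: float  # cost per request relative to the heavy tier


HEAVY = Tier("planner-large", 1.0)
LIGHT = Tier("executor-small", 0.125)  # assumed ratio, not a published price

# Task kinds assumed to be mechanical enough for the lightweight executor.
LIGHT_TASKS = {"parse", "retrieve", "ui_action"}


def route(task_kind: str) -> Tier:
    """Send mechanical work to the light tier and everything else to the heavy tier."""
    return LIGHT if task_kind in LIGHT_TASKS else HEAVY


def relative_spend(tasks: list[str]) -> float:
    """Total spend as a fraction of sending every task to the heavy tier."""
    routed = sum(route(t).relative_cost for t in tasks)
    return routed / (len(tasks) * HEAVY.relative_cost)


workload = ["parse", "retrieve", "parse", "ui_action", "plan", "debug"]
print(relative_spend(workload))  # about 0.42 of the all-heavy baseline
```

With four of six tasks sent to the light tier, this made-up workload costs roughly 0.42 of the all-heavy baseline. Real savings depend on actual prices, token counts, and how often light-tier output has to be redone by the heavier model.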

Haiku 4.5 benchmarks and what the results indicate

Anthropic says Haiku 4.5 scores 73% on SWE-bench Verified and 41% on Terminal-Bench, which puts it close to Sonnet 4 and within reach of the peers the company compares it against, GPT-5 and Gemini 2.5. Anthropic’s release reports similar tiering on tests of tool use, computer use, and visual reasoning.

SWE-bench Verified is a human-validated subset of SWE-bench, a benchmark originally built by researchers at Princeton and collaborators. It checks whether models can generate working patches for real GitHub issues, which makes it an increasingly credible proxy for real-world coding. Terminal-Bench emphasizes command-line skills and step-by-step accuracy. The numbers still need independent replication, but the direction is clear: Haiku is closing in on mid-tier reasoning within a latency and price band suited to high-traffic products.
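
A simplified sketch of how a SWE-bench-style score is tallied may help. As we understand the public evaluation harness, a task counts as resolved only if the tests the real fix was meant to make pass now pass (FAIL_TO_PASS) and the previously passing tests still pass (PASS_TO_PASS). The class and function names below are our own illustration; the real harness also builds each repository’s environment and runs the tests in containers.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    # Test outcomes after applying the model's patch (True = passed).
    fail_to_pass: dict[str, bool]  # failed before the reference fix; should now pass
    pass_to_pass: dict[str, bool]  # passed before; must keep passing (no regressions)


def is_resolved(r: TaskResult) -> bool:
    """Resolved only if every target test passes and nothing regresses."""
    return all(r.fail_to_pass.values()) and all(r.pass_to_pass.values())


def resolve_rate(results: list[TaskResult]) -> float:
    """Fraction of tasks resolved; a reported 73% means 0.73 of tasks met this bar."""
    return sum(is_resolved(r) for r in results) / len(results)


tasks = [
    TaskResult({"test_bug": True}, {"test_old": True}),   # fixed, no regression
    TaskResult({"test_bug": True}, {"test_old": False}),  # fixed the bug, broke something else
    TaskResult({"test_bug": False}, {"test_old": True}),  # bug still present
]
print(resolve_rate(tasks))  # one of three tasks counts as resolved
```

The strict all-or-nothing rule is why the benchmark is a demanding proxy: a patch that fixes the reported bug but breaks an unrelated test earns no credit.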

Why it’s the age of scalable, agentic AI workflows

Anthropic’s product vision positions Haiku 4.5 as the execution engine in agentic systems: a fast, cost-effective worker that handles many small jobs while a stronger model decides what to do and then verifies the work. Mike Krieger, Anthropic’s chief product officer, has described this split as opening new ways to deploy in production, giving teams the ability to “compile their toolbox and pick which models they want based on speed, intelligence, and cost,” he said.

In practice, Sonnet might plan a sequence of steps (triage a ticket, design a fix, write tests) and then dispatch Haiku instances to run commands, edit files, or call APIs. Because each Haiku call carries less inference overhead, developers can run several agents in parallel without a spike in latency or spend.
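
The plan, fan-out, and verify loop described here can be sketched with Python’s asyncio. The `plan`, `execute`, and `verify` functions are hypothetical stubs that only simulate the control flow and latency; a real system would call the planner model, the executor models, and their tools in their place. The concurrency cap is an assumed knob for bounding spend, not an Anthropic feature.

```python
import asyncio


# Hypothetical stubs: a real system would call a large planner model and
# several small executor models here. These only simulate the control flow.
async def plan(ticket: str) -> list[str]:
    """Planner: break a ticket into independent executor steps (hard-coded here)."""
    return [f"{ticket}: run tests", f"{ticket}: edit file", f"{ticket}: call API"]


async def execute(step: str) -> str:
    """Executor: perform one narrow step; the sleep stands in for model and tool latency."""
    await asyncio.sleep(0.01)
    return f"done({step})"


async def verify(results: list[str]) -> bool:
    """Planner again: check the combined work (here, only that every step reported done)."""
    return all(r.startswith("done(") for r in results)


async def handle(ticket: str, max_parallel: int = 3) -> tuple[list[str], bool]:
    steps = await plan(ticket)
    limit = asyncio.Semaphore(max_parallel)  # cap concurrent executors to bound spend

    async def run(step: str) -> str:
        async with limit:
            return await execute(step)

    # Fan out to executors in parallel, then fold the results back together.
    results = list(await asyncio.gather(*(run(s) for s in steps)))
    return results, await verify(results)


if __name__ == "__main__":
    results, ok = asyncio.run(handle("TICKET-1"))
    print(ok, results)
```

`asyncio.gather` returns results in the order the steps were submitted, so the fold-back step stays deterministic even though the executors may finish in any order.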

Early fit for coding assistants and practical product ops

Expect early interest from software development, where tools like Claude Code already compete on response time. Coding assistants live or die by time-to-first-token and throughput, so a model that keeps accuracy while cutting wait times is immediately valuable. In materials shared by Anthropic, Zencoder CEO Andrew Filev noted that when execution is both fast and cheap, new use cases open up for end users, such as inline refactors, shell automation, and continuous codebase hygiene.

Outside IDEs, Haiku’s speed profile also suits customer-support deflection, lightweight analytics, RAG pipelines, and UI automation. In these settings throughput and cost per request are king, and a model that reliably calls tools or drives interfaces can free humans for higher-order work.

Placing Haiku 4.5 in a stacked lightweight division

Haiku 4.5 comes on the heels of Anthropic’s Sonnet 4.5 and Opus 4.1 updates and effectively rounds out a lineup that maps neatly onto planner–executor architectures.

It also answers rising competition at the low end, from OpenAI’s mini-tier models and Google’s Gemini Flash variants to lightweight open-weight models such as Llama-based 8–13B systems fine-tuned for tools and code. Independent evaluation efforts such as LMSYS and MLCommons have shown that small models can punch above their size class when tuned for latency and tool use. Anthropic is betting that real-world, agent-driven workloads, particularly high-volume deployments, will reward that balance more than peak benchmark scores.

Availability, rollout details, and what to watch next

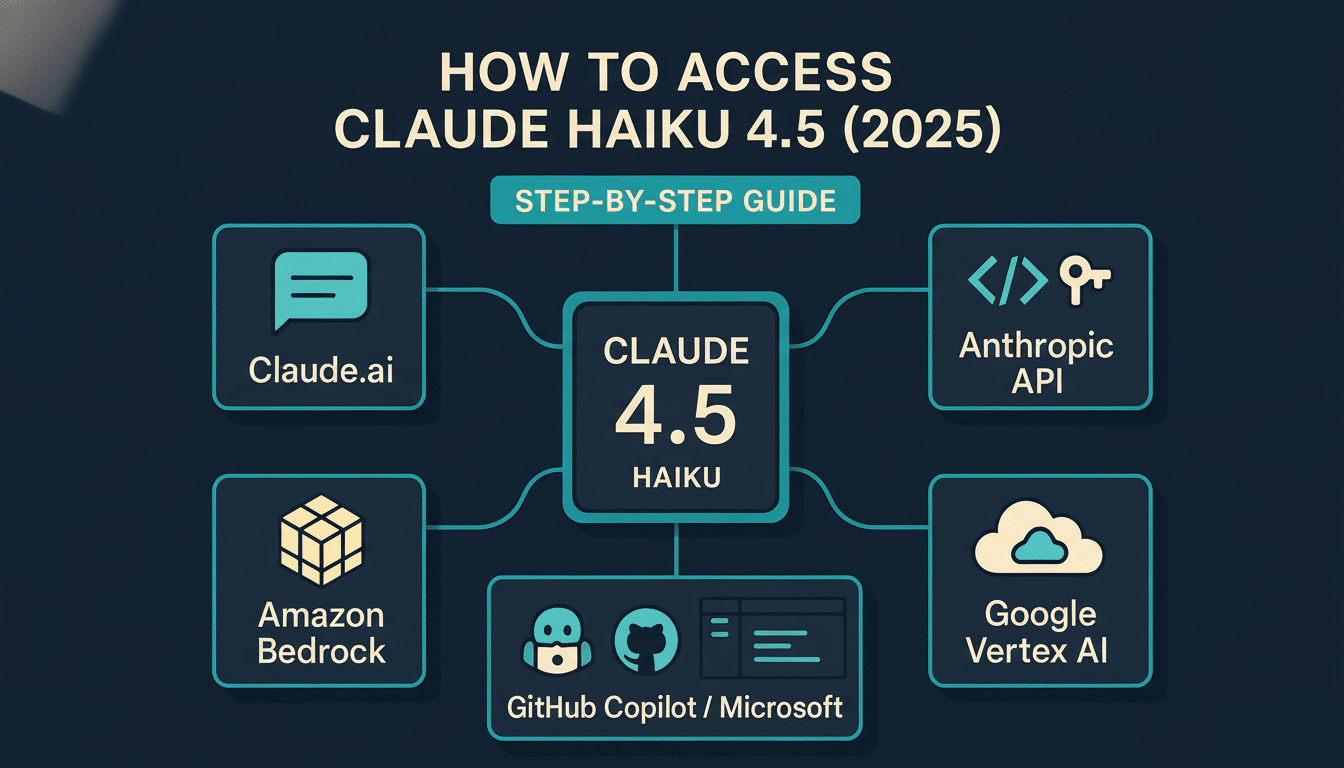

Anthropic says Haiku 4.5 is available now, including on the free tier, a move likely to drive adoption among early-stage startups and teams experimenting with agentic patterns. Watch for external reproductions of the reported SWE-bench Verified and Terminal-Bench scores, and for head-to-head latency and cost comparisons as developers test Haiku against other compact models.

If the company’s claims hold up in production, Haiku 4.5 could signal a broader shift: away from monolithic, do-everything models and toward orchestrated systems in which a fast, cheap executor is the keystone of practical AI.