AI training clusters are running into a thermal wall. Next-gen racks are expected to draw up to 600 kW, well beyond what a single ultra-fast EV charger delivers, and cooling looms as the gating factor for performance and deployment. Now a young company, Alloy Enterprises, claims it can buy the industry more time with a deceptively simple fix: seamless copper “metal stacks” that pull heat off GPUs and their immediate neighbors faster and more reliably than today’s cold plates.

Why AI racks are overheating in modern data centers

As accelerators grow more powerful and interconnect bandwidth climbs, rack densities have crossed the limits of traditional air cooling. ASHRAE guidance for data centers points operators toward direct-to-chip liquid cooling once rack loads rise above a few dozen kilowatts, and hyperscale AI gear is blasting through that threshold. The International Energy Agency suggests global data center electricity demand could reach 620 to 1,050 TWh by 2026, with AI leading the charge, a timely reminder that every watt saved on cooling counts at grid scale.

Hot spots remain even in systems with liquid cooling. Peripherals — high-bandwidth memory, voltage regulators, NICs — can represent around 20 percent of a server’s thermal burden. Squeezing effective cold plates into those tight spaces without introducing additional leak risk or pumping penalties has been a sticking point.
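To put these loads in perspective, the sensible-heat relation Q = m·cp·ΔT gives a rough sense of how much coolant a rack needs. The sketch below is a back-of-the-envelope estimate, not a design calculation: the 10 K coolant temperature rise, the plain-water properties, and the function name `coolant_flow_l_per_min` are assumptions for illustration, and applying the roughly 20 percent peripheral share to a whole 600 kW rack is a simplification.

```python
# Rough coolant flow estimate from Q = m_dot * cp * delta_T.
# All inputs other than the 600 kW and 20 percent figures are assumptions.

WATER_CP_J_PER_KG_K = 4186.0    # specific heat of plain water
WATER_DENSITY_KG_PER_L = 1.0    # approximate density of water


def coolant_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    """Volumetric flow (L/min) needed to absorb heat_w with a delta_t_k rise."""
    if heat_w < 0 or delta_t_k <= 0:
        raise ValueError("heat must be non-negative and delta_t positive")
    mass_flow_kg_per_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


RACK_HEAT_W = 600_000.0      # rack power cited in the article
PERIPHERAL_SHARE = 0.20      # approximate peripheral share cited in the article
ASSUMED_DELTA_T_K = 10.0     # hypothetical coolant temperature rise

rack_flow = coolant_flow_l_per_min(RACK_HEAT_W, ASSUMED_DELTA_T_K)
peripheral_flow = coolant_flow_l_per_min(
    RACK_HEAT_W * PERIPHERAL_SHARE, ASSUMED_DELTA_T_K
)

print(f"Whole rack:  {rack_flow:.0f} L/min")
print(f"Peripherals: {peripheral_flow:.0f} L/min")
```

Under these assumptions the rack needs on the order of 860 L/min of water, with roughly 172 L/min of that attributable to peripherals. A glycol mix or a smaller temperature rise would push both numbers higher.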

Inside the metal stack that cools AI chips and servers

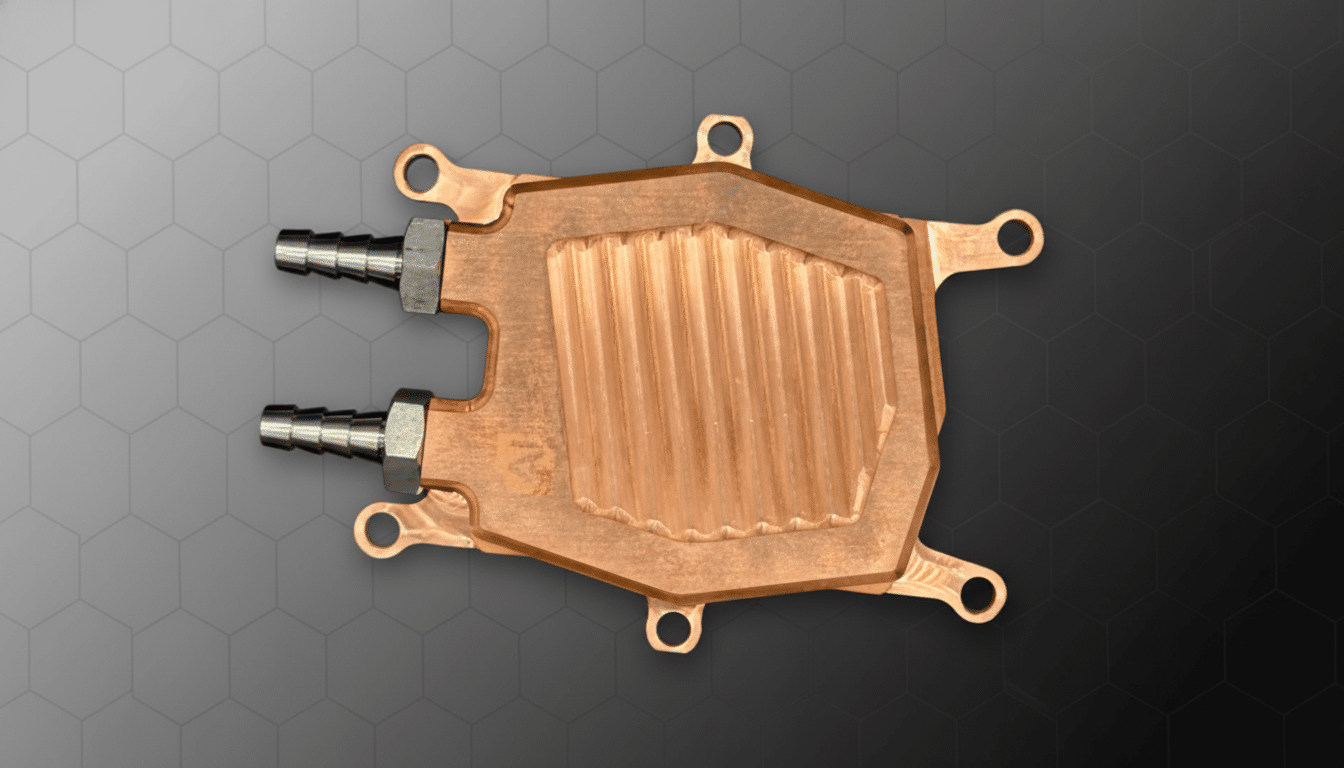

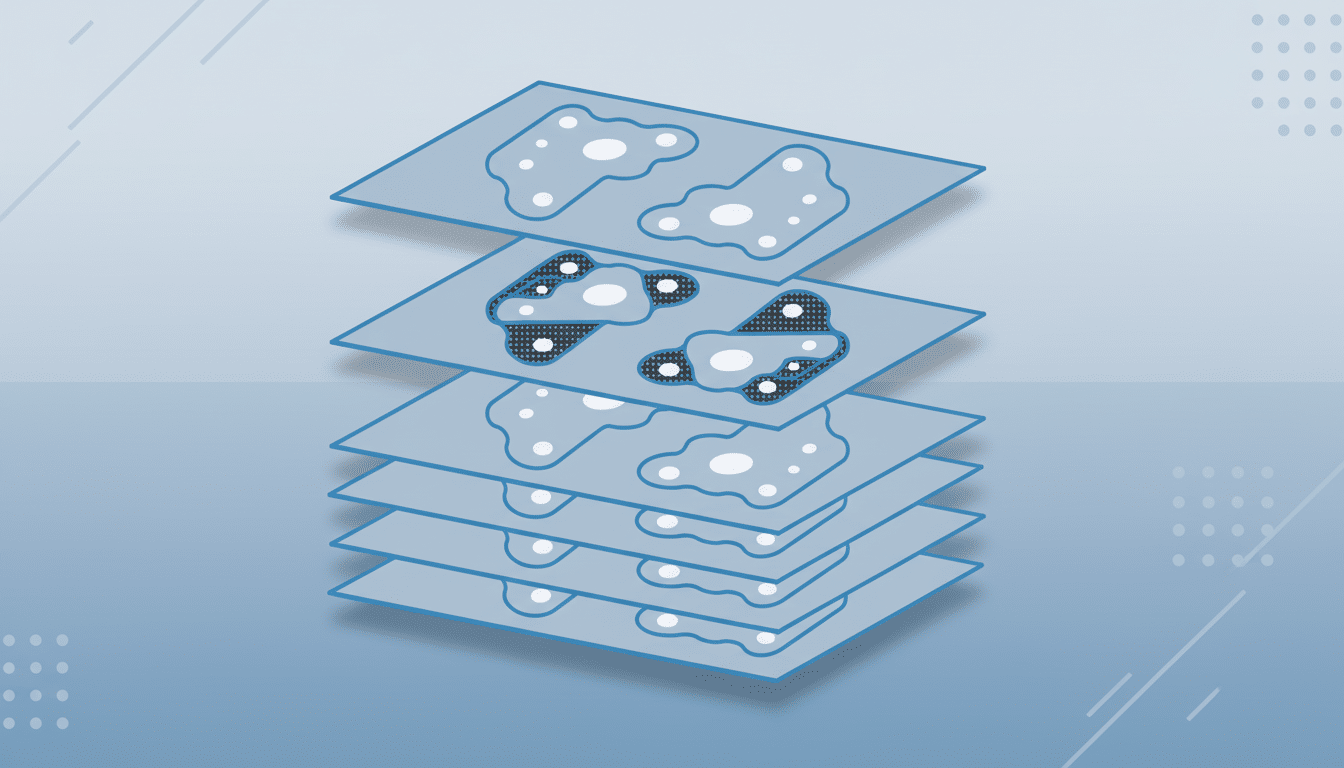

Alloy’s process resembles additive manufacturing, the family of technologies better known as 3D printing, but it is not 3D printing. Features are laser-cut into two thin sheets of copper, a bonding inhibitor is applied wherever internal channels need to stay open, and the stack is then diffusion-bonded in an oven under heat and pressure. The result is a monolithic plate with no brazed joints, welds, or seams, and with internal microchannels and manifolds built directly into the metal.

Finer features open up more surface area and allow more finely tuned flow. Alloy claims it can make channels about 50 microns wide, thinner than a typical human hair, which helps keep temperatures in check without driving pressure drop too high. Because the plate is fully dense copper, it avoids the porosity and mechanical variability that affect some printed parts, and it can more easily tolerate the higher pressures common in facility liquid loops.
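A simple geometric sketch shows why narrower channels add surface area. It assumes a hypothetical plate cross-section in which every wall is as wide as the channel beside it and channel depth is fixed; the 20 mm plate width, 1 mm depth, and 500-micron comparison channel are illustrative values, not Alloy's figures, and pressure drop is not modeled.

```python
# Compare total wetted perimeter for coarse vs. fine parallel channels.
# Hypothetical geometry: wall width equals channel width, fixed channel depth.


def wetted_perimeter_mm(
    plate_width_um: int, channel_width_um: int, channel_depth_um: int
) -> float:
    """Total wetted perimeter (mm) of all channels in one plate cross-section."""
    if min(plate_width_um, channel_width_um, channel_depth_um) <= 0:
        raise ValueError("dimensions must be positive")
    pitch_um = 2 * channel_width_um               # one channel plus one wall
    channel_count = plate_width_um // pitch_um    # whole channels that fit
    per_channel_um = 2 * (channel_width_um + channel_depth_um)
    return channel_count * per_channel_um / 1000.0


PLATE_WIDTH_UM = 20_000     # assumed 20 mm wide cooling zone
CHANNEL_DEPTH_UM = 1_000    # assumed 1 mm deep channels

coarse = wetted_perimeter_mm(PLATE_WIDTH_UM, 500, CHANNEL_DEPTH_UM)
fine = wetted_perimeter_mm(PLATE_WIDTH_UM, 50, CHANNEL_DEPTH_UM)

print(f"500 micron channels: {coarse:.0f} mm of wetted perimeter")
print(f" 50 micron channels: {fine:.0f} mm of wetted perimeter")
print(f"Ratio: {fine / coarse:.1f}x")
```

In this toy geometry the 50-micron channels expose about seven times the wetted perimeter of the 500-micron ones. Real designs trade that gain against the extra pumping pressure that narrow channels demand.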

Thermal gains and system effects from copper plates

Alloy claims the plates deliver roughly 35 percent better thermal performance than incumbent designs, a significant margin given the tight thermal budgets of modern AI servers. Lower device-to-coolant resistance can mean cooler junction temperatures and more sustained boost clocks, or warmer coolant set points at the same chip temperature. Either way, operators can reclaim efficiency that would otherwise be lost to throttling and over-provisioned pumping.
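The trade-off can be sketched with the standard steady-state relation T_junction = T_coolant + P × R_th. The example below reads the 35 percent figure as a 35 percent reduction in device-to-coolant thermal resistance, which is an assumption about what the claim means; the chip power, baseline resistance, and coolant temperature are hypothetical values chosen only to make the arithmetic easy to follow.

```python
# Steady-state junction temperature: T_j = T_coolant + P * R_th.
# Interpreting "35 percent better" as 35 percent lower R_th is an assumption.


def junction_temp_c(coolant_c: float, power_w: float, r_th_k_per_w: float) -> float:
    """Junction temperature (deg C) for a given coolant temperature and load."""
    return coolant_c + power_w * r_th_k_per_w


CHIP_POWER_W = 1000.0        # hypothetical accelerator power
BASELINE_R_TH = 0.050        # hypothetical baseline resistance, K/W
COOLANT_C = 30.0             # hypothetical coolant supply temperature
IMPROVEMENT = 0.35           # figure cited in the article

improved_r_th = BASELINE_R_TH * (1.0 - IMPROVEMENT)

baseline_tj = junction_temp_c(COOLANT_C, CHIP_POWER_W, BASELINE_R_TH)
improved_tj = junction_temp_c(COOLANT_C, CHIP_POWER_W, improved_r_th)

# Alternatively, hold the junction at the baseline temperature and see
# how warm the coolant could run with the lower resistance.
warmer_coolant_c = baseline_tj - CHIP_POWER_W * improved_r_th

print(f"Baseline junction: {baseline_tj:.1f} C")
print(f"Improved junction: {improved_tj:.1f} C")
print(f"Coolant at equal junction temperature: {warmer_coolant_c:.1f} C")
```

With these made-up inputs the junction runs about 17.5 degrees cooler, or the coolant can run about 17.5 degrees warmer for the same junction temperature. The real benefit depends on the actual resistances involved.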

Equally important is reliability. Traditional cold plates are generally produced in two halves that are then joined by brazing or sintering, creating a seam that is susceptible to failure under pressure and thermal cycling. Diffusion bonding, a process commonly used in aerospace, produces a seamless block with no such leak path. That is of particular importance to operators moving thousands of nodes to direct liquid cooling through OCP (Open Compute Project) and vendor reference designs.

Because Alloy’s process can produce thin, high-strength geometries, the plates can be nested around memory stacks, VRMs, and networking ASICs. Attacking those “secondary” heat sources can reduce the residual cooling demands of DLC racks, trim fan power, and improve PUE. In dense clusters, the cumulative effect across a hall can be significant.
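PUE (power usage effectiveness) is total facility power divided by IT power, so lower is better. The sketch below shows how trimming cooling overhead moves the ratio; every number in it is hypothetical and chosen only to illustrate the arithmetic, not to describe any real facility or Alloy deployment.

```python
# PUE = total facility power / IT equipment power (lower is better).
# All loads below are hypothetical illustration values.


def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power usage effectiveness for the given IT load and overheads."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


IT_LOAD_KW = 1000.0          # hypothetical IT load for a data hall
OTHER_OVERHEAD_KW = 100.0    # hypothetical power distribution and lighting

pue_before = pue(IT_LOAD_KW, cooling_kw=300.0, other_overhead_kw=OTHER_OVERHEAD_KW)
pue_after = pue(IT_LOAD_KW, cooling_kw=200.0, other_overhead_kw=OTHER_OVERHEAD_KW)

print(f"PUE before: {pue_before:.2f}")
print(f"PUE after:  {pue_after:.2f}")
```

In this example, cutting cooling power by a third takes PUE from 1.40 to 1.30, which across a large hall translates into a meaningful amount of energy.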

From prototype to production at Alloy Enterprises

The manufacturing process begins with commodity copper coils that are prepped, cut, laser-patterned, coated with inhibitor, stacked, and fed into a diffusion bonding press.

Alloy places the cost somewhere between conventional machining and 3D printing, betting that repeatability and throughput will matter more than exotic geometries as AI server racks multiply.

Alloy designs side by side with customers: they specify thermal limits, pressure, or envelope constraints, and Alloy’s software finds channel architectures that fit the process window. The goal is to retrofit into current server sleds and manifolded DLC networks without requiring facility-level changes to pumps or coolant chemistry. Copper’s high thermal conductivity and corrosion resistance in typical water/glycol loops remain a plus, though operators will continue to rely on ASHRAE liquid cooling classes and water treatment to manage erosion and galvanic risks.

Alloy says it is working with large data center and server OEMs, but it has not named any.

That is how this market tends to move: suppliers prove out parts on a small number of boards, run accelerated life tests for pressure and thermal cycling, and then qualify for multiyear programs. Efforts such as ARPA-E’s COOLERCHIPS and hyperscaler-backed OCP workstreams show how closely the industry is now scrutinizing component-level thermal resistances and leak tolerances.

The competitive landscape for high-density cooling

Direct-to-chip plates compete with immersion cooling and rear-door heat exchangers, each of which carries trade-offs. Immersion provides excellent heat transfer, but it is difficult to service and raises material compatibility issues. Rear-door units help most at modest densities but struggle to keep up as racks push into triple-digit kilowatts. By contrast, microchannel plates can fit into today’s server form factors and rack manifolds, minimizing deployment friction while raising the thermal ceiling.

No single technology is going to tame a 600 kW rack on its own. But if Alloy’s metal stacks make good on their promise and deliver performance and reliability at scale, they could become a foundational building block for the AI era: a quiet, copper-first answer to one of computing’s loudest problems.