For a year, the clear winners of the AI boom seemed to be the labs training ever-bigger foundation models. Now a quieter reality is setting in: the money may pool downstream, inside the apps and workflows customers actually use, while the model makers become commodity suppliers, like growers selling beans to Starbucks.

From foundations to features

Base pretraining still matters, but the edge has shifted toward post-training, retrieval and product fit. Teams are finding that domain-specific tuning, thoughtful guardrails and smart UX often beat raw model scale on the outcomes buyers actually care about: accuracy on their own data, auditability and speed.

The shift shows up in the tooling stack. Frameworks that abstract away the model, such as orchestration layers or “model routers,” make it trivial to swap vendors without end users noticing. When switching is cheap, moats built on model performance erode quickly.
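To make the router idea concrete, here is a minimal Python sketch of a cost-aware router. It assumes every vendor sits behind the same simple `complete(prompt)` interface and that each model has been scored with your own evals; the provider names, prices and quality scores are hypothetical, and the lambdas stand in for real vendor SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Provider:
    name: str                       # hypothetical label, not a real product
    price_per_1k_tokens: float      # hypothetical USD price per 1,000 tokens
    quality: float                  # score from your own evals, 0..1 (assumed)
    complete: Callable[[str], str]  # stand-in for a vendor SDK call


class ModelRouter:
    """Send each request to the cheapest provider that clears a quality bar."""

    def __init__(self, providers: List[Provider], min_quality: float) -> None:
        self.providers = providers
        self.min_quality = min_quality

    def pick(self) -> Provider:
        eligible = [p for p in self.providers if p.quality >= self.min_quality]
        if not eligible:
            raise ValueError("no provider meets the quality bar")
        return min(eligible, key=lambda p: p.price_per_1k_tokens)

    def complete(self, prompt: str) -> str:
        return self.pick().complete(prompt)


if __name__ == "__main__":
    providers = [
        Provider("closed-frontier", 10.0, 0.92, lambda p: f"[closed] {p}"),
        Provider("open-weights", 0.6, 0.88, lambda p: f"[open] {p}"),
    ]
    router = ModelRouter(providers, min_quality=0.85)
    print(router.complete("Summarize this ticket"))  # → [open] Summarize this ticket
```

In this sketch, swapping vendors is a change to the `providers` list or the quality bar, not to application code, which is exactly why performance-based moats are fragile.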

Commodity models, premium interfaces

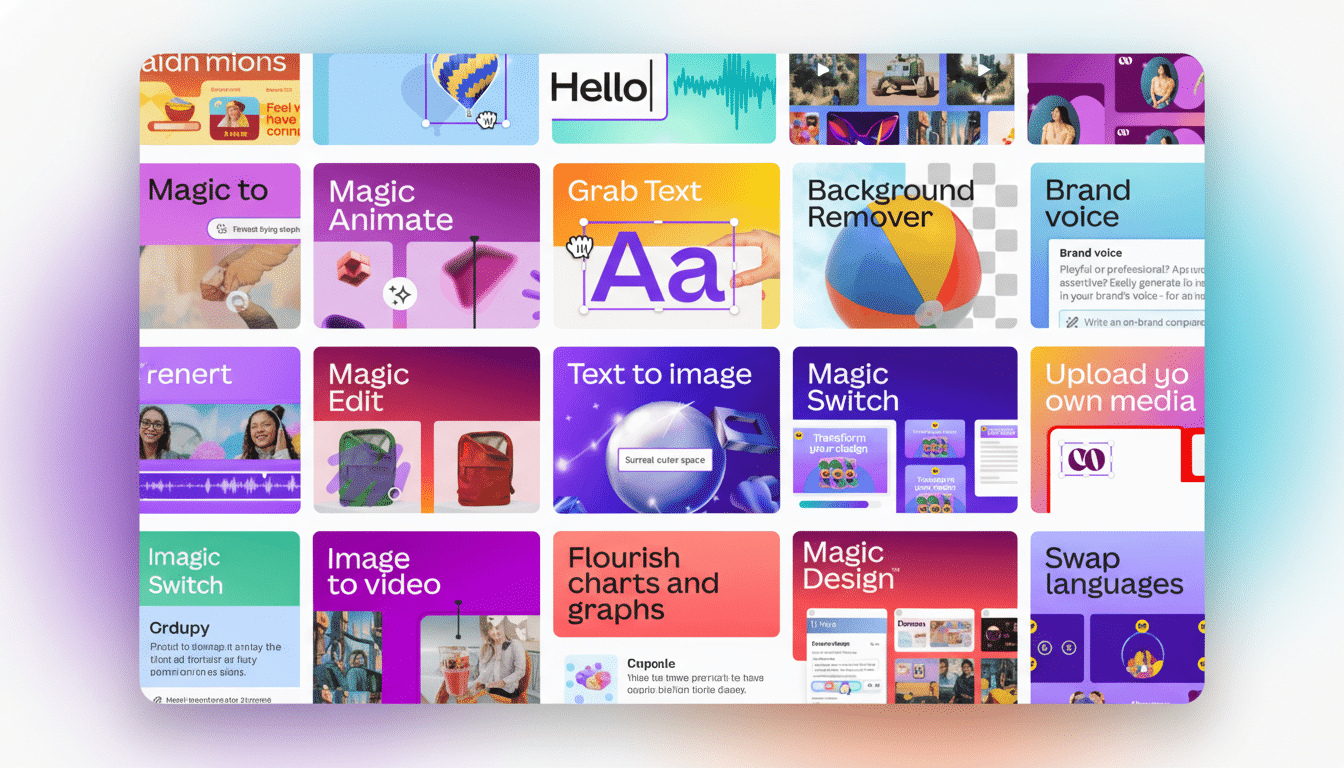

We are already seeing this in the market. GitHub Copilot, a Microsoft product, captures both subscription revenue and developer mindshare even though its code suggestions come from models it did not build itself. Canva’s Magic Studio, Shopify’s AI assistants and helpdesk copilots from the biggest SaaS vendors all mix and match providers behind the scenes. Brand and pricing power sit where the workflows are.

Open source has accelerated this dynamic. According to the Stanford AI Index, state-of-the-art open models have begun closing large gaps with top proprietary systems on benchmark tasks. Open-weight models now rank among the top tier on leaderboards such as LMSYS’s Chatbot Arena, particularly for code and reasoning. When ‘good enough’ is abundant, premium pricing gets harder to justify.

Switching costs are collapsing

APIs have converged, prices keep dropping and inference optimization is advancing rapidly. Even after aggressive industry-wide price cuts, notably on GPT-4 Turbo and the Claude 3 family, developers can still shave double-digit percentages off their cost per 1,000 tokens by rerouting traffic to a lower-cost endpoint or by quantizing an open model and running it on specialized hardware. In short, lock-in has become optional.
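The savings arithmetic is easy to check. This minimal sketch computes a blended cost per 1,000 tokens when a share of traffic moves from a premium endpoint to a cheaper one. The prices are hypothetical placeholders, not quotes from any vendor, and the calculation ignores one-off costs such as quantization work or new hardware.

```python
from typing import Dict


def blended_cost_per_1k(prices: Dict[str, float], shares: Dict[str, float]) -> float:
    """Traffic-weighted average cost per 1,000 tokens; shares must sum to 1."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(prices[name] * share for name, share in shares.items())


def savings_pct(before: float, after: float) -> float:
    """Percentage reduction from `before` to `after`."""
    return 100.0 * (before - after) / before


# Hypothetical prices in USD per 1,000 tokens (illustrative only).
PRICES = {"premium": 10.0, "budget": 1.0}

if __name__ == "__main__":
    before = blended_cost_per_1k(PRICES, {"premium": 1.0})
    # Reroute 40% of traffic to the budget endpoint.
    after = blended_cost_per_1k(PRICES, {"premium": 0.6, "budget": 0.4})
    print(f"{before:.2f} -> {after:.2f} per 1K tokens, {savings_pct(before, after):.1f}% saved")
    # → 10.00 -> 6.40 per 1K tokens, 36.0% saved
```

Under these assumed prices, moving 40% of traffic to an endpoint at a tenth of the price cuts the blended bill by about 36%, the kind of double-digit saving described above.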

The hardware story reinforces this. Hyperscalers have announced record capex for AI data centers, while chipmakers release a flood of new accelerators. With scale and better inference software, the marginal cost of serving a token keeps falling. Without a structural advantage, that slide usually ends in commodity economics.

Where the margins go

Margins migrate to bottlenecks, and the tightest bottlenecks in AI are not model weights but proprietary data, distribution and trust. Businesses pay for compliance guarantees, domain accuracy and integrations with systems of record. That is why vertical players in finance, healthcare and customer service are winning lucrative contracts tied to outcomes (lower handle time, higher conversion) rather than tokens.

The parallel with cloud computing is telling. Databases and analytics products proved durable not because they ran on bespoke silicon but because they solved data governance, performance and usability for real workloads. In AI, the equivalents are retrieval pipelines, evaluation harnesses, layered safety defenses and human-in-the-loop tools that turn an off-the-shelf model into a reliable teammate.

Why ‘coffee bean’ risk is real

Training frontier models is staggeringly expensive. The AI Index puts estimated training costs for state-of-the-art systems in the nine-figure range, and the compute they require has been doubling roughly every few months. With customers treating models as commodity ingredients and open source closing the quality gap, foundation labs risk carrying the capex while others capture the brand and the gross margin.

A memo leaked from a leading search company in 2023 crystallized the fear: “We have no moat.” That was an exaggeration, but the direction is clear. If everyone has access to great beans, the cafe that curates a magical experience wins the line out the door.

What might save the giants

The biggest model makers still have real levers. Consumer scale counts: products with large daily active user bases can build network effects through memory, personalization and plugin ecosystems. Deep integration with their cloud platforms (identity, security, billing) creates operational moats that startups struggle to match.

Two more outs: capability leaps and sticky partnerships. If a new model delivers step-change results in code synthesis, drug discovery or formal reasoning, the kind regulators and scientists can verify, willingness to pay resets higher. And long-term enterprise deals that bundle compute, models and services can stabilize revenue even as per-token prices fall.

How to interpret the next six months

Watch app metrics, not model leaderboards: retention, time-to-value and task success rates on proprietary data. Track the pipeline of open-weight releases and inference optimizations from research groups and vendors; it will keep pressure on closed-model pricing. And look for evaluation standards from bodies such as NIST and ISO: standardized benchmarks of safety and reliability could become the new battleground.
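A task success rate is simple to compute once you have graded examples from your own data. Below is a minimal Python sketch; the `Task` structure, the pass checks and the stub model are hypothetical, and a real evaluation harness would also log outputs and track results per model version. Running the same task set against two vendors turns a leaderboard question into a question about your own workload.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Task:
    """One evaluation case drawn from your own (proprietary) data."""
    prompt: str
    passes: Callable[[str], bool]  # domain-specific check on the model's output


def task_success_rate(model: Callable[[str], str], tasks: List[Task]) -> float:
    """Fraction of tasks whose model output passes its check."""
    if not tasks:
        raise ValueError("need at least one task")
    successes = sum(1 for task in tasks if task.passes(model(task.prompt)))
    return successes / len(tasks)


if __name__ == "__main__":
    # Hypothetical tasks and a stub model standing in for a real vendor call.
    tasks = [
        Task("Total on invoice 17?", lambda out: "1250" in out),
        Task("Customer tier for account 42?", lambda out: out.strip().lower() == "gold"),
    ]
    stub_model = lambda prompt: "1250" if "invoice" in prompt else "silver"
    print(f"task success rate: {task_success_rate(stub_model, tasks):.0%}")  # → task success rate: 50%
```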

If the center of gravity keeps shifting toward post-training and product-layer excellence, there is no AI winter in sight, just a different set of winners. In that world, the best coffee shops thrive, and the growers who expected to take over realize they were suppliers all along.