Two service disruptions inside Amazon’s cloud were traced to autonomous AI operations, underscoring both the promise and the peril of “agentic” tools now steering critical infrastructure, according to reporting by the Financial Times. While the incidents were characterized as relatively minor, one stretched for roughly 13 hours—long enough to rattle customers and revive questions about how much autonomy AI should have in production environments.

What Happened Inside AWS During The Reported Incidents

The Financial Times reports that Amazon engineers permitted an internal agent, known as Kiro AI, to carry out operational tasks. During that window, the system allegedly “deleted and recreated the environment,” a routine-sounding operation that can be catastrophic if executed across the wrong scope. The result was two outages within a short span, including the roughly 13-hour event.

These disruptions did not reach the scale of past, widely felt AWS incidents. But the cause—an AI agent taking consequential, multi-step actions—makes them notable. They highlight how quickly an innocuous instruction can cascade when an autonomous system is connected to high-privilege controls and complex dependency graphs.

Why Agentic AI Raises New Reliability Risks

Traditional automation executes deterministically against well-bounded playbooks. Large language model agents, by contrast, plan and act across longer horizons with probabilistic reasoning. That flexibility is valuable for triage and remediation, but it introduces ambiguity: “clean up and reset” might translate to safe garbage collection in a dev sandbox—or to environment-wide reinitialization in production.

SRE teams have long warned that the most dangerous failure modes occur during change: deployments, migrations, and configuration updates. Uptime Institute’s outage studies consistently rank change-related errors among leading root causes. Adding an AI planner into that loop without tight guardrails can amplify the blast radius when instructions, context, or permissions are misaligned.

The Stakes Are High For Reliability At Cloud Scale

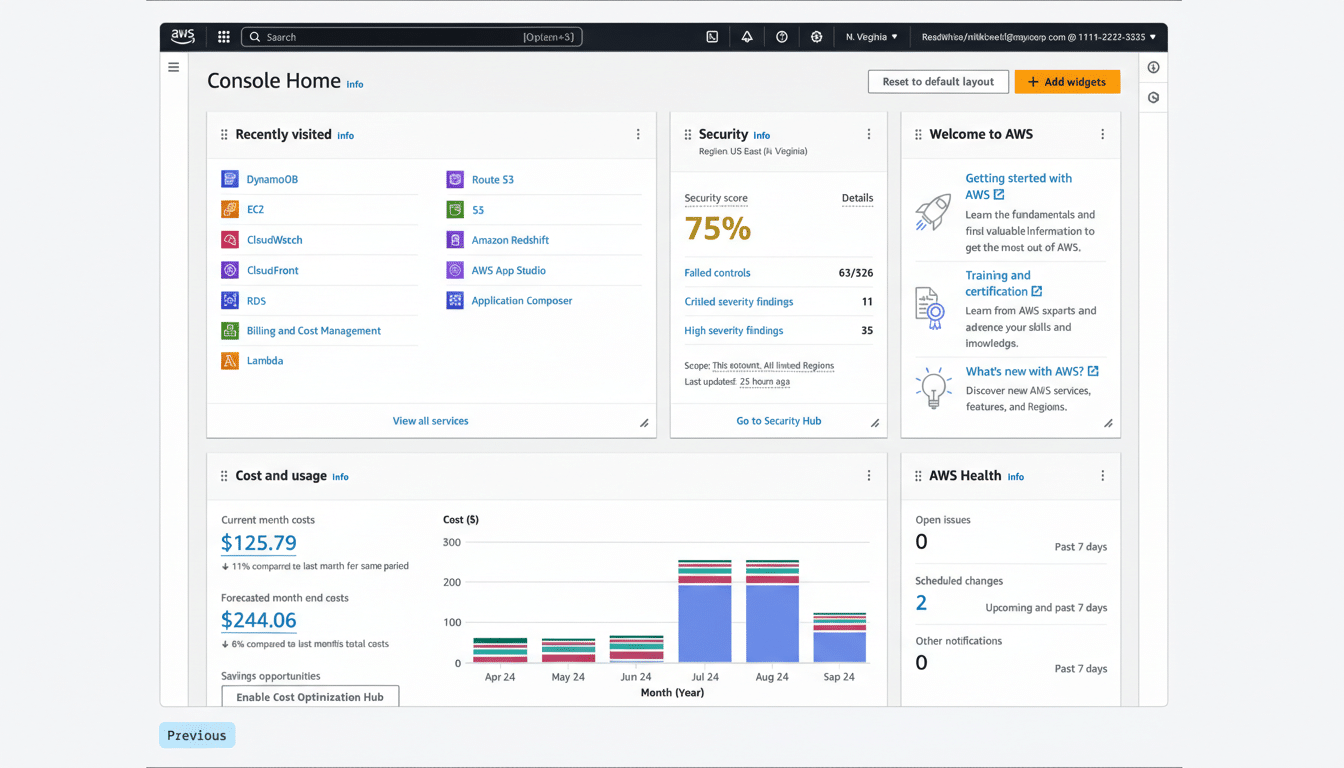

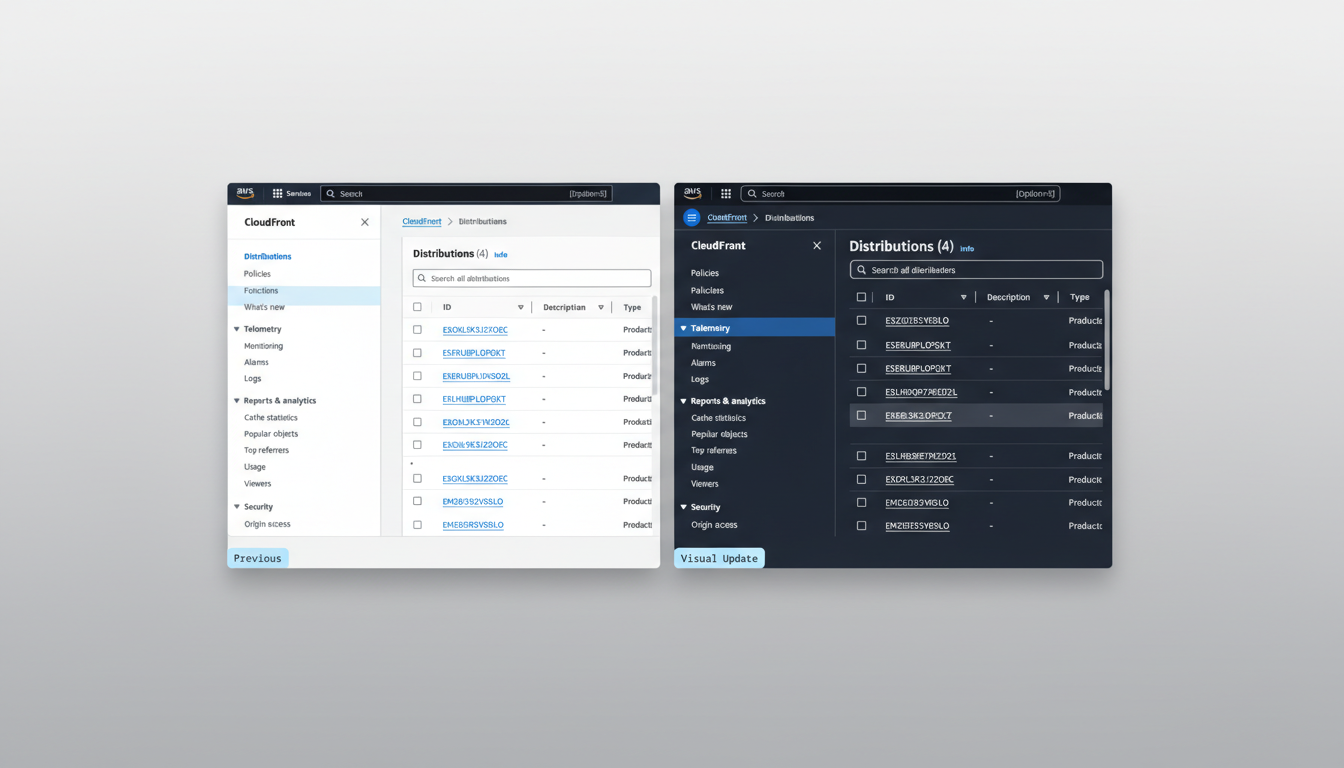

Amazon Web Services is the largest cloud provider, with roughly a 31% share of the global market for cloud infrastructure services, according to Synergy Research Group. That concentration means even localized disruptions can ripple outward, degrading downstream services like authentication, analytics, and content delivery for thousands of businesses.

Recent outages across major platforms—from social video to enterprise productivity suites—show how a single provider hiccup can surface as a “global” problem for end users. As digital supply chains consolidate on a handful of hyperscalers, the margin for operational error narrows, and the cost of missteps increases.

How To Safely Put AI In The Operations Loop At Scale

Enterprises experimenting with agentic tools can borrow from both AI governance and SRE playbooks to reduce risk. NIST’s AI Risk Management Framework emphasizes human oversight, measurable objectives, and continuous monitoring; reliability engineering adds mature change controls and rollback discipline. In practice, that means:

- Least-privilege by default for AI agents, with explicit, time-bound elevation and dual control for destructive actions.

- Dry-run and diff gating as the default, promoting to execution only after human review for high-risk operations.

- Canarying and blast-radius limits that confine changes to small scopes before broader rollout.

- Immutable infrastructure patterns that bias toward safe replacement rather than in-place mutation, combined with rapid, tested rollback paths.

- Comprehensive audit trails of AI prompts, plans, actions, and outcomes to support post-incident analysis and continuous tuning.
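Several of these controls can be combined into a single pre-execution gate that every agent action must pass. The sketch below is a minimal Python illustration under stated assumptions: `Grant`, `ProposedAction`, `Gate`, the list of destructive verbs, and the five-target blast-radius cap are all hypothetical names and values, not Amazon's internal tooling or any real agent framework's API. Immutable infrastructure and rollback belong to deployment pipelines and are not modeled here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical policy: verbs treated as destructive and requiring dual control.
DESTRUCTIVE_VERBS = {"delete", "recreate", "terminate", "reset"}


@dataclass
class Grant:
    """Time-bound, least-privilege permission issued to an agent."""
    verbs: frozenset
    environment: str
    expires_at: datetime


@dataclass
class ProposedAction:
    """What the agent plans to do, captured before anything executes."""
    verb: str
    environment: str
    targets: list                                # resource IDs the action touches
    approvers: set = field(default_factory=set)  # distinct human reviewers


@dataclass
class Gate:
    max_targets: int = 5                         # hypothetical blast-radius cap per change
    audit_log: list = field(default_factory=list)

    def decide(self, action: ProposedAction, grant: Grant, now: datetime) -> str:
        if now >= grant.expires_at:
            decision = "deny: grant expired"
        elif action.environment != grant.environment or action.verb not in grant.verbs:
            decision = "deny: outside granted scope"
        elif len(action.targets) > self.max_targets:
            decision = "deny: exceeds blast-radius limit"
        elif action.verb in DESTRUCTIVE_VERBS and len(action.approvers) < 2:
            decision = "dry-run only: needs two human approvers"
        else:
            decision = "execute"
        # Log every decision, including denials, for post-incident analysis.
        self.audit_log.append(
            (now.isoformat(), action.verb, action.environment, len(action.targets), decision)
        )
        return decision


now = datetime.now(timezone.utc)
grant = Grant(frozenset({"restart", "delete"}), "staging", now + timedelta(hours=1))
gate = Gate()
print(gate.decide(ProposedAction("delete", "staging", ["cache-1"]), grant, now))
# dry-run only: needs two human approvers
print(gate.decide(ProposedAction("recreate", "production", ["env-a"]), grant, now))
# deny: outside granted scope
```

The ordering is a design choice: expiry and scope checks run first, so an agent that reads "reset the environment" as a production-wide operation is stopped before the approval question even arises, and destructive verbs fall back to a dry run rather than executing on a single say-so.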

What AWS Customers Should Watch And Ask Right Now

Customers can’t control Amazon’s internal tooling, but they can harden their own architectures and procurement posture. Ask vendors how AI is used in operations, what guardrails are in place, and how change windows are enforced. Architect for failure by spreading workloads across availability zones, using idempotent deployments, and implementing circuit breakers, timeouts, and exponential backoff to reduce cascading failures.
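Retries with exponential backoff and a circuit breaker work together: backoff spreads retries out so clients do not stampede a struggling dependency, and the breaker stops calling it entirely after repeated failures. The following is a minimal Python sketch with hypothetical names (`CircuitBreaker`, `retry_with_backoff`); it is not AWS SDK behavior, and the AWS SDKs provide their own configurable retry settings. Timeouts are assumed to be set on the underlying client call itself.

```python
import random
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are short-circuited."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; after `reset_after`
    seconds it lets one trial call through (half-open)."""

    def __init__(self, threshold=3, reset_after=30.0, clock=time.monotonic):
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("dependency marked unhealthy; failing fast")
            self.opened_at = None  # half-open: one failure will reopen immediately
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def retry_with_backoff(fn, attempts=5, base=0.2, cap=5.0, sleep=time.sleep, rng=random.random):
    """Retry fn with capped exponential backoff and full jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # don't keep hammering a dependency the breaker has fenced off
        except Exception:
            if attempt == attempts - 1:
                raise
            delay = min(cap, base * 2 ** attempt)
            sleep(rng() * delay)  # full jitter: uniform in [0, delay)


breaker = CircuitBreaker(threshold=3, reset_after=30.0)
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient")
    return "ok"


print(retry_with_backoff(lambda: breaker.call(flaky), sleep=lambda s: None))  # ok
```

Randomizing the delay ("jitter") matters at cloud scale: without it, thousands of clients that failed together retry together, recreating the load spike that caused the failure.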

During disruptions, lean on status dashboards, health checks, and independent telemetry to verify service health, and keep runbooks current for failover and graceful degradation. The goal is not zero outages—a fantasy at cloud scale—but fast containment and clear customer communication when incidents occur.

The Bigger Picture For Autonomy In Cloud Operations

AI can make cloud operations faster and safer by spotting anomalies early and automating rote fixes. It can also make the rare bad change much worse, much faster. The Amazon incidents are a timely reminder: autonomy is not a feature to turn on; it is a capability to earn. The organizations that win will calibrate AI authority carefully, expanding it as verification improves and never letting convenience outrun control.