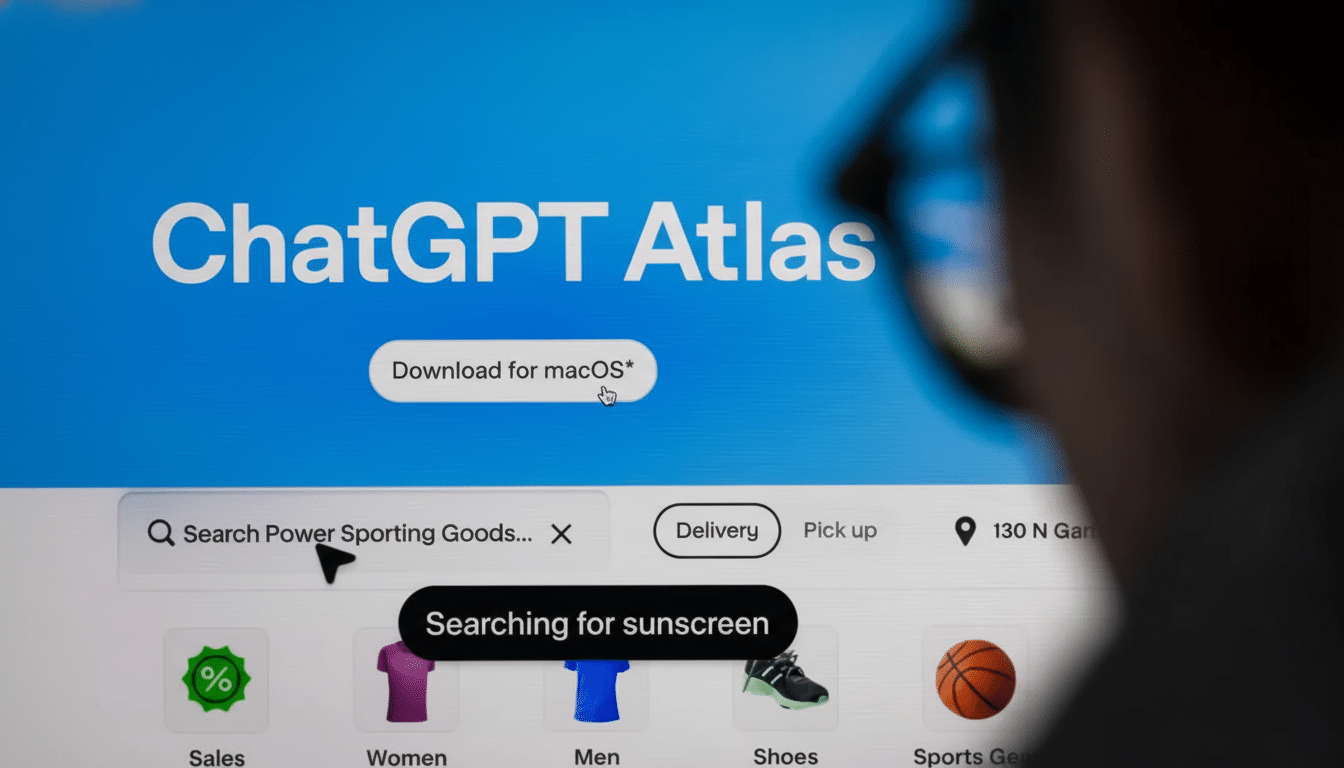

Some next-generation AI browsers are getting past paywalls meant to protect subscriber-only journalism, according to a recent analysis by the Columbia Journalism Review. The publication reported that OpenAI’s Atlas and Perplexity’s Comet could retrieve full-text articles from certain outlets that block traditional crawlers, raising immediate questions about permission, compensation, and the long-term economics of the digital news industry.

How AI browsers slip through paywalls

The review describes a striking test: both Atlas and Comet successfully retrieved a roughly 9,000-word subscriber-only feature from MIT Technology Review. The same request made through the ChatGPT application failed, because the publisher uses a conventional method to protect its material: the Robots Exclusion Protocol, which blocked OpenAI’s crawler.

According to experts, the discrepancy reflects what happens when an AI browser acts as an agent for its user: it navigates pages the way a human does, which makes it harder to identify. Traditional defenses rely on recognizing crawlers and on those crawlers obeying robots.txt, which is voluntary. AI browsers, on the other hand, load webpages, click on links, and read text in real time.
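To make the voluntary nature of robots.txt concrete, here is a minimal sketch using Python's standard `urllib.robotparser`. The rules and URL are invented for illustration, and `GPTBot` stands in for a declared AI crawler token; none of this is taken from the CJR test itself.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration: block one declared AI crawler,
# allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url.

    This is the check a *well-behaved* crawler runs on itself before
    requesting a page. Nothing on the server enforces the answer.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/subscriber-feature"  # placeholder URL
    print(is_allowed("GPTBot", url))       # declared crawler: disallowed
    print(is_allowed("Mozilla/5.0", url))  # browser-like agent: no rule matches
```

A client that presents a browser-like user agent, or never consults robots.txt at all, is simply not covered by these rules, which is the gap the experts describe.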

Many websites treat these agents as an ordinary user session. This is a significant problem for client-side paywalls, which hide text behind scripts or overlays while still delivering the full content in the HTML. A person cannot read the article without signing in, but the machine generally can.
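A publisher can audit for this weakness by checking whether the article body is present in the raw HTML that an unauthenticated request receives. The sketch below uses Python's standard `html.parser`; the page markup, the `paywall-overlay` id, and the assumption that the body lives in an `<article>` element are all hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical response served to a visitor who is NOT signed in.
# The overlay hides the article visually, but the text is still in the payload.
PAGE = """<html><body>
<div id="paywall-overlay">Subscribe to continue reading</div>
<article style="display:none"><p>Full subscriber-only text.</p></article>
</body></html>"""


class ArticleText(HTMLParser):
    """Collect text found inside <article>, ignoring CSS and scripts."""

    def __init__(self):
        super().__init__()
        self.in_article = False
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "article":
            self.in_article = True

    def handle_endtag(self, tag):
        if tag == "article":
            self.in_article = False

    def handle_data(self, data):
        if self.in_article and data.strip():
            self.chunks.append(data.strip())


def leaked_article_text(html: str) -> str:
    """Return whatever article text is present in the raw HTML."""
    parser = ArticleText()
    parser.feed(html)
    return " ".join(parser.chunks)


if __name__ == "__main__":
    print(leaked_article_text(PAGE))
```

If this returns the protected text for an anonymous request, any client that reads the HTML directly, including an AI browser, can read it too.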

Rising pressure on soft paywalls and bot defenses

Security researchers note that bot management tools analyze signals such as IP reputation, browser fingerprints, and behavioral cues to distinguish people from automation. However, AI-powered browsing agents are rapidly getting better at mirroring human patterns. That creates a challenge for publishers accustomed to using metered access and soft paywalls to balance reach and revenue. The stakes for newsrooms are stark: subscription revenue is a vital pillar for many outlets because ad revenue is less steady.

The Reuters Institute’s 2024 Digital News Report found that, across surveyed markets, about 17% of respondents pay for their news online. Conversions are hard won, and subscriptions directly fund investigative and beat coverage. If AI tools can routinely access paywalled text, the harm is not just leakage; it could undermine the business model itself.

This worry coincides with a broader digital rights debate. Numerous publishers have raised questions about how AI companies gather training data and generate summaries from it, reducing clicks. Some have signed licensing agreements with AI companies; for example, OpenAI has signed content licensing deals with organizations such as the Associated Press and Axel Springer. But many newsrooms still rely on access controls rather than negotiation. The situation is complicated further by legal grey areas. Depending on the jurisdiction, terms of service often prohibit scraping, while circumventing access controls can invite legal action, for example under anti-circumvention or computer misuse laws. Even without court disputes, the reputational cost for AI vendors that overstep in siphoning paid content could be large.

Technical defenses publishers can deploy against AI browsing

Technical hardening is the first line of defense. Security teams are moving more aggressively from client-side to server-side paywalls so that protected text is never sent to the browser without proper authorization.
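The core idea of a server-side paywall can be sketched in a few lines of Python: the entitlement check happens before the response is built, so an unentitled client only ever receives a teaser. The `Article` type, `build_response` function, and `teaser_chars` parameter are hypothetical names for illustration, not any particular CMS's API.

```python
from dataclasses import dataclass


@dataclass
class Article:
    title: str
    body: str
    subscribers_only: bool = True


def build_response(article: Article, is_entitled: bool, teaser_chars: int = 120) -> dict:
    """Build the payload sent to the client.

    The full body is included only when the caller is entitled. For everyone
    else the text is truncated on the server, so there is nothing hidden in
    the HTML for a script or agent to uncover.
    """
    if article.subscribers_only and not is_entitled:
        return {
            "title": article.title,
            "body": article.body[:teaser_chars],
            "truncated": True,
        }
    return {"title": article.title, "body": article.body, "truncated": False}


if __name__ == "__main__":
    feature = Article(title="Example feature", body="Lorem ipsum " * 100)
    print(build_response(feature, is_entitled=False)["truncated"])
    print(len(build_response(feature, is_entitled=True)["body"]))
```

How `is_entitled` is determined (session lookup, signed token, and so on) is deliberately left out of this sketch.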

- Adopt advanced bot mitigation from providers like Cloudflare and Akamai, using device fingerprinting, behavioral analysis, and challenge flows to reduce automated access that disguises itself as human traffic.

- Harden robots policies by banning declared AI user agents and enforcing the bans with firewall rules and rate limits.

- Use tokenized entitlements and short-lived session keys to prevent replay and unauthorized session reuse.

- Pursue licensing and API agreements that let AI systems reference coverage legally while preserving subscriber value through restrictions on full-text usage, delayed access, or mandatory attribution and linkbacks.
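As one illustration of the tokenized-entitlement item in the list above, the following sketch issues a short-lived, HMAC-signed token bound to a user and an article, using only the Python standard library. The token layout, the `SECRET_KEY` placeholder, and the function names are assumptions made for this example; it also assumes user and article ids never contain the `|` separator.

```python
import hashlib
import hmac
import time

# Hypothetical placeholder; a real deployment would load a secret from
# secure configuration and rotate it.
SECRET_KEY = b"replace-with-a-real-secret"


def _sign(payload: str) -> str:
    return hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(user_id: str, article_id: str, ttl_seconds: int = 300, now=None) -> str:
    """Return a token of the form user|article|expiry|signature."""
    now = time.time() if now is None else now
    expires = int(now) + ttl_seconds
    payload = f"{user_id}|{article_id}|{expires}"
    return f"{payload}|{_sign(payload)}"


def verify_token(token: str, article_id: str, now=None) -> bool:
    """Accept the token only if it is untampered, unexpired, and for this article."""
    now = time.time() if now is None else now
    try:
        user_id, token_article, expires, signature = token.split("|")
        expires_at = int(expires)
    except ValueError:
        return False  # wrong number of fields or non-numeric expiry
    expected = _sign(f"{user_id}|{token_article}|{expires}")
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    return token_article == article_id and now < expires_at


if __name__ == "__main__":
    token = issue_token("user-1", "article-42", ttl_seconds=60)
    print(verify_token(token, "article-42"))
    print(verify_token(token, "article-99"))
```

A short expiry narrows the window in which a captured token can be replayed; it does not by itself prevent replay inside that window, which would need additional state such as one-time nonces.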

There is no silver bullet; as AI browsing agents evolve, the defense may increasingly rest on a series of layered controls, continuous monitoring, and clearer contractual frameworks that decide what machines can read and when they pay for the privilege.
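A rough sketch of what two stacked layers might look like: a denylist of declared AI user agents followed by a per-client sliding-window rate limit. The agent tokens listed are examples only, and `RequestGate`, its limits, and its return strings are hypothetical. Production bot management relies on far richer signals than this.

```python
from collections import defaultdict, deque

# Example tokens for crawlers that declare themselves; an assumption for
# illustration, not a complete or authoritative list.
DECLARED_AI_AGENTS = ("gptbot", "perplexitybot", "ccbot")


class RequestGate:
    """Two simple layers: declared-agent denylist, then a rate limit per IP."""

    def __init__(self, max_requests: int = 30, window_seconds: float = 60.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.history = defaultdict(deque)  # client_ip -> recent request times

    def check(self, client_ip: str, user_agent: str, now: float) -> str:
        # Layer 1: refuse agents that openly identify as AI crawlers.
        ua = user_agent.lower()
        if any(token in ua for token in DECLARED_AI_AGENTS):
            return "deny:declared-ai-agent"

        # Layer 2: sliding-window rate limit for everything else.
        window = self.history[client_ip]
        while window and now - window[0] >= self.window_seconds:
            window.popleft()  # drop requests that fell out of the window
        if len(window) >= self.max_requests:
            return "deny:rate-limit"
        window.append(now)
        return "allow"


if __name__ == "__main__":
    gate = RequestGate(max_requests=2, window_seconds=10)
    print(gate.check("203.0.113.5", "GPTBot/1.0", now=0))
    for t in (0, 1, 2):
        print(gate.check("203.0.113.9", "Mozilla/5.0", now=t))
```

Neither layer catches an agent that browses slowly with an ordinary browser user agent, which is why these checks complement server-side entitlement enforcement and cannot replace it.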

The stakes for AI companies as web browsing features grow

For AI vendors, the ability to browse the live web is enticing: it brings richer answers and fresh context. But trust is becoming nearly as important as capability. Vendors whose agents transparently comply with publishers’ policies, respect opt-outs, and identify themselves with clear signals will be the ones whose tools are bought and recommended.

CJR’s wake-up call for ethics, access, and paywall limits

The emerging consensus is that generative experiences cannot be built on ambiguous access. As AI browsers such as Atlas, Comet, and even integrated models in established browsers mature, their future is likely to depend on the terms and technology that ensure no one gets past a paywall for free. The CJR findings should be a wake-up call: without firmer ethical and technical limits, the growing use of AI-guided browsing is a persistent threat to the economics of subscription-based reporting.