For years, cloud storage has felt like a treadmill: upgrade a plan, buy an external drive, clear out some accounts, and repeat. The arrival of 100TB consumer cloud plans upends that dynamic. It’s enough capacity for many users to stop fretting about space entirely, and to build a long-term strategy around how we actually create and retain data today.

With high-resolution photos, 4K video and shared workflows becoming common, “oversizing” storage has shifted from luxury to common sense. The question is no longer whether you’ll fill terabytes, but when, and how much time (and money) you’ll spend wrestling with that growth.

Why 100TB Alters the Math for Everyday Data Needs

Think about scale. One hour of 4K video at 100 Mbps occupies about 45 GB, so a 100 TB pool can store over 2,200 hours of that footage. Shooting RAW images with a 45 MP camera? At about 50 MB per image, you have room for around 2 million photos. Even heavy creative archives (feature-length edits, drone footage, multi-year design libraries) fit with room to spare.
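The figures above can be reproduced with a few lines of arithmetic. This is a minimal sketch that assumes decimal units (1 TB = 1,000 GB) and a constant video bitrate; the function and constant names are illustrative, not taken from any provider's tooling.

```python
# Back-of-the-envelope capacity math, using decimal units:
# 1 TB = 1,000 GB = 1,000,000 MB.
POOL_TB = 100
VIDEO_BITRATE_MBPS = 100  # megabits per second, as quoted in the text
RAW_PHOTO_MB = 50         # megabytes per RAW image, as quoted in the text


def gb_per_hour(bitrate_mbps: float) -> float:
    """Gigabytes produced by one hour of video at a constant bitrate."""
    megabits = bitrate_mbps * 3600   # megabits in one hour
    return megabits / 8 / 1000       # megabits -> megabytes -> gigabytes


def hours_of_video(pool_tb: float, bitrate_mbps: float) -> float:
    """Hours of footage that fit in a pool of the given size."""
    return pool_tb * 1000 / gb_per_hour(bitrate_mbps)


def photo_count(pool_tb: int, photo_mb: int) -> int:
    """Whole photos of a given size that fit in the pool."""
    return pool_tb * 1_000_000 // photo_mb


print(f"{gb_per_hour(VIDEO_BITRATE_MBPS):.0f} GB per hour of video")
print(f"{hours_of_video(POOL_TB, VIDEO_BITRATE_MBPS):,.0f} hours of video")
print(f"{photo_count(POOL_TB, RAW_PHOTO_MB):,} photos")
```

Real cameras use variable bitrates and RAW sizes vary by scene, so treat these as order-of-magnitude estimates.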

With this much capacity, the small, constant micro-decisions (move this folder? buy a new drive? upgrade to a higher subscription tier?) stop draining time and attention.

For small teams and serious hobbyists, it also unlocks proper versioning and long-term retention without the worry of hitting a ceiling.

Market Context and Costs for Consumer Cloud Plans

Big-name consumer clouds still top out nowhere near 100 TB. Apple’s iCloud+ maxes out at 12 TB, Microsoft’s OneDrive family plan offers the equivalent of 6 TB shared across accounts, and Google One has tiers up to 30 TB. At 100 TB, you are closer to light enterprise territory, where the reference points are services like Amazon S3 rather than consumer bundles.

For some perspective, storing 100 TB on Amazon S3 Standard would cost around $2,250 per month at published storage rates (not including egress or API costs).
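The monthly figure above can be checked with a tiered-pricing calculation. The sketch below assumes S3 Standard storage rates of $0.023 per GB-month for the first 50 TB, $0.022 for the next 450 TB, and $0.021 beyond that, with decimal units; these rates are assumptions for illustration and should be verified against the current published price list for your region.

```python
# Assumed S3 Standard storage tiers in USD per GB-month.
# Each entry is (tier size in GB, rate). Verify against current pricing.
TIERS = [
    (50_000, 0.023),        # first 50 TB
    (450_000, 0.022),       # next 450 TB
    (float("inf"), 0.021),  # everything beyond 500 TB
]


def monthly_storage_cost(total_gb: float) -> float:
    """Storage-only monthly cost; excludes egress and request charges."""
    remaining = total_gb
    cost = 0.0
    for tier_size_gb, rate in TIERS:
        used = min(remaining, tier_size_gb)
        cost += used * rate
        remaining -= used
        if remaining <= 0:
            break
    return cost


print(f"${monthly_storage_cost(100_000):,.2f} per month for 100 TB")
```

Over a year that comes to roughly twelve times the monthly figure, which is the baseline a fixed-fee consumer offer is implicitly being compared against.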

Consumer-friendly 100 TB plans, particularly those promoted as one-time or fixed-fee deals, undercut enterprise pricing — but buyers would do well to scrutinize the fine print on transfer limits, fair-use policies, and just what “lifetime” actually means (often the service life of the product, not yours).

Security, Privacy, and Durability Requirements Explained

Capacity means little without trust. Cloud assurance rests on three pillars: strong encryption, transparent governance, and durable infrastructure. Zero-knowledge end-to-end encryption means you control the keys to your data, which limits insider risk and third-party access. Open-source clients, independent audits, and GDPR-compliant contracting provide measurable transparency for privacy-sensitive users.

For durability, hyperscale benchmarks set the expectation: Amazon S3 is designed for 99.999999999% object durability, storing redundant copies across multiple Availability Zones within a region. Consumer providers may not publish comparable numbers, though many build on robust backends. Ask how data is sharded, where it physically lives, and how the provider verifies integrity over time. Now that NIST is standardizing post-quantum cryptography, vendors promoting “quantum-safe” capabilities should clearly state which algorithms they use and how keys are handled.
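To put the eleven-nines durability figure in concrete terms, the sketch below treats it as an independent annual loss probability per object. That is a simplifying assumption made here for illustration, not a description of how any provider models failures.

```python
# 99.999999999% durability read as an annual per-object loss probability.
# Written directly as 1e-11 to avoid floating-point error from 1 - 0.99999999999.
ANNUAL_LOSS_PROBABILITY = 1e-11


def expected_annual_losses(object_count: int) -> float:
    """Expected number of objects lost per year under the assumption above."""
    return object_count * ANNUAL_LOSS_PROBABILITY


def years_per_expected_loss(object_count: int) -> float:
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(object_count)


# Example: a library of 10 million stored objects.
print(f"{years_per_expected_loss(10_000_000):,.0f} years per expected loss")
```

A provider that publishes no durability figure gives you nothing to plug into this kind of estimate, which is one reason to ask for it.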

What 100TB Can Actually Do for Creators and Teams

Creative professionals can keep full-resolution originals of their work, not just the low-res copies sent to clients for selections and approvals. Small businesses can consolidate shared resources, logs, and audit records without having to switch between multiple accounts. Households can back up their device fleets (phones, laptops, and NAS snapshots) in full, with space to spare for years of 4K family video.

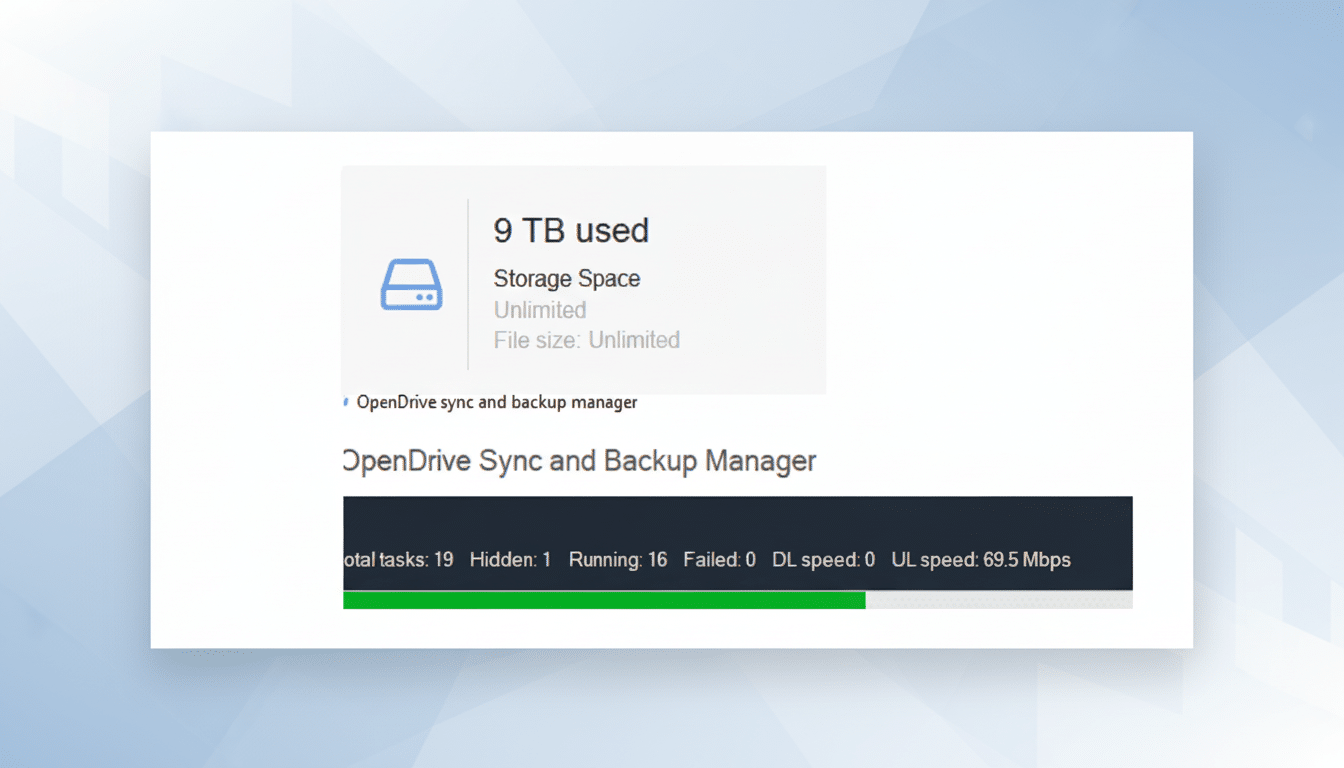

One practical caveat: bandwidth. Uploading 100 TB over a 100 Mbps connection takes approximately 93 days of continuous transfer. Most homes won’t push that much data at once, but the figure shows why incremental sync, bandwidth scheduling, and (for power users) seeding options matter. Enterprise services offer physical transfer devices such as AWS Snowball or Backblaze B2 Fireball; consumer services generally don’t. Check whether uploads are multithreaded, whether delta sync is supported, and whether the provider has servers in your region for faster speeds.
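The upload estimate above follows directly from size divided by link speed. Here is a minimal sketch, assuming decimal units and a hypothetical `utilization` parameter for the fraction of the link actually available to the upload.

```python
def upload_days(size_tb: float, link_mbps: float, utilization: float = 1.0) -> float:
    """Days needed to upload size_tb over a link of link_mbps.

    utilization is the fraction of the link the upload can actually use
    (1.0 means the link is saturated around the clock).
    """
    bits = size_tb * 1e12 * 8                          # terabytes -> bits
    seconds = bits / (link_mbps * 1e6 * utilization)   # bits / (bits per second)
    return seconds / 86_400                            # seconds -> days


print(f"{upload_days(100, 100):.1f} days at 100 Mbps")
print(f"{upload_days(100, 1000):.1f} days at 1 Gbps")
print(f"{upload_days(100, 100, utilization=0.5):.1f} days at 100 Mbps, half utilized")
```

Many home connections have upload speeds well below their advertised download speeds, so the upstream rate is the number to use here.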

The 3-2-1 Rule Is Still Relevant for Safer Backups

Having 100 TB under one roof doesn’t replace the backup basics. The well-worn 3-2-1 rule (three copies of your data, on two different media types, with one copy offsite) is still a best practice widely echoed across the security community, including in CISA’s recommendations. For disasters and hardware failures, a large cloud vault can serve as your offsite copy. Keep a snapshot of the crown jewels locally or in a second cloud to reduce single-provider risk.
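The 3-2-1 rule is simple enough to express as a check over a list of copies. The sketch below is illustrative only; the `Copy` type and its fields are hypothetical names introduced here, and the media labels are free-form strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    label: str     # human-readable name for this copy
    media: str     # media type, e.g. "internal-ssd", "nas", "cloud"
    offsite: bool  # True if stored away from the primary location


def satisfies_3_2_1(copies: list[Copy]) -> bool:
    """True if there are 3+ copies, on 2+ media types, with 1+ offsite."""
    return (
        len(copies) >= 3
        and len({c.media for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


plan = [
    Copy("laptop", "internal-ssd", offsite=False),
    Copy("home NAS snapshot", "nas", offsite=False),
    Copy("cloud vault", "cloud", offsite=True),
]
print(satisfies_3_2_1(plan))
```

Dropping the NAS snapshot from this plan would leave two copies and fail the check, even though one of them is offsite.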

A Buffer for the Data Deluge That Is to Come

The world is not taking its foot off the accelerator for data production. IDC forecasts the Global DataSphere will explode into the hundreds of zettabytes this decade thanks to growth in AI workflows, high-res imaging, and IoT. For people and small companies, 100 TB is a sensible hedge against that rising tide: enough capacity to record freely, organize without pressure, and stop treating every storage shortfall as an emergency.

Bottom line: 100 TB of cloud storage is not just a lot of headroom; it changes the way you work. If the provider backs it with zero-knowledge security, clear sustainability, and robust performance, it is most users’ first chance to forget about limits and get on with filling the space.