Phishing awareness training is a multimillion-dollar industry, yet employees keep clicking.

This is not a moral failure on the part of employees, nor is it proof that education cannot work. It is a sign that training as currently delivered maps poorly to how real-world attacks unfold and how people make decisions under pressure.

What New Research Reveals About Training and Click Rates

Researchers at UC San Diego Health and Censys analyzed 10 phishing campaigns sent to over 19,500 employees during an eight-month period and found no significant difference in click rates between those who had recently completed required training and those who had not. The observed difference was on the order of 2%, which is practically negligible.

The topic and timing of a message mattered more than training. “Update your Outlook password” was a weak lure, while “employer vacation policy update” hooked more than 30% of recipients. Failure rates also rose in later campaigns, from approximately 10% early on to more than half by month eight, which may indicate fatigue, habituation, or normalization of higher-risk prompts.

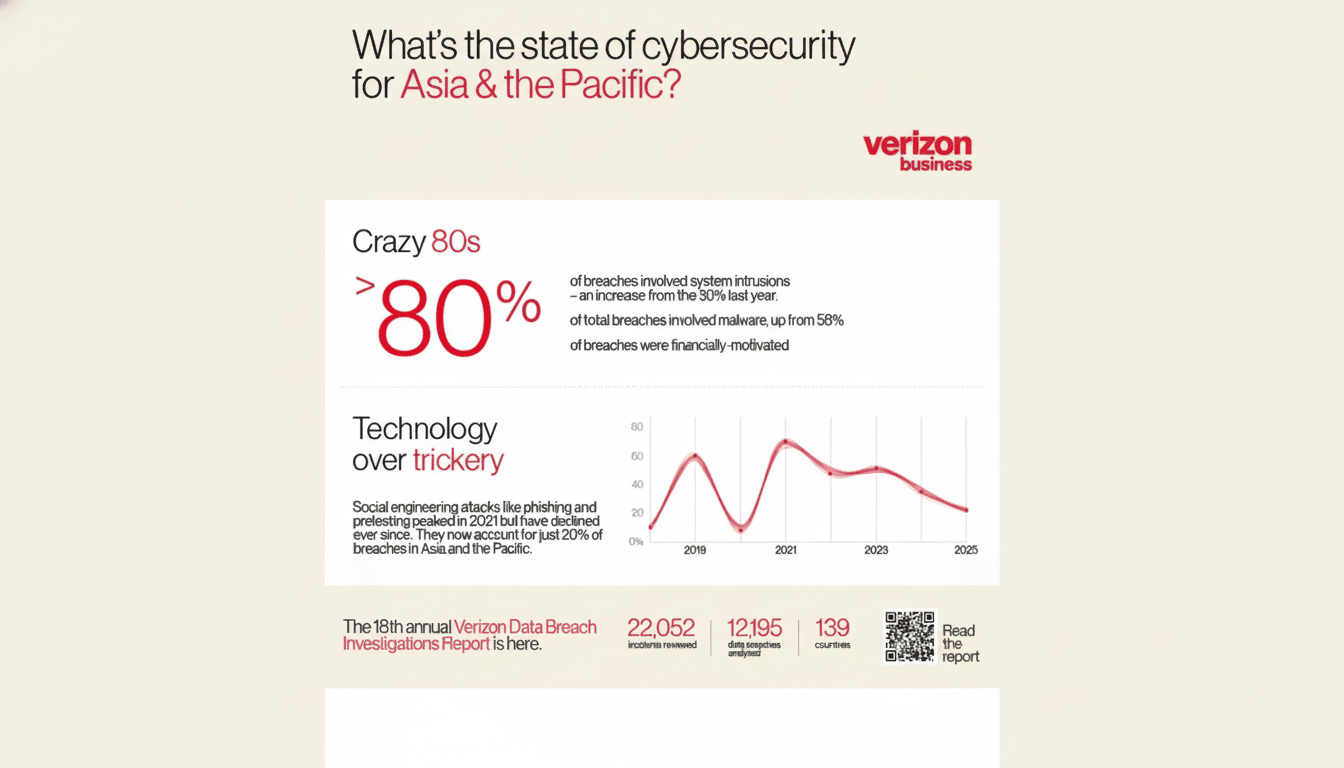

This is consistent with broader industry data. The most recent SpyCloud Identity Threat Report says that phishing continues to be a major avenue for ransomware, with about 35% of affected organizations naming it as the primary entry point, compared to just under a quarter the previous year. Verizon’s Data Breach Investigations Report still finds that the “human element” contributes to most breaches.

Why People Still Click on Phishing Messages and Links

Attackers time lures for moments of peak cognitive load: end of day, on mobile, between meetings. Cues that convey urgency, authority, or personal relevance override slow, deliberate thinking and engage what behavioral economists call System 1: fast, heuristic, and biased toward action.

On a phone, small screens cut off senders and URLs. Single sign-on pages are easy to imitate, since a convincing fake is little more than a snapshot of the real one. MFA is far from a magic bullet; attackers regularly use techniques such as reverse proxies and push-fatigue attacks to collect session tokens or trigger approvals. Generative AI makes pretexts more fluent and customized, and multiplies the number of cues that employees need to learn to detect.

Real-world incidents reinforce this. A brazen attack on a top hospitality chain did not hinge on a textbook phishing email, but on a phone-based social engineering play against IT support. The breach of a major identity provider originated in a contractor’s stolen credentials. In both situations, experienced workers followed familiar procedures under stress, and training made little difference at critical junctures.

Why Conventional Training Misses the Moment

Many annual modules are designed for completion, not behavioral change. Researchers have documented vanishingly low engagement, typically less than a minute of attention. Simulated phishing exercises can train pattern recognition, but attackers mutate faster than instructional catalogs. Worse, punitive simulations damage trust and lead to underreporting.

The central flaw is timing. Most programs teach in January for attacks that land in June. Risk lives in the workflow (procurement approvals, HR policy updates, benefits changes), not in the abstract. If guidance is not built into the decision point, memory fades and habit prevails.

What Actually Reduces Risk from Phishing Attacks

Focus on controls that make the safe path the easy path. NIST and CISA advise organizations to use phishing-resistant MFA such as FIDO2 security keys or passkeys, rather than codes and push approvals that are susceptible to interception and fatigue attacks.

Harden identity and email. Enforce device- and location-based conditional access, disable legacy authentication, and reduce standing privileges. In email, move from awareness to enforcement: implement and validate DMARC with a policy of quarantine or reject, verify sender identity, and employ modern secure email gateways or API-based inspection that can detonate links and attachments in the cloud.
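As a rough illustration of the DMARC point, the sketch below checks whether a published DMARC TXT record actually enforces quarantine or reject. It assumes the record text has already been retrieved from DNS; the function names and the example.com addresses are hypothetical, and treating a `pct` below 100 as "not enforcing" is a simplifying assumption rather than a rule from the standard.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split a DMARC TXT record into a tag -> value mapping."""
    tags = {}
    for part in record.split(";"):
        if "=" not in part:
            continue  # skip empty or malformed fragments
        key, _, value = part.partition("=")
        tags[key.strip().lower()] = value.strip()
    return tags


def is_enforcing(record: str) -> bool:
    """True if the record is DMARC and applies quarantine/reject to all mail."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        return False  # not a DMARC record at all
    try:
        # pct defaults to 100 when the tag is absent
        pct = int(tags.get("pct", "100"))
    except ValueError:
        return False
    # Assumption for this sketch: partial rollout (pct < 100) counts as not enforcing.
    return tags.get("p", "").lower() in {"quarantine", "reject"} and pct == 100


print(is_enforcing("v=DMARC1; p=reject; rua=mailto:dmarc@example.com"))
print(is_enforcing("v=DMARC1; p=none"))
```

A record with `p=none` only monitors, so a check like this can flag domains that publish DMARC without enforcing it.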

Add friction at click time. Browser-integrated link reputation, just-in-time interstitial warnings on credential pages, and domain allowlists for SSO portals interrupt the reflex to click. Autofill credentials only on verified origins. Strip tracking parameters and block redirects that hide the target URL.
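Two of these click-time checks are simple enough to sketch with Python's standard `urllib.parse`. This is a minimal example under stated assumptions: the allowlisted origin `login.example.com` and the list of tracking parameters are hypothetical placeholders, and a real deployment would live in a browser extension, proxy, or password manager rather than a standalone script.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical allowlist of (scheme, hostname) pairs where credentials may be entered.
SSO_ALLOWLIST = {("https", "login.example.com")}

# Illustrative, non-exhaustive tracking parameters.
TRACKING_PREFIXES = ("utm_",)
TRACKING_NAMES = {"fbclid", "gclid", "mc_eid"}


def is_trusted_sso_origin(url: str) -> bool:
    """True only for an exact scheme and hostname match on the default HTTPS port."""
    parts = urlsplit(url)
    try:
        port = parts.port
    except ValueError:
        return False  # malformed port
    host = (parts.hostname or "").lower()
    return (parts.scheme, host) in SSO_ALLOWLIST and port in (None, 443)


def strip_tracking(url: str) -> str:
    """Return the URL with known tracking parameters removed from the query."""
    parts = urlsplit(url)
    kept = [
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k.lower() not in TRACKING_NAMES
        and not k.lower().startswith(TRACKING_PREFIXES)
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


# Lookalike hosts and userinfo tricks fail the exact-match check.
print(is_trusted_sso_origin("https://login.example.com.evil.test/auth"))
print(is_trusted_sso_origin("https://login.example.com@evil.test/auth"))
print(strip_tracking("https://example.com/a?utm_source=x&id=7&fbclid=abc"))
```

Matching on the parsed hostname rather than on a substring of the URL is the design choice that matters here, because lookalike domains often embed the legitimate name.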

Engineer for fast analysis and response. One-click report buttons that open tickets and auto-quarantine messages accelerate response. Track time to report and time to contain as core KPIs. Restrict token lifetimes, monitor for abnormal OAuth consent, and use step-up authentication for sensitive tasks.
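The two KPIs named above can be computed from three timestamps per incident. The sketch below is one possible definition, not a standard: it assumes time to report runs from delivery to the first user report, and time to contain runs from that report to containment. The `Incident` fields and the sample timestamps are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Incident:
    delivered: datetime  # message landed in the inbox
    reported: datetime   # first user report
    contained: datetime  # message quarantined or sessions revoked


def median_minutes(deltas) -> float:
    """Median of a series of timedeltas, expressed in minutes."""
    return median(d.total_seconds() / 60 for d in deltas)


def kpis(incidents: list[Incident]) -> dict[str, float]:
    return {
        "time_to_report_min": median_minutes(i.reported - i.delivered for i in incidents),
        "time_to_contain_min": median_minutes(i.contained - i.reported for i in incidents),
    }


# Made-up sample data for illustration only.
t0 = datetime(2024, 1, 1, 9, 0)
sample = [
    Incident(t0, t0 + timedelta(minutes=5), t0 + timedelta(minutes=15)),
    Incident(t0, t0 + timedelta(minutes=20), t0 + timedelta(minutes=50)),
    Incident(t0, t0 + timedelta(minutes=10), t0 + timedelta(minutes=25)),
]
print(kpis(sample))
```

The median is used instead of the mean so that a single slow incident does not dominate the trend.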

If you do train, make it situational. Brief, in-flow nudges tied to high-risk processes beat long lectures. Tabletop exercises for executives and help desks build muscle memory for when to escalate and verify: call-back procedures, second-channel checks, and “pause points” before approvals.

Rethinking Security Metrics and Organizational Culture

Clicks are a crude metric. Organizations that drive click rates to near zero often do so by making employees fearful and robotic, which leaves people disempowered and still does not protect against novel lures. Better measures are report rates, tolerance for false-positive reports, coverage of phishing-resistant MFA, and how quickly suspicious access is contained.

Most importantly, shift the narrative. Treat employees as sensors, not liabilities. Even when someone does click, reward quick reporting. If staff believe honesty brings help, not punishment, you receive earlier signals and fewer incidents.

The bottom line: relying only on showing people what phishing looks like is not worth very much. Bring controls closer to the moment of risk, upgrade your authentication, and create a culture that prizes swift reporting over perfect judgment. That’s how to turn unavoidable clicks into manageable events.