The biggest players in AI are spending on ever-larger clusters of GPUs, betting that brute-force scale will produce general intelligence. Sara Hooker, an alumna of Google Brain and former vice president for AI research at Cohere, is making the opposite bet. With a new company called Adaption Labs, she says the next great leap won’t come from even more GPUs but from AI systems that continuously adapt to the world.

The limits of AI scaling and evidence of diminishing returns

For years, scaling laws suggested that gains would keep coming as long as models got bigger. The industry followed suit, pouring billions into data centers and power contracts on the belief that more parameters and more training tokens would yield smarter systems. Recent evidence, however, points to diminishing returns at the frontier.
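Scaling laws are usually fit as power laws: loss falls roughly as L(N) = E + A / N^α, where N is the parameter count, E is an irreducible floor, and A and α are fitted constants. The shape alone explains diminishing returns, because every doubling of N shrinks the reducible term by the same factor 2^(-α), so each doubling buys a smaller absolute gain than the one before. The minimal Python sketch below illustrates this; the constants are hypothetical, chosen only to show the curve's shape, and are not fitted to any real model family.

```python
# Hypothetical constants, chosen only to illustrate the shape of the curve;
# they are NOT fitted to any real model family.
E = 1.7      # irreducible loss floor
A = 400.0    # scale coefficient
ALPHA = 0.3  # power-law exponent


def loss(n_params: float) -> float:
    """Power-law loss curve: L(N) = E + A / N**ALPHA."""
    return E + A / n_params ** ALPHA


def gains_per_doubling(start: float, doublings: int) -> list[float]:
    """Absolute loss reduction bought by each successive doubling of N."""
    gains = []
    n = start
    for _ in range(doublings):
        gains.append(loss(n) - loss(2 * n))
        n *= 2
    return gains


if __name__ == "__main__":
    # Each gain is the previous one multiplied by 2**-ALPHA (about 0.81 here).
    for i, g in enumerate(gains_per_doubling(1e9, 5), start=1):
        print(f"doubling {i}: loss drops by {g:.4f}")
```

Doubling the model never stops helping under this curve, but the payoff per doubling shrinks geometrically while the cost of each doubling grows.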

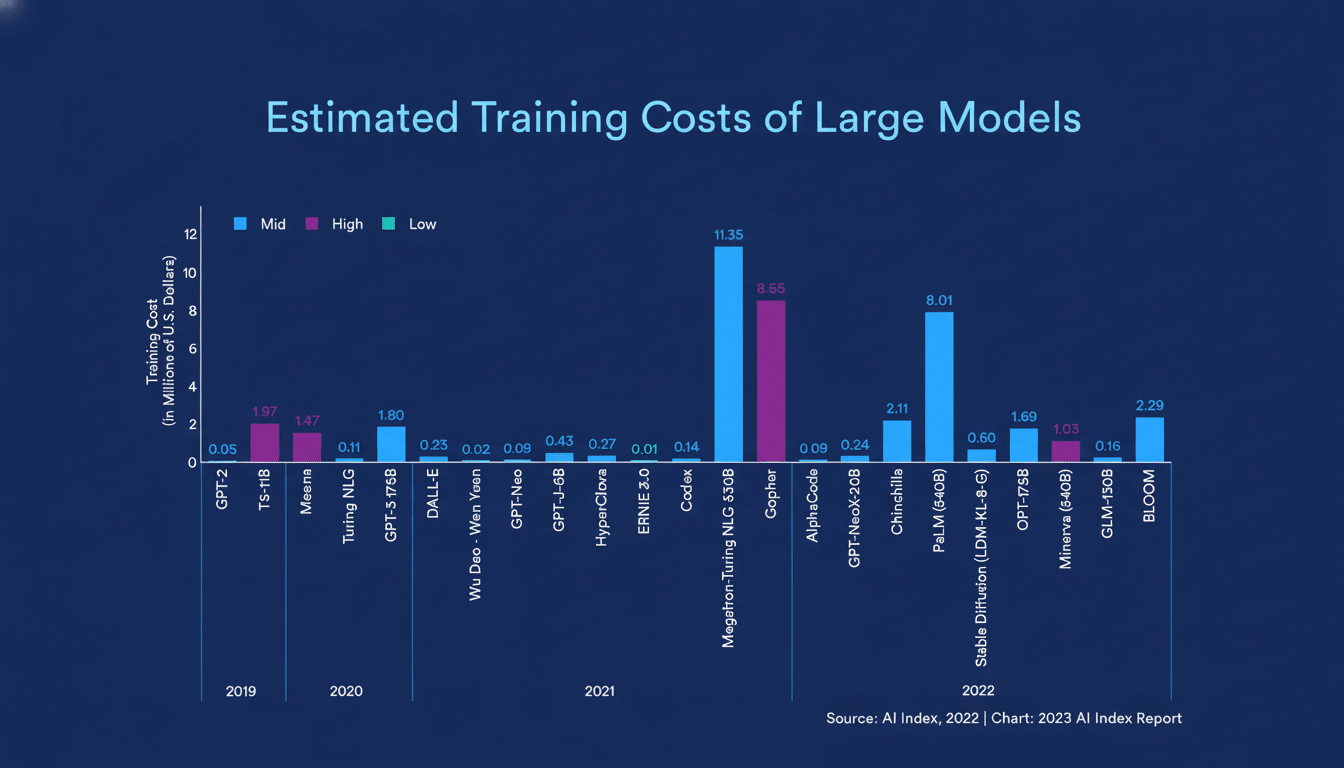

MIT researchers have pointed to diminishing performance returns from gargantuan language models as training costs soar. The Stanford AI Index has likewise tracked steep increases in compute budgets, with mixed results in downstream performance. Meanwhile, the International Energy Agency has warned that data center electricity demand could, within this decade, rival that of mid-sized countries, which puts an even sharper price on each additional percentage point of accuracy.

Hooker’s criticism is stark: scaling alone has not produced systems that can reliably navigate or interact with the real world. The result is a widening gap between benchmark wins and stable, cost-effective deployment at scale.

Learning from real-world experience, not just more tokens

People learn by adjusting: stub your toe once, and you step differently for a lifetime. Today’s big models, however, are mostly static after pretraining and fine-tuning. They can be steered, but they rarely update themselves from real-world feedback in production.

Reinforcement learning is meant to learn from experience, but in practice it is largely staged: offline datasets, simulated environments, episodic policy updates. “Richard Sutton, who is often thought of as the father of reinforcement learning and won the Turing Award, has said repeatedly that systems that don’t learn from continuous interaction with the environment are crippled,” she said. Even Andrej Karpathy has expressed skepticism about how far current RL methods can take frontier models.

Hooker’s thesis is about closing the loop in live deployment. She envisions models that, instead of depending on retraining cycles that require specialized teams, very large contracts, or even dedicated facilities, can safely absorb feedback, policy changes, and domain-specific signals in hours or minutes rather than days or weeks. The rationale is less philosophical than economic: adaptation could lower the total cost of ownership while improving reliability.

Inside Adaption Labs and its learn-from-experience focus

Adaption Labs, which she started with Sudip Roy, a fellow Cohere and Google veteran, is built around “learning from experience” as its core design principle. The team is hiring across engineering, operations, and design, with plans for a San Francisco office and a globally distributed staff, continuing Hooker’s earlier mission of widening access to AI research talent.

The company’s short-term goal is factory-floor adaptability: methods that let models tune themselves to enterprise-specific workflows, with robust safeguards against catastrophic forgetting and privacy leaks. Think continual learning, online evaluation, and control systems that manage policy updates and audit trails without retraining from scratch.

At Cohere Labs, Hooker was previously responsible for deploying compact models for enterprise use cases. That experience matters. Smaller specialized systems have recently matched or exceeded larger counterparts on coding, math, and reasoning benchmarks when paired with better data curation, structured tool use, or program-of-thought techniques. That is the lesson Adaption Labs hopes to operationalize: architectural and learning-based approaches can outperform raw scale on many real workloads.

The startup has been in talks to raise a large seed round, said to be in the $20 million to $40 million range according to investors who reviewed its materials, and is planning an ambitious roadmap. Hooker declined to comment on details, but the intent is clear: to show that continuous adaptation is technically feasible and far cheaper than brute-force scaling.

The economics behind a pivot to continuously adapting AI

Developers and enterprises still need tailor-made behavior, but customization is expensive. Some providers reserve their most advanced fine-tuning products and consulting for customers willing to spend eight figures, shutting out smaller players. If models can safely learn in situ, ingesting feedback from support tickets, call transcripts, code reviews, or human-in-the-loop annotations, reliance on monolithic retrains and boutique services shrinks.

There’s also a squeeze on inference costs. Newer “reasoning” approaches, such as multi-hop reasoning, may improve a model’s accuracy, but they increase latency and GPU time per query. Adaptation offers a different lever: reduce dependence on long reasoning chains by adapting the base model more closely to its domain over time. That’s an efficiency story with a direct P&L impact for any company, big or small, deploying AI at scale.

Risks of continuous learning and key milestones to watch

Learning in production is hard. Systems must avoid drifting into unsafe behavior, protect sensitive information, and retain previously acquired skills. Expect Adaption Labs to invest heavily in guardrails: offline shadow training, extensive eval suites, rollback mechanisms, policy constraints that keep updates within bounds, and audit trails.
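Several of these guardrails can be combined into a single accept-or-roll-back gate. The minimal Python sketch below assumes a toy model represented as a flat list of weights and a hypothetical `evaluate` function that scores a model on a held-out suite of previously learned tasks (higher is better). A candidate update is norm-clipped to keep it within bounds, applied to a shadow copy, and promoted only if the held-out score does not regress by more than a tolerance; every decision is appended to an audit log. The names `UpdateGate`, `try_update`, and `toy_eval` are illustrative only, and this is not a description of Adaption Labs’ system.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Model:
    """Toy stand-in for a deployed model: just a flat list of weights."""
    weights: list[float]


@dataclass
class UpdateGate:
    """Hypothetical gate: bounded update, shadow evaluation, accept or roll back."""
    max_step_norm: float   # policy constraint: largest allowed L2 norm of one update
    max_regression: float  # tolerated drop in held-out score before rejecting
    audit_log: list[str] = field(default_factory=list)

    def clip(self, delta: list[float]) -> list[float]:
        """Scale the update down so its L2 norm is at most max_step_norm."""
        norm = math.sqrt(sum(d * d for d in delta))
        if norm <= self.max_step_norm:
            return list(delta)
        scale = self.max_step_norm / norm
        return [d * scale for d in delta]

    def try_update(self, model: Model, delta: list[float], evaluate) -> Model:
        """Apply a clipped update to a shadow copy; keep it only if it does not regress."""
        baseline = evaluate(model)
        shadow = Model([w + d for w, d in zip(model.weights, self.clip(delta))])
        score = evaluate(shadow)
        if baseline - score > self.max_regression:
            self.audit_log.append(f"rejected: {baseline:.3f} -> {score:.3f}")
            return model  # roll back: the live model is left untouched
        self.audit_log.append(f"accepted: {baseline:.3f} -> {score:.3f}")
        return shadow


def toy_eval(model: Model) -> float:
    """Hypothetical held-out suite: score peaks when every weight equals 1.0."""
    return -sum((w - 1.0) ** 2 for w in model.weights)


gate = UpdateGate(max_step_norm=0.5, max_regression=0.01)
model = Model([0.0, 0.0])
model = gate.try_update(model, [0.3, 0.3], toy_eval)   # improves the score: accepted
model = gate.try_update(model, [-5.0, 0.0], toy_eval)  # clipped, still regresses: rejected
print(model.weights, gate.audit_log)
```

In a real system the “weights” might be adapter parameters or a full checkpoint, and the eval suite would cover safety and privacy checks alongside old-task accuracy; the sketch only shows the control flow of bounding the step, evaluating a shadow copy, and rolling back on regression.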

There are tangible milestones to watch.

- Can the company demonstrate step-change improvements on real enterprise tasks without expensive retrains?

- Does adaptation reduce the inference cost or latency needed to reach a given quality target?

- Will third-party evaluations from the likes of MLCommons or academic partners verify durability and safety across months, not days?

If Hooker is right, the payoff is more than a new tool. It could reshuffle who controls AI, shifting power from a few pioneering labs dutifully scaling ever-larger models toward a market where adaptable systems let more companies shape, and afford, the intelligence they actually want.