Microsoft suffered a brief but annoying outage across several of its key productivity products, which took core services like Microsoft 365 apps, Teams, and Outlook offline alongside Azure-backed services. The company narrowed the incident to an issue in its Azure Front Door content delivery network and a mistaken configuration of one portion of network infrastructure, which led to sporadic timeouts and sign-in requests before traffic was redistributed and service resumed.

What happened to Azure Front Door during the outage

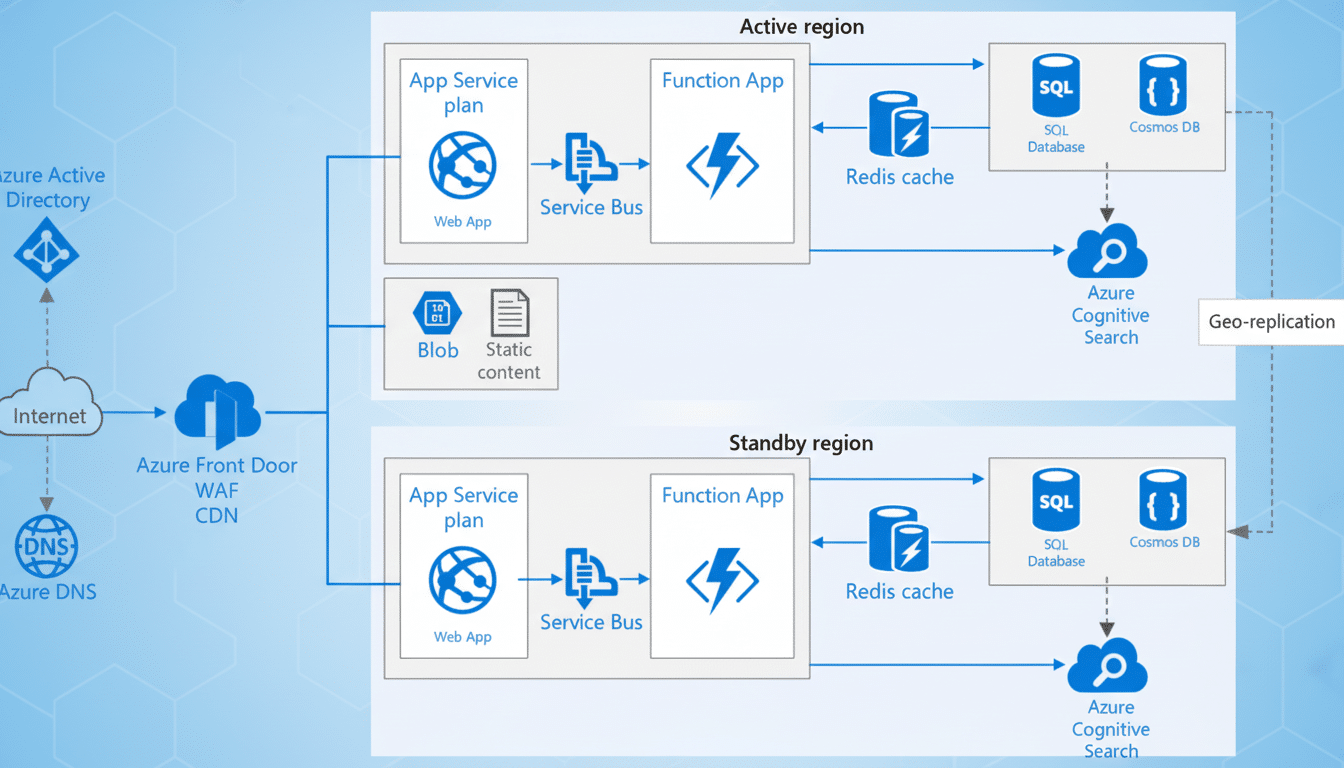

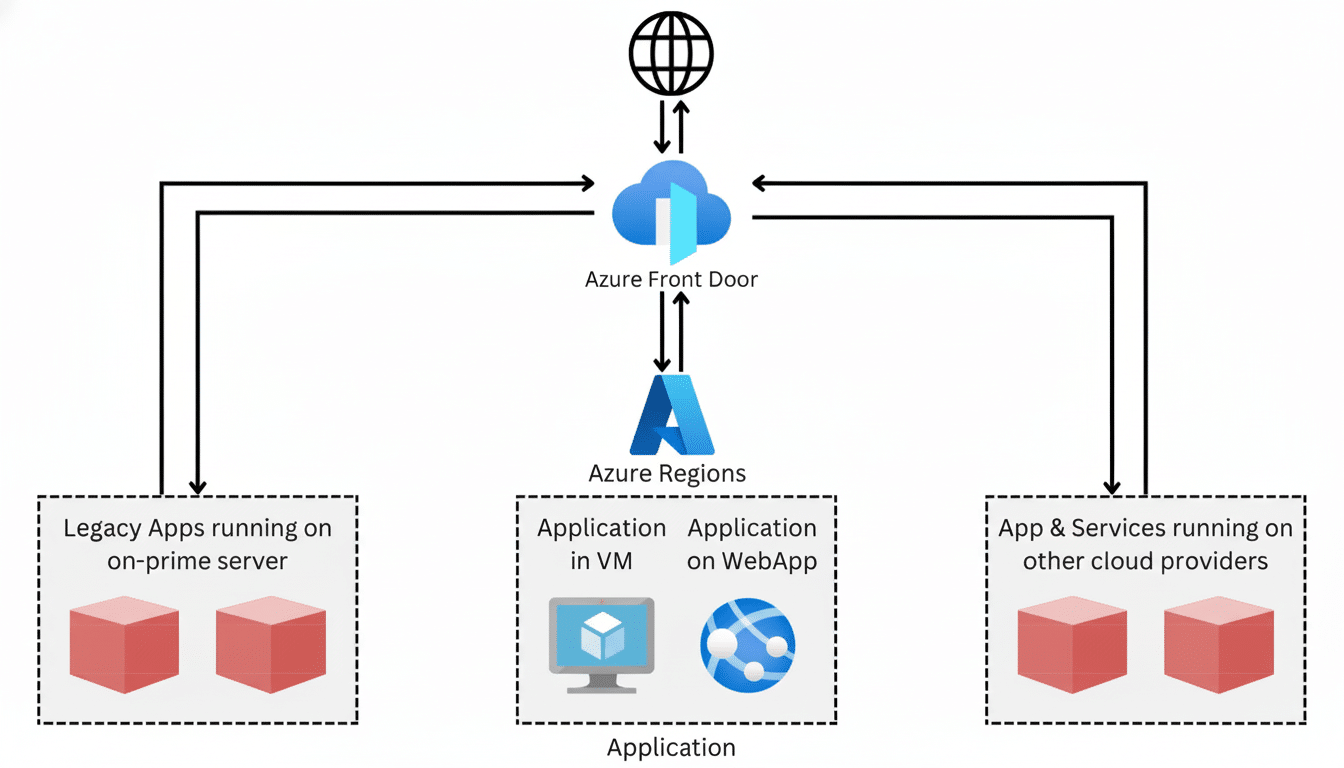

Azure Front Door sits on the edge of Microsoft’s cloud, helping to route and speed incoming requests for services such as Outlook, Exchange Online, Teams, SharePoint, and OneDrive. When configuration or routing policies at the edge break down, most customers feel it as slow loads, frequent retries, or even outright errors, often before their underlying applications start to misbehave.

Microsoft, for its part, said there was a misconfiguration of part of its North American network and a platform issue with Azure Front Door. The outcome was disrupted connections rather than a total shutdown: users saw delayed message delivery in Teams, calendar sync problems in Outlook, and web app errors for Word, Excel, and PowerPoint online. Microsoft said that it resolved the issue by adjusting traffic and verified recovery after observing stability.

Who felt the impact across regions and services

Reports peaked for Microsoft 365, Teams, and Outlook during United States business hours, with early signs of trouble also appearing in Europe, the Middle East, and Africa. There was the characteristic curve seen in many cloud edge incidents: steep spikes in reports beginning and ending over short spans, with complaints declining quickly as routing normalized.

The damage was most pronounced for collaboration-heavy teams reliant on Teams meetings, Outlook mail flow, and shared files in OneDrive and SharePoint. Organizations for whom email is a central workflow also suffered the pain of Exchange Online connectivity blips. Industry analysts from Gartner and IDC have long recorded Microsoft 365’s dominance in enterprise productivity, which is why even short interruptions can reverberate throughout a workday.

How Microsoft stabilized services and restored traffic

Microsoft reacted by addressing the misconfiguration and rerouting load to healthier paths in the Azure Front Door global edge. That often includes updating routing policies, validating health probes, and making sure the nearest possible edge location is capable of serving steady traffic. The company reported through its Microsoft 365 Status Twitter channel and Azure Service Health that, after rebalancing and following a period of monitoring, service health was normal.

This was a network and CDN layer issue, not a failure of a software update. Commentators sought to contrast that with the better-publicized incident in 2024 involving a third-party security update that triggered Windows blue-screen errors. In the most recent case, the apps themselves were still accessible; it was how people got to them that posed an issue.

Why this outage matters for cloud edge resilience

Contemporary SaaS relies on a delicate edge: global load balancing, Anycast routing, caching, and safety layers like web application firewalls. When some kind of configuration change or routing anomaly strikes at that level, a slew of products can go bad at once. It is the cloud’s issue with shared fate — high efficiency and resilience but a larger blast radius when something at the control plane goes wrong.

Microsoft and other hyperscalers also use safe deployment rings, progressive rollouts, and automated rollback options to handle risk (I know this from reading their best-practice engineering posts and resiliency briefings). Even then, edge cases illustrate how tiny missteps can snowball into user-visible issues, particularly during peak usage windows.

What IT teams need to do next to bolster resilience

- Check coordination and continuity plans for co-operation and mail.

- Make sure staff members are aware of workarounds, such as toggling between desktop clients and the web version, or working from cached documents in Office apps while cloud fetching lags.

- Define a temporary backup channel for critical communications, and document decision criteria by which to activate the temporary backup channel.

- Proactively monitor by integrating status notifications from Microsoft 365 Service Health and Azure Status with your own custom user-experience checks.

- Log authentication dependencies, since sign-in hiccups could pass as app crashes.

- Hold a brief post-incident review: which teams were affected, what playbook steps worked best, and where extra automation or user education might reduce future downtime.

The takeaway is straightforward. A CDN-edge mistake temporarily crimped the front door to Microsoft 365, Teams, Outlook, and co. Microsoft redirected traffic and restored availability, but the incident is an alarm that resilience planning should begin at the network edge — where most cloud journeys start.