The United States has levied a 25% tariff on Nvidia’s H200 artificial intelligence accelerators when those chips are routed through the U.S. on their way to China, sharpening Washington’s use of trade tools to shape the global AI supply chain. The measure, set out in a presidential proclamation, also covers certain rival products such as AMD’s MI325X and formalizes a pathway the Commerce Department created to allow vetted Chinese buyers to receive advanced accelerators under strict controls.

Nvidia publicly welcomed the decision because it preserves access to approved customers in China, even as it raises costs. Demand signals from Chinese cloud and internet firms have been strong, and early orders suggest the H200—Nvidia’s H100 successor with faster HBM3e memory—will be absorbed quickly by buyers seeking more training and inference capacity.

Details of what the new AI chip tariff actually covers

The tariff applies to advanced AI chips produced outside the U.S. that pass through American territory before being exported to foreign customers. It does not apply to chips imported into the U.S. for domestic use in research, defense, or commercial deployments, according to the proclamation. In effect, the rule targets transshipment via U.S. logistics hubs without limiting U.S. users’ access to the same hardware.

The administration framed the move as a national and economic security measure. The proclamation notes the U.S. currently manufactures roughly 10% of the chips it consumes, underscoring dependence on foreign fabrication. Industry figures from the Semiconductor Industry Association have long placed U.S. manufacturing capacity in the low teens by global share, a gap the CHIPS and Science Act incentives are intended to close.

This tariff complements Commerce Department export controls that require licensing and customer vetting for high-performance accelerators headed to China. By layering a 25% duty on top of licensing, the U.S. both constrains who can buy and makes those approved purchases more expensive.

Pricing shock and cost impact for Chinese AI builders

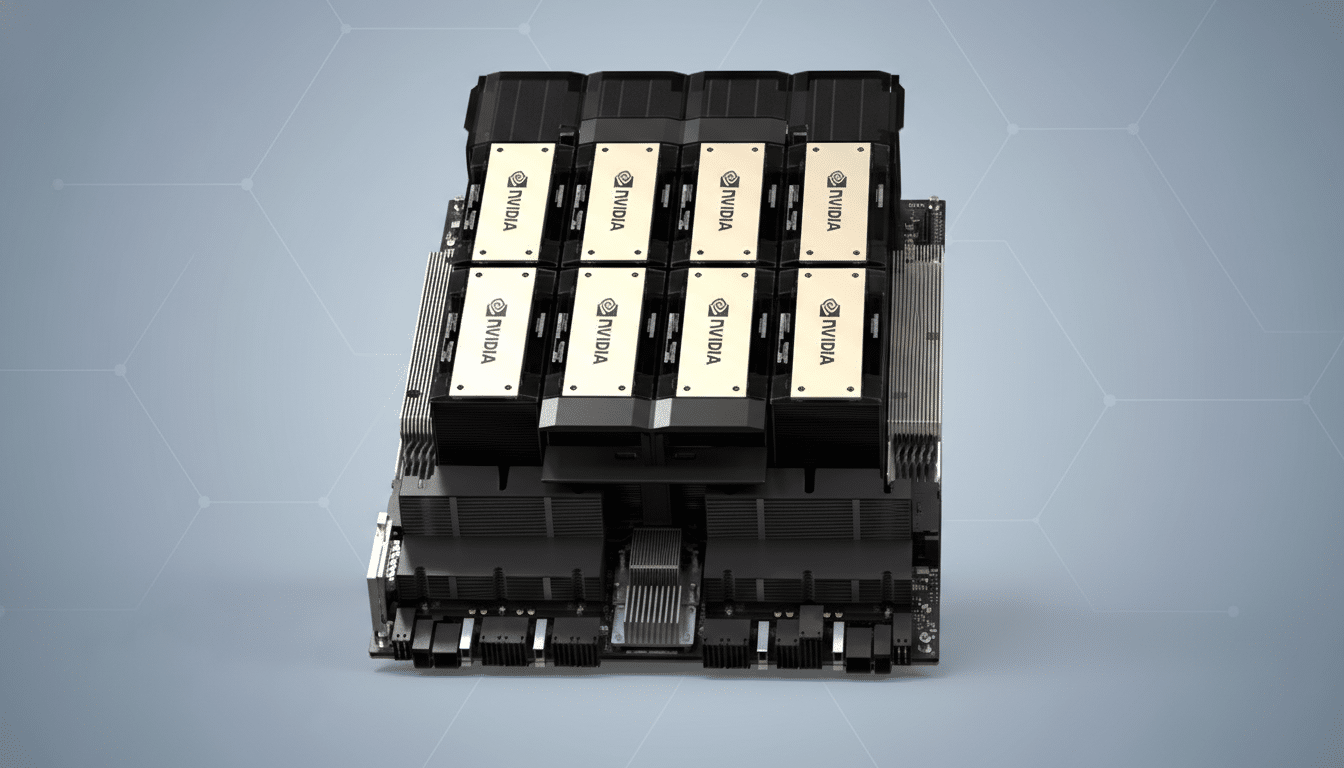

The immediate question is cost. Street pricing for top-tier AI accelerators has ranged from the mid-$20,000s to well above $40,000 per unit depending on configuration and supply. If a single H200 accelerator costs, for example, $35,000, a 25% tariff adds $8,750 per unit. A standard 8-GPU server would therefore carry roughly $70,000 in additional duties (8 × $8,750) before considering software, networking, and power upgrades.
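The arithmetic above can be reproduced with a short sketch. The $35,000 unit price is the article's illustrative figure, not a quoted price, and the function names and the assumption that the duty is a flat percentage of the unit price are ours for illustration; actual customs valuation may differ.

```python
from decimal import Decimal

# 25% duty, as stated in the article. Applying it as a flat percentage
# of the unit price is a simplifying assumption.
TARIFF_RATE = Decimal("0.25")


def duty_per_unit(unit_price: Decimal, rate: Decimal = TARIFF_RATE) -> Decimal:
    """Duty owed on a single accelerator at the given price."""
    return unit_price * rate


def duty_per_server(
    unit_price: Decimal, gpus_per_server: int = 8, rate: Decimal = TARIFF_RATE
) -> Decimal:
    """Duty owed on one server, counting only its accelerators."""
    return duty_per_unit(unit_price, rate) * gpus_per_server


if __name__ == "__main__":
    # Hypothetical price points spanning the range cited in the article.
    for price in (Decimal("25000"), Decimal("35000"), Decimal("40000")):
        print(
            f"unit ${price}: duty ${duty_per_unit(price)} per accelerator, "
            f"${duty_per_server(price)} per 8-GPU server"
        )
```

At the article's $35,000 example, this gives $8,750 per accelerator and $70,000 per 8-GPU server; at the low and high ends of the cited price range, the per-server duty runs from about $50,000 to $80,000.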

For hyperscale buyers in China, such as cloud operators and platform companies, the added cost is meaningful but not necessarily prohibitive when weighed against the time-to-market benefits of cutting-edge GPUs. Some firms may recalibrate cluster sizes, stretch refresh cycles, or shift certain workloads to domestic accelerators. Huawei’s Ascend line, for instance, has gained traction for specific inference tasks, though Nvidia is still widely seen as leading in developer tooling and ecosystem maturity.

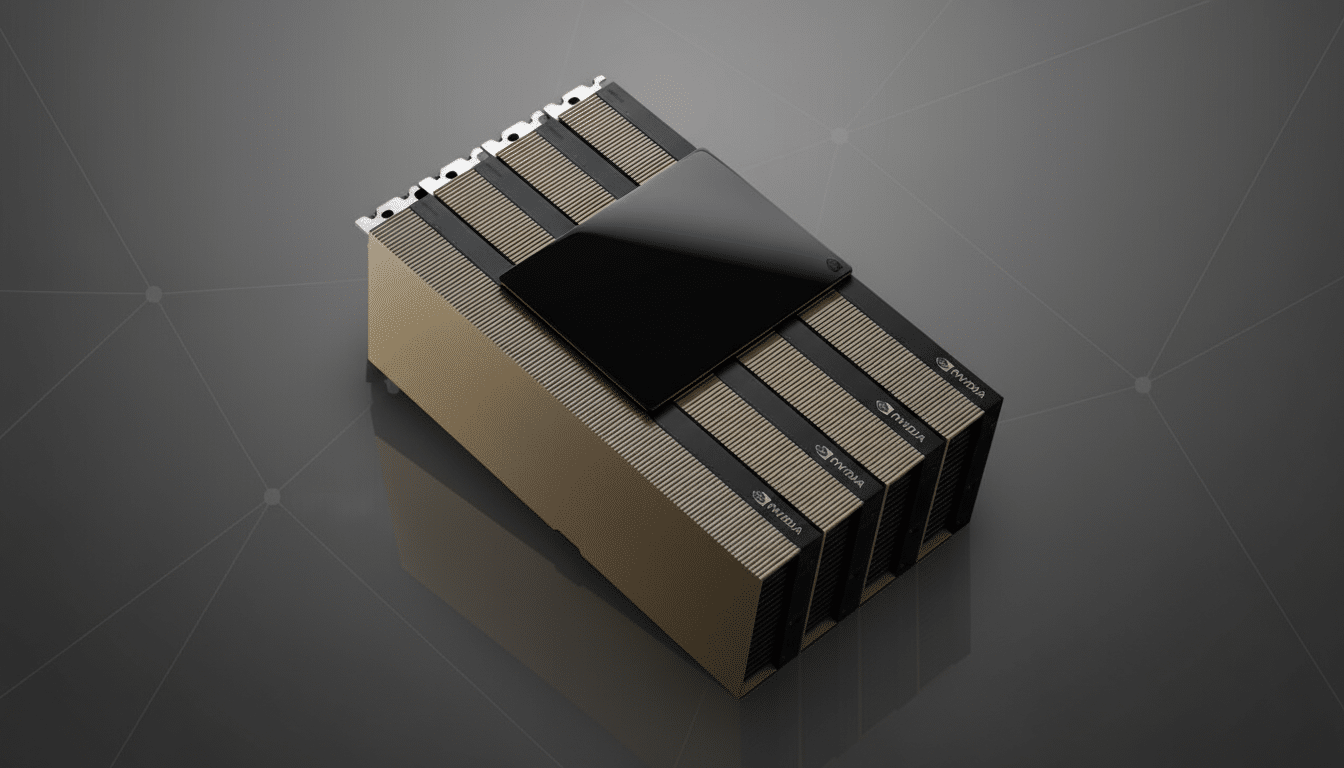

Another constraint is memory. TrendForce and other market researchers have highlighted tight supplies of high-bandwidth memory (HBM) from SK hynix, Samsung, and Micron. Even without tariffs, HBM availability—critical for H200 performance—has been the gating factor for AI server buildouts. Tariffs lift prices; HBM bottlenecks cap volumes.

Nvidia’s strategy and supply chain adjustments

Nvidia’s supportive stance reflects a pragmatic tradeoff: a tariffed channel is better than no channel. The company can also explore logistics adjustments. Because the duty is triggered when chips produced abroad pass through the U.S. before re-export, expect greater use of direct shipping from Asian contract manufacturers and packaging partners to approved Chinese customers, where permitted by licenses.

Most H200s are built on Taiwan Semiconductor Manufacturing Company processes and packaged with advanced CoWoS, a step that has been a throughput choke point. Any effort to bypass U.S. distribution hubs would need to align with Commerce licensing, end-user screening, and compliance audits. AMD faces a similar calculus with the MI325X, which targets high-bandwidth workloads and is explicitly named in the tariff scope.

Beijing’s likely countermoves and procurement guidance

Chinese policymakers are navigating between two imperatives: accelerate domestic chip capabilities and avoid compute shortfalls that could stall AI development. Reporting from Nikkei Asia indicates Beijing is drafting guidance capping or prioritizing overseas AI chip purchases. That would institutionalize a quota-like regime, ensuring access where most needed while reinforcing demand for homegrown alternatives.

In practice, that could mean steering state-linked buyers toward domestic accelerators for inference and reserving licensed Nvidia or AMD parts for frontier model training, where software stacks and performance-per-watt are most advantageous. Procurement rules, financing support, and cloud credits are likely policy levers.

Key variables to watch next for tariffs and AI supply

Three variables will determine the tariff’s real-world bite. First, routing: how much approved hardware can ship directly from foundry and packaging sites to China without U.S. transit. Second, pricing power: whether Nvidia and its partners absorb any portion of the duty in strategic deals or pass it through entirely. Third, policy cadence: whether Washington layers on additional controls or expands tariff scope, and how quickly Beijing finalizes its import guidelines.

Also keep an eye on customs classifications and implementation details. The tariff’s effect will hinge on how specific chips and systems are coded and whether integrated systems with multiple components see blended rates. Trade lawyers and compliance teams will be busy translating the proclamation into purchasing playbooks.

Bottom line: the U.S. has created a costlier, more controlled lane for cutting-edge AI chips to reach China, without fully closing it. For now, the AI race will proceed with higher bills of materials, tighter supply, and more paperwork—just the kind of friction that can shift roadmaps, but not stop them.