AWS re:Invent concluded with one very clear takeaway: agentic AI is moving from demos into production, and Amazon is retooling its stack, from chips and servers to models, agents, and pricing, to make that happen. The headline announcements include the Trainium3 AI chip and UltraServer system, policy guardrails and memory for AgentCore, new Nova family models, and two carrots for customers: Database Savings Plans and startup credits for the Kiro coding suite.

Agentic AI takes the spotlight with production-ready tools

AWS management pitched re:Invent as a showcase for autonomous, task-completing agents that connect back to enterprise systems of record. “And for that, we need to create a new type of agent that can do tasks and automation on your behalf,” CEO Matt Garman said, describing what AWS believes will be the first wave of measurable ROI. Swami Sivasubramanian, who now runs Agentic AI for AWS, highlighted the momentum behind agents that plan, call tools, write code, and execute workflows, with an emphasis on speed from idea to impact.

Custom models without heavy lifting via tuning and RFT on AWS

To speed up custom AI, AWS introduced serverless model tuning in SageMaker, which lets teams tune models without wrangling infrastructure. For Bedrock, the company launched Reinforcement Fine-Tuning (RFT), which lets developers select a reward system or use an existing workflow and then has Bedrock perform the customization end to end. The pitch: fewer ops hoops to jump through, quicker iterations, and a way for people who are not LLM specialists to steer domain-specific behavior.
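To make the idea of a reward concrete, here is a minimal sketch of the kind of scoring function that reinforcement fine-tuning depends on. It is plain illustrative Python, not Bedrock's API: the function name `score_response`, its parameters, and the scoring rule are all assumptions chosen for the example.

```python
def score_response(response: str, required_terms: list[str], max_words: int = 120) -> float:
    """Hypothetical reward in [0, 1]: share of required terms mentioned,
    halved when the answer exceeds the word budget."""
    if not required_terms:
        return 0.0
    text = response.lower()
    # Count how many required terms appear anywhere in the response.
    hits = sum(1 for term in required_terms if term.lower() in text)
    reward = hits / len(required_terms)
    # Penalize answers that run past the (assumed) word budget.
    if len(response.split()) > max_words:
        reward *= 0.5
    return reward
```

A tuning loop would favor responses that score higher under a function like this; a real reward for a support or coding task would be considerably richer.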

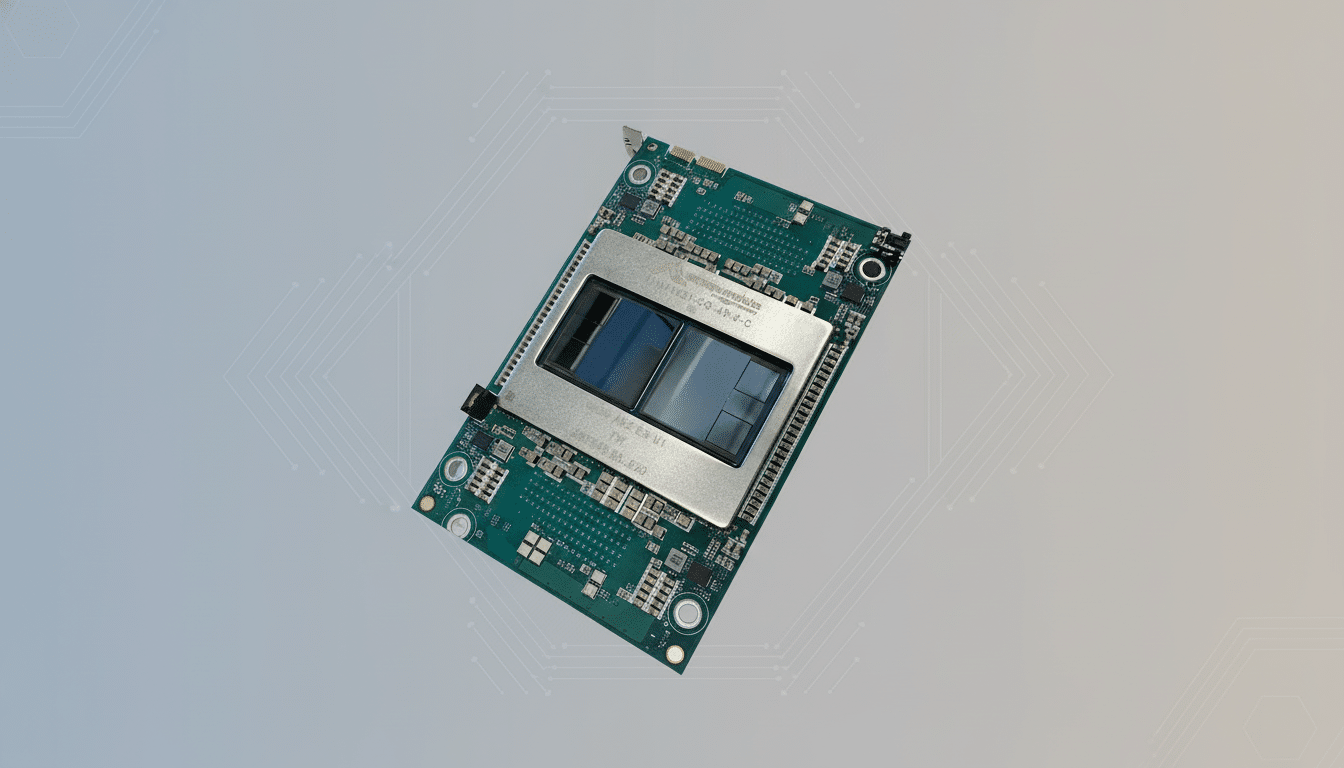

New silicon and systems promise faster AI with lower energy use

On the hardware side, AWS introduced Trainium3 and UltraServer, which it says deliver up to 4x the performance for both training and inference and a 40% reduction in energy consumption compared to the previous generation. Next on the roadmap is Trainium4, which Amazon said is already in development and will work alongside NVIDIA chips, indicating a hybrid approach rather than an all-in bet. Amazon’s key execs also hinted that Trainium2 is already a solid revenue stream, setting the stage for a next-gen roadmap.

Enterprise-class governable agents gain policies, memory, tests

AWS’ agent-building platform, AgentCore, has added a Policy feature that lets teams define what an agent can do, where it can act, and which tools and data it can touch. New memory and logging capabilities let agents remember user intent and preferences over time, which matters for long-running tasks. AWS also unveiled 13 prebuilt evaluations that help teams verify an agent is reliable, safe, and able to complete a task before it is deployed more widely.
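The policy idea described above amounts to an allow-list check before an agent acts. The sketch below illustrates that concept only; it does not use AgentCore's actual API, and the class `AgentPolicy`, its fields, and the tool and dataset names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical policy: which tools and datasets an agent may use."""
    allowed_tools: frozenset[str] = field(default_factory=frozenset)
    allowed_data: frozenset[str] = field(default_factory=frozenset)

    def permits(self, tool: str, dataset: str) -> bool:
        # Deny by default: both the tool and the dataset must be allow-listed.
        return tool in self.allowed_tools and dataset in self.allowed_data


# Example policy for an assumed customer-support agent.
support_policy = AgentPolicy(
    allowed_tools=frozenset({"lookup_order", "issue_refund"}),
    allowed_data=frozenset({"orders"}),
)
```

With this policy, `support_policy.permits("lookup_order", "orders")` is allowed, while a call that touches an unlisted dataset such as `"payroll"` is refused.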

AWS launched three “Frontier” agents, including the Kiro autonomous coding agent, which is meant to learn team norms and work for hours or days with little input. Of the other two, one is a security-minded agent that does code reviews and policy checks, and the other is a DevOps-oriented agent designed to help teams deploy changes and prevent incidents during rollouts. Preview versions are available now.

Model lineup expands and Nova Forge offers staged training paths

AWS also expanded its Nova model family with four new models: three text generators and one multimodal model that can generate both text and images. For customers who want more control over training stages, Nova Forge provides access to pre-trained, mid-trained, or post-trained models and lets teams complete training with proprietary data. The throughline is flexibility: choose your training stage, bring your own data, and tune governance with AgentCore.

Pricing relief and startup benefits, including database savings

In a change likely to end up on the desk of every CFO (or finance manager) in technology, AWS announced Database Savings Plans, which lower costs by up to 35% in exchange for a commitment based on hourly compute usage over a one-year term. The savings apply automatically across supported databases, and any usage beyond the commitment is billed at regular (on-demand) rates. Duckbill Group’s Corey Quinn, a loud critic of database pricing for some time now, called the change overdue and welcome.
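The commitment-plus-overage mechanics can be sketched with a little arithmetic. This is a simplified model, not AWS's billing logic: it assumes the full 35% discount (the article's "up to" figure), measures usage in on-demand dollars per hour, and charges the commitment whether or not it is used. The function name `hourly_cost` and its parameters are hypothetical.

```python
def hourly_cost(on_demand_usage: float, committed_usage: float, discount: float = 0.35) -> float:
    """Simplified hourly bill under a savings plan (all values in on-demand dollars).

    Assumptions: the committed amount is always charged at the discounted
    rate, and usage above the commitment is charged at full on-demand rates.
    """
    committed_charge = committed_usage * (1 - discount)
    overage = max(0.0, on_demand_usage - committed_usage)
    return committed_charge + overage


def hourly_savings(on_demand_usage: float, committed_usage: float, discount: float = 0.35) -> float:
    """Savings versus paying on-demand for everything (negative if over-committed)."""
    return on_demand_usage - hourly_cost(on_demand_usage, committed_usage, discount)
```

Under these assumptions, a team that commits to $10 an hour and uses $12 pays about $8.50 for that hour instead of $12. A team that commits to $10 but uses only $4 still pays about $6.50, which is why sizing the commitment to steady baseline usage matters.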

As a benefit to developers and early-stage companies, AWS is offering eligible startups 12 months of free Kiro Pro+ credits, with availability limited to certain countries. The bet is simple: put a capable agentic coding tool in teams' hands at no upfront cost, and convert that usage into durable workloads as they grow.

Customer proof points and data sovereignty with AI Factories

Lyft shared results from an AI agent built with Anthropic’s Claude via Amazon Bedrock to address driver and rider concerns. This year, the company has reduced average resolution time by 87% and increased driver usage by 70%, indicators that agentic flows can move the support metrics that matter while reducing the load on human staff.

For regulated sectors and government, AWS announced “AI Factories,” which install its AI systems in customers' own data centers. Powered by NVIDIA technology and integrated with Amazon’s Trainium3, the configuration is designed to address data sovereignty requirements by keeping sensitive datasets local while still giving customers access to modern AI models and accelerators.

Why this matters: a cohesive path to safer, practical AI

The re:Invent package ties together the layers that enterprises actually care about: faster silicon, governable agents, flexible model training, and real cost controls. The emphasis on policies, evaluation, and memory suggests AWS knows that agent safety and reliability are still the gating factors. At the same time, on-prem AI Factories and Trainium-NVIDIA interoperability acknowledge a hybrid, multi-silicon future. The strategy is vintage AWS: widen the toolkit, eliminate operational drag, and let customers set the tempo.