Google is set to re-enter the smart eyewear race with AI-powered glasses launching next year, a strategic return to a category it helped pioneer more than a decade ago with Google Glass. This time around, the emphasis is squarely on real-world utility, fashion-friendly design, and deep integration with Gemini, Google's multimodal AI assistant.

Two devices, two paths: partnerships and product focus

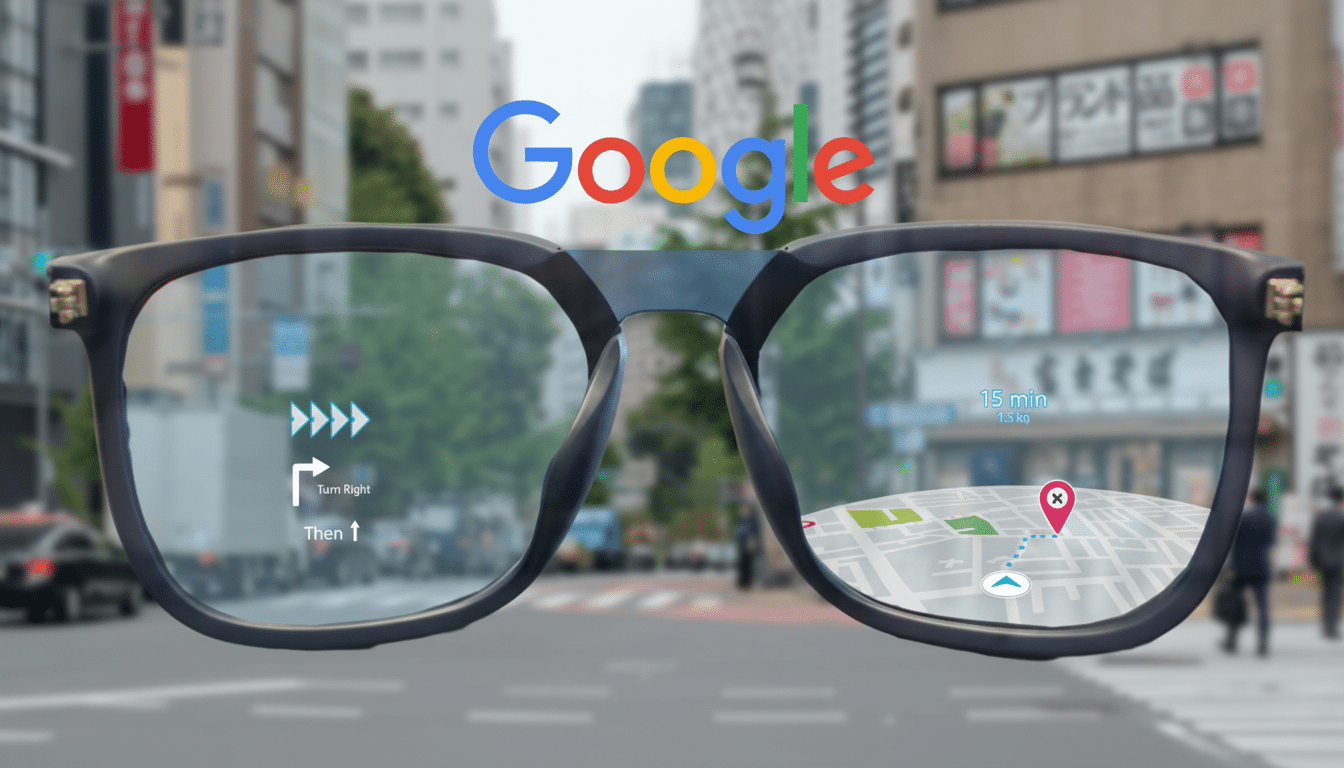

Google says there will be two separate products: one developed with Samsung and Gentle Monster, and one with Warby Parker. The first is a slim pair of "AI glasses" with no display. Think hands-free queries to Gemini, photo capture, and audio feedback through unobtrusive speakers, closer to Meta's Ray-Ban partnership than to a typical AR headset. The second, a higher-end pair of "display AI glasses," adds an in-lens display for private turn-by-turn directions, live translation captions, and glanceable prompts.

Both devices will run Android XR, Google's operating system for extended reality that spans headsets and glasses. In practice, that means shared developer tools, a predictable update cadence, and cross-device features such as navigation, assistant capabilities, and media playback controls. It also lays the groundwork for an ecosystem that can mature from audio-first experiences to display-rich ones without users or developers having to start from scratch.

What the AI glasses without displays will be capable of

The non-display model isn't meant to be a futuristic visor but an everyday companion worn everywhere. It includes built-in microphones, a camera, and near-ear speakers, so you can hear voice prompts, run searches, and take pictures hands-free using AI features. Google's lens here is practical: deliver instant answers and lightweight capture without the battery and bulk penalties of a display.

Industry trackers see a natural fit with Qualcomm's AR-focused chipsets, such as the Snapdragon AR1 Gen 1 that powers recent camera glasses. Google has not announced specific silicon, but Android XR support suggests close ties to Qualcomm's XR platforms, which offer accelerated on-device AI processing, low-latency camera pipelines, and longer battery life. Expect wake-word controls, camera privacy indicators, and fast image-to-insight workflows through Gemini.

Raising the ceiling with the display model

The "display AI glasses" are where the category gets interesting. An in-lens display allows for silent, glanceable computing: walking directions, translation captions, calendar nudges, and context-aware suggestions that don't require taking out a phone. If Google can include on-device transcription and translation here, it could bring the live-caption capabilities of Pixel and Android to a more natural form factor.

Hardware will be the gating factor. The displays need to be bright enough for outdoor use without draining the battery, and heat, weight, optics, and alignment remain stubborn problems. Still, by splitting the lineup into audio-first and display-focused models, Google appears to be designing with discipline: ship something useful now, then iterate toward a premium heads-up experience once the technology allows all-day use.

Why the timing matters for Google’s smart glasses push

The wearables sector is ripe for a new product category beyond watches and earbuds. IDC and Counterpoint Research both note that smart glasses are still a niche, but momentum is growing as AI assistants become more conversational and context-aware. Google's advantage is the full stack: a Gemini-powered assistant, Maps, Photos, YouTube, and Android XR, all knit together with a privacy model refined across phones and watches.

Crucially, Google isn't going it alone. Fashion-first partners like Gentle Monster and Warby Parker can help address the perennial problem of smart glasses that look like gadgets. If these frames pass the mirror test, adoption could follow. As Meta's Ray-Ban line has shown, style and simplicity are table stakes for technology worn on the body, and Google seems to have learned that lesson.

The competitive field shaping consumer AI eyewear today

Meta's Ray-Ban glasses brought camera-plus-assistant features to mainstream eyewear and set a benchmark for battery life, weight, and audio quality. Amazon Echo Frames and Bose audio glasses have explored notification triage and voice input, while Snap's Spectacles showed that developers are interested in AR experimentation, even if consumer AR offerings have yet to gain real traction. Google's wager is that a cohesive OS, a strong assistant, and app-level integrations will move the category from novelty to necessity.

Key hurdles and how Google could clear them

Battery life, thermal management, and privacy are the three big challenges. Expect aggressive power gating, offloading to the phone whenever possible, and opportunistic on-device AI for speed and cost. On privacy, visible camera LEDs, hard mute switches, and transparent capture policies are the bare minimum. Google has experience here, and Android's permission model should translate well to glasses.

On the software front, developers will need solid Android XR APIs: scene understanding, low-latency voice capture, reliable notification surfaces, and safe background services. If Google launches with first-class navigation, translation, messaging, and photo capture, third-party developers are likely to follow. The tipping point will come when a quick glance or a terse voice command is faster than pulling out a phone.

Bottom line: what Google needs to win with AI glasses

Google's approach, leading with audio-first AI glasses and following with a display-equipped version, seems both pragmatic and ambitious. The former is built for everyday, on-the-go use, while the latter aims to change how we take in information when we are out and about. If Google can deliver comfort, battery life, and a Gemini experience that feels genuinely useful, the long-predicted moment when ordinary people wear smart glasses may finally arrive.