The AI boom is now a race to lock up compute, power and real estate at a scale the world has never seen. The largest players are signing billion-dollar deals that look more like national infrastructure programs than typical IT contracts, from power purchase agreements and long-term cloud commitments to GPU capacity reservations. On recent earnings calls, Nvidia has described this decade’s AI buildout as “multi‑trillion,” and the market is behaving accordingly.

What has changed is the pace and structure of these commitments. Hyperscalers are prepaying for accelerators, signing multi-year energy agreements and bundling equity, credits and technical integration into a single purchasing decision. The result is a self-reinforcing flywheel: capital, chips and power amplify one another, squeezing supply and raising the stakes for any new entrant.

Cloud Power Plays and Strategic Alliances

Microsoft’s close relationship with OpenAI set the template: an initial $1 billion partnership grew into a multiyear, multibillion-dollar extension that combined up-front cash with Azure credits and priority access to GPU clusters. The structure matters. Cloud credits keep compute spending from showing up as cash burn on the startup’s books, while guaranteeing the hyperscaler utilization of its platform and exclusivity over the workloads.

Amazon followed a similar playbook with Anthropic, committing $4 billion and tying the investment closely to its AWS Trainium and Inferentia chips. Google took a hybrid approach, investing in Anthropic while courting a wide range of model companies to standardize on Google Cloud’s TPU-backed infrastructure. Each bet is less about headline valuation and more about anchoring AI training and inference to a single cloud stack for years.

Cross-cloud spillover to relieve capacity shortages is another notable shift. OpenAI said it would use Oracle Cloud Infrastructure to supplement its Azure capacity, an explicit acknowledgment that demand for accelerators can outstrip the inventory of any single cloud provider. Microsoft’s $1.5 billion investment in the UAE’s G42, meanwhile, tied funding to compliance, export controls and adoption of Azure: a clear example of “sovereign AI” deals where geopolitics meets GPU scarcity.

The GPU Arms Race and Vendor Financing Models

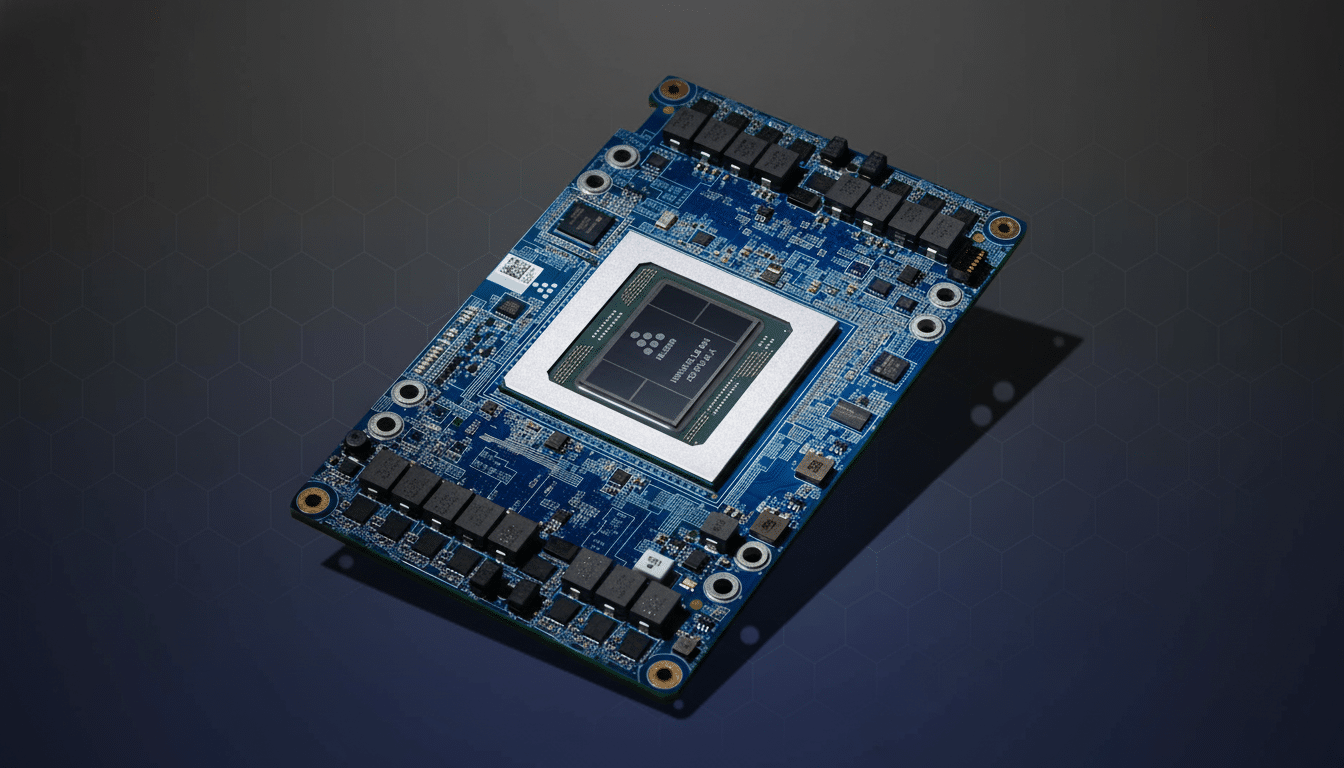

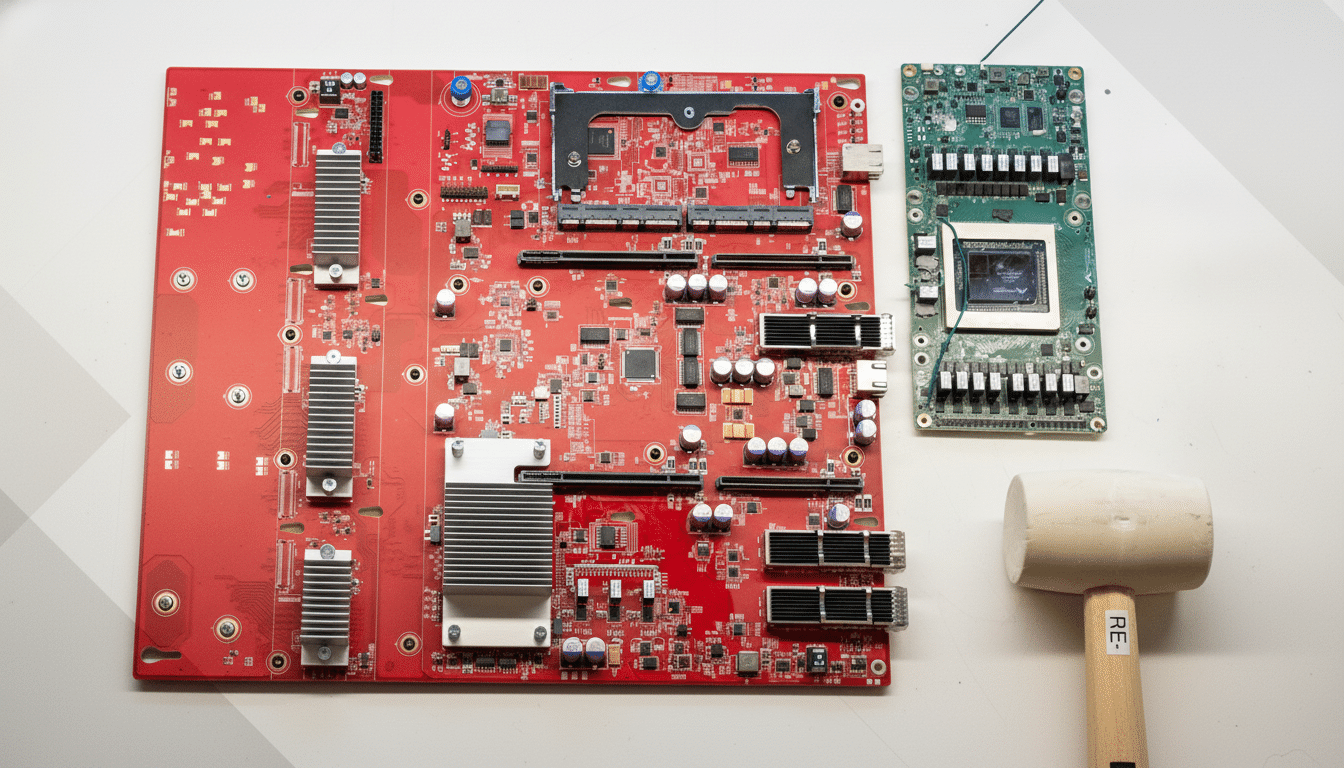

Nvidia remains the choke point for training-class accelerators. Industry analysts estimate the company holds roughly 98% of the high-end AI accelerator market, and customers are signing take-or-pay contracts, noncancelable purchase obligations and prepayments to secure delivery of future Blackwell-generation parts. Omdia and Dell’Oro Group project that AI server revenue will reach hundreds of billions of dollars within a few years, driven by GPU-dense racks and high-bandwidth networking.

Sticker shock is baked into the math. A single top-tier GPU can cost tens of thousands of dollars, and a modern training cluster can approach hundreds of millions once you add the InfiniBand or Ethernet fabric, liquid cooling and power distribution. OEMs such as Supermicro, Dell and HPE are reporting record orders tied to multi-year capacity reservations, while cloud providers have introduced “reserved compute” SKUs that work much like airline seats: pay to lock in capacity or risk waiting.
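To see how quickly these numbers compound, here is a back-of-envelope sketch. The GPU count, per-GPU price and overhead ratio for networking, cooling and power are hypothetical round numbers chosen for illustration, not figures from any vendor or deal.

```python
# Back-of-envelope capital cost for a GPU training cluster.
# Every input below is a hypothetical, illustrative assumption.

def cluster_capex(num_gpus: int, gpu_unit_cost: float, overhead_ratio: float) -> float:
    """Estimate total cluster cost in dollars.

    overhead_ratio is an assumed markup on GPU spend covering the network
    fabric (InfiniBand or Ethernet), liquid cooling and power distribution.
    """
    gpu_spend = num_gpus * gpu_unit_cost
    return gpu_spend * (1.0 + overhead_ratio)


if __name__ == "__main__":
    # Assumed: 10,000 GPUs at $30,000 each, plus 50% for fabric, cooling and power.
    total = cluster_capex(num_gpus=10_000, gpu_unit_cost=30_000.0, overhead_ratio=0.5)
    print(f"Estimated cluster cost: ${total / 1e6:,.0f} million")
    # -> Estimated cluster cost: $450 million
```

Even with these assumed inputs the total lands in the hundreds of millions; a real budget would also include buildings, storage and staffing, which this sketch ignores.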

Financing is evolving, too. Instead of straightforward purchases, deals now combine discounted compute, priority access to next-gen chips or co-engineering support in exchange for multi-year commitments or equity. It is essentially vendor financing for AI capacity, and it lets both sides scale much faster than they could from operating cash flow alone.
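The price side of trading a commitment for discounted compute can be sketched as a simple break-even calculation. This minimal sketch assumes a flat committed hourly rate billed for every hour of the term versus a higher on-demand rate billed only for hours actually used; the rates and hours are hypothetical placeholders. It captures cost only, not the guaranteed access to scarce chips that often motivates these deals in the first place.

```python
# Compare a discounted, committed (reserved) GPU rate with pay-as-you-go pricing.
# Rates and hours are hypothetical placeholders, not any provider's actual prices.

def breakeven_utilization(reserved_rate: float, on_demand_rate: float) -> float:
    """Share of committed hours that must actually be used for the
    commitment to cost less than buying the same hours on demand.

    A commitment is billed for every hour of the term; on-demand is billed
    only for hours used. The commitment is cheaper when
    used_hours * on_demand_rate > term_hours * reserved_rate,
    i.e. when used_hours / term_hours > reserved_rate / on_demand_rate.
    """
    if reserved_rate <= 0 or on_demand_rate <= 0:
        raise ValueError("rates must be positive")
    return reserved_rate / on_demand_rate


def total_costs(reserved_rate: float, on_demand_rate: float,
                term_hours: float, used_hours: float) -> tuple[float, float]:
    """Return (committed_cost, on_demand_cost) for one GPU over the term."""
    return reserved_rate * term_hours, on_demand_rate * used_hours


if __name__ == "__main__":
    # Assumed: $2.00/GPU-hour committed vs $4.00/GPU-hour on demand, one-year term.
    term = 8_760  # hours in a 365-day year
    print(breakeven_utilization(2.00, 4.00))  # -> 0.5
    committed, on_demand = total_costs(2.00, 4.00, term_hours=term, used_hours=6_000)
    print(f"committed ${committed:,.0f} vs on-demand ${on_demand:,.0f}")
    # -> committed $17,520 vs on-demand $24,000
```

Under these assumed prices, a buyer who expects to keep the GPU busy more than half the time comes out ahead by committing; the deeper the discount, the lower that threshold.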

Data Centers and Power Deals Driving AI Capacity

Compute without electricity is just idle silicon. The International Energy Agency projects that, with AI as a major driver, global electricity consumption from data centers could reach roughly 1,000 terawatt-hours a year within the next couple of years. That has pushed hyperscalers into the energy market in ways that look more like utilities than technology companies.
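Energy forecasts are usually stated in terawatt-hours per year, while power deals are quoted in gigawatts. A minimal conversion sketch, assuming a perfectly flat load across a 365-day year, shows how the two units relate; the inputs are illustrative.

```python
# Convert between annual energy (TWh per year) and average continuous power (GW).
# Assumes a flat load across a 365-day year; real data center demand varies.

HOURS_PER_YEAR = 8_760  # 365 days x 24 hours


def twh_per_year_to_avg_gw(twh: float) -> float:
    """Average power in GW implied by a given annual energy use in TWh."""
    gwh = twh * 1_000            # 1 TWh = 1,000 GWh
    return gwh / HOURS_PER_YEAR  # GWh per hour = GW


def avg_gw_to_twh_per_year(gw: float) -> float:
    """Annual energy in TWh from running a load of `gw` continuously all year."""
    return gw * HOURS_PER_YEAR / 1_000


if __name__ == "__main__":
    # Illustrative inputs only.
    print(f"{twh_per_year_to_avg_gw(1_000):.0f} GW")  # -> 114 GW
    print(f"{avg_gw_to_twh_per_year(1.0):.2f} TWh")   # -> 8.76 TWh
```

On this simplified basis, every 1,000 TWh a year corresponds to roughly 114 GW of round-the-clock demand, and each gigawatt running continuously consumes about 8.76 TWh a year.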

Microsoft signed a landmark framework agreement with Brookfield for up to 10.5 gigawatts of new clean energy, and it struck a long-term nuclear power purchase agreement with Constellation to help supply its large new data center campuses.

Amazon agreed to buy an enormous Pennsylvania data center campus from Talen Energy and paired it with long‑term power from a nearby nuclear plant, highlighting how control of the site and firm, carbon‑free power have become competitive advantages.

Real estate and grid limits are also shifting where capacity gets built. CBRE reports record pre-leasing and sub-5% vacancy rates in many North American hubs, and analyses from U.S. national labs show that interconnection queues for new power have ballooned. Colocation giants, including Digital Realty, Equinix and Blackstone-owned QTS, are responding by partnering with customers to roll out multi-gigawatt campuses and new cooling designs, often through joint ventures that spread risk across infrastructure investors.

Sovereign and Local AI Clouds Attract Public Funding

Now governments are part of the deal flow.

The UK is funding national AI research compute, the EU has launched cross-border cloud and chip projects, and Japan is backing domestic supply to reduce its dependence on imported accelerators. These programs often set conditions on where data must reside, how safety testing is conducted and even how energy is sourced, making compliance one more axis of differentiation in infrastructure.

What Matters Next for AI Infrastructure and Power Deals

Three constraints will define the next wave of deals.

- Interconnects and packaging: more wafers alone may not clear the bottlenecks in advanced packaging and networking.

- Power and cooling: firm, low-carbon electricity and liquid-cooling supply chains will dictate where the largest clusters land.

- Regulation: competition and cloud-market investigations in the U.S., EU and UK could put pressure on exclusive bundles of credits, equity and capacity.

For now, though, the pattern is unmistakable. Compute is the new oil, energy is the new compute, and the winners will be those that lock up chips, power and land at volume under long-term contracts. The AI explosion isn’t simply a battle over the best models; it’s an infrastructure supercycle, and the contracts signed today will determine who can ship tomorrow.