Elon Musk says Tesla is bringing back Dojo3 with a radically different mission: building space-based AI compute. The move follows last year’s wind-down of the automaker’s custom supercomputing effort and signals a pivot from terrestrial self-driving model training to off-planet infrastructure that could one day power AI systems from orbit.

From Dojo Shutdown to a Skyward Space Compute Pivot

Tesla effectively mothballed Dojo months ago after key departures, including the exit of longtime chip leader Peter Bannon. Around two dozen engineers migrated to DensityAI, a new infrastructure startup led by former Dojo executives. At the time, reporting indicated Tesla would lean harder on Nvidia, AMD, and Samsung instead of forging its own silicon path.

Musk’s latest comments reverse that narrative. He framed the revival around an in-house chip roadmap that he says has progressed far enough to warrant another attempt, and Tesla is recruiting engineers to rebuild the team. The explicit aim this time is hardware tailored for orbital deployment, not data center racks in Nevada or New York.

Why Space-Based Compute Could Power Next-Gen AI

The pitch is simple but audacious. Earth’s grids are tightening as AI demand soars. The International Energy Agency estimates data center electricity use could top 1,000 TWh within a couple of years, roughly doubling from early-decade levels and approaching 4% of global electricity demand. Space offers near-continuous sunlight in certain orbits, such as dawn-dusk sun-synchronous orbits, potentially enabling round-the-clock solar power without land-use or cooling-water constraints.
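
To put the power argument in rough numbers, here is a minimal Python sketch that sizes a sun-facing solar array for a given electrical load. It assumes the commonly cited solar constant of about 1,361 W/m² and continuous illumination; the 100 kW load and 30% cell efficiency are hypothetical values chosen for illustration, not Tesla or SpaceX specifications.

```python
# Rough solar-array sizing for an orbital compute node.
# All inputs are illustrative assumptions, not Tesla or SpaceX figures.

SOLAR_CONSTANT_W_PER_M2 = 1361.0  # approximate solar irradiance near Earth, above the atmosphere


def array_area_m2(load_w: float, cell_efficiency: float, packing_factor: float = 1.0) -> float:
    """Return the sun-facing array area needed to supply `load_w` watts.

    Assumes the array points straight at the Sun with no eclipse, no
    degradation, and no losses beyond cell efficiency and packing factor.
    """
    if not 0 < cell_efficiency <= 1 or not 0 < packing_factor <= 1:
        raise ValueError("efficiency and packing factor must be in (0, 1]")
    return load_w / (SOLAR_CONSTANT_W_PER_M2 * cell_efficiency * packing_factor)


if __name__ == "__main__":
    # Hypothetical 100 kW compute payload with 30%-efficient cells.
    print(f"{array_area_m2(100_000, 0.30):.0f} m^2")
```

Under those assumptions the example prints roughly 245 m², before accounting for eclipses, cell degradation, pointing losses, or power conversion.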

There are trade-offs. Latency to ground remains nontrivial, and moving multi-petabyte datasets on and off orbit is expensive. Still, Musk is not alone in exploring the concept: Axios has reported that industry leaders, including OpenAI’s Sam Altman, are intrigued by off-planet data centers. Musk also has a unique advantage in SpaceX’s launch cadence and Starship’s projected lift capacity, which could carry heavier compute payloads and large radiators.
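
The latency and data-movement trade-offs can be estimated with simple arithmetic. The minimal sketch below computes light-time for a satellite directly overhead and the time to move a dataset over a single link; the 550 km low Earth orbit altitude, the 10 Gbit/s sustained link rate, and the 1 PB dataset size are illustrative assumptions, not figures from Tesla or SpaceX.

```python
# Back-of-envelope numbers for two ground-link trade-offs:
# propagation latency and bulk data transfer time.
# Altitudes, link rate, and dataset size are illustrative assumptions.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def one_way_latency_ms(altitude_km: float) -> float:
    """Minimum one-way light-time to a satellite directly overhead, in milliseconds."""
    return altitude_km * 1_000 / SPEED_OF_LIGHT_M_PER_S * 1_000


def transfer_days(dataset_bytes: float, link_bits_per_s: float) -> float:
    """Days to move a dataset over one link at a sustained rate, ignoring overhead."""
    seconds = dataset_bytes * 8 / link_bits_per_s
    return seconds / 86_400


if __name__ == "__main__":
    print(f"LEO, 550 km:    {one_way_latency_ms(550):.2f} ms")
    print(f"GEO, 35,786 km: {one_way_latency_ms(35_786):.1f} ms")
    # 1 PB (10**15 bytes) over a hypothetical sustained 10 Gbit/s link.
    print(f"1 PB at 10 Gbit/s: {transfer_days(1e15, 10e9):.1f} days")
```

It prints about 1.83 ms for the LEO case, about 119.4 ms at geostationary altitude, and roughly 9.3 days for the petabyte transfer. Propagation delay is modest for low orbits; for training workloads the harder limit is sustained throughput, which real links reduce further through limited contact windows, protocol overhead, and weather.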

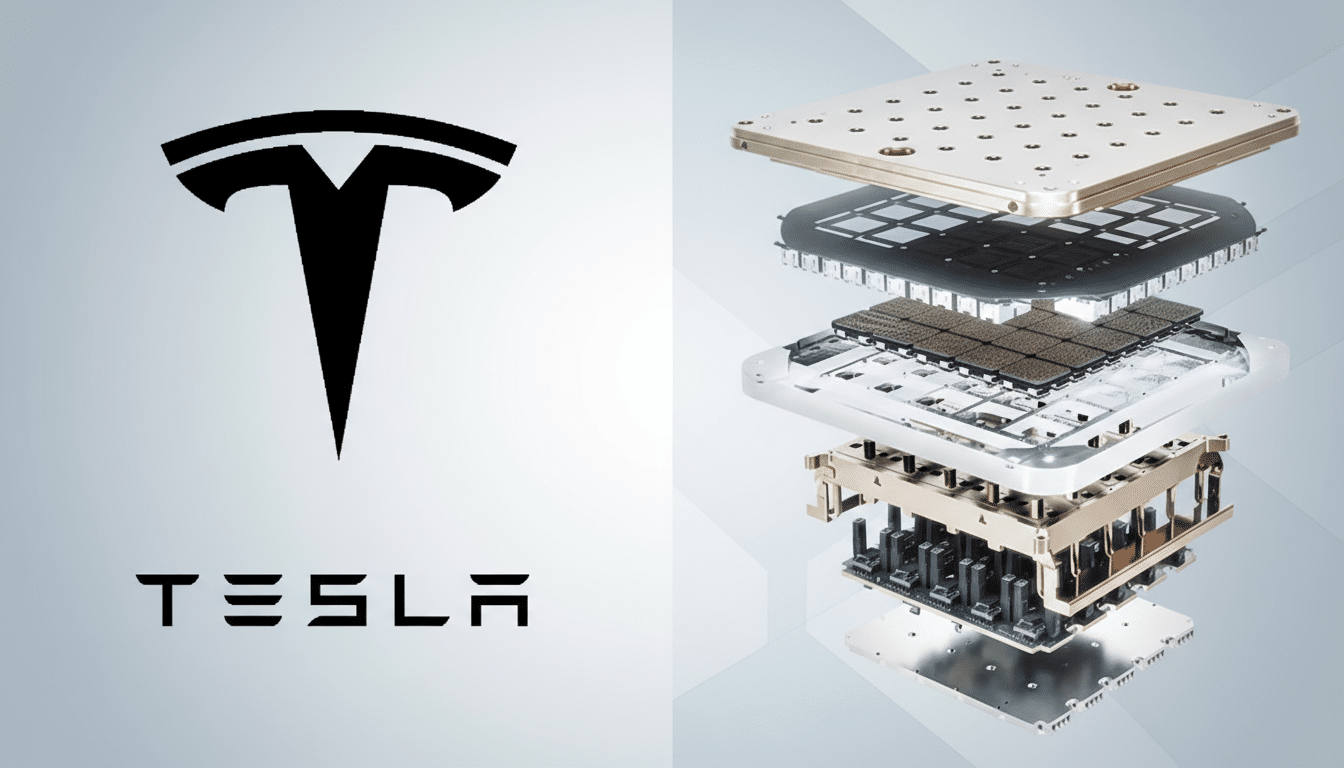

Inside Tesla’s Chip Roadmap for Space-Ready AI7

Tesla’s internal silicon program has advanced through several iterations. The AI5 generation, produced by TSMC, is designed to power autonomous driving features and the Optimus humanoid robot. Last year, Tesla committed an estimated $16.5 billion to Samsung for AI6 production aimed at both vehicles and training clusters.

Musk has cast AI7, which he ties to Dojo3, as the space-bound version. That suggests design priorities that diverge from conventional accelerators: radiation tolerance, reliability in vacuum, aggressive performance-per-watt optimization, and packaging that integrates with orbital thermal management. The company is recruiting engineers to tackle those problems, and Musk has framed the effort as a push to ship chips at volumes few semiconductor programs ever achieve.

Engineering Obstacles to Orbital AI Compute Are Enormous

Running high-power AI silicon in orbit is a far bigger leap than running inference on a satellite. Radiation can flip bits and degrade chips built on advanced process nodes, and because vacuum allows no convective cooling, multi-kilowatt boards need expansive radiator surfaces fed by heat-pipe or pumped-loop systems. Uplink and downlink capacity is another gating factor for data-heavy training workloads.
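
Why radiators get so large follows from the Stefan-Boltzmann law: in vacuum, waste heat leaves only by radiation, at a rate proportional to emissivity, area, and the fourth power of surface temperature. The minimal sketch below inverts that law to solve for area. The 10 kW heat load, 0.9 emissivity, and 300 K and 350 K radiator temperatures are assumptions for illustration, and the model ignores absorbed sunlight and Earth infrared, both of which would increase the required area.

```python
# Radiator area needed to reject waste heat in vacuum, where radiation is
# the only path to space. Idealized: ignores absorbed sunlight, Earth
# infrared, and fin or view-factor losses. Inputs are illustrative.

STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4


def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float = 0.9,
                     sink_temp_k: float = 3.0) -> float:
    """Area of a single radiating face that sheds `heat_w` watts at `temp_k`."""
    # Net radiated flux per square meter toward a cold deep-space sink.
    net_flux = emissivity * STEFAN_BOLTZMANN * (temp_k**4 - sink_temp_k**4)
    if net_flux <= 0:
        raise ValueError("radiator must be hotter than the sink")
    return heat_w / net_flux


if __name__ == "__main__":
    for t in (300, 350):
        # Hypothetical 10 kW of waste heat from a compute board.
        print(f"{t} K: {radiator_area_m2(10_000, t):.1f} m^2")
```

With these inputs it reports about 24.2 m² at 300 K and about 13.1 m² at 350 K for a single radiating face. The fourth-power dependence is why thermal designers run radiators as hot as the electronics allow.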

There is precedent for smaller steps. NASA and HPE demonstrated in-space edge processing on the International Space Station with Spaceborne Computer-2. The European Space Agency flew an Intel Movidius VPU on PhiSat-1 to filter cloudy images on-orbit, cutting bandwidth needs. But those are inference-class examples. Training-scale compute in orbit demands orders of magnitude more power, thermal dissipation, and fault tolerance.
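
The PhiSat-1 approach of discarding unusable frames before downlink can be illustrated with a small Python sketch. It assumes an onboard classifier has already produced a cloud-cover estimate for each frame; the `Frame` type, the 0.7 cover threshold, and the frame sizes are hypothetical and are not taken from the ESA mission.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    size_bytes: int
    # 0.0 = clear, 1.0 = overcast; assumed output of a hypothetical onboard classifier.
    cloud_fraction: float


def select_for_downlink(frames: list[Frame], max_cloud: float = 0.7) -> list[Frame]:
    """Keep only frames whose estimated cloud cover is at or below the threshold."""
    return [f for f in frames if f.cloud_fraction <= max_cloud]


def bandwidth_saved(frames: list[Frame], max_cloud: float = 0.7) -> float:
    """Fraction of raw bytes that never needs to be sent to the ground."""
    total = sum(f.size_bytes for f in frames)
    if total == 0:
        return 0.0
    kept = sum(f.size_bytes for f in select_for_downlink(frames, max_cloud))
    return 1 - kept / total


if __name__ == "__main__":
    # Four hypothetical 100 MB frames from one pass.
    pass_frames = [Frame(100_000_000, c) for c in (0.1, 0.9, 0.5, 0.95)]
    print(f"bandwidth saved: {bandwidth_saved(pass_frames):.0%}")
```

In this toy pass half the bytes stay on board. Filtering like this needs only a small inference model; it does not touch the power, cooling, and data-movement demands of training in orbit.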

Competitive Pressure on the Ground as Autonomy AI Accelerates

The timing also intersects with intensifying competition in autonomy AI. Nvidia unveiled Alpamayo, an open-source driving model that targets the same long-tail problem Tesla’s FSD grapples with. Musk publicly acknowledged that rare edge cases make full autonomy difficult and said he hopes rival efforts succeed, a notable nod as the ecosystem broadens beyond closed stacks.

Tesla’s oscillation between bespoke chips and partner silicon mirrors a broader industry calculus. Designing accelerators is costly and risky, yet controlling the full stack can yield performance and cost advantages, especially when workloads are unusual, as in robotics and potentially orbital compute.

What to Watch Next as Tesla Revives Dojo3 for Space Compute

Three milestones will signal how serious Dojo3’s space pivot is.

- Hiring velocity and the return of key architects who know Tesla’s compiler, interconnect, and packaging stack.

- Evidence of radiation-hardened or radiation-tolerant designs at leading-edge nodes, or a hybrid strategy that pairs modern accelerators with protective shielding and error correction.

- Integration plans with launch and satellite platforms, including how Tesla would handle power, cooling, and data movement.
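
The error-correction half of that hybrid strategy can be illustrated with the textbook Hamming(7,4) code, which adds three parity bits to every four data bits so that a single radiation-induced bit flip can be located and reversed. This is a minimal Python sketch of the general technique, not a description of any Tesla design; production memory systems typically use wider single-error-correct, double-error-detect (SECDED) codes combined with periodic scrubbing.

```python
# Textbook Hamming(7,4): codeword positions 1..7 hold [p1, p2, d1, p3, d2, d3, d4].
# Each parity bit covers the positions whose 1-based index has that bit set.


def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c: list[int]) -> tuple[list[int], int]:
    """Correct up to one flipped bit; return (data bits, 1-based error position or 0)."""
    c = list(c)  # work on a copy so the caller's list is untouched
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    pos = s1 + 2 * s2 + 4 * s3  # syndrome equals the position of the flipped bit
    if pos:
        c[pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], pos


if __name__ == "__main__":
    codeword = hamming74_encode([1, 0, 1, 1])
    codeword[4] ^= 1  # simulate a single-event upset at position 5
    data, pos = hamming74_decode(codeword)
    print(data, pos)  # [1, 0, 1, 1] 5
```

A single flip is always corrected, but two flips in the same codeword are miscorrected, which is why hardware adds an extra overall parity bit for double-error detection and scrubs memory before errors can accumulate.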

If Tesla can translate its vertical-integration playbook from cars to compute satellites, it could carve out a novel lane for AI infrastructure. The flip side is clear: orbital data centers remain a moonshot with physics, supply chain, and regulatory hurdles. For now, Musk’s revived Dojo3 is less about near-term autonomy milestones and more about staking a claim on where the next generation of AI horsepower might live: far above the grid.