Davos sounded more like a tech earnings call than a policy summit as Silicon Valley and chip royalty crowded the main stage to pitch, posture, and poke at one another over artificial intelligence. Between grand promises and thinly veiled jabs, the message was clear: AI is the center of gravity, and the power struggle over compute, markets, and rules is fully out in the open.

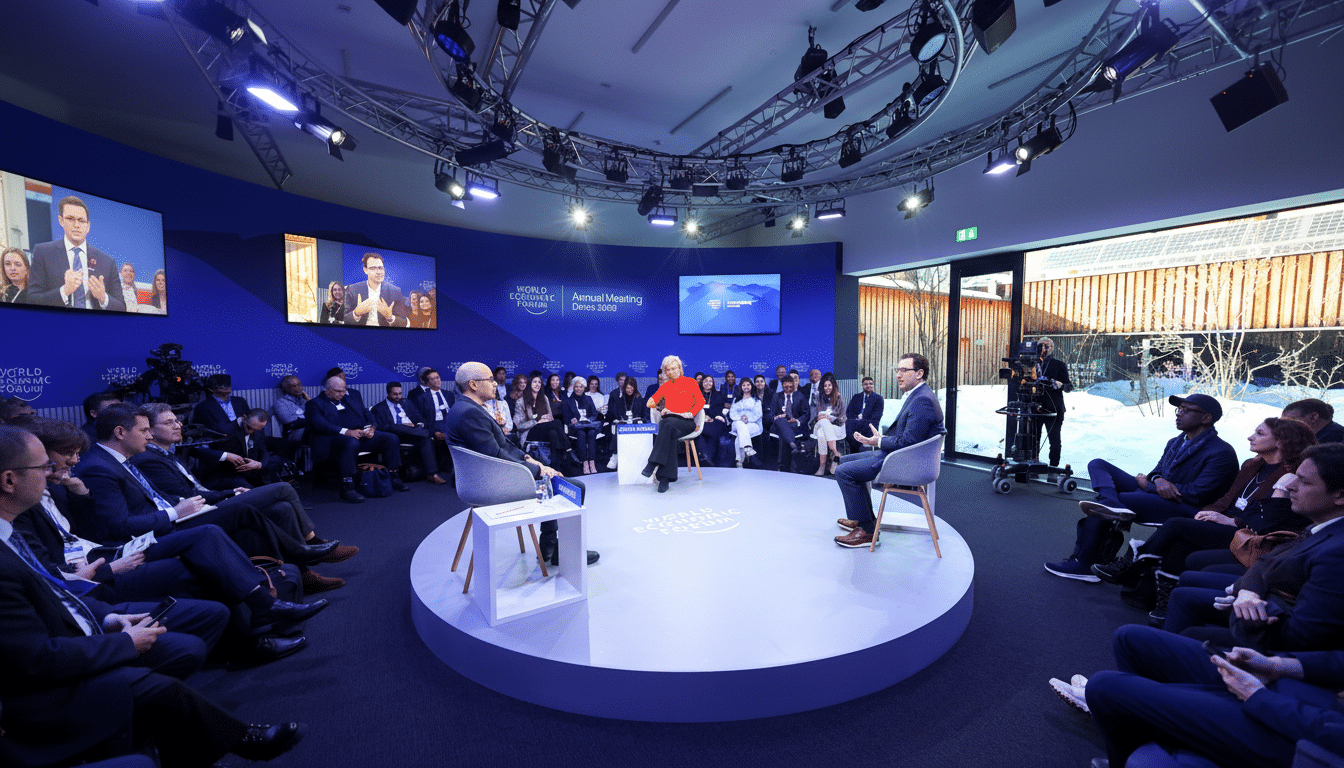

The World Economic Forum’s promenade mirrored the mood. Big Tech pavilions dominated the street, pulling foot traffic away from sessions on traditional global priorities. Climate and poverty panels still ran, but AI drew the crowds and the cameras as leaders sketched divergent visions for who gets access to cutting-edge models, how fast adoption should move, and who captures the profits.

- AI Hype Meets Geopolitics as Export Controls Take Center Stage

- Usage Push Versus Caution Divides Leaders on AI Deployment Pace

- The Chip Buildout Hits Reality Amid Power, Grid, and Cost Constraints

- Barbs Between Frenemies Reveal Deeper Rifts in the AI Ecosystem

- What Matters After Davos for AI: Policy, Regulation, Infrastructure

AI Hype Meets Geopolitics as Export Controls Take Center Stage

What might have been a product showcase quickly turned geopolitical. One prominent lab chief criticized policy moves that would loosen constraints on exporting advanced chips to China, arguing that compute is now a strategic asset on par with oil or rare earths. The argument: ship enough accelerators and you effectively export capabilities, not just components.

That stance landed awkwardly given that the same lab relies heavily on Nvidia hardware—illustrating the uneasy alliances that define today’s AI economy. Chipmakers want global demand. Frontier labs want near-exclusive access. Governments want leverage. It’s a triangle of mutual dependence with sharp corners, and Davos amplified those tensions rather than smoothing them.

Policymakers listening in have their own playbooks. The EU’s AI Act is nearing implementation, the OECD’s AI Principles are becoming compliance checklists, and the U.S. NIST AI Risk Management Framework is quietly turning into a procurement filter. Yet export controls and data-sharing rules remain the real pressure points, especially as nations treat compute as industrial policy.

Usage Push Versus Caution Divides Leaders on AI Deployment Pace

On stage, a leading cloud boss delivered the bluntest message: if real-world use doesn’t scale, the AI boom risks looking like a bubble. Enterprise adoption is the lifeline—and the scoreboard. The pitch is familiar: copilots in productivity suites, coding assistants for developers, and AI agents for service desks, all tied to usage-based revenue.

Consultancies back the opportunity while warning about uneven results. McKinsey’s research finds that early adopters report productivity gains and revenue uplifts in select functions like software, marketing, and customer operations, but ROI is lumpy and depends on change management, data quality, and risk controls. Stanford’s AI Index has likewise noted that competitive advantage is clustering around organizations with access to proprietary data and massive compute.

This divergence fed on-stage friction. Some leaders argued for wide distribution—getting tools into as many hands as possible—while others urged tighter controls and slower deployment for sensitive domains. It’s not just philosophy; it’s a business model split between volume-driven platforms and boutique, high-margin services.

The Chip Buildout Hits Reality Amid Power, Grid, and Cost Constraints

Nvidia’s chief evangelized more infrastructure spending and more local compute, asserting that the capex wave is still in its early innings. The cloud giants agree, funneling tens of billions annually into data centers and custom silicon to meet model training and inference demand. Investors cheered similar messages over the past year as Nvidia’s valuation rocketed.

But physics and grids don’t move at keynote speed. The International Energy Agency warns that global data center electricity use could roughly double by the mid-2020s, approaching 1,000 TWh, with AI as a major driver. Cities from Dublin to Amsterdam have already faced moratoriums or curbs on new hyperscale builds, driven by power, water, and land constraints.

Jobs were another flashpoint. Building chip fabs and data centers creates local employment, but the long-term net effects are uncertain. Automation can elevate productivity while displacing tasks. The IMF and OECD both caution that gains may concentrate in high-skill roles unless reskilling programs scale alongside deployment. Few on stage dwelled on that trade-off.

Barbs Between Frenemies Reveal Deeper Rifts in the AI Ecosystem

For all the polished slides, the most revealing moments were interpersonal. Executives tossed subtle elbows at rivals and even partners, a rare show of candor for a venue known for curated harmony. One founder likened modern AI data centers to nations of savants, underscoring why he opposes looser chip exports. A top cloud chief, meanwhile, used a factory metaphor to demystify model training and shift the focus to throughput and cost.

Behind the rhetoric is a scramble for talent and supply. Poaching allegations, oversized compensation, and noncompete skirmishes have become routine as labs and platforms race to staff safety, alignment, and systems teams. Stanford’s AI Index has documented the skyrocketing costs of frontier training runs, with compute and researcher scarcity reinforcing the moat for incumbents.

What Matters After Davos for AI: Policy, Regulation, Infrastructure

Three signposts now loom. First, export policy: any shift in chip rules will ripple through training timelines and model access. Second, regulation: EU, U.S., and G7 frameworks will shape evaluation, disclosure, and liability, affecting go-to-market speed. Third, infrastructure: power, water, and permitting will decide where AI actually scales, not just where the slide decks say it will.

Davos didn’t solve the AI debate; it televised it. The CEOs left having sold a vision of ubiquitous intelligence, yet also exposed the fault lines over who builds it, who uses it, and who benefits. If the crowd size was any indicator, the next chapters of that argument won’t be happening in side rooms.