California Attorney General Rob Bonta and Delaware Attorney General Kathy Jennings have sent OpenAI a scathing letter that blames ChatGPT and related products for causing harm to kids and makes clear that the company can’t expect to get away with bad behavior. In the open letter, sent after a meeting with the company, the attorneys general cited reports of troubling interactions with minors and urged OpenAI to make the app safer and more transparent in the short term.

States increase pressure following disturbing reports

The letter follows a broader coalition push in which dozens of attorneys general wrote to leading AI companies about youth safety. Bonta and Jennings cited the reported suicide of a young person in California and a murder-suicide in Connecticut, both after prolonged conversations with an OpenAI chatbot, and suggested that protections failed when they were most needed.

The officials also raised questions about OpenAI’s proposed restructuring into a for-profit company this year and pointed to their continued review of that plan, including the steps OpenAI has said it would take to protect its safety mission. The message: governance and capitalization choices should strengthen, not undermine, the company’s fiduciary responsibility to children and teenagers.

What the attorneys general are seeking now

The AGs requested that OpenAI describe its current safeguards, risk assessments, and procedures for escalating suspected child endangerment. They want proof that age verification, content moderation and crisis-response pathways are effective in real-world use, not just in the lab testing or policy documents that companies often offer as evidence.

They also indicated that remedial action should start straight away in any area where there are shortfalls. That likely involves stronger age gates, default-on parental controls where products are promoted to families and clear routes to human assistance when a conversation touches on self-harm, sexual exploitation, grooming or violent ideation.

The stakes, in numbers

Public health and child-safety groups have long cautioned that digital environments can magnify risk. The Centers for Disease Control and Prevention has noted sustained declines in youth mental health, with suicide among the leading causes of death for adolescents. The volume of reports of suspected child sexual exploitation received by the National Center for Missing & Exploited Children’s CyberTipline has exceeded 30 million in recent years, underscoring how large the online threat is and the importance of strong detection and reporting.

At the same time, exposure to generative AI in the classroom and at home is increasing. Surveys by Common Sense Media and Pew Research Center indicate that, as bots from various companies grow more sophisticated, teens are turning to chatbots for help with homework, creativity or advice. These are all areas where well-intentioned systems can offer harmful advice or normalize dangerous behavior if design and limits aren’t carefully considered.

OpenAI’s safety posture under scrutiny

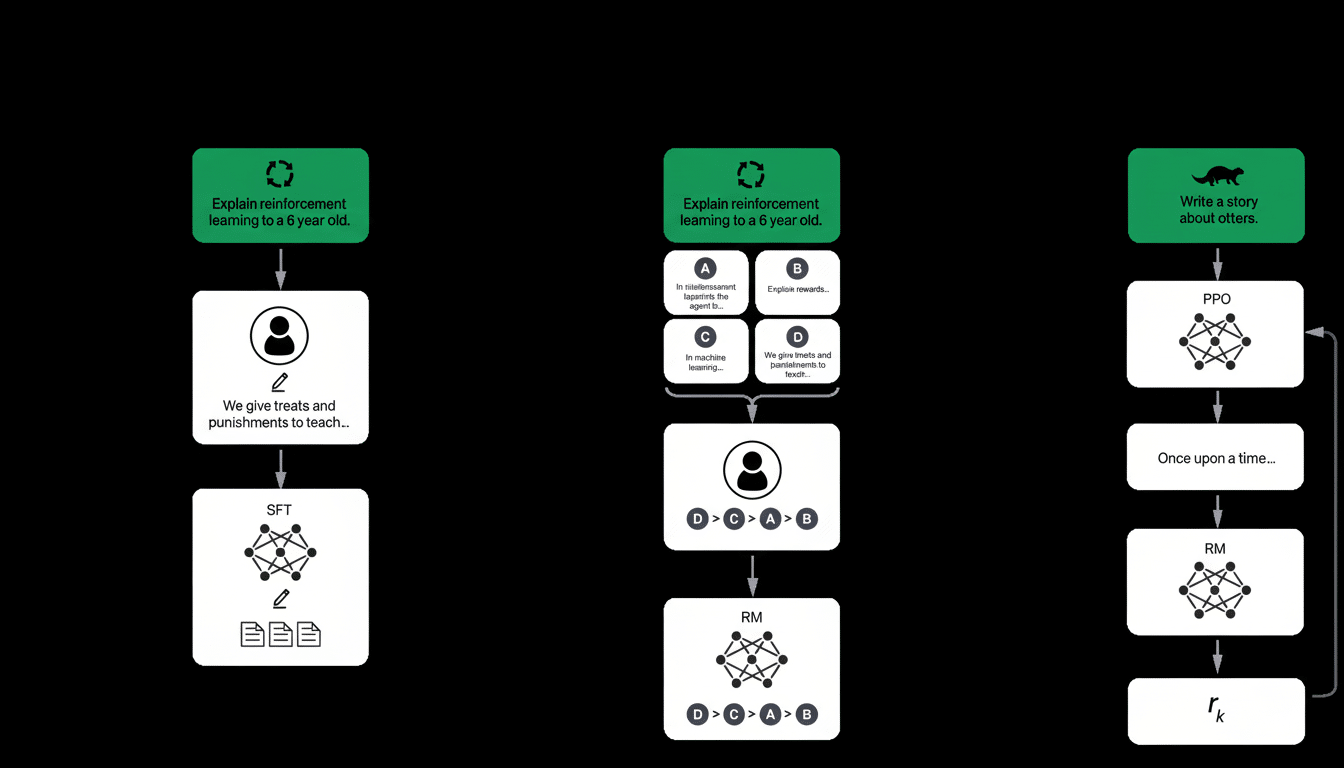

OpenAI says it uses multiple layers of protection, including content filtering, reinforcement learning from human feedback, safety classifiers and red-teaming, to limit harmful outputs. It also has usage policies that restrict sexual content involving minors, advice promoting self-harm and a number of other high-risk categories.

State consumer-protection and privacy laws give attorneys general broad tools to combat deceptive practices and unfair harm to minors. Many firms are already litigating youth-harm suits against social platforms that have attracted national attention. Federal regulators are also active: The Federal Trade Commission enforces the Children’s Online Privacy Protection Act and has already indicated that murky age tracking and safety claims could bring enforcement actions.

Globally, too, the European Union’s AI Act imposes obligations on general-purpose and high-risk AI systems, and the United Kingdom’s Online Safety Act mandates child-risk duties for services that minors can access. Even where regulations vary, a near-consensus is developing around age-appropriate design, transparency, and safety-by-default, and it is likely that anything that uses generative AI will need to adhere to those standards.

What real child protections look like

Experts identify a series of tangible actions: robust age assurance that minimizes data collection; default-on youth protections with visible parental controls; crisis response that can surface helpline support and escalate to trained reviewers; strong classifier ensembles to detect grooming, sexual and self-harm content; and speedy takedown and reporting mechanisms consistent with NCMEC guidance.

Operationally, the big task is for companies to maintain live incident-response playbooks, audit against frameworks like NIST’s, open themselves to third-party evaluation and publish real safety metrics: false-negative rates for risky content, time-to-mitigate, and the share of high-severity incidents escalated to humans. Without metrics, “safety” is still a promise, not a habit.
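
To make those three metrics concrete, here is a minimal Python sketch of how they might be computed from an incident log. The `Incident` record, its field names and the sample data are illustrative assumptions, not OpenAI's schema or any regulator's required format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Incident:
    """Hypothetical incident record; every field name here is an assumption."""
    severity: str                      # "low" or "high"
    truly_risky: bool                  # ground-truth label from later human review
    flagged: bool                      # did the automated safety system catch it?
    reported_at: datetime
    mitigated_at: Optional[datetime]   # None if not yet mitigated
    escalated_to_human: bool


def false_negative_rate(incidents: List[Incident]) -> Optional[float]:
    """Share of truly risky items that the automated system failed to flag."""
    risky = [i for i in incidents if i.truly_risky]
    if not risky:
        return None
    return sum(1 for i in risky if not i.flagged) / len(risky)


def median_time_to_mitigate_seconds(incidents: List[Incident]) -> Optional[float]:
    """Median seconds from report to mitigation, over mitigated incidents only."""
    durations = [
        (i.mitigated_at - i.reported_at).total_seconds()
        for i in incidents
        if i.mitigated_at is not None
    ]
    return median(durations) if durations else None


def high_severity_escalation_share(incidents: List[Incident]) -> Optional[float]:
    """Share of high-severity incidents that reached a human reviewer."""
    high = [i for i in incidents if i.severity == "high"]
    if not high:
        return None
    return sum(1 for i in high if i.escalated_to_human) / len(high)


# Made-up sample data, for illustration only.
t0 = datetime(2025, 1, 1, 12, 0)
sample = [
    Incident("high", True, True, t0, t0 + timedelta(minutes=10), True),
    Incident("high", True, False, t0, t0 + timedelta(minutes=30), False),
    Incident("low", True, True, t0, t0 + timedelta(minutes=20), False),
    Incident("low", False, False, t0, None, False),
]

print(false_negative_rate(sample))
print(median_time_to_mitigate_seconds(sample))
print(high_severity_escalation_share(sample))
```

Each function returns `None` rather than zero when there is nothing to measure, so an empty log cannot be mistaken for a perfect score.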

What happens next

The letter indicates that state enforcers are moving from general concerns to actual oversight of product decisions and corporate governance. If OpenAI’s answers are deemed insufficient, multistate investigations or consent orders are plausible, bringing with them the possibility of mandatory safeguards, independent oversight, and fines under consumer protection law.

The wider industry should view it as a model. The potential of generative AI won’t relieve companies of their duty to protect children. The standard is moving toward provable safety-by-design, and the message from state attorneys general is clear: harm to kids is preventable, and protecting them is nonnegotiable.