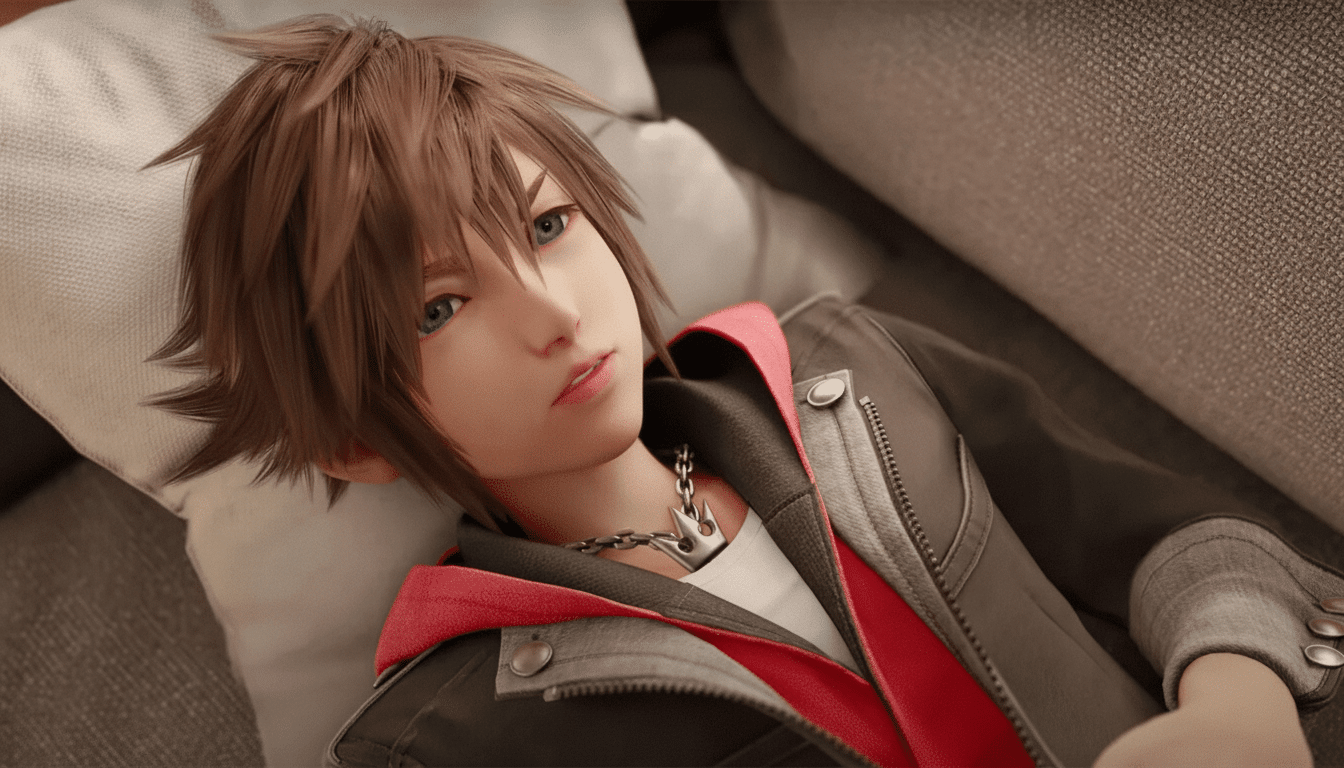

OpenAI is working to tighten the rules surrounding Sora, its viral AI video app, with a plan that would put the onus on copyright holders to opt out if they don’t want their characters, worlds, or other intellectual property showing up in user‑generated clips. The move comes as Sora climbs the App Store charts and as studios, game publishers, and talent agencies study how their IP can be remixed inside a consumer video generator.

How the Opt-Out Process Will Work for Rights Holders

OpenAI is working on tools that would let rights holders say where Sora should draw the line. Early guidance indicates there won’t be a single master switch covering every title in a catalog; owners will need to file exclusions for individual characters, shows, or franchises. That granularity may appeal to brands that want to allow some uses while blocking others, but it also adds overhead for companies that manage dozens or hundreds of properties.

The company has started showing in‑app “content violation” notices as it tightens restrictions, a sign that enforcement is already ramping up. Leadership has separately said that Sora’s “cameos” feature, which lets people add their own likeness to clips, will get granular controls so users can limit how their likeness is used, for example keeping it out of political content or away from specific phrases. The system is clearly moving toward permissions and constraints encoded directly into generation.

Why OpenAI Is Tightening Sora’s Rules Now

Since Sora’s debut, users have been rapidly testing its limits with high‑profile characters and major brands. According to reporting from The Wall Street Journal, OpenAI had already told Hollywood talent agencies and film studios that copyrighted works could appear in the app. Executives including CEO Sam Altman have positioned Sora as an “interactive fan fiction” hub that could generate value for rights holders, provided those owners can set rules or walk away entirely.

There’s also a cost and compliance issue. Video synthesis is expensive to compute, and OpenAI has said it wants to monetize Sora to manage demand. Tighter controls could reduce the risk of takedowns, lawsuits, or moderation whack‑a‑mole that would undermine a paid offering. And the legal temperature is rising: a number of copyright cases are pending across the AI industry, and regulatory scrutiny is increasing in the United States and Europe.

What the Opt-Out Model Means for Major IP Holders

For the biggest rights holders, such as film studios, streaming services, game publishers, and sports leagues, the opt‑out default flips the familiar arrangement: instead of the platform seeking permission first, owners must act to keep their IP out.

Rather than negotiating licenses up front or issuing blanket prohibitions, they will have to decide property by property whether to permit noncommercial fan use, limit it to certain themes, or block it outright. That flexibility could enable brand‑safe creativity and promotional upside, but it will also require new workflows and monitoring to enforce those rules as the models evolve.

Smaller creators face a similar calculus. An opt‑out path offers a way to protect original characters or art without a team of lawyers. The open question is usability: how verification works, how quickly requests are handled, and how easy the forms are to use will determine whether independent artists can keep up. Earlier systems in related spaces, such as YouTube’s Content ID or requests to remove images from training datasets through tools like Have I Been Trained, show that enforcement tools succeed or fail on their accessibility and precision.

How Users and Creators Will Be Affected by New Rules

Average Sora users should expect more guardrails. Prompts that previously went through may now trigger violation notices, particularly if they reference well‑known franchises or imitate signature styles. Meanwhile, the cameos controls let people share their likeness with tighter limits on where and how it can be used. That is a significant shift toward consent‑first media generation and could serve as a model for other platforms dealing with voice, face, and motion cloning.

Creators of parody, commentary, or otherwise transformative work will be watching whether the filters can distinguish fair‑use‑adjacent content from material that should be blocked outright. If a publisher opts out an entire franchise such as Pokémon, for instance, even parodies that reference it could be barred by automated checks. Clear appeal mechanisms and human review will be critical in edge cases where context determines whether a clip is allowable.

How This Approach Compares to the Wider AI Industry

AI platforms are converging on rights interfaces, though their strategies vary. Some services focus on licensed training data or stock libraries; others build in opt‑outs for creators’ content or offer tools that let rights owners block uploads matching their works. OpenAI’s approach appears to combine proactive brand controls in the generation process with user‑level consent for likeness: more prescriptive than notice‑and‑takedown alone, but less sweeping than an opt‑in‑only ecosystem.

Key Open Questions and What to Watch for Next

Pivotal details remain unsettled: how rights owners verify their identity, whether rules apply worldwide, how and when an opt‑out takes effect, where the line falls between training‑data exclusions and output filters, and how overly broad blocks can be appealed. Expect rapid iteration, which OpenAI has already signaled, as the company grapples with the scale and the legal, user‑facing, and economic risks of large‑scale video generation.

If the opt‑out model proves feasible, it may offer a template for how generative video and entertainment IP can coexist: as a negotiated middle ground in which rights holders set boundaries, fans create within them, and the platform enforces those lines at scale in real time.