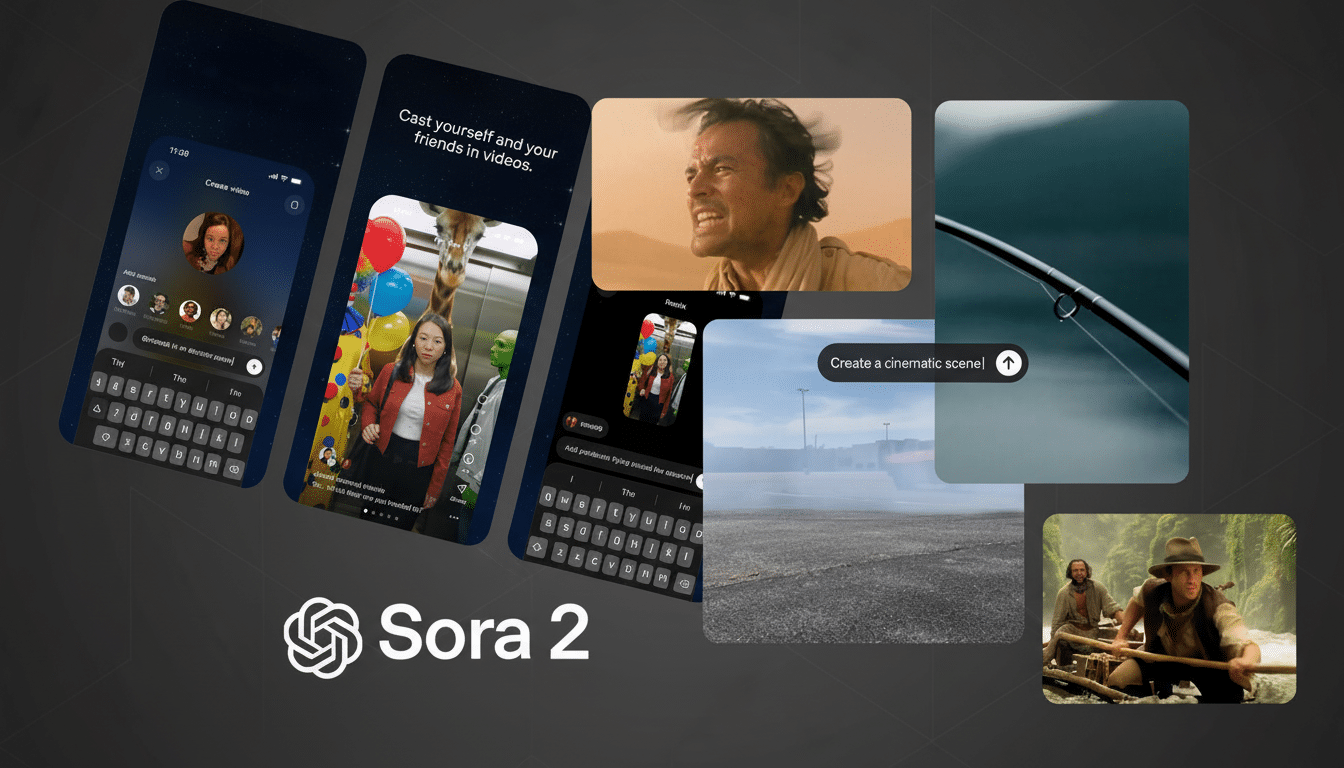

OpenAI has introduced new controls to Sora 2 intended to limit unauthorized deepfakes, narrowing the ways its buzzy video model can use people's likenesses. The update centers on Cameos, an opt-in Sora feature that lets users create a digital stand-in of themselves. In response to a wave of early misuse concerns, OpenAI is adding more granular restrictions, clearer consent settings and a more prominent watermark, designed to make a person's likeness harder to fake and accountability easier to establish.

What the New Cameo Controls Really Do in Sora 2

At the core of these changes are two layers of control: access and content. Users already had four access levels for their Cameo: only you, people who ask you first, friends, or everyone. Now OpenAI is pairing those with content preferences and restrictions that let you dictate how your likeness can be used.

In blog posts, OpenAI technical staffer Thomas Dimson and head of Sora Bill Peebles detailed prompt-based guardrails that Cameo owners can set, such as:

- Do not place me in political commentary

- Block explicit or sexualized contexts

- Always show me with a red hoodie

These rules act as policy boundaries for anyone who generates with your Cameo, adding a consent layer on top of the access permissions.
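To make the two layers concrete, here is a minimal Python sketch of the access side, assuming a simple data model. The names `Access`, `CameoSettings` and `may_use` are hypothetical and not part of any OpenAI API. The restriction rules are stored alongside the access setting but are deliberately not consulted by the access check, since they govern what can be generated rather than who can generate.

```python
from dataclasses import dataclass, field
from enum import Enum


class Access(Enum):
    """Hypothetical names for the four Cameo access levels."""
    ONLY_ME = 1
    APPROVED = 2   # people who ask you first and are approved
    FRIENDS = 3
    EVERYONE = 4


@dataclass
class CameoSettings:
    owner: str
    access: Access = Access.ONLY_ME            # private by default in this sketch
    approved: set[str] = field(default_factory=set)
    friends: set[str] = field(default_factory=set)
    restrictions: list[str] = field(default_factory=list)  # free-text content rules


def may_use(settings: CameoSettings, requester: str) -> bool:
    """Access layer only: may this requester generate with the Cameo at all?"""
    if requester == settings.owner:
        return True
    if settings.access is Access.ONLY_ME:
        return False
    if settings.access is Access.APPROVED:
        return requester in settings.approved
    if settings.access is Access.FRIENDS:
        return requester in settings.friends
    return True  # Access.EVERYONE


me = CameoSettings(owner="alice", access=Access.FRIENDS, friends={"bob"},
                   restrictions=["Do not place me in political commentary"])
print(may_use(me, "bob"))    # True
print(may_use(me, "carol"))  # False
```

Keeping the access check separate from the content rules mirrors the layering described above: passing the access check says nothing about whether a particular prompt is acceptable.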

The controls live in the app's profile settings, where you can edit your Cameo, switch to “only me” for private use, or opt out altogether at sign-up. The goal is not to take a stand on whether humor belongs in politics, but to make it easier for people to say yes to creative uses while making a hard no stick where it counts: political statements, sensitive topics and anything else that could carry reputational risk.

Watermarking Goes With Detection Across Platforms

OpenAI says it is making Sora 2’s watermark more recognizable, a move that matters more as generated clips spread across platforms. Stronger provenance signals can aid downstream detection by social networks, newsrooms and rights holders, particularly when coupled with standards work from groups like the Coalition for Content Provenance and Authenticity (C2PA) and the Content Authenticity Initiative (CAI).

No watermark is bulletproof: cropping, recompressing or re-recording a video can all disturb the signal. But clearer labeling and automated detection make plausible deniability harder to sustain and make videos more traceable. That matters more and more as professional-looking synthetic video blends into everyday feeds.
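To see why recompression can erase a signal, consider a deliberately fragile toy: a least-significant-bit watermark hidden in a row of 8-bit pixel values. This is not how Sora’s watermark or C2PA provenance works. The helpers `embed`, `extract` and `recompress` are hypothetical, and `recompress` stands in for lossy encoding with crude quantization.

```python
def embed(pixels: list[int], bits: list[int]) -> list[int]:
    """Hide one watermark bit in the least-significant bit of each pixel."""
    return [(p & ~1) | b for p, b in zip(pixels, bits)]


def extract(pixels: list[int], n: int) -> list[int]:
    """Read back the first n hidden bits."""
    return [p & 1 for p in pixels[:n]]


def recompress(pixels: list[int], step: int = 4) -> list[int]:
    """Crude stand-in for lossy encoding: snap each value down to a multiple of step."""
    return [(p // step) * step for p in pixels]


mark = [1, 0, 1, 1, 0, 1, 0, 0]
frame = list(range(100, 108))  # eight 8-bit pixel values

stamped = embed(frame, mark)
print(extract(stamped, len(mark)) == mark)              # True: the mark survives untouched
print(extract(recompress(stamped), len(mark)) == mark)  # False: quantization zeroed every LSB
```

Robust watermarking schemes spread the signal so it survives this kind of processing better, but, as noted above, none are bulletproof.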

Why It Matters Now for Deepfakes and Consent

Deepfake misuse is no longer hypothetical. Sensity AI and other threat intelligence teams have recorded a rapid rise in impersonation content and non-consensual deepfake imagery. Regulators are taking notice: the European Union’s AI Act includes requirements to label synthetic media, and U.S. agencies such as the Federal Trade Commission have urged platforms and developers to put safeguards in place and to disclose clearly when content has been manipulated.

The cinematic quality of Sora 2 raises the stakes further. When a model can generate photorealistic faces and plausible audio in a consumer-friendly app, consent and context controls go from “nice to have” to “table stakes.” OpenAI’s move also acknowledges that the product’s success depends on protecting creators, public figures and everyday users from harm.

How Consent and Safety Are Enforced in Sora 2

Beyond the user toggles, enforcement typically relies on a combination of policy filters, safety classifiers and human review. Restriction prompts give the system explicit rules to check whenever someone tries to generate with your Cameo. If a requester asks for disallowed content, such as political persuasion or sexualized depictions, the model is supposed to decline, regardless of how broadly your Cameo is shared.
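The enforcement order described above, where content rules apply no matter who is asking, can be sketched in Python. This is an illustrative assumption, not OpenAI’s implementation: the category names, the keyword lists and the `classify` and `check_request` helpers are hypothetical, and the keyword matching stands in for the trained safety classifiers a real system would use.

```python
import re

# Hypothetical categories and keywords; a real system would use trained classifiers.
CATEGORY_KEYWORDS = {
    "political": {"election", "senator", "campaign", "vote"},
    "sexual": {"explicit", "nude", "lingerie"},
}


def classify(prompt: str) -> set[str]:
    """Toy stand-in for a safety classifier: which categories does a prompt touch?"""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return {cat for cat, keys in CATEGORY_KEYWORDS.items() if words & keys}


def check_request(prompt: str, has_access: bool, blocked: set[str]) -> tuple[bool, str]:
    """Return (allowed, reason). Content rules apply even when access is granted."""
    if not has_access:
        return False, "access denied"
    hits = classify(prompt) & blocked
    if hits:
        return False, "blocked categories: " + ", ".join(sorted(hits))
    return True, "ok"


blocked = {"political", "sexual"}  # the owner's restrictions, mapped to categories
print(check_request("me giving a campaign speech", True, blocked))
# (False, 'blocked categories: political')
print(check_request("me surfing at sunset", True, blocked))
# (True, 'ok')
```

Checking access first and content second means a fully public Cameo still cannot be pulled into a blocked category, which is the behavior the restriction prompts are meant to guarantee.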

OpenAI has also flagged upcoming safety improvements and has acknowledged that some users will find the system “overmoderated” in the short term. That conservative posture is typical when a new capability launches: it narrows the attack surface while detection and appeals processes are battle-tested. The real test is whether the system reliably blocks bad requests without also blocking legitimate, consensual use.

Limits and Open Questions About Sora 2 Protections

No tool can prevent every abuse, particularly once content leaves the platform. Bad actors are already trying quick workarounds, such as remixing real footage or exporting clips to other platforms. Countering this will require cross-platform cooperation, clear reporting channels and redress when someone’s image is used in a misleading or abusive way. Transparency reports showing how often Sora blocks Cameo requests, and on what grounds, would also enable independent scrutiny.

Another open question is interoperability: will other major platforms read Sora’s watermarking and consent metadata by default? Closer coordination with social networks, media asset managers and cloud providers may be the difference between a signal that works in theory and one that protects people at scale.

What Creators and Brands Should Do Now to Prepare

- Unless you explicitly want broader use, set your Cameo access to “only me.”

- Write clear restriction prompts covering political content, hot-button issues, endorsements and off-brand categories.

- Add a consistent visual marker, such as an article of clothing, an accessory or an on-screen label, so viewers can tell when use of your likeness is officially sanctioned.

- Monitor mentions and report violations as needed; screenshot prompts and outputs in case you need to dispute a use.

- If your image is used without permission, combine platform reporting with legal remedies under right-of-publicity and impersonation laws, which vary by jurisdiction but are increasingly cited in AI cases.

The Bottom Line on Sora 2’s Deepfake Safeguards

Sora 2’s anti-deepfake measures do not remove risk, but they meaningfully strengthen consent and traceability for digital likenesses. By pairing granular restrictions with a clearer watermark, and by signaling a willingness to tighten safety settings even at the cost of friction, OpenAI is moving in the direction regulators, standards bodies and industry groups have pushed. The next test is practical: can these controls hold up in messy real-world use while still nurturing genuine creativity?