Smart glasses keep getting smarter, but their sound still lags. Displays are sharper, cameras are better, and on-device AI is suddenly useful. Yet the audio coming from most frames remains thin, leaky, and underpowered. The missing piece is a new kind of speaker built for eyewear, and I’ve already tried a prototype that shows exactly how the category will change.

Why Audio Holds Back Smart Glasses Today

Open-ear audio is the toughest design constraint in smart glasses. Temples are narrow, batteries are tiny, and drivers sit far from the ear canal, so conventional speakers struggle to deliver clarity without blasting the world around you. For voice assistants, navigation prompts, captions, and calls, intelligibility matters more than window-rattling bass. Analysts at IDC and Counterpoint Research have repeatedly noted that audio quality is a top reason people use or abandon “face-worn” wearables, and it’s where today’s popular frames still compromise.

Traditional dynamic drivers (magnets, coils, diaphragms) were designed for earphones and headphones that sit in or seal against the ear. They’re efficient and bass-friendly, but they’re bulky for eyewear and can distort at higher volumes when pushed to cover the gap between your temple and ear.

Solid-State Speakers Step In for Smarter Eyewear

The most promising fix is solid-state MEMS speakers: micromachined silicon chips that move ultrathin diaphragms with piezoelectric actuators instead of magnets and coils. xMEMS is the company pushing this hardest for wearables, with chip-scale speakers that are about 1 millimeter thick, thin enough to slide inside the slimmest temple arms. The firm’s Cowell tweeter-class chips already ship in earbuds from brands like Creative and SoundPEATS, proving the tech is past the lab stage.

For glasses, xMEMS has engineered a rectangular variant, Sycamore-N, tuned for open-ear listening. In over-ear headphone prototypes, the Sycamore platform is dramatically lighter than comparable dynamic drivers (xMEMS cites 18 grams versus about 42 grams for traditional driver assemblies), hinting at the weight savings and slimmer profiles possible on frames. Other audio specialists, including USound, are also advancing MEMS speakers, echoing the trajectory MEMS microphones took a decade ago when they quietly replaced older mic designs across the industry.
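To put the quoted weight figures in proportion, here is a quick back-of-the-envelope sketch. It uses only the 18-gram and 42-gram numbers attributed to xMEMS above; the function and constant names are my own, chosen for illustration, and the result says nothing about what a finished pair of glasses would weigh.

```python
# Figures quoted in the article (attributed to xMEMS). Names are illustrative.
SOLID_STATE_GRAMS = 18.0   # Sycamore-based over-ear prototype assembly
DYNAMIC_GRAMS = 42.0       # approximate traditional dynamic driver assembly


def weight_reduction(new_grams: float, old_grams: float) -> tuple[float, float]:
    """Return (grams saved, percent saved relative to the old assembly)."""
    if old_grams <= 0:
        raise ValueError("old_grams must be positive")
    saved = old_grams - new_grams
    return saved, 100.0 * saved / old_grams


saved, percent = weight_reduction(SOLID_STATE_GRAMS, DYNAMIC_GRAMS)
print(f"{saved:.0f} g lighter, about {percent:.0f}% less mass")
```

By that math, the solid-state assembly comes in at well under half the mass of the dynamic one, which is the kind of margin that matters when the hardware rests on your nose and ears.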

What I Heard in Early Smart Glasses Prototypes

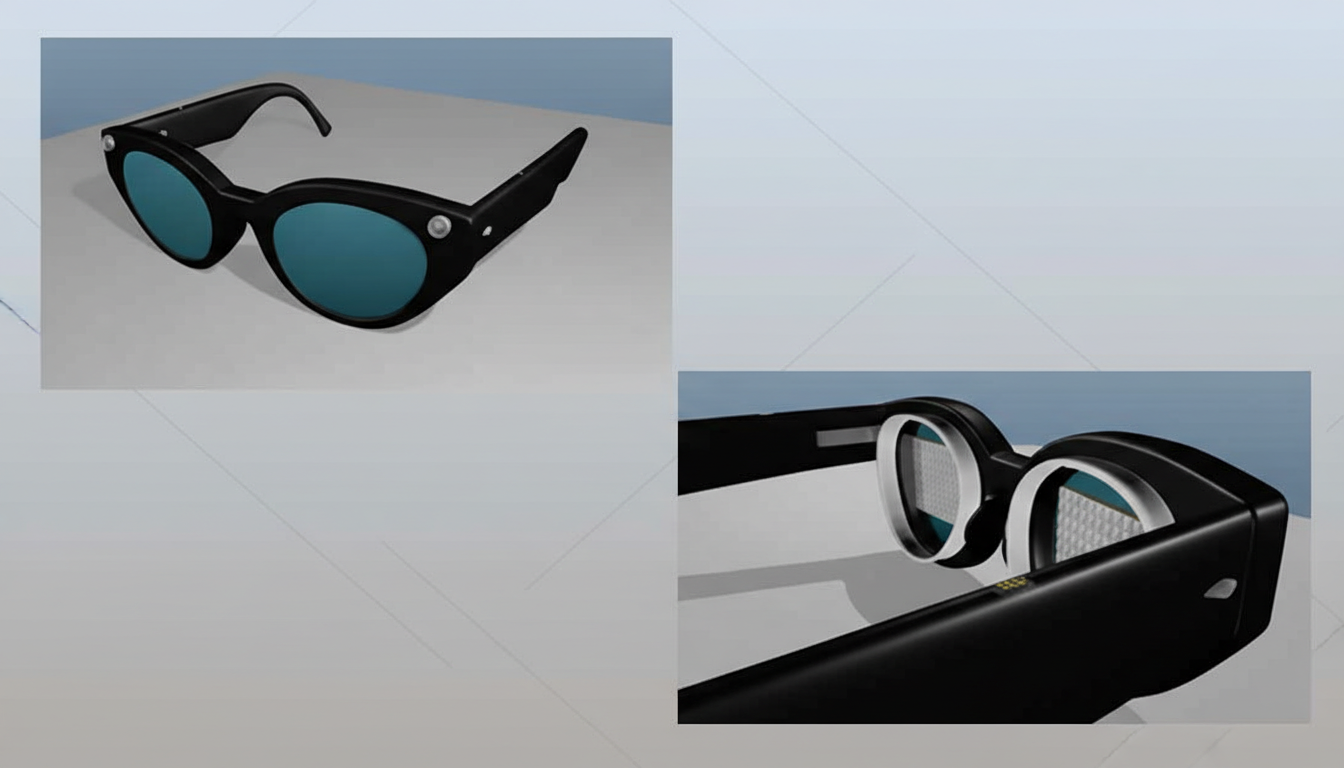

I tested a pair of prototype glasses fitted with xMEMS Sycamore-N modules, using the same music, podcasts, and voice prompts I rely on daily with my Ray-Ban Meta frames. The immediate difference was intelligibility. Spoken words had crisper leading edges, and consonants popped without getting sharp. Music carried more detail at modest volumes, and stereo separation felt wider, likely because the chips can be positioned and angled more precisely inside the arms.

Bass was the surprise. You don’t feel the low end the way you do with drivers that push air; there’s no “thump.” But you hear it—clean, controlled, and without the muddiness that often creeps in when open-ear speakers get loud. In a quiet café, I also noticed less apparent leakage at the same perceived volume. That’s crucial for privacy and for not being “that person” in a shared space.

Comfort matters, too. Because the modules are flat and light, the frames stayed slim, and the clamp force didn’t creep over time. That’s the difference between wearing smart glasses for five minutes to check a notification and keeping them on for a commute or a long call.

Size, Heat, and Power Matter for Smart Glasses

Audio doesn’t live in a vacuum. The same temples that house speakers also make room for batteries, radios, and processors. Shrinking the speaker stack by millimeters can free volume for a larger cell or better antennas, which directly affects call quality and battery life. During a separate engineering demo, I saw a chip-scale cooling module tame a simulated processor hotspot from roughly 65°C down to about 36°C. Keeping the skin-contact area cool isn’t just comfort—it lets manufacturers sustain higher performance without throttling audio features like live translation or voice assistance.

On the software side, the shift to Bluetooth LE Audio and its LC3 codec is timely. LC3 is designed to match or beat the legacy SBC codec’s quality at lower bitrates, making it more power-efficient and resilient for the continuous speech and notification tones that define “ambient” eyewear use. Pair advanced beamforming microphones (an area where companies like Knowles lead) with solid-state speakers, and you get a far better loop for calls and assistants.

What Comes Next for Smart Glasses Sound Quality

The path forward is straightforward: integrate MEMS speakers into production frames, tune them for speech-first clarity, and pair them with smarter mic arrays. Manufacturers will need to validate durability, moisture resistance, and tuning across different temple geometries. They’ll also have to educate buyers that “less boom” isn’t “less bass”—it’s bass without the blur.

The payoff is big. Better intelligibility makes voice AI actually usable in public. Lower leakage improves social acceptability. Thinner, lighter arms increase wear time, which in turn makes smart glasses more than a novelty. After hearing what solid-state audio can do in a prototype, it’s clear the future of smart glasses has been missing a key component. It finally exists—and once it’s inside mainstream frames, the entire category will sound different.