From flirty wrong-number texts to help-desk heists and a fresh fight over age checks on Discord, digital risk this week underscores a blunt truth: criminals increasingly bypass code and target people, policies, and platforms. Here’s what changed, why it matters, and how to stay ahead of it.

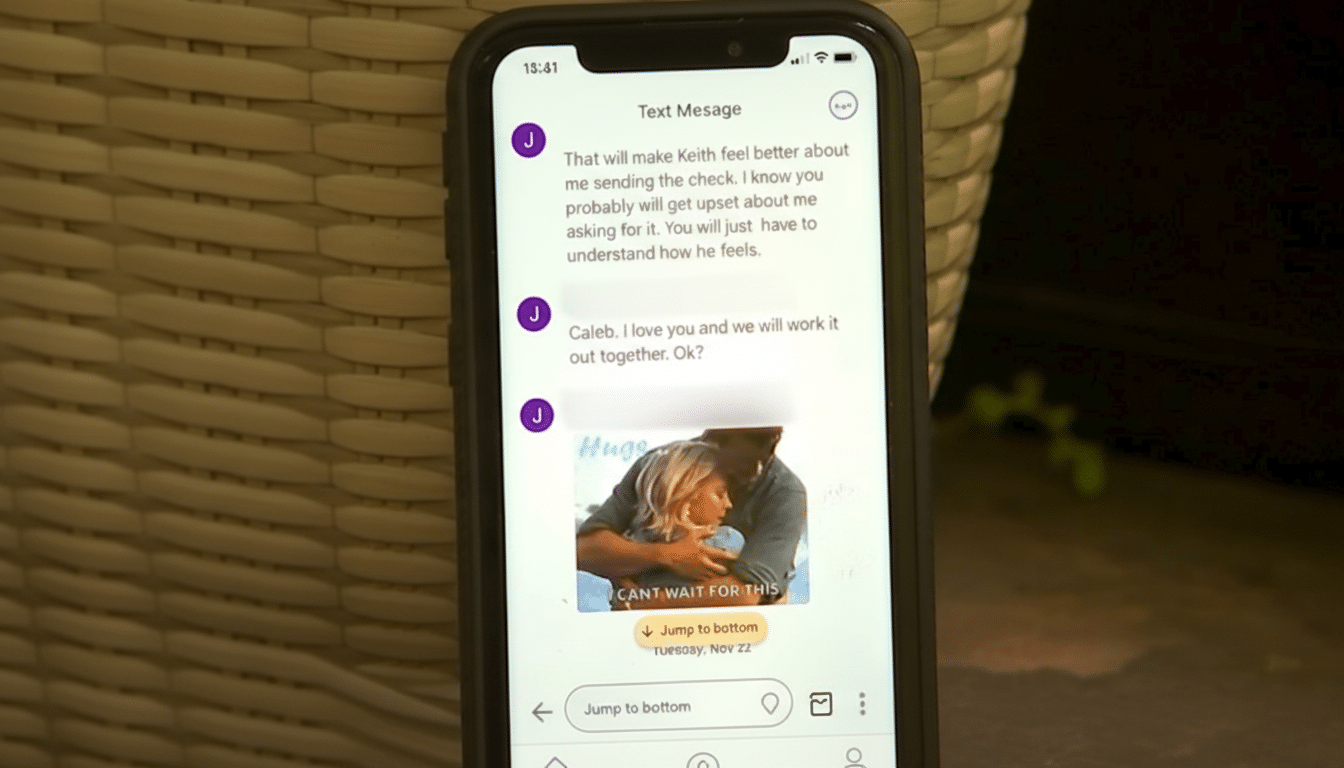

Flirty Fraudsters Weaponize Wrong-Number Texts

Those out-of-the-blue “Hey, how have you been?” messages are not meet-cutes; they’re entry points. Scammers lure replies, build rapport, then guide victims toward money requests or “investment” apps. The Federal Trade Commission has reported more than $1B in annual losses tied to romance-related schemes, with median losses in the thousands, and investigators note a steady shift from public social platforms into private messaging where moderation is thinner.

The mechanics are simple and scalable: bulk SMS and messaging blasts, polished profiles, and fast handoffs to encrypted apps. Tactics often blend romance hooks with “pig-butchering” investment fraud. The red flag many people miss is the pivot: a sender who pushes you to move the chat to WhatsApp or Telegram. Claims of an urgent personal crisis, or references to a conversation you never had, are just as telling. If you see any of these, disengage and report. In the US, forwarding spam texts to 7726 (SPAM) helps carriers block campaigns.

Discord Age Checks Rekindle Privacy Fight

Discord’s new age-verification push, which moves some users into teen accounts if they can’t prove they’re adults, reignited a long-running debate: do verification systems protect minors, or mostly harvest IDs? Privacy advocates like the Electronic Frontier Foundation warn that document and face scans create high-value data stores without addressing the underlying harms, since determined teens and abusers can still evade checks with borrowed IDs or synthetic profiles.

Regulators, including data-protection authorities in Europe and the UK, have urged “privacy by design” with proportionality and data minimization. The concern isn’t abstract: verification data, if breached or repurposed, can become a lifelong liability. For families, the trade-off is stark: safer defaults and content limits versus handing a platform sensitive identity documents. At minimum, users should look for clear retention limits, independent audits, and alternatives that don’t require uploading government IDs.

Payroll Pirates Exploit Help Desks for Fraud

Researchers at Arc Labs detailed a case where an intruder, armed with old breach credentials and scraps of internal context gleaned from email, called a healthcare company’s help desk and rerouted a physician’s paycheck to a criminal account. The incident, reported by The Register, is a crisp example of modern social engineering: pretext, pressure, and plausible detail trumping technical defenses.

This is no edge case. The FBI’s Internet Crime Complaint Center consistently flags business email compromise and payroll diversion as among the costliest schemes it tracks, and Verizon’s Data Breach Investigations Report finds that the “human element” is involved in most breaches. Attackers know payroll updates are high-impact and time-sensitive, exactly the kind of request that flusters help-desk agents. Organizations should require out-of-band verification for pay changes, protect self-service portals with phishing-resistant MFA, and script help-desk playbooks that make “no” the default when identity proof is thin.
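A deny-by-default playbook can be expressed as a simple gate. The sketch below is a minimal illustration, not the Arc Labs findings or any vendor's product: the `PayChangeRequest` record, its field names, and the `review_pay_change` function are hypothetical. It approves a pay change only when both an out-of-band callback and a phishing-resistant MFA step-up have succeeded, and it flags claims of urgency for supervisor review.

```python
from dataclasses import dataclass, field


# Hypothetical request record: field names are illustrative assumptions,
# not the schema of any real payroll, HR, or ticketing system.
@dataclass
class PayChangeRequest:
    employee_id: str
    new_account_last4: str
    # Agent phoned contact details already on file (never a number the caller
    # supplied) and the employee confirmed the change.
    callback_confirmed: bool
    # Employee completed a phishing-resistant MFA challenge (e.g. a passkey).
    mfa_step_up_passed: bool
    # Caller pushed for a same-day or "before payroll runs" change.
    urgency_claimed: bool


@dataclass
class Decision:
    approved: bool
    reasons: list[str] = field(default_factory=list)
    escalate: bool = False


def review_pay_change(req: PayChangeRequest) -> Decision:
    """Deny by default: approve only when every identity check has passed."""
    reasons = []
    if not req.callback_confirmed:
        reasons.append("no out-of-band confirmation via contact details on file")
    if not req.mfa_step_up_passed:
        reasons.append("no phishing-resistant MFA step-up")
    # Pressure is not proof of identity; route it to a supervisor either way.
    escalate = req.urgency_claimed
    return Decision(approved=not reasons, reasons=reasons, escalate=escalate)


# Usage: a persuasive caller with leaked credentials but no callback or MFA.
caller = PayChangeRequest(
    employee_id="E1001",
    new_account_last4="4321",
    callback_confirmed=False,
    mfa_step_up_passed=False,
    urgency_claimed=True,
)
decision = review_pay_change(caller)
print(decision.approved, decision.escalate)
print(decision.reasons)
```

The checks are combined with a strict AND rather than a score, so a caller who sounds convincing on one front still can't talk past the other. That is the point of making “no” the default.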

Data Demands And Platform Trust Face New Scrutiny

Trust in big platforms took another hit after The Intercept reported that Google had provided extensive data about a student journalist and activist to US Immigration and Customs Enforcement in response to a subpoena. The Electronic Frontier Foundation and the ACLU of Northern California raised concerns about the scope of the data shared, which reportedly included financial details, and about how transparently the user was notified.

The takeaway isn’t that companies should defy lawful orders, but that users underestimate the breadth of what is stored and how quickly it can be handed over. Minimization matters. Split your identity across accounts, prune old data, disable unnecessary syncing, and use end-to-end encrypted services for communications where feasible. Organizations should revisit law-enforcement response policies and ensure they match public promises and legal requirements.

AI Chat App Leak Exposes Private Chats and Histories

A misconfigured Google Firebase database exposed hundreds of thousands of private conversations from Chat & Ask AI, a wrapper app with tens of millions of users, according to 404 Media. An independent researcher said the flaw enabled access to more than 300 million messages from over 25 million users, including full chat histories on sensitive topics. It is a reminder that “wrapper” apps, which put their own interface and storage on top of other companies’ AI models, can multiply risk by adding a weak data-handling layer.

Assume anything you type into an AI service can be stored, reviewed, or breached. Favor providers with published security practices, opt-out controls for training, and clear data retention policies. For truly sensitive work, use on-device or self-hosted models, or keep AI out of the loop entirely.

What To Do Now: Practical Steps To Reduce Risk

- Don’t reply to “wrong-number” flirts. Block and report. Verify any unexpected requests via a confirmed channel before sending money or personal details.

- Turn on phishing-resistant MFA for email, payroll, and cloud apps. Enforce out-of-band checks for pay and bank changes. Train help desks to require step-up verification.

- On Discord and similar platforms, prefer verification methods that don’t require uploading IDs, and review what data is stored, for how long, and who can access it.

- Reduce your data footprint: delete old backups, disconnect unused third-party app permissions, and periodically download and purge platform histories you don’t need.

Criminals iterate fast, but most wins still start with a message, a phone call, or a form field. Tighten the human layer, and you narrow more attack paths than any single patch ever could.