Roblox is expanding its use of age-estimation technology across its communication features and standardizing its content ratings through a partnership with the International Age Rating Coalition (IARC). The moves are meant to give parents clearer guidance and to keep adults from interacting with minors.

Access to its voice and text chat tools will require users to complete a selfie-based age estimate, supplemented where appropriate by ID-based identity verification and verified parental consent. Together, these checks should do a better job than a self-reported birthdate at minimising the likelihood of minors reaching content meant for adults.

What families will see in the app

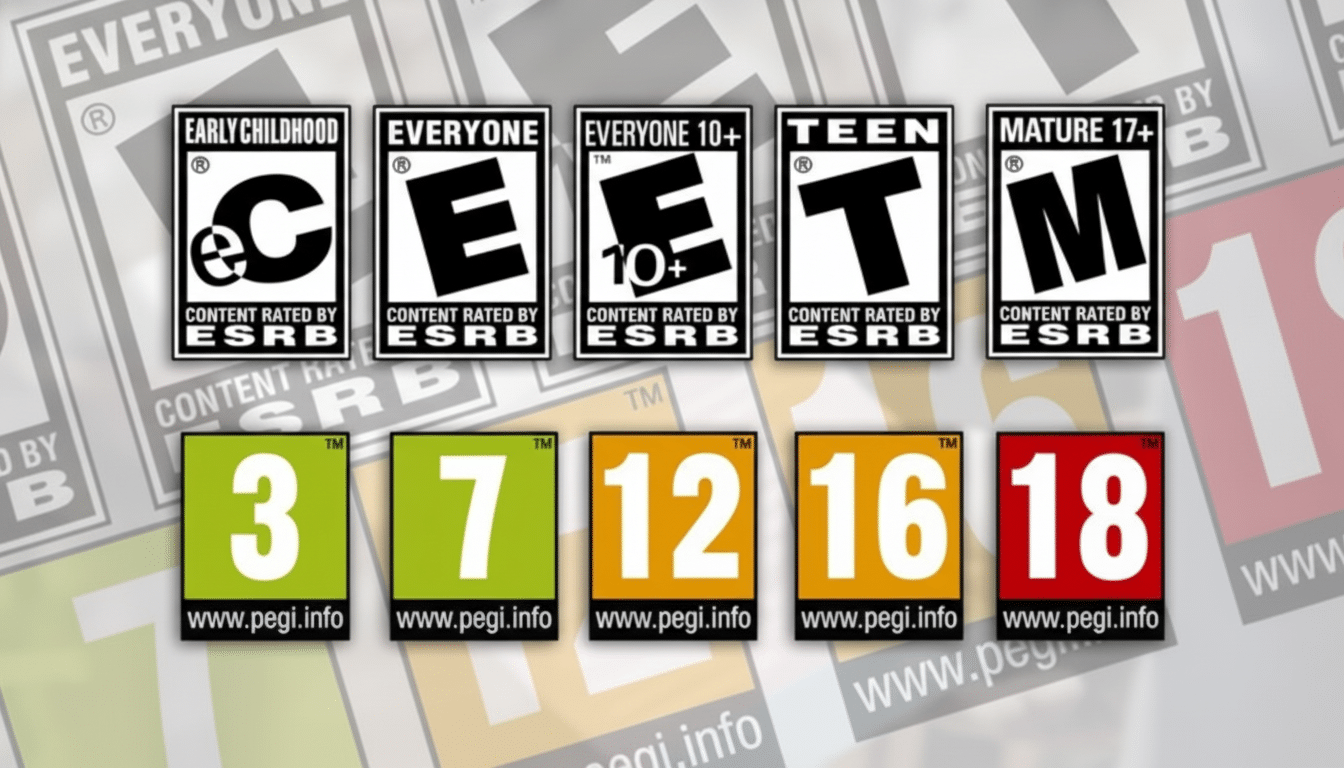

Roblox will replace its homegrown age labels with IARC ratings from established rating authorities. In the U.S., families will see ESRB guidance; across much of Europe and the U.K., PEGI; in Germany, USK; and in the Republic of Korea, GRAC. Those labels come with the familiar content descriptors for violence, blood, crude language, substances, simulated gambling and more.

For parents, this brings Roblox in line with what they already see on console and mobile app stores. Ratings should appear alongside experience listings, and parental controls can use them to keep kids out of experiences above a chosen rating and, for younger children, to turn off or limit chat.

How age estimation will work — and its limits

Age estimation analyses the facial features in a selfie to estimate the user's age range. Roblox says it combines that output with ID verification and parental-consent tools to reach higher confidence before unlocking sensitive capabilities such as unfiltered text chat or open voice channels. In its announcement, the company stresses that estimation is not facial recognition, and that its partners delete images after use rather than storing biometric templates.

Age-estimation accuracy is improving but is not perfect. Independent studies, along with research cited by standards bodies such as NIST, show that error rates vary by age band and demographic group. That is why Roblox's multi-signal approach and graduated permissions, rather than a single all-or-nothing gate, are sensible design choices for reducing both false positives and false negatives.

In effect, the checks form a "trust tier." Users whom estimation places at 13 or older (and who complete additional steps that let Roblox reach a higher-assurance result) can access some communication tools; older teens and adults who pass higher-assurance checks get more permissions. Users who decline estimation can keep playing, but with considerably tighter settings.
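The graduated tiers can be sketched in a few lines of Python to show why a multi-signal design avoids an all-or-nothing gate. This is a minimal illustration under stated assumptions, not Roblox's implementation: the tier names, the rule that a selfie estimate alone never unlocks the adult tier, and the feature names in `can_use` are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional

# Hypothetical thresholds; Roblox has not published its exact rules.
TEEN_MIN_AGE = 13
ADULT_MIN_AGE = 18


class Tier(IntEnum):
    """Ordered permission tiers: a higher value unlocks more features."""
    RESTRICTED = 0       # declined estimation, or under 13 without consent
    CHILD_CONSENTED = 1  # under 13 with verified parental consent
    TEEN = 2             # 13+; some communication tools
    ADULT = 3            # 18+ confirmed by a higher-assurance (ID) check


@dataclass
class AgeSignals:
    estimated_age: Optional[float]         # selfie estimate; None if declined
    id_verified_age: Optional[int] = None  # set only if ID verification done
    parental_consent: bool = False


def _tier_for_age(age: float, allow_adult: bool, consent: bool) -> Tier:
    """Map an age to a tier; adult access only when allow_adult is True."""
    if allow_adult and age >= ADULT_MIN_AGE:
        return Tier.ADULT
    if age >= TEEN_MIN_AGE:
        return Tier.TEEN
    return Tier.CHILD_CONSENTED if consent else Tier.RESTRICTED


def trust_tier(s: AgeSignals) -> Tier:
    # ID verification is the higher-assurance signal, so it takes precedence.
    if s.id_verified_age is not None:
        return _tier_for_age(s.id_verified_age, True, s.parental_consent)
    # No estimate: the user can still play, but with the tightest settings.
    if s.estimated_age is None:
        return Tier.RESTRICTED
    # An estimate alone is capped at TEEN, however old the face looks.
    return _tier_for_age(s.estimated_age, False, s.parental_consent)


# Hypothetical feature gates, loosely based on the capabilities named above.
REQUIRED_TIER = {
    "filtered_text_chat": Tier.CHILD_CONSENTED,
    "voice_chat": Tier.TEEN,
    "unfiltered_text_chat": Tier.ADULT,
}


def can_use(tier: Tier, feature: str) -> bool:
    """True if the tier meets or exceeds the feature's minimum tier."""
    return tier >= REQUIRED_TIER[feature]
```

For example, `trust_tier(AgeSignals(estimated_age=30.0))` yields `Tier.TEEN` because estimation alone stops short of adult permissions, while adding `id_verified_age=30` yields `Tier.ADULT`. Capping unverified estimates is one way to keep estimation errors from granting the most sensitive features.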

The regulatory and legal battle is heating up

Global policy is pushing platforms in this direction. The U.K.'s Online Safety Act requires services to assess and address risks to children. In the United States, several states have enacted age-verification and parental-consent laws; Mississippi's law led one social network to shut off access in that state. The EU's Digital Services Act likewise requires platforms to mitigate systemic risks to children.

Roblox's move follows growing scrutiny of children's safety on its platform, including lawsuits from state attorneys general and private plaintiffs, as well as academic and journalistic investigations that found children exposed to inappropriate content, even when using settings presented as child-friendly. The company has invested in moderation, parental controls and machine-learning systems such as Roblox Sentinel, which aims to detect signs of grooming and other harms; standardized ratings and more robust age gates add another layer of defense.

What changes for creators

The IARC process should make compliance easier. Developers fill out a single questionnaire covering details such as themes, interactivity and monetization, and the answers are translated into ESRB, PEGI, USK or GRAC ratings without separate submissions in each region. Ratings will then shape discovery, monetization options and which users can enter an experience.

Studios aiming for an all-ages audience may need to revisit chat design, user-generated content tools and reward mechanics so they don't trigger descriptors that raise their rating. Simulated gambling elements, for example, often lead to stricter guidance and a narrower audience.

Scale and stakes for Roblox

With more than 70 million people using Roblox every day and millions of hours of engagement each quarter, even small safety improvements could affect millions of conversations. A more transparent ratings system helps families decide more quickly, and more robust age checks could reduce the chance of adults and children meeting in open voice rooms or of young users encountering content meant for older teens.

The business case is no less clear: a safer, better-labeled catalog can spur brand partnerships and keep regulators at arm’s length, while offering developers more predictable guidelines for building experiences that serve multiple markets.

Unresolved questions to watch

Three factors will determine whether this rollout works: transparency, fairness and usability. On transparency, Roblox will face scrutiny over how it handles data, including how selfie images are processed and discarded, and over how users the system misclassifies can appeal. On fairness, age estimation has a documented history of demographic bias, so the company's auditing and mitigation steps will be important.

Finally, on usability, the process must be simple enough for families to complete but robust enough to resist evasion. Useful measures of success would be less contact between minors and adults, fewer policy violations in teen spaces and higher parent satisfaction with in-experience ratings.

By adding standardized ratings and expanding age estimation, Roblox moves closer to the norms of mainstream game stores. Done well, the change gives parents a shared vocabulary, creators a stable rulebook and young players a safer place to explore, and it could become a model that other user-generated platforms feel pressure to follow.