AI programs are being greenlit at record pace, yet most never clear the runway. Gartner expects worldwide AI spending to hit $2.52 trillion by 2026, even as boards grow impatient for hard returns. Behind the hype, too many initiatives stall at demo day, never crossing into production or profit.

Multiple studies put failure rates uncomfortably high. One MIT study suggests up to 95% of generative AI efforts fail to deliver measurable value. The pattern is familiar: diffuse goals, shaky data foundations, and experiments that wow in a slide deck but break under real-world load. The good news is that the playbook for reversing the odds is getting clearer.

Why So Many AI Projects Miss the Mark and Stall

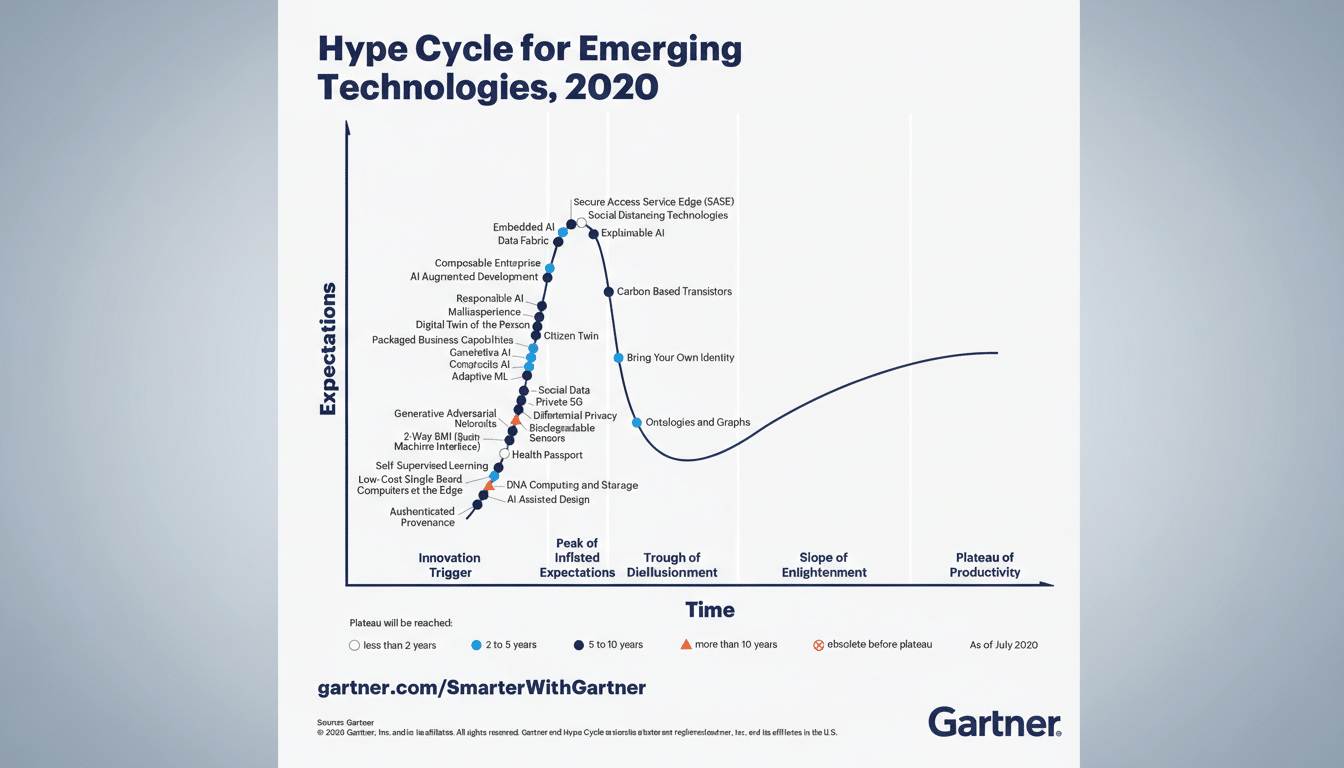

AI’s hype cycle is now entering the Trough of Disillusionment. That’s when moonshots lose altitude and leaders demand evidence. The most common pitfalls are problem selection divorced from business process, underestimating data quality and governance, and ignoring the operational realities of model deployment at scale.

Teams also measure the wrong things. Optimizing model accuracy without tying it to customer churn, fraud loss, or cycle time produces “model wins” that show up as business losses. Add compliance friction, unclear ownership, and the absence of MLOps, and promising pilots become expensive shelfware.

1. Tie AI To Outcomes And Redesign The Work

Start with the job to be done, not the model to be trained. Pick a narrow use case with a clear metric (claims cycle time, first-contact resolution, approval latency) and baseline it. Make your north star a single “overall evaluation criterion” the business already cares about, then design the workflow, controls, and user experience around that target.

Prove value in weeks with a “thin slice” that touches real data, real users, and a production pathway. Use A/B testing to validate lift against the baseline. Move beyond vanity model metrics and report the business delta: minutes saved per transaction, dollars protected, or revenue unlocked. UPS’s long-running ORION routing system is a classic example—applied AI in service of a specific KPI, cutting miles and fuel rather than chasing abstract accuracy.
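To make "validate lift against the baseline" concrete, here is a minimal sketch of a two-proportion z-test on a binary KPI such as first-contact resolution. The function name `ab_lift` and the sample counts are illustrative assumptions, not figures from any real program, and a production experiment would also need a pre-planned sample size and stopping rule.

```python
import math


def ab_lift(control_success, control_total, treat_success, treat_total):
    """Compare a binary KPI between a control arm and a treatment arm.

    Returns (absolute_lift, relative_lift, two_sided_p_value) using a
    pooled two-proportion z-test. All names here are hypothetical.
    """
    if control_total <= 0 or treat_total <= 0:
        raise ValueError("both arms need at least one observation")

    p_control = control_success / control_total
    p_treat = treat_success / treat_total
    if p_control == 0:
        raise ValueError("relative lift is undefined when the baseline rate is zero")

    # Pooled rate under the null hypothesis that both arms perform the same.
    pooled = (control_success + treat_success) / (control_total + treat_total)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_total + 1 / treat_total))
    if std_err == 0:
        raise ValueError("no variation in outcomes; the test is undefined")

    z = (p_treat - p_control) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))

    absolute = p_treat - p_control
    return absolute, absolute / p_control, p_value


# Illustrative numbers only: 400 of 1,000 contacts resolved at baseline,
# 460 of 1,000 resolved with the AI-assisted workflow.
absolute, relative, p_value = ab_lift(400, 1000, 460, 1000)
print(f"absolute lift: {absolute:.3f}, relative lift: {relative:.1%}, p-value: {p_value:.4f}")
```

The absolute lift is the number to translate into the business delta; the p-value only indicates whether the difference is likely to be more than noise.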

Bake in human-in-the-loop and exception handling from day one. For regulated processes, pre-negotiate guardrails with risk and legal so approvals do not bottleneck later. If adoption is the goal, incentive structures and training plans matter as much as the model architecture.
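One way to picture human-in-the-loop with pre-agreed guardrails is a small routing function that decides whether a case can be automated or must go to a reviewer. This is a sketch under assumptions: the function `route_claim`, the 0.95 confidence threshold, and the 10,000 USD review limit are all hypothetical placeholders for values that risk and legal would set.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "auto_approve" or "human_review"
    reason: str


def route_claim(score: float, amount_usd: float,
                auto_threshold: float = 0.95,
                review_amount_usd: float = 10_000.0) -> Decision:
    """Route a claim based on model confidence and a monetary guardrail.

    Thresholds are illustrative assumptions, not recommended values.
    """
    # Exception handling: anything malformed goes to a person, never to automation.
    if not 0.0 <= score <= 1.0:
        return Decision("human_review", "score out of range")
    # Guardrail negotiated up front: large amounts always get a reviewer.
    if amount_usd >= review_amount_usd:
        return Decision("human_review", "amount above guardrail")
    if score >= auto_threshold:
        return Decision("auto_approve", "high confidence")
    return Decision("human_review", "low confidence")


print(route_claim(score=0.97, amount_usd=1_200.0))
print(route_claim(score=0.97, amount_usd=25_000.0))
```

Keeping the thresholds as explicit parameters makes them easy to review and change without retraining the model.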

2. Build Capacity With The Right Partners

Capacity decisions—what to own, rent, or buy—make or break economics. Gartner expects a massive build-out of AI foundations through 2026, including a 49% rise in spending on AI-optimized servers and $401 billion going into AI infrastructure. You do not need to mirror hyperscalers to win, but you do need a clear sourcing strategy.

For most organizations, the fastest path is to leverage incumbent platforms. That can mean training and serving on clouds like AWS, Microsoft Azure, or Google Cloud, tapping foundation models via API from specialist providers, and extending existing analytics stacks rather than inventing your own. Reserve bespoke model development for truly differentiating capabilities where the data advantage is durable.

Structure commercial terms so partners share outcomes, not just hours. Value- or outcome-based pricing, co-development agreements, and service-level objectives aligned to your KPIs keep everyone pulling in the same direction. Add FinOps discipline for AI—track unit economics per use case, monitor inference costs, and rightsize infrastructure to avoid runaway bills.
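Tracking unit economics per use case can start as simple arithmetic. The sketch below assumes a token-priced model API plus a fixed monthly infrastructure charge; the class `UseCaseUsage`, the prices, and the volumes are hypothetical and stand in for whatever your provider and workload actually look like.

```python
from dataclasses import dataclass


@dataclass
class UseCaseUsage:
    name: str
    transactions: int      # business transactions served in the period
    input_tokens: int      # total prompt tokens in the period
    output_tokens: int     # total generated tokens in the period
    infra_cost_usd: float  # fixed hosting and tooling cost for the period


def cost_per_transaction(usage: UseCaseUsage,
                         usd_per_1k_input: float,
                         usd_per_1k_output: float) -> float:
    """Fully loaded cost of one transaction: inference plus fixed infrastructure."""
    if usage.transactions <= 0:
        raise ValueError("need at least one transaction to compute unit cost")
    inference = (usage.input_tokens / 1000 * usd_per_1k_input
                 + usage.output_tokens / 1000 * usd_per_1k_output)
    return (inference + usage.infra_cost_usd) / usage.transactions


# Illustrative figures only; prices are assumptions, not any vendor's rate card.
triage = UseCaseUsage("claims-triage", transactions=10_000,
                      input_tokens=20_000_000, output_tokens=5_000_000,
                      infra_cost_usd=500.0)
unit_cost = cost_per_transaction(triage, usd_per_1k_input=0.001, usd_per_1k_output=0.002)
print(f"{triage.name}: ${unit_cost:.4f} per transaction")
```

Comparing this figure with the value each transaction creates (minutes saved, dollars protected) shows whether a use case pays for itself before the bill arrives.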

3. Treat Data And Operations As Products

Models fail when data is an afterthought. Establish data products with named owners, quality SLAs, lineage, and access policies. Invest in robust pipelines, feature stores, and privacy-by-design. The shift from “data lake” to “data you can trust on demand” is often the single biggest unlock for AI value.
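A quality SLA becomes enforceable once it is written as a check that runs on every batch. The following is a minimal sketch, assuming rows arrive as dictionaries with a timezone-aware `updated_at` field; the function `check_quality_sla`, the field names, and the limits are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def check_quality_sla(rows, required_fields, max_null_rate, max_age, now=None):
    """Return a list of SLA violations for one batch of a data product.

    Checks completeness (null rate per required field) and freshness
    (age of the newest record). An empty list means the batch passes.
    """
    now = now or datetime.now(timezone.utc)
    violations = []

    for field in required_fields:
        nulls = sum(1 for row in rows if row.get(field) is None)
        # An empty batch counts as fully missing.
        rate = nulls / len(rows) if rows else 1.0
        if rate > max_null_rate:
            violations.append(
                f"{field}: null rate {rate:.1%} exceeds limit {max_null_rate:.1%}")

    timestamps = [row["updated_at"] for row in rows if row.get("updated_at")]
    newest = max(timestamps, default=None)
    if newest is None or now - newest > max_age:
        violations.append("freshness: newest record is missing or older than the limit")

    return violations


# Illustrative batch: one of two claims is missing its amount.
now = datetime(2025, 1, 2, tzinfo=timezone.utc)
batch = [
    {"claim_id": 1, "amount": 100.0, "updated_at": now - timedelta(hours=1)},
    {"claim_id": 2, "amount": None, "updated_at": now - timedelta(hours=2)},
]
for violation in check_quality_sla(batch, ["claim_id", "amount"],
                                   max_null_rate=0.10,
                                   max_age=timedelta(hours=24), now=now):
    print(violation)
```

The named owner of the data product would receive these violations, and downstream models could refuse to score a batch that fails.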

Operationalize from the outset. Build an MLOps stack that covers versioning, CI/CD for models, and observability for drift, bias, safety, and cost. Define rollback and human escalation paths. Frameworks such as NIST’s AI Risk Management Framework and ISO/IEC 23894 provide practical guidance on governance without stalling innovation.
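Drift observability can begin with a single statistic. The sketch below uses the Population Stability Index (PSI), one common choice among several, to compare a feature's production distribution with its training baseline. The function name `psi`, the equal-width binning, and the 0.25 alert level (a widely used rule of thumb, not a standard) are assumptions for illustration.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a new sample.

    Bins are equal-width over the baseline's range; values outside that
    range are clamped into the first or last bin.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    base, new = proportions(expected), proportions(actual)
    return sum((n - b) * math.log(n / b) for b, n in zip(base, new))


# Illustrative data: production values shifted upward relative to training.
training = [i / 100 for i in range(100)]
production = [value + 0.5 for value in training]

score = psi(training, production)
if score > 0.25:  # rule-of-thumb alert level, an assumption to tune per feature
    print(f"PSI {score:.2f}: significant drift, trigger review or rollback")
```

Wiring a check like this into the monitoring stack gives the rollback and escalation paths a concrete trigger.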

Finally, close the loop with continuous learning. Capture feedback from users, automate retraining where safe, and routinely revisit model and policy assumptions. AI is not a one-and-done system; it is a living product that must evolve with data, regulation, and customer behavior.

The Bottom Line on Making AI Projects Succeed

Most AI failures are not about algorithms; they are about aim, alignment, and operations. In a market racing toward multi-trillion-dollar spend, the winners will look boringly practical: sharp problem selection, pragmatic partnerships, and industrial-strength data and MLOps. Do those three things, and your program moves out of the failing majority and into the small but growing set of AI programs that pay back reliably.