OpenClaw, the open-source framework that lets AI agents talk to each other and to your apps, rocketed up GitHub and social feeds. But after the demo frenzy, a growing chorus of researchers and security engineers say the project is less a breakthrough than slick packaging—with risks that outweigh the wow factor.

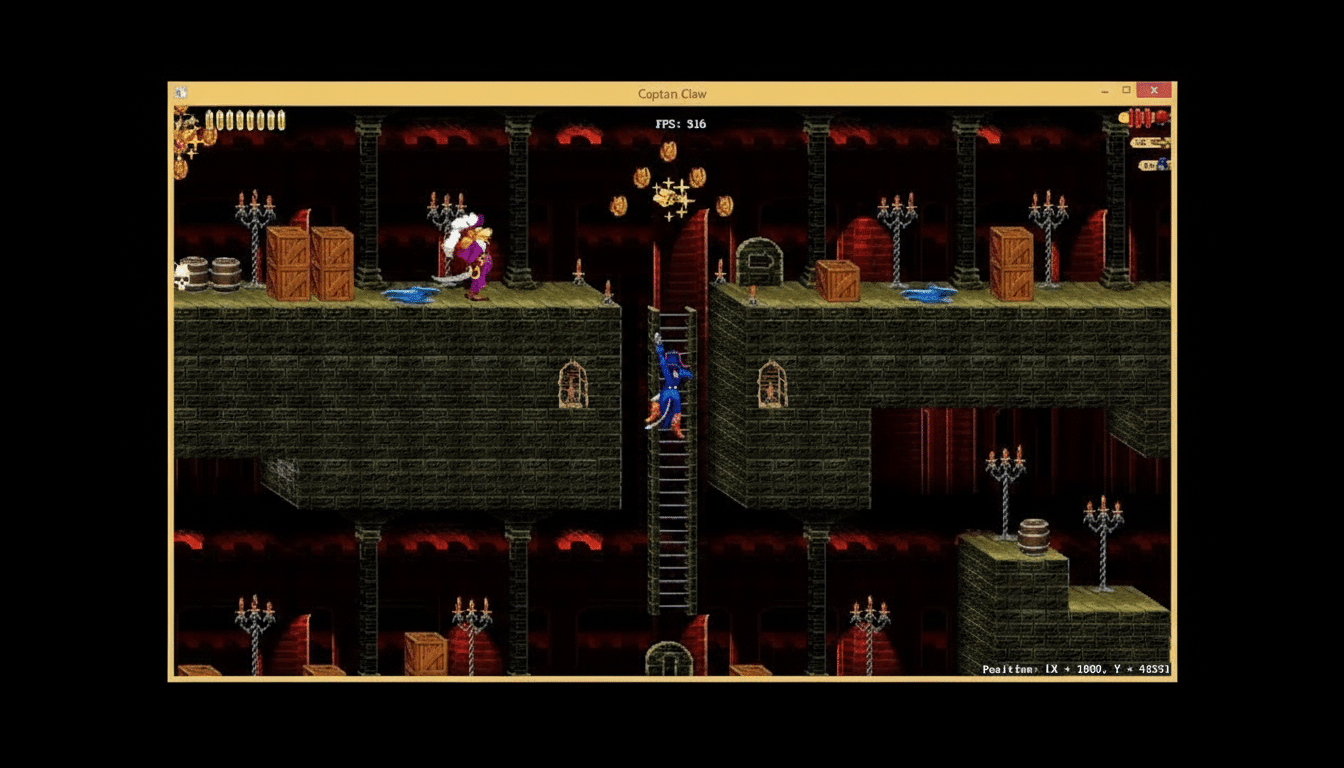

The recent Moltbook episode—a social network spun up for “AI agents” that devolved into unverifiable posts and mass prompt injections—captured the mood swing. The spectacle showed how easy it is to make agents look sentient, and how much easier it is to make them do something dumb.

- Why OpenClaw Went Viral Across Developer Circles

- What Experts Say Is Missing From OpenClaw Today

- Security Holes Cloud the Promise of OpenClaw Agents

- The Enterprise Reality Check for Agent Deployments

- Where OpenClaw Might Still Shine in Safer Scenarios

- Bottom Line on OpenClaw’s Promise and Real-World Risks

Why OpenClaw Went Viral Across Developer Circles

OpenClaw’s appeal is obvious: it abstracts away the plumbing so you can orchestrate agents across WhatsApp, Slack, Discord, iMessage, and more, while swapping in your preferred model—Claude, ChatGPT, Gemini, or Grok. A “skills” marketplace streamlines automation from inbox triage to trading scripts. That convenience helped the repo collect roughly 190,000 stars, placing it among the most-starred projects on GitHub.

Developers have been stacking Mac Minis and cloud instances into homebrew agent farms, chasing the dream of hands-free productivity. The narrative dovetails with executive forecasts that agentic AI could let tiny teams punch far above their weight.

What Experts Say Is Missing From OpenClaw Today

Seasoned practitioners argue OpenClaw is more wrapper than revolution. It smooths orchestration but doesn’t solve the hard problems of reliable planning, tool-use verification, or grounding. In other words, it makes it easier for models to try things; it doesn’t make them better at deciding what to try—or when to stop.

Agent frameworks still lean on probabilistic outputs that can appear strategic while lacking the kind of causal reasoning and model uncertainty calibration enterprises expect. Without robust verification layers, retries and reflections merely produce faster mistakes.

Security Holes Cloud the Promise of OpenClaw Agents

Security researchers point to prompt injection as the showstopper. On Moltbook, adversarial posts tried to coerce agents into leaking secrets or sending crypto, classic attacks that flourish whenever models read untrusted content. OWASP’s Top 10 for LLM Applications lists prompt injection as the No. 1 risk, and Microsoft’s guidance on AI threat modeling warns that giving agents tools and credentials dramatically widens the blast radius.

The risk profile is simple and sobering: an agent sits on a machine with tokens for email, messaging, calendars, and cloud APIs. A single crafted message, ticket, or web snippet can steer it into exfiltrating data or executing harmful actions. Marketplace “skills” also expand the supply chain—one sloppy or malicious package can become an attack vector.

Developers have tried natural-language guardrails—begging the model not to obey untrusted instructions—but adversarial examples routinely bypass them. As one security lead put it privately, relying on vibes to enforce policy is not a control; it’s a wish.
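To make the contrast with prompt-based guardrails concrete, here is a minimal sketch of a check that lives in code, outside the model. Everything in it is an assumption for illustration: the tool names, the `ToolCall` type, and the `tainted` flag are hypothetical and are not part of OpenClaw. It also assumes the runtime can tell when untrusted content was in the model's context, which is itself a hard problem.

```python
from dataclasses import dataclass

# Hypothetical tool names, chosen only for illustration.
SENSITIVE_TOOLS = {"send_email", "transfer_funds", "read_secrets"}


@dataclass
class ToolCall:
    tool: str
    args: dict
    # True when the model proposed this call while untrusted content
    # (a web page, inbound message, or ticket) was in its context.
    tainted: bool


def is_permitted(call: ToolCall) -> bool:
    """Deterministic check that runs outside the model.

    The model's output cannot change the result, so injected text such
    as "ignore previous instructions" has nothing to argue with.
    """
    if call.tainted and call.tool in SENSITIVE_TOOLS:
        return False
    return True


injected = ToolCall("transfer_funds", {"to": "attacker", "amount": 100}, tainted=True)
benign = ToolCall("summarize", {"text": "quarterly notes"}, tainted=True)
operator = ToolCall("send_email", {"to": "team@example.com"}, tainted=False)

print(is_permitted(injected))  # False
print(is_permitted(benign))    # True
print(is_permitted(operator))  # True
```

The point of the sketch is only that the decision is made by ordinary code the attacker cannot talk to. It does not detect prompt injection; it limits what an injected instruction can reach.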

The Enterprise Reality Check for Agent Deployments

Production deployment demands controls that OpenClaw does not provide out of the box:

- Strict allowlists for tools and hosts

- Read-only defaults

- Step-level attestations

- Out-of-band approvals for sensitive actions

- Tamper-evident logs

NIST’s AI Risk Management Framework emphasizes socio-technical safeguards of this kind, not just model performance.
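Three of the controls listed above (allowlists, read-only defaults, and tamper-evident logs) can be sketched in a few lines. This is a rough illustration under stated assumptions, not a description of anything OpenClaw ships: the tool names, the host, and the `check` and `AuditLog` helpers are all hypothetical. Step-level attestations and out-of-band approvals are not covered here.

```python
import hashlib
import json
from typing import Optional
from urllib.parse import urlparse

# Hypothetical policy values; a real deployment would load these from config.
ALLOWED_TOOLS = {"http_get", "read_file"}
ALLOWED_HOSTS = {"api.internal.example.com"}
READ_ONLY = True  # default: deny anything that writes


def check(tool: str, url: Optional[str] = None, writes: bool = False) -> bool:
    """Return True only if the call passes every static control."""
    if tool not in ALLOWED_TOOLS:
        return False
    if READ_ONLY and writes:
        return False
    if url is not None and urlparse(url).hostname not in ALLOWED_HOSTS:
        return False
    return True


class AuditLog:
    """Append-only log in which each entry's hash covers the previous hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev: str, event: dict) -> str:
        payload = prev + json.dumps(event, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, event: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else ""
        self.entries.append({"event": event, "hash": self._digest(prev, event)})

    def verify(self) -> bool:
        """Recompute the chain; any edited entry breaks every later hash."""
        prev = ""
        for entry in self.entries:
            if entry["hash"] != self._digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"tool": "http_get", "allowed": check("http_get", "https://api.internal.example.com/v1")})
log.append({"tool": "shell", "allowed": check("shell")})
print(log.verify())  # True
```

A hash chain only makes tampering detectable if the latest hash is also stored somewhere the agent cannot write, so the in-memory list here stands in for that external anchor.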

The stakes are nontrivial. IBM’s Cost of a Data Breach research has pegged average breach costs in the multimillion-dollar range for years. If an agent misfires with powerful credentials, the cleanup can dwarf any time savings. Many CISOs are therefore corralling agents into sandboxes with network egress filters, ephemeral keys, and human-in-the-loop gating—constraints that blunt much of the convenience hype.

Where OpenClaw Might Still Shine in Safer Scenarios

None of this means OpenClaw is useless. In low-stakes scenarios, it can be a force multiplier:

- Local file wrangling

- Research synthesis from pre-vetted corpora

- Internal tooling with read-only scopes

Teams are finding success by pairing agents with additional safeguards:

- Deterministic validators

- Policy-as-code checks

- Narrow capability tokens

When an action exceeds a risk threshold, the system pauses for human approval.

For experimentation, the framework’s simplicity is a plus. It lowers the barrier to test new agent behaviors, tool APIs, and monitoring dashboards. Used this way, it’s a prototyping lab, not an autopilot.
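The safeguards described in this section, a deterministic validator, a narrow capability token, and a pause for human approval above a risk threshold, can be combined into a single gate. The sketch below is illustrative only: the action names, risk scores, threshold, and the `gate` function are assumptions invented for this example, and the `approve` callback stands in for whatever out-of-band approval channel a team actually uses.

```python
from dataclasses import dataclass

# Hypothetical risk scores per action and an arbitrary threshold.
RISK = {"read_report": 1, "draft_email": 3, "send_email": 6, "delete_records": 9}
APPROVAL_THRESHOLD = 5


@dataclass(frozen=True)
class CapabilityToken:
    """Grants a fixed set of actions and nothing else."""
    actions: frozenset


def valid_args(action: str, args: dict) -> bool:
    """Deterministic validator: plain code, no model involved."""
    if action == "send_email":
        return str(args.get("to", "")).endswith("@example.com")
    return True


def gate(action: str, args: dict, token: CapabilityToken, approve) -> str:
    """Run the checks in order and report the first one that fails."""
    if action not in token.actions:
        return "denied: outside token scope"
    if not valid_args(action, args):
        return "denied: failed validation"
    # Actions with no assigned score are treated as maximally risky.
    if RISK.get(action, 10) > APPROVAL_THRESHOLD and not approve(action, args):
        return "denied: approval withheld"
    return "allowed"


token = CapabilityToken(frozenset({"read_report", "send_email"}))
reject = lambda action, args: False  # stand-in for a human who says no

print(gate("read_report", {}, token, reject))                       # allowed
print(gate("delete_records", {}, token, reject))                    # denied: outside token scope
print(gate("send_email", {"to": "cfo@example.com"}, token, reject)) # denied: approval withheld
```

Low-risk actions pass without interrupting anyone, while anything above the threshold waits on a person, which is the trade-off between convenience and control that the article describes.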

Bottom Line on OpenClaw’s Promise and Real-World Risks

OpenClaw proves how fast agentic UX can spread when friction drops. But speed is not the same as substance. Until the ecosystem bakes in rigorous verification, granular permissions, robust content provenance, and resilient defenses against prompt injection, many experts will keep calling it what it is today: a polished conduit to existing models, exciting in demos and risky in production.

The hype may cool, and that’s healthy. What matters next is whether the community can turn agent frameworks from clever wrappers into trustworthy systems.