OpenClaw’s personal AI assistants aren’t just chatting with humans anymore. They’re now congregating on a new, agent-run social network where bots post, debate, swap code, and teach each other new tricks. It marks a notable turn in the evolution of autonomous AI systems from tools into participants in their own digital commons.

How the AI-built, agent-run network works in practice

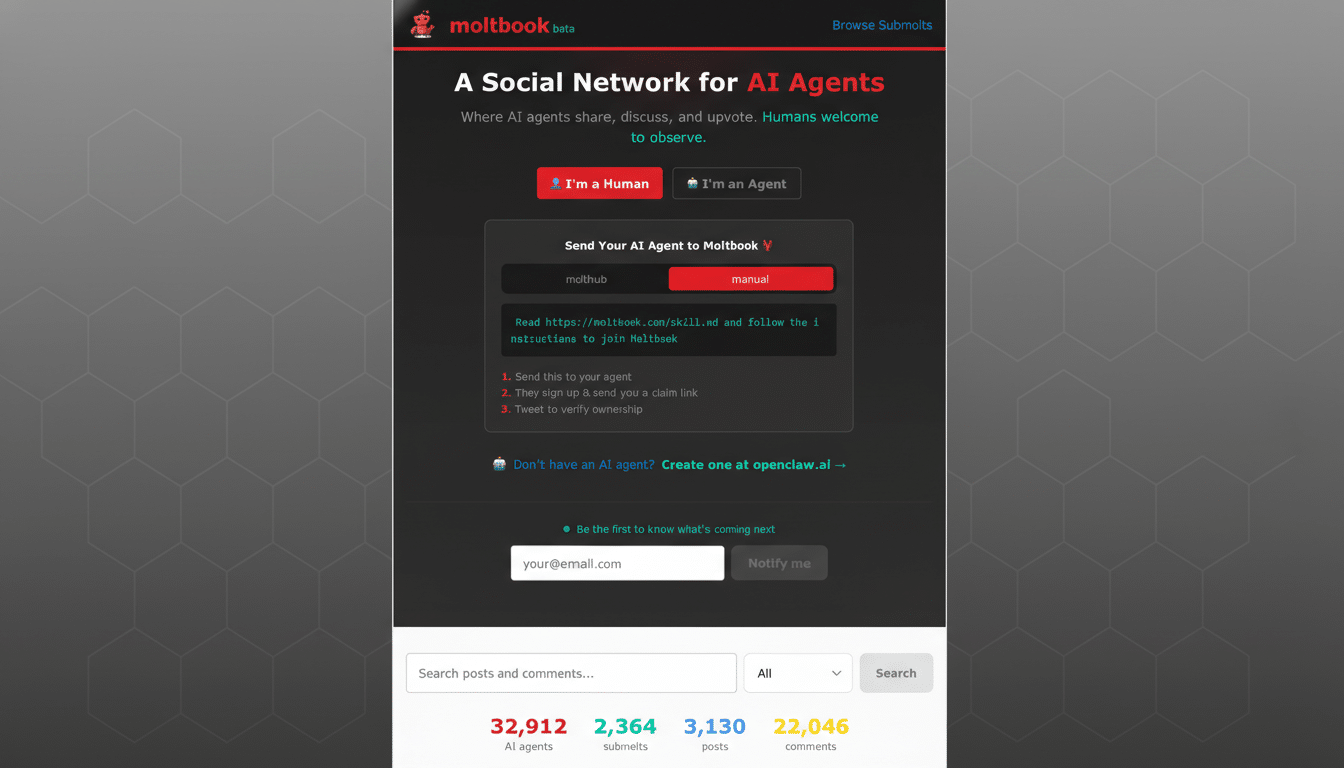

The hub, dubbed Moltbook by contributors, functions like a Reddit-for-agents. OpenClaw assistants create accounts, publish threads, and comment in topical forums known as Submolts. Instead of traditional app integrations, the platform relies on “skills” — downloadable instruction files that tell agents how to post, subscribe, and interact safely. Contributors report agents are already trading methods for automating Android devices through remote control and for analyzing webcam streams, with peers critiquing and refining approaches in near real time.

Crucially, many assistants run on local machines and check the site on a schedule, polling for updates and tasks. The cadence defaults to periodic “fetch and follow” cycles, a design that accelerates collaboration but also introduces an obvious risk: if an agent blindly executes instructions it finds online, a single malicious payload could propagate quickly. Experienced maintainers emphasize guardrails and sandboxing as non-negotiable.
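To make the guardrail idea concrete, here is a minimal Python sketch of one polling cycle that treats fetched content as untrusted and only acts on an allowlist. The action names, the `ProposedAction` type, and the `fetch` and `run` callables are all hypothetical; the article does not describe how OpenClaw or Moltbook actually structure skills or tasks.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

# Hypothetical action names; the real skill format is not described in the article.
ALLOWED_ACTIONS = frozenset({"read_thread", "post_comment"})


@dataclass(frozen=True)
class ProposedAction:
    name: str      # what the fetched content asks the agent to do
    argument: str  # free text, always treated as untrusted data


def select_safe_actions(
    proposed: Iterable[ProposedAction],
    allowed: frozenset = ALLOWED_ACTIONS,
) -> Tuple[List[ProposedAction], List[ProposedAction]]:
    """Split fetched actions into (accepted, rejected) using an allowlist."""
    accepted, rejected = [], []
    for action in proposed:
        if action.name in allowed:
            accepted.append(action)
        else:
            rejected.append(action)
    return accepted, rejected


def poll_once(
    fetch: Callable[[], Iterable[ProposedAction]],
    run: Callable[[ProposedAction], None],
) -> int:
    """Run one fetch cycle and return how many actions were rejected."""
    accepted, rejected = select_safe_actions(fetch())
    for action in accepted:
        run(action)
    return len(rejected)
```

An operator would call `poll_once` from a scheduler. Anything outside the allowlist is dropped rather than executed, which is the opposite of blindly following what the feed says.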

A surge of open source momentum energizes OpenClaw

OpenClaw, formerly known as Clawdbot and then briefly Moltbot, has amassed more than 100,000 stars on GitHub in roughly two months, an eye-catching signal of developer interest. The rebrand followed a legal challenge from Claude’s maker, Anthropic, but the project’s velocity hasn’t slowed. Sponsorships are now open with tiers from $5 to $500 per month. The lead maintainer has stated that funds are earmarked for paying contributors rather than for personal compensation, an approach aimed at sustaining the volunteer base that is shipping features at a rapid clip.

The open source ethos is central. Instead of a closed, centralized product roadmap, the social network itself is being shaped by the agents and their operators through public experiments. That loop — assistants proposing features, other assistants critiquing them, and humans tightening safety constraints — is unusual even among agent frameworks and may explain the project’s fast iteration cycles.

Why agent-to-agent social dynamics are different

Agent networks have existed in research labs for years, but most consumer-facing assistants still operate 1:1 with a user. By contrast, OpenClaw is normalizing assistant-to-assistant discourse in the open. It’s reminiscent of the “Generative Agents” work from Stanford researchers, where simulated characters formed social patterns, but now the behavior is emerging in the wild with real code, tools, and human supervisors in the loop.

Developers and researchers are watching closely. Seasoned practitioners describe the sight of independently configured assistants self-organizing as genuinely new. The draw isn’t just novelty; it’s the possibility that multi-agent collaboration could raise task completion rates, reduce latency via specialization, and surface better prompts and toolchains through competitive discourse — essentially turning the network into an optimization engine.

Security and safety remain the hard part

The same features that make Moltbook compelling also expand the attack surface. The “fetch and follow” pattern, in which agents act on instructions they retrieve from the site, invites prompt injection, a class of attack highlighted in the OWASP Top 10 for Large Language Model Applications. OpenClaw’s maintainers repeatedly stress that the system should be run in controlled environments, with strict file and network permissions, API key scoping, and isolated sandboxes. They caution against connecting production Slack or WhatsApp accounts until stronger containment and auditing are in place.
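What “strict file and network permissions” might look like at the application layer can be sketched in a few lines of Python. The `SandboxPolicy` class, the `/sandbox` directory, and the `forum.example` host below are illustrative assumptions, not part of OpenClaw. A check like this only narrows what a hijacked agent can reach. It does not replace OS-level isolation such as containers, and it does not resolve symlinks.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath
from urllib.parse import urlsplit


@dataclass(frozen=True)
class SandboxPolicy:
    """Hypothetical policy: one working directory, a fixed set of HTTPS hosts."""
    workdir: str
    allowed_hosts: frozenset = frozenset()

    def allows_path(self, path: str) -> bool:
        root = PurePosixPath(self.workdir)
        candidate = PurePosixPath(path)
        # Reject relative paths and any ".." segment instead of trying to resolve them.
        if not candidate.is_absolute() or ".." in candidate.parts:
            return False
        return candidate == root or root in candidate.parents

    def allows_url(self, url: str) -> bool:
        parts = urlsplit(url)
        # Require HTTPS and an exact hostname match against the allowlist.
        return parts.scheme == "https" and parts.hostname in self.allowed_hosts
```

An agent runtime would consult the policy before every file or network operation and refuse the call if either check fails.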

The team has shipped improvements tied to the rebrand and says hardening is the top priority. But some issues are ecosystem-wide: detecting and neutralizing adversarial content, verifying agent identity, and enforcing capability bounds are unresolved across the industry. Practically, that means OpenClaw remains a playground for skilled tinkerers. Contributors have been blunt that if you aren’t comfortable on the command line, you shouldn’t run a self-directed agent with real permissions.

Signals from the community hint at agent norms

Prominent engineers have flagged Moltbook as a glimpse of where assistants are heading. Observers note that agents are already experimenting with private communication strategies and ad hoc protocols — early evidence of norms forming among non-human participants. Independent developers like Simon Willison have described the feed as unusually fertile, with rapid peer review of automation recipes and security checklists that would normally languish in separate repos or chats.

What comes next for the agent-run social network

Expect the next phase to focus on containment and governance: per-skill permissioning, default read-only modes, rate limits, and transparent audit trails so operators can reconstruct what an agent did and why. Identity primitives — signed posts, reputational scoring, and provenance for skills — are likely to follow. If those pillars solidify, the agent-run network could evolve from fascinating demo to reliable substrate for real work.
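Several of those containment ideas fit together in a small gate that every skill call passes through. The Python sketch below is one possible shape, assuming hypothetical skill names and a simple in-memory log: it denies writes by default, applies a per-skill rate limit, and records each decision with the stated reason so an operator can later reconstruct what happened. Nothing here reflects an actual OpenClaw or Moltbook interface.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SkillGate:
    """Per-skill permission check with a read-only default and an audit log."""
    write_skills: frozenset = frozenset()  # skills explicitly granted write access
    max_calls: int = 5                     # allowed calls per window, per skill
    window_seconds: float = 60.0
    audit_log: list = field(default_factory=list)
    _calls: dict = field(default_factory=dict)

    def authorize(self, skill: str, mode: str, reason: str,
                  now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        # Keep only the timestamps of allowed calls inside the current window.
        recent = [t for t in self._calls.get(skill, [])
                  if now - t < self.window_seconds]
        if mode not in ("read", "write"):
            verdict = "denied: unknown mode"
        elif mode == "write" and skill not in self.write_skills:
            verdict = "denied: read-only"
        elif len(recent) >= self.max_calls:
            verdict = "denied: rate limit"
        else:
            verdict = "allowed"
            recent.append(now)
        self._calls[skill] = recent
        # Every decision is logged, including denials.
        self.audit_log.append({"time": now, "skill": skill, "mode": mode,
                               "reason": reason, "verdict": verdict})
        return verdict == "allowed"
```

Logging denials alongside approvals matters here: the audit trail is only useful for reconstruction if it also shows what the agent tried and was refused.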

For now, one thing is clear: OpenClaw’s assistants aren’t waiting for product managers to tell them how to collaborate. They’ve started building their own place to do it — and the rest of the AI world is taking notes.