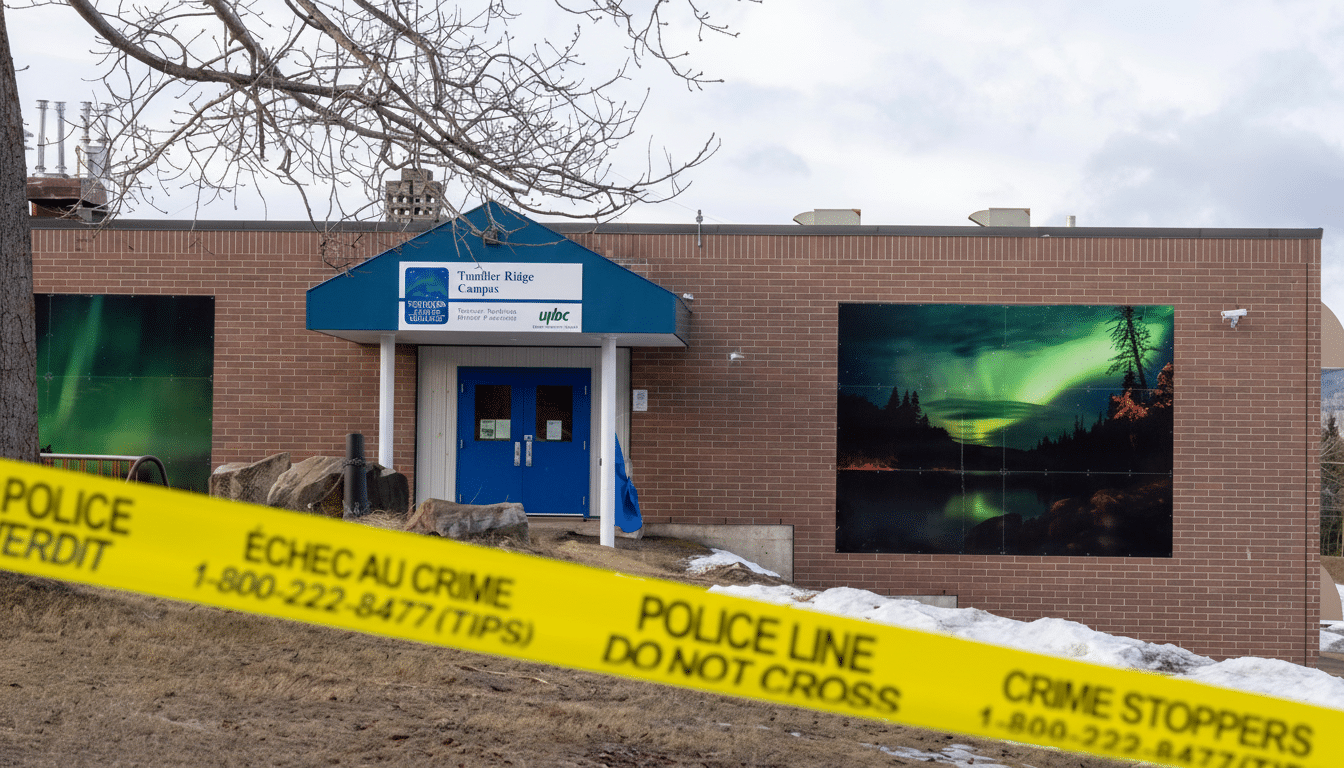

OpenAI employees privately debated whether to contact police after internal systems flagged alarming ChatGPT conversations by a Canadian user who is now accused of killing eight people in Tumbler Ridge. According to reporting by the Wall Street Journal, the company ultimately decided the behavior did not meet its threshold for notifying law enforcement, and it reached out to Canadian authorities only after the mass shooting came to light.

What OpenAI Reportedly Saw in Flagged ChatGPT Exchanges

The suspect, 18-year-old Jesse Van Rootselaar, allegedly held ChatGPT conversations that described or fixated on gun violence. Those exchanges were flagged by OpenAI’s misuse detection tools, and the account was banned months before the attack. The episode, as described by the Journal, triggered internal discussions over whether the company should notify Canadian police, but staff concluded the content did not rise to the level of an “imminent threat” that would warrant disclosure under company policy.

An OpenAI spokesperson, cited by the Journal, said the activity fell short of its criteria for alerting authorities. That decision point—where concerning but not overtly actionable language appears in an AI chat—has become one of the hardest boundaries to define for companies whose products can surface warning signs without offering clear evidence of intent or capability.

A Wider Trail of Warning Signs Across Platforms

The suspect’s digital footprint reportedly extended beyond ChatGPT, including a Roblox scenario simulating a mall shooting and Reddit posts about firearms. Local police had also been called to the family home in a separate incident involving a fire and drug use. Each of these events, on its own, may not have triggered escalation. Together, they illustrate a familiar challenge: online indicators of risk are dispersed across platforms and jurisdictions, and rarely coalesce into a single, definitive alert for authorities.

This fragmentation isn’t unique to AI systems. Social platforms routinely remove content that hints at violence yet lacks the specificity—targets, timing, means—needed to justify an emergency disclosure. Trust and Safety teams must weigh civil liberties, false positives, and local laws against the duty to prevent harm. The Royal Canadian Mounted Police and municipal forces, meanwhile, often lack the real-time visibility needed to connect subtle online cues before violence occurs.

The Threshold Problem for AI Companies and Police Alerts

Most major platforms, including AI providers, set a high bar for contacting law enforcement: evidence of an imminent threat to life or safety. In Canada, privacy rules allow companies to disclose user information without consent when there are reasonable grounds to believe an emergency threatens someone’s life, health, or security. In practice, however, drawing the line is difficult. Over-reporting risks sweeping in nonviolent users and chilling speech; under-reporting risks missing genuinely dangerous behavior.

By comparison, child safety reporting has a formal backbone: U.S.-based providers are required by federal law to submit CyberTipline reports to the National Center for Missing & Exploited Children when they become aware of apparent child sexual exploitation. No equivalent, standardized pathway exists for vague or intermediate signals of violent ideation in general contexts. As a result, individual companies make case-by-case calls under intense uncertainty.

Regulatory Gaps and Emerging Rules for AI Providers

Policy is racing to catch up. Canada’s proposed Artificial Intelligence and Data Act seeks risk controls for high-impact AI systems but has not yet defined a universal duty to report user threats. In Europe, the Digital Services Act compels very large platforms to assess systemic risks like criminal misuse, and the EU AI Act introduces incident reporting obligations for high-risk AI. In the United States, emergency disclosure is permitted for imminent threats, but there is no AI-specific federal framework outlining when model providers should alert authorities.

Industry groups such as the Partnership on AI and the Frontier Model Forum have discussed voluntary incident-sharing and best practices for red teaming and misuse monitoring. Yet even robust internal guardrails don’t answer the hardest question raised by this case: when does troubling language in a private chatbot conversation cross from “concerning” into “reportable” without trampling privacy and due process?

What Needs to Change to Prevent Future AI Misuse

Experts in platform safety often point to three priorities. First, clearer, measurable criteria for “imminent threat” that reflect the realities of AI chat patterns, not just traditional social media posts. Second, a privacy-preserving mechanism for cross-platform signal sharing so scattered risk indicators can be responsibly stitched together before harm occurs. Third, standardized metrics in transparency reports covering emergency escalations and law-enforcement referrals, audited by independent bodies.

The Van Rootselaar case underscores how AI providers sit closer to early warning signals than many other tech services. But catching signals is not the same as proving intent. Until lawmakers and companies converge on a balanced standard, the sector will continue to navigate an uneasy middle ground—one where a flagged chat may be a cry for help, a dark fantasy, or a prelude to real violence, and the consequences of judging wrong in either direction are severe.

If you or someone you know is in crisis or considering self-harm, contact the 988 Suicide and Crisis Lifeline by call or text for confidential support.