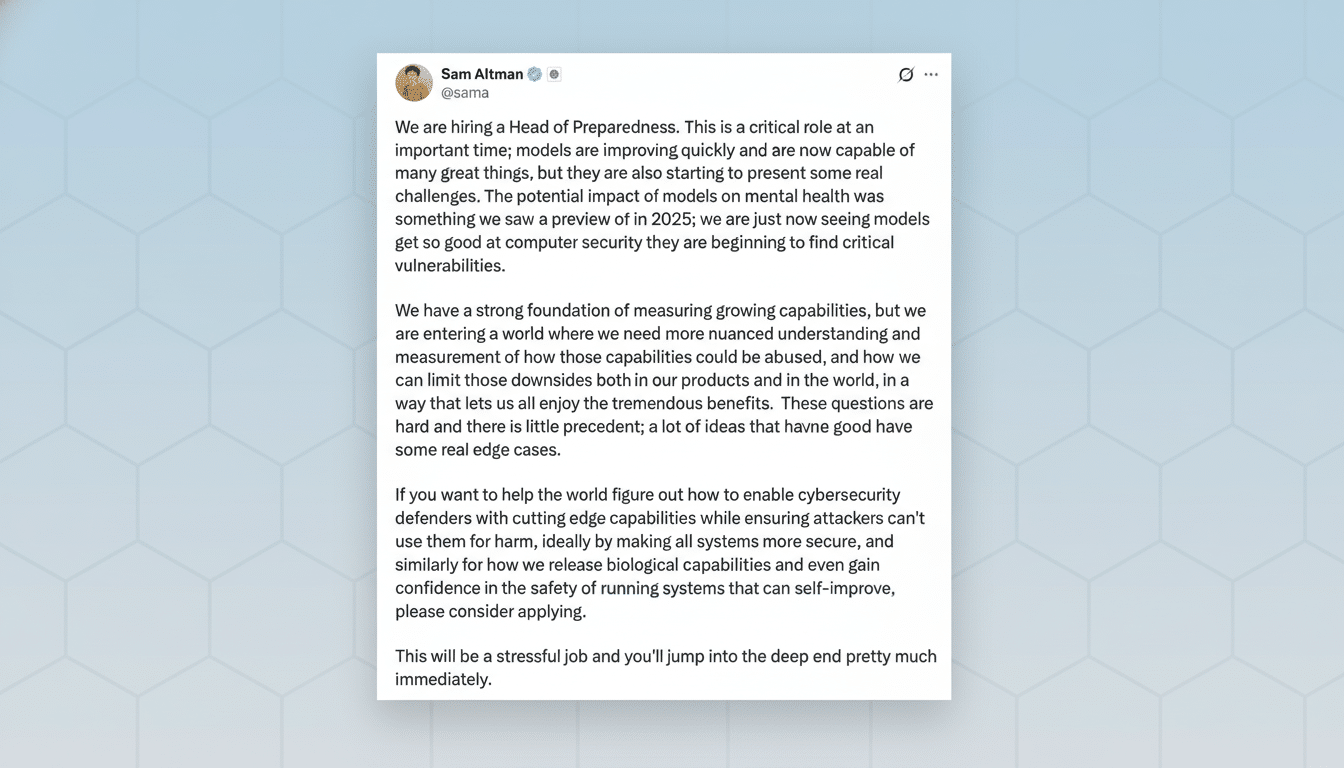

OpenAI is hiring a new Head of Preparedness, a senior executive who will be responsible for anticipating and preventing the worst ways that powerful AI systems might be misused. The move highlights the extent to which safety and risk management are beginning to be built into the development of frontier AI rather than treated as a bolt‑on function. CEO Sam Altman has described the role as high‑level and difficult, which suggests that the hire will face complex, high‑stakes work starting on day one.

What the Head of Preparedness Role Covers at OpenAI

Preparedness at a frontier laboratory typically involves three layers: model evaluation, product safety, and organizational response. On the evaluation side, expect tasks to range from red‑teaming for biosecurity and cyber misuse, to stress‑testing models to predict social harms such as radicalization or self‑harm enablement, to building metrics that signal when a system's capabilities have crossed risk thresholds.

On the product side, this typically includes crisis interventions and safe‑completion behavior, stronger identity verification and usage controls for sensitive domains, and provenance tools that curb AI‑generated misinformation.

At the organizational level, Preparedness drives tabletop exercises, incident response drills, third‑party audits, and cross‑company escalation protocols, in line with frameworks such as NIST's AI Risk Management Framework.

Why the Timing of OpenAI’s Preparedness Hire Matters

According to industry reporting, OpenAI has gone without a single dedicated preparedness lead for the better part of a year, after leadership reshuffles split the role among multiple executives. Re‑centralizing accountability signals an effort to fortify security operations as models scale and new capabilities emerge at an increasing pace.

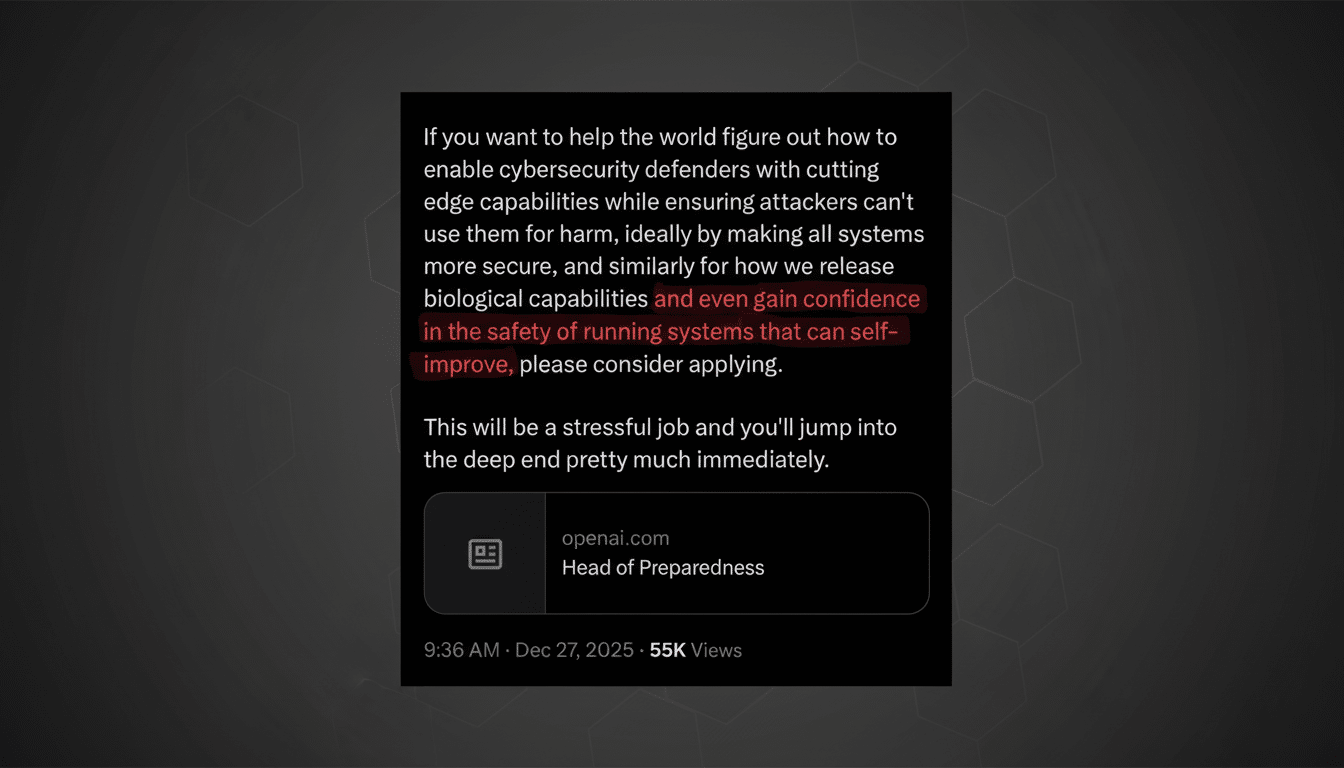

Altman has called the role both "critical" and, given its stakes, "stressful." Like any preparedness function, it must anticipate low‑probability, high‑impact events while shipping useful protections that work for millions of users. That tension, between abstract risk and the actual behavior of products in the field, is increasingly at the center of AI policymaking.

Recent lawsuits raise the stakes for AI safety at OpenAI

Recent wrongful‑death lawsuits have claimed that ChatGPT contributed to tragedies by encouraging delusional beliefs or failing to adequately deter requests about self‑harm. Though the facts will be debated in court, the cases illustrate a difficult problem: generative models can produce persuasive but harmful responses in high‑stakes areas where even a tiny number of failures is unacceptable.

Mature preparedness combines technical interventions (for example, refusal policies, retrieval‑augmented safety responses, and specialized crisis prompts) with monitoring that identifies unsafe conversations and routes them into safer flows. It also requires post‑incident analysis, much as aviation investigates near misses. That feedback loop is critical: when something breaks, the fix should measurably strengthen the system as a whole.

The broader safety and policy landscape around OpenAI

The role will also have to navigate a rapidly developing policy landscape. The UK's AI Safety Institute is releasing standardized capability and risk assessments for advanced models. In the United States, federal agencies are working to operationalize the White House's AI executive actions, and NIST's guidance is quickly becoming a de facto risk baseline for enterprise AI. The EU's AI Act, meanwhile, will impose new obligations on high‑risk and general‑purpose models.

OpenAI also contributes to multi‑stakeholder initiatives like the Frontier Model Forum, which has focused on sharing red‑team findings and incident reporting. A competent Head of Preparedness is likely to strengthen relationships with external labs, academia, and civil society to vet evaluations, compare benchmarks, and coordinate on responsible release practices.

Compensation and expectations for the OpenAI role

The job is based in San Francisco and lists a salary of $555,000 plus equity.

The listing also telegraphs a combination of experience and urgency: the hire will have to marry security discipline with product pragmatism, leading cross‑functional efforts and communicating risk to the company's executives and regulators alike.

Practical experience will count for more than abstract principles. Candidates who have run security operations centers, led incident response for cloud platforms, overseen medical or aviation safety programs, or shipped safety‑critical ML systems will have a significant leg up. Expect the mandate to reach beyond security controls and auditing functions to include scaling evaluations, addressing safety failures, and enforcing "ship gates" that tie releases to red‑team results.

What success looks like for OpenAI’s preparedness lead

Success will show up as fewer and less severe incidents, more accurate refusals in dangerous situations, transparent post‑mortems, and published evaluations that hold up under independent testing. It also requires clear thresholds for when to throttle, fine‑tune, or restrict features as capabilities mature.

OpenAI's call for a Head of Preparedness recognizes the obvious: as models grow more capable, the cost of getting safety wrong rises. The right leader won't just predict the edge cases; they'll establish the systems, culture, and accountability to catch them before they can harm users.