OpenAI has introduced GPT-5.3 Codex, a new agentic coding model that the company says supercharges its Codex coding agent. It arrived just minutes after Anthropic unveiled a competing system. The near-simultaneous launches show how fast the race to automate full-stack software work is moving, and how central “agentic” capabilities are becoming to AI strategy.

Inside GPT-5.3 Codex: features, speed, and workflow impact

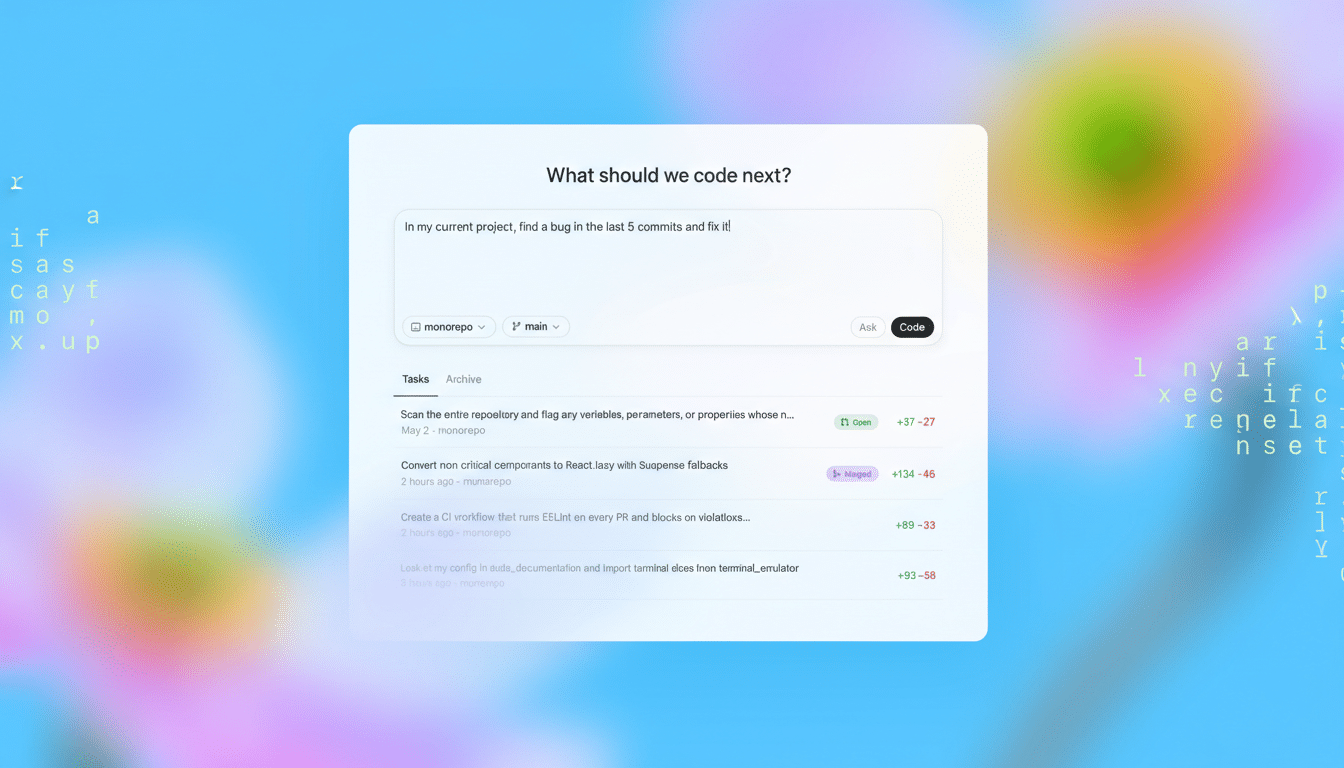

OpenAI positions GPT-5.3 Codex as a step-change from autocomplete and code review toward end-to-end task execution. According to the company, the model evolves Codex from a tool that can write and review code into an assistant that handles “nearly anything developers and professionals do on a computer,” from scaffolding multi-service apps to managing build systems, tests, and documentation.

OpenAI says internal testing shows GPT-5.3 Codex can build highly functional games and applications from scratch over multi-day runs, maintaining context and iterating across sessions. The company also claims a 25% speed improvement over GPT-5.2, which matters in interactive development loops, where every wait for the model breaks a developer's flow.

Notably, OpenAI describes GPT-5.3 Codex as “instrumental in creating itself.” Early versions were used to debug components and evaluate performance, a sign of how agentic systems are starting to assist with their own tooling. While self-assistance is not the same as full autonomy, it hints at a development workflow where models increasingly support data curation, test-harness generation, and regression triage.

Although OpenAI cites performance benchmarks, it puts the emphasis on long-horizon tasks: projects that span hours or days rather than a single prompt. That orientation matches the trend toward evaluation suites that test planning, tool use, and recovery from errors, not just snippet-level correctness.

A photo finish with Anthropic in the agentic coding race

The release timing highlights a tight contest. Both companies initially targeted the same launch window, with Anthropic moving its announcement up by 15 minutes to beat OpenAI to the punch. For buyers, the optics matter less than the rapid cadence of capability drops—but the choreography signals how fiercely the leading labs are competing for developer mindshare.

Any feature-by-feature lead is likely to be short-lived as both teams iterate. Expect OpenAI to lean on ecosystem reach and integrations, while Anthropic emphasizes steerability and safety-first behavior. In the near term, the winner may be decided by reliability on messy, real-world repositories rather than by benchmark bragging rights.

Why agentic coding matters for real-world development

Agentic coding systems aim to plan tasks, call tools, read and write files, run tests, and recover from errors with minimal hand-holding. That’s a departure from traditional assistants that stop at code suggestions. In practical terms, an agent might spin up a service, configure authentication, generate CI pipelines, write integration tests, and open pull requests—then iterate based on failing checks.
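To make that loop concrete, here is a minimal Python sketch of the plan, act, observe, and repair cycle described above. It is not how Codex or any vendor's agent is implemented; `run_agent`, `StepResult`, the tool names, and the `toy_repair` stand-in for a model call are all hypothetical. Each planned step is executed, a failure's output is fed back to a repair function, and the step is retried until it passes, the repair function gives up, or a retry budget runs out.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class StepResult:
    ok: bool
    output: str  # e.g. test-runner or compiler output fed back to the model


@dataclass
class AgentRun:
    history: list[str] = field(default_factory=list)
    succeeded: bool = False


def run_agent(
    plan: list[str],
    tools: dict[str, Callable[[], StepResult]],
    repair: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> AgentRun:
    """Run each planned step, feeding failures back to `repair` and retrying."""
    run = AgentRun()
    for step in plan:
        for attempt in range(max_attempts):
            result = tools[step]()
            status = "ok" if result.ok else "fail"
            run.history.append(f"{step}#{attempt + 1}: {status}")
            if result.ok:
                break
            # Hypothetical model call: propose and apply a fix from the failure output.
            if not repair(step, result.output):
                return run  # no fix proposed: stop and escalate to a human
        else:
            return run  # retry budget exhausted for this step
    run.succeeded = True
    return run


# Toy "repository" state and tools for the usage example.
state = {"bug_fixed": False}


def scaffold() -> StepResult:
    return StepResult(True, "created service skeleton")


def run_tests() -> StepResult:
    if state["bug_fixed"]:
        return StepResult(True, "3 passed")
    return StepResult(False, "AssertionError in test_auth")


def toy_repair(step: str, output: str) -> bool:
    # Stand-in for a model: it only knows how to fix one failure message.
    if "AssertionError" in output:
        state["bug_fixed"] = True
        return True
    return False


run = run_agent(
    ["scaffold", "run_tests"],
    {"scaffold": scaffold, "run_tests": run_tests},
    toy_repair,
)
print(run.succeeded, run.history)
# True ['scaffold#1: ok', 'run_tests#1: fail', 'run_tests#2: ok']
```

The retry budget and the early exit when no fix is proposed are the two points where a production setup would hand control back to a person instead of looping indefinitely.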

The business case is straightforward. Analyses from the McKinsey Global Institute estimate that generative AI could automate a meaningful slice of software-development activities, with potential productivity gains in the 20%–30% range for certain tasks. Meanwhile, recent developer surveys report that a majority of engineers already use or plan to use AI tools in their workflow, with interest shifting from autocomplete to agents that manage multi-step jobs.

If GPT-5.3 Codex delivers dependable long-horizon performance, the impact could stretch beyond writing application code: QA, DevOps, data pipelines, and even IT ticket resolution are all candidates for agentic patterns that coordinate multiple tools and systems.

Risks and guardrails for long-running autonomous agents

Agentic power raises new responsibilities. Long-running agents can drift from their original task, silently propagate insecure defaults, or leak secrets through logs if they are not sandboxed and monitored. Enterprises will look for execution isolation, least-privilege credentials, and clear audit trails that attribute every change to a specific human or agent step.
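A minimal Python sketch of two of these guardrails follows, assuming a hypothetical per-repository allowlist of binaries the agent may run. Every command is checked against the allowlist, secret-looking strings are redacted, and the decision is written as a JSON audit line that names the requesting actor. The regular expressions are illustrative and far from a complete secret scanner; `gate`, `ALLOWED_BINARIES`, and the actor labels are invented for the example.

```python
import json
import re
import shlex
from datetime import datetime, timezone

# Hypothetical least-privilege policy: the only binaries this agent may invoke.
ALLOWED_BINARIES = {"git", "pytest", "npm"}

# Illustrative secret shapes only; production systems need a dedicated secret scanner.
SECRET_PATTERNS = [
    re.compile(r"(?i)\b(?:api[_-]?key|token|password)\s*[=:]\s*\S+"),
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),  # format of an AWS access key ID
]


def redact(text: str) -> str:
    """Replace anything matching a secret pattern before it reaches a log."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def is_allowed(command: str) -> bool:
    """Allow a command only if its executable is on the allowlist."""
    try:
        parts = shlex.split(command)
    except ValueError:  # e.g. unbalanced quotes: refuse rather than guess
        return False
    return bool(parts) and parts[0] in ALLOWED_BINARIES


def gate(actor: str, command: str) -> tuple[bool, str]:
    """Decide whether to run `command` and return a JSON audit line for the decision."""
    allowed = is_allowed(command)
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "actor": actor,  # which human or agent step requested this
        "command": redact(command),
        "decision": "allow" if allowed else "deny",
    }
    return allowed, json.dumps(entry, sort_keys=True)


ok, line = gate("agent:step-7", "pytest -q --token=abc123")
print(ok, line)
```

Checking only the executable name is coarse: `git` alone can push to any remote, so a real policy would also constrain arguments, credentials, and network access, typically inside an isolated sandbox.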

Evaluation also needs to evolve. Beyond unit tests, teams should track end-to-end success rates, recovery from tool errors, and reproducibility across runs. Practices borrowed from software assurance, such as dependency pinning, deterministic builds, and policy enforcement, will be essential companions to any agentic rollout. Guidance such as NIST’s AI Risk Management Framework can help structure these controls.
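The three metrics named above can be computed from per-run records. The Python sketch below assumes a hypothetical `RunRecord` holding a task ID, a pass/fail outcome from the task's acceptance checks, and a count of failed tool calls. "Reproducibility" is defined here, as one simple choice among several, as the share of tasks whose repeated runs all reached the same outcome; the sample runs are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RunRecord:
    task_id: str
    succeeded: bool   # did the run pass the task's end-to-end acceptance checks?
    tool_errors: int  # number of tool calls that failed during the run


def summarize(runs: list[RunRecord]) -> dict[str, float]:
    if not runs:
        raise ValueError("need at least one run")
    # End-to-end success rate across all runs.
    success_rate = sum(r.succeeded for r in runs) / len(runs)
    # Recovery: of the runs that hit a tool error, how many still succeeded?
    # NaN signals "no run hit a tool error", which is different from 0% recovery.
    errored = [r for r in runs if r.tool_errors > 0]
    recovery_rate = (
        sum(r.succeeded for r in errored) / len(errored) if errored else float("nan")
    )
    # Reproducibility: share of tasks whose repeated runs all had the same outcome.
    outcomes: defaultdict[str, set[bool]] = defaultdict(set)
    for r in runs:
        outcomes[r.task_id].add(r.succeeded)
    reproducibility = sum(len(o) == 1 for o in outcomes.values()) / len(outcomes)
    return {
        "success_rate": success_rate,
        "recovery_rate": recovery_rate,
        "reproducibility": reproducibility,
    }


runs = [
    RunRecord("auth-service", True, 0),
    RunRecord("auth-service", True, 2),
    RunRecord("ci-pipeline", False, 1),
    RunRecord("ci-pipeline", True, 0),
]
print(summarize(runs))
# {'success_rate': 0.75, 'recovery_rate': 0.5, 'reproducibility': 0.5}
```

In this sample, one of the two runs that hit tool errors still succeeded, and only `auth-service` produced the same outcome on every run, which is why both of those metrics come out at 0.5.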

What to watch next as agentic coding enters production

Key questions remain: how GPT-5.3 Codex is priced relative to competing tools, how well it integrates with popular IDEs and ticketing systems, and how reliably it handles messy, undocumented codebases. With Anthropic launching minutes ahead and OpenAI answering in kind, the next phase will be less about headlines and more about sustained delivery on real repositories, service-level guarantees, and measurable developer outcomes.

For now, OpenAI’s move signals a clear direction: coding assistants are graduating from suggestions to software agents. If the claimed speed and scope hold up under production load, agentic development may move from promising demos to standard practice faster than many teams expect.