OpenAI has lined up a tentative deal with Samsung Electronics and SK Hynix to supply high-bandwidth memory (HBM) for the Stargate initiative, a bold attempt to corner one of the scarcest components in modern AI computing. The letters of intent cover production of DRAM wafers for HBM packages and cooperation on new data centers in South Korea, putting the two leading memory suppliers at the center of one of the industry’s most aggressive AI infrastructure builds. The parties aim to begin collaborating by November 15.

The agreement came after a high-level gathering in Seoul that included OpenAI’s Sam Altman, South Korea’s President Lee Jae-myung, Samsung executive chairman Jay Y. Lee, and SK chairman Chey Tae-won. OpenAI says Samsung and SK Hynix will work to ramp production to as many as 900,000 HBM DRAM units per month in support of Stargate and other AI data centers—a figure that SK Group claims is more than twice today’s industry capacity.

- Why memory is the new AI bottleneck at massive scale

- Inside the Stargate supply strategy for AI data centers

- What 900,000 HBM units a month means for GPUs

- South Korea’s strategic role in AI memory and data centers

- Risks and competitive dynamics shaping HBM and GPUs

- What to watch next as Stargate supply plans take shape

Why memory is the new AI bottleneck at massive scale

At frontier scale, AI training and inference performance is increasingly limited by memory bandwidth and capacity, not just raw compute. Leading accelerators such as Nvidia’s H200 and AMD’s MI300X get their throughput by pairing the GPU with stacks of HBM (HBM3E on the H200, HBM3 on the MI300X), each stack built from DRAM dies linked vertically by thousands of through-silicon vias (TSVs), which together deliver terabytes per second (TB/s) of bandwidth to model parameters and activations. Without enough HBM, even the fastest silicon sits idle.

That has tipped the negotiating balance toward DRAM makers that can demonstrate solid yields in advanced HBM. According to industry trackers such as TrendForce, SK Hynix has led HBM shipments over the last few quarters, while Samsung is catching up with its HBM3E ramp and Micron is already moving into the latest generation. By partnering with both Korean heavyweights, OpenAI is trying to avoid a single-supplier choke point just as model sizes and context windows grow.

Inside the Stargate supply strategy for AI data centers

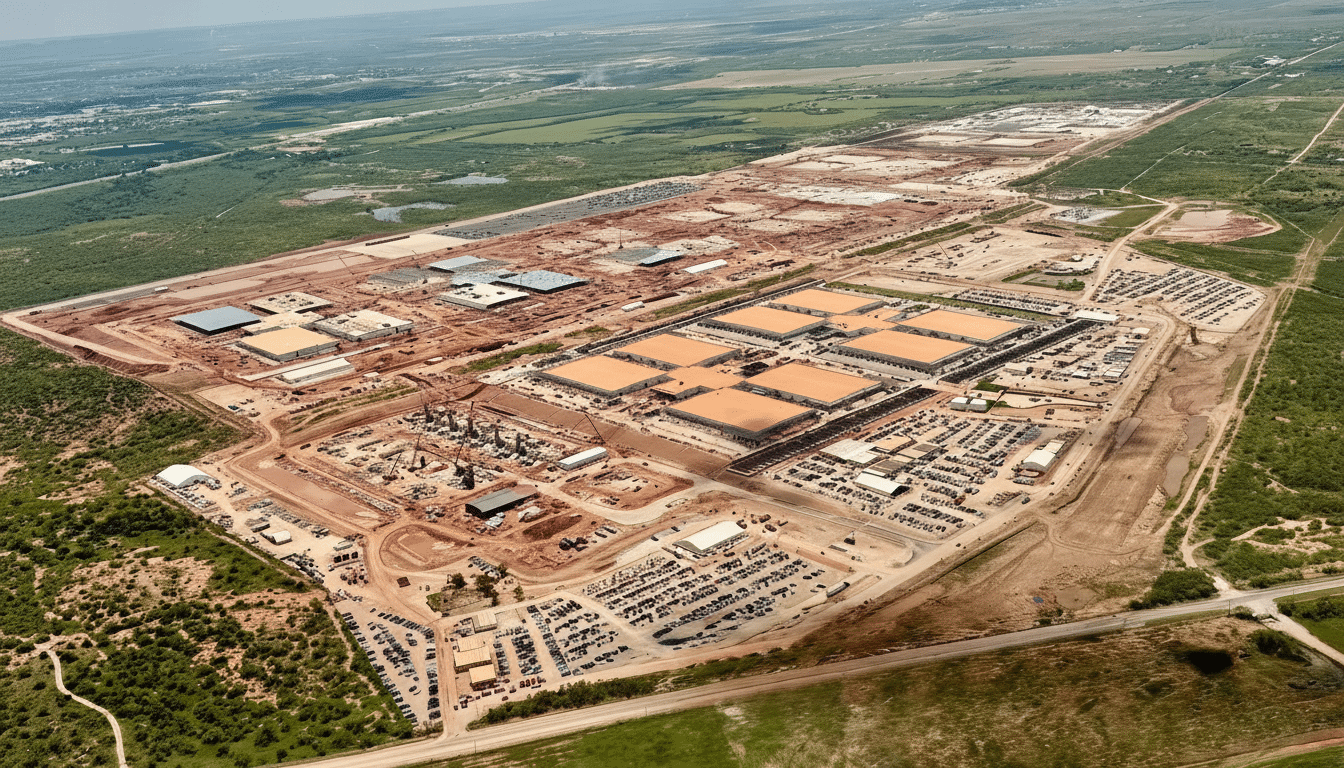

Stargate, led by OpenAI with Oracle and SoftBank as partners, is conceived as a multi-hundred-billion-dollar buildout of AI-first data centers. Company announcements and industry reporting point to historic commitments across the stack: Oracle booking massive multi-year compute contracts, Nvidia signaling heavy investment alongside deployments of AI systems totaling 10 gigawatts, and OpenAI announcing new facilities as part of an effort to push its total capacity to levels measured in gigawatts.

Such ambitions are only plausible with simultaneous advances in power, cooling, networking, and memory. The move also signals to the wider supply chain, TSMC included, that OpenAI is planning far ahead. By booking HBM supply early and directly with the manufacturers, OpenAI cuts lead-time risk that can already exceed a year once wafer starts, TSV stacking, packaging, and final test are factored in. Early booking also gives Samsung and SK Hynix a demand signal to invest in new HBM lines and advanced packaging.

What 900,000 HBM units a month means for GPUs

HBM is counted in stacks, and typical high-end accelerators carry six to eight stacks each. Reading the 900,000 monthly units as stacks, this back-of-the-envelope math works out to roughly 110,000 to 150,000 accelerators per month, depending on configuration and yield. Even if only a fraction of the wafer supply goes to Stargate, the figure shows the scale OpenAI is planning for so that future training cycles do not stall for lack of memory.
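The stacks-to-accelerators arithmetic can be sketched in a few lines. This is a minimal illustration, assuming each of the 900,000 monthly units is one HBM stack and that every stack goes into an accelerator; the function name and the `yield_rate` parameter are hypothetical, added only to show where yield loss would enter the calculation.

```python
# Back-of-the-envelope sketch: how many accelerators a monthly HBM stack
# supply could populate. Assumes each "unit" is one HBM stack and ignores
# binning, spares, and demand from non-Stargate customers.

MONTHLY_STACKS = 900_000  # headline figure, read here as stacks per month


def accelerators_supported(stacks: int, stacks_per_accelerator: int,
                           yield_rate: float = 1.0) -> int:
    """Return the whole number of accelerators `stacks` could populate.

    `yield_rate` is a hypothetical fraction of stacks that pass final test.
    """
    if stacks_per_accelerator <= 0:
        raise ValueError("stacks_per_accelerator must be positive")
    if not 0.0 < yield_rate <= 1.0:
        raise ValueError("yield_rate must be in (0, 1]")
    usable = int(stacks * yield_rate)          # stacks that survive test
    return usable // stacks_per_accelerator    # only complete accelerators count


if __name__ == "__main__":
    for per_gpu in (8, 6):
        n = accelerators_supported(MONTHLY_STACKS, per_gpu)
        print(f"{per_gpu} stacks/accelerator -> {n:,} accelerators/month")
```

With eight stacks per accelerator the script prints 112,500, and with six it prints 150,000, which brackets the range quoted above. Lowering `yield_rate` shows how quickly test losses shrink that range.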

Beyond raw volume, the technology curve matters. HBM3E is the current workhorse, enabling more than 4 TB/s per GPU package in leading-edge systems, while HBM4, expected around mid-decade, should deliver even higher bandwidth at lower voltages. Ramping successive HBM generations without yield cliffs is as strategic as booking supply today.
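A package-level figure such as 4 TB/s comes from simple multiplication: peak bandwidth per stack is the interface width times the per-pin data rate, divided by eight bits per byte, and the package total is that figure times the stack count. The sketch below assumes a 1024-bit interface per stack, as used by HBM generations up to HBM3E; the pin rates are illustrative assumptions, not vendor specifications, and shipping products often run below these peaks.

```python
# Sketch: peak HBM bandwidth per stack and per accelerator package.
# Assumptions (illustrative, not vendor specs): a 1024-bit interface per
# stack and hypothetical per-pin data rates in Gb/s.


def stack_bandwidth_gb_s(interface_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak bandwidth of one stack in GB/s: bits x Gb/s per pin / 8 bits per byte."""
    return interface_bits * pin_rate_gbit_s / 8


def package_bandwidth_tb_s(stacks: int, interface_bits: int,
                           pin_rate_gbit_s: float) -> float:
    """Aggregate peak bandwidth of a package in TB/s (decimal: 1 TB = 1000 GB)."""
    return stacks * stack_bandwidth_gb_s(interface_bits, pin_rate_gbit_s) / 1000


if __name__ == "__main__":
    # Hypothetical configurations: six or eight stacks at two assumed pin rates.
    for stacks in (6, 8):
        for pin_rate in (6.4, 9.6):
            bw = package_bandwidth_tb_s(stacks, 1024, pin_rate)
            print(f"{stacks} stacks @ {pin_rate} Gb/s/pin -> {bw:.2f} TB/s")
```

Even the lower assumed pin rate puts a six-stack package at about 4.9 TB/s of theoretical peak, which is consistent with the "more than 4 TB/s" figure; a wider interface or faster pins in later generations raises the total in the same linear way.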

South Korea’s strategic role in AI memory and data centers

Among the memorandums signed are a commitment to work with the Korean Ministry of Science and ICT on selecting AI data center sites outside the Seoul Capital Area and a separate partnership with SK Telecom on an AI center. OpenAI will also work with Samsung affiliates on data center projects. SK Group and Samsung will deploy ChatGPT Enterprise and OpenAI APIs across their own organizations, tightening an operational bond that goes beyond supplying components.

The deals validate, at the national level, South Korea’s strategy of anchoring the AI value chain on its shores. By linking memory leadership to data center investment, the country can capture a larger share of the economics of accelerated computing rather than only upstream component revenue. Institutions such as the Korea Development Institute have noted that clustering fabs, packaging plants, and cloud operations can yield additional productivity gains.

Risks and competitive dynamics shaping HBM and GPUs

Memory is only one constraint. Across the industry, co-packaged optics, high-layer-count substrates, and advanced packaging capacity such as 2.5D interposers are “very tight,” according to the source. A misstep at any of these stages can erode the advantage of additional HBM. Geopolitics, export regulations, and power availability near data center sites also add execution risk for all parties.

On competition, Samsung and SK Hynix are racing to string together design wins as Micron’s latest HBM3E production moves into volume. We believe that winning next-generation GPU qualifications can shift share quickly when hyperscalers pivot to a new technology. OpenAI’s multivendor strategy hedges against that risk while also giving it leverage on pricing and delivery schedules.

What to watch next as Stargate supply plans take shape

Key indicators will be Samsung and SK Hynix capex guidance tied to new HBM lines, confirmation of packaging partnerships, and clarity on Stargate’s first-wave data center locations and power envelopes. Also watch OpenAI’s model training cadence: sustained release velocity will be the tell that memory, compute, and networking pipelines are flowing in harmony.

If successfully executed, the Samsung–SK Hynix alignment provides OpenAI with a plausible route to lock down its memory bandwidth needs for the next generation of AI systems—turning an Achilles’ heel into a moat for Stargate.