OpenAI and Microsoft have agreed on a revised partnership centered on artificial general intelligence, pairing a governance overhaul at OpenAI with expanded, longer-term rights for Microsoft. The deal resolves months of speculation about control, incentives, and who gets to declare that a system has crossed the AGI threshold.

Recapitalization creates nonprofit foundation and PBC unit

Central to the partnership is a recapitalization, approved by both companies, that formally creates two arms: the OpenAI Foundation, a nonprofit that now controls the enterprise, and a public benefit corporation (PBC), OpenAI Group, which houses the commercial operations. The Foundation holds equity in the PBC valued at roughly $130 billion, or approximately 26 percent. Microsoft’s stake is worth roughly $135 billion, or approximately 27 percent on an as-converted diluted basis. The Foundation has also committed an initial $25 billion to work on curing diseases and on AI safety and resilience, an effort to tie the financial upside directly to broad public benefit.
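The stake figures above imply a total valuation for the PBC that the article does not state directly. As a rough check, the sketch below divides each quoted stake value by its quoted ownership share. The function name `implied_valuation` and the `stakes` table are illustrative only, and because the inputs are rounded ("roughly", "approximately"), the result is an approximation rather than a reported figure.

```python
# Figures as quoted in the article: (stake value in USD billions, ownership share).
stakes = {
    "OpenAI Foundation": (130.0, 0.26),
    "Microsoft": (135.0, 0.27),
}


def implied_valuation(stake_value_bn: float, ownership: float) -> float:
    """Return the total valuation implied by a stake's value and its share."""
    if not 0 < ownership <= 1:
        raise ValueError("ownership must be in (0, 1]")
    return stake_value_bn / ownership


# Derived, not reported: total PBC valuation implied by each stake.
implied = {name: implied_valuation(v, p) for name, (v, p) in stakes.items()}
for name, total in implied.items():
    print(f"{name}: implied PBC valuation of about ${total:.0f}B")

# Share of the PBC not accounted for by these two holders.
remaining_share = 1.0 - sum(p for _, p in stakes.values())
print(f"Share held by others: about {remaining_share:.0%}")
```

Both stakes point to the same implied valuation of about $500 billion, which suggests the two sets of figures are internally consistent, and they leave roughly 47 percent of the PBC with other holders.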

Microsoft remains OpenAI’s key frontier model partner, keeping Azure’s exclusivity for access to OpenAI’s frontier-model APIs and retaining long-term intellectual property rights over models and products developed in collaboration, including post-AGI models. Those rights run through 2032, reflecting the long horizon of AI development and deployment.

Governance, verification, and IP rights terms through 2032

To manage the risk of a disputed claim, any AGI declaration by OpenAI now requires verification by an independent expert panel. The agreement also gives both sides room to move: OpenAI can develop products with third parties, and Microsoft can pursue AGI with other partners or independently. Microsoft’s IP rights do not cover consumer hardware, leaving OpenAI free to work with other device makers and on non-Microsoft platforms.

The companies also stressed that, from a customer standpoint, nothing changes day to day: Azure remains the primary cloud for OpenAI models, and developers get long-term clarity on IP and model availability as the systems mature. The recapitalization shifts OpenAI’s center of gravity toward the nonprofit Foundation, which controls the PBC and holds an economic interest in it. The governance structure is designed to ensure that for-profit scale does not overshadow the mission of distributing AI-related gains equitably. The nonprofit’s value will grow over time, the board chair has stated, and that money is meant to sustain significant public-interest projects over the long term rather than to deliver short-term returns.

Structurally, it is a significant experiment: few AI labs have tried to tie a profit-generating engine to a mission lock-in of this size. For investors and regulators who have hesitated over alignment and control issues, the independent AGI verification and nonprofit oversight aim to reduce such conflicts just as technical performance improves.

Research agenda aims to accelerate automated discovery

In a livestream, CEO Sam Altman and Chief Scientist Jakub Pachocki outlined a research agenda geared toward automating scientific discovery. They said current systems can already handle multi-hour research tasks and projected compounding advances: internal timelines target an AI system with research-intern-level capability around 2026 and a more fully automated AI researcher around 2028.

OpenAI hopes that, if progress continues, early “small” discoveries will appear within the next few years and “medium” breakthroughs soon after. The core idea is to use AI to accelerate science across biology, materials, and software, with AI systems themselves generating new knowledge. The company’s roadmaps are bold, but they reflect a pattern seen across labs: larger, longer-trained models combined with tool use, retrieval, and agentic planning.

Safety risks, security measures, and infrastructure needs

OpenAI outlined planned security measures intended to cover small, corporate, and public organizations. These span value alignment, mission definition, precision, adversarial resilience, reduction in system risk, and program principles.

Key security measures outlined by OpenAI

- Value alignment

- Mission definition

- Precision

- Adversarial resilience

- Reduction in system risk

- Program principles

The issue isn’t theoretical: as models improve, they become both more helpful and more susceptible to misuse, so evaluation frameworks and red-teaming become as crucial as training.

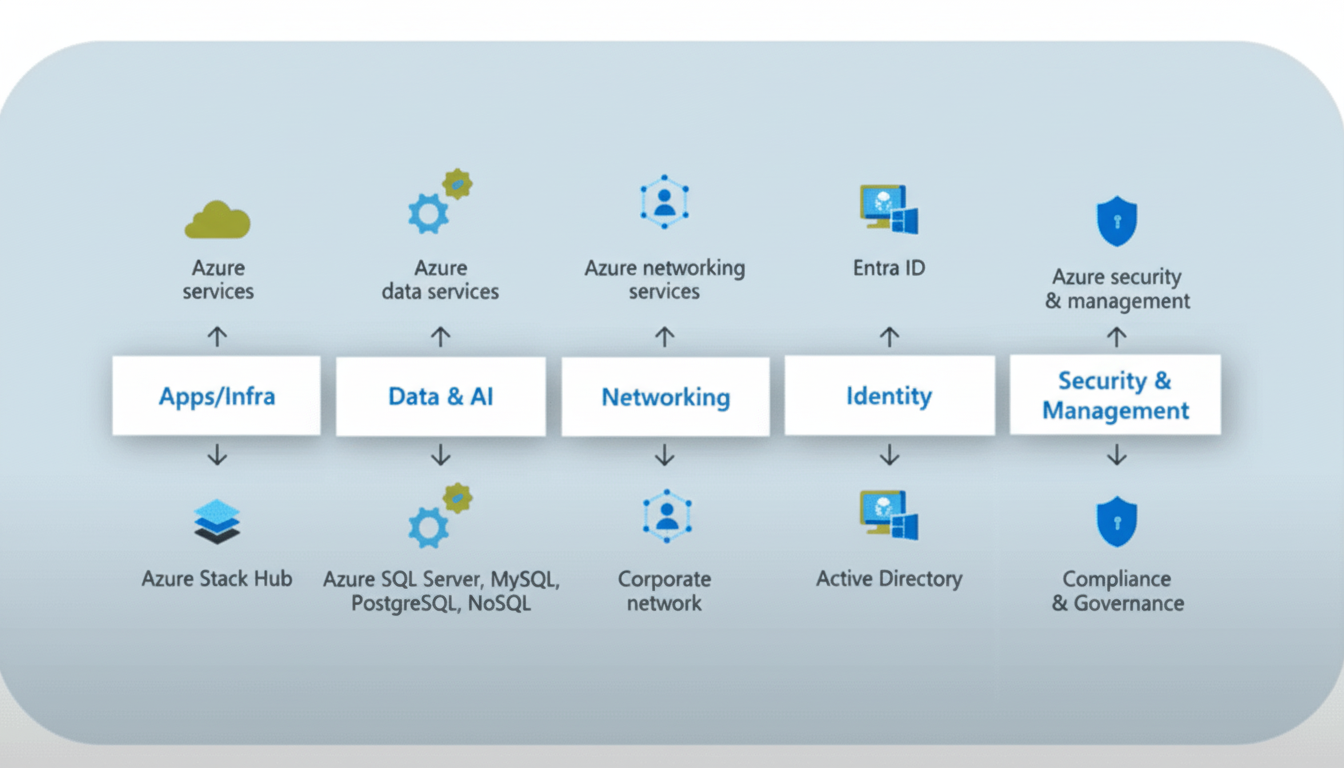

In addition, OpenAI warned that it will need more energy, infrastructure, and data centers. Microsoft has invested tens of billions of dollars in AI-related infrastructure, and this agreement commits both companies to sustaining that level of investment.

Infrastructure and compute expansion commitments

- Servers

- High-density GPU deployments

- Next-generation interconnects

- Heat- and water-conserving facilities

Implications for enterprises, developers, and regulators

For enterprises, the message is continuity with clearer guardrails: Azure remains the primary gateway to OpenAI’s frontier models, and the IP roadmap through 2032 reduces uncertainty around deploying AI in regulated workflows. For the AI ecosystem, the fact that both partners can engage third parties hints at a more open competitive field than a pure exclusivity pact would allow.

Context matters here. Rival labs are openly chasing superintelligence; US, UK, and EU regulators are watching structural ties between major AI developers and cloud providers. By tying AGI claims to independent verification and concentrating control in a nonprofit, OpenAI and Microsoft are betting that rigorous governance can coexist with rapid capability gains. The upshot is straightforward: the two most closely watched players in generative AI just renewed their vows around AGI, delivering a structure designed to push the science forward while distributing benefits and risks more deliberately. If their timelines prove even directionally right, the next few years of automated research may matter as much as any single model release.