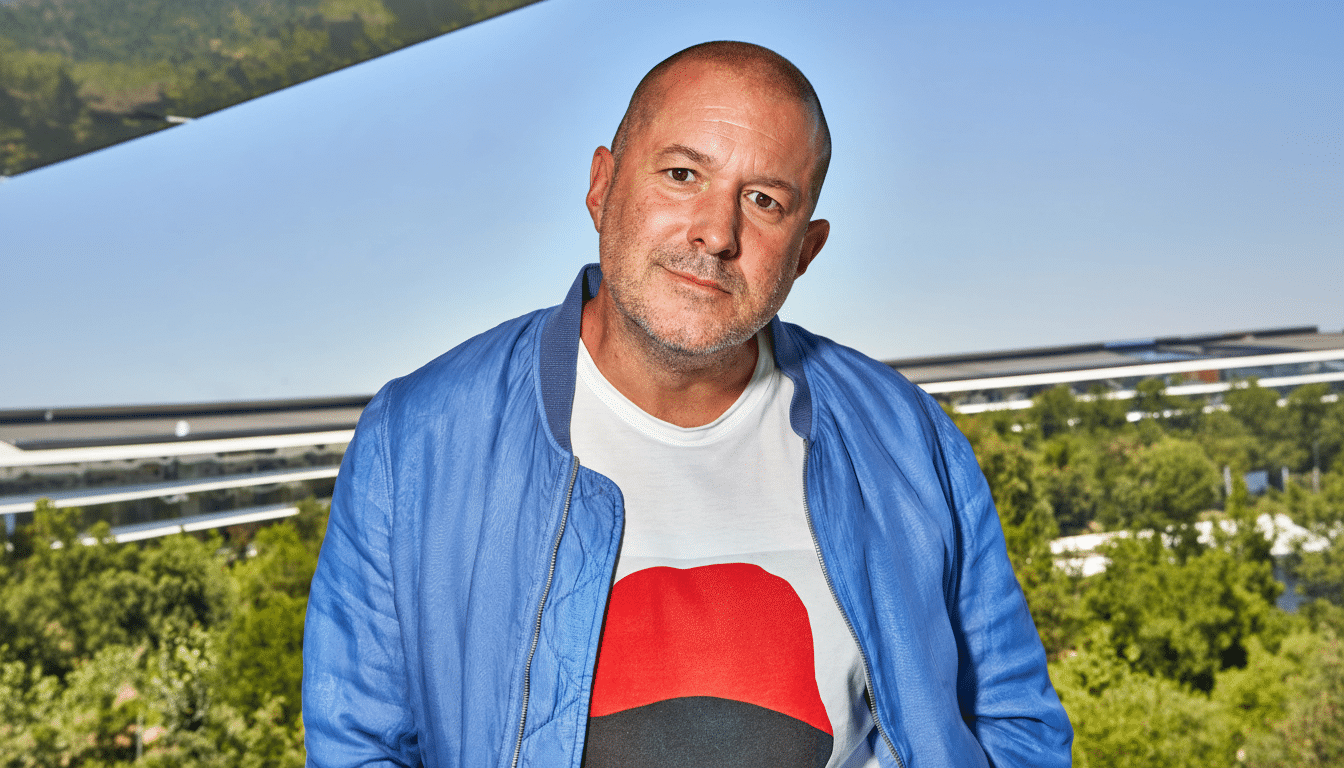

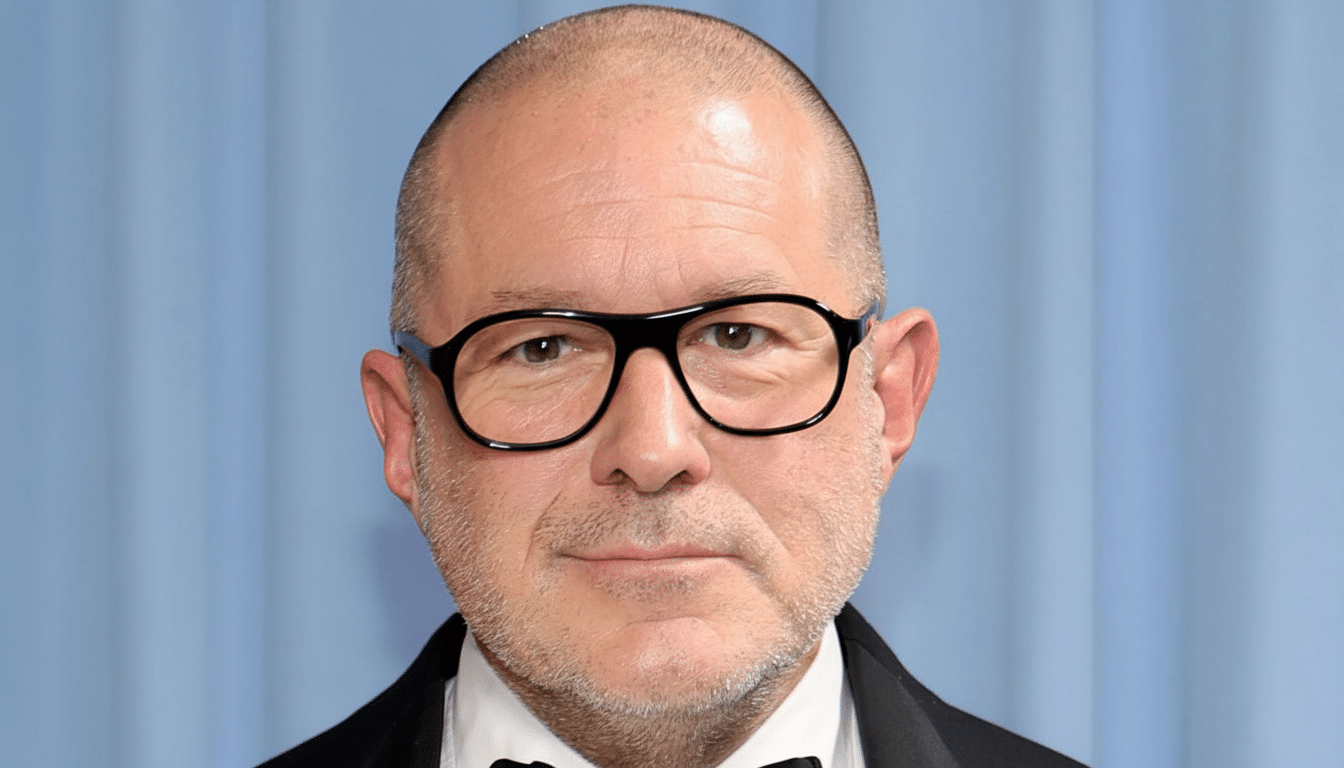

OpenAI’s high-profile hardware collaboration with Jony Ive is getting tripped up on thorny design and engineering questions, the Financial Times reports, chief among them how to make a screenless, always-listening AI device feel useful and trustworthy without being costly to run. An earlier report from Bloomberg said the device isn’t set to launch until 2026, and pending decisions about how it should behave, what its privacy model looks like, and which compute stack it runs on could cause that timeline to slip.

A Screenless Vision, A Real-World Friction

The idea is audacious: a palm-sized object that listens to and looks at the world, speaks up when it judges we need help, and recedes when we don’t. It sits at the intersection of industrial design, human-computer interaction, and frontier AI. That is also where past efforts have stumbled, whether over false wake-word activations or clumsy handoffs when assistants misread intent.

Unlike a phone, a screenless assistant can’t fall back on visual affordances: no status bar, no notification shade, no “are you sure?” prompts. Designers will need to encode personality, turn-taking, and boundaries into tone, timing, and subtle cues. If it talks too much, it’s annoying; if it talks too little, it’s pointless.

Always on without being constantly annoying to users

People familiar with the project told the Financial Times that the team is working on an “always on” model, which is substantially more challenging than one built around a wake word. Such an assistant must determine when a conversation starts and stops, when users are speaking to someone else, and how to exit politely. Telecom guidance such as ITU-T G.114 reminds us that just a few hundred milliseconds of delay can disrupt conversational flow; now try managing that alongside overlapping speech, background noise, and sensitive content.

There are instructive precedents. Smart speakers from major platforms have grappled with unintended activations in loud rooms and with barge-in timing when more than one person is talking. Wearables have surfaced the same pain points: if an assistant butts into a meeting or pipes up again after being dismissed, users stop trusting it. Getting the “attention budget” right is not a matter of polish; it is a matter of product viability.

Privacy and trust are make-or-break for this device

It’s a steep trust curve, and any device that is constantly listening and looking around with cameras starts near the bottom of it. Consumers are by now all too aware of smart devices capturing recordings by mistake, and regulators have less patience than they did a few years ago. Under GDPR and CPRA, consent, minimization, and purpose limitation are not optional; they have to be architected into the silicon, firmware, and data flows.

That would presumably push the team toward on-device processing for hotword detection, scene triage, and redaction, with higher-level tasks like transcription sent to the cloud. Recent architectures from Apple and Google pair a low-power sensing hub with an on-device neural engine to keep as much data as possible local. A comparable hybrid design here would improve privacy and latency, but it complicates hardware decisions, thermal management, and cost.
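To make the hybrid split concrete, here is a minimal sketch of how such routing might look. Everything in it is an assumption for illustration: the task labels, the `Request` type, and the consent flag are hypothetical and do not describe any known OpenAI, Apple, or Google design.

```python
from dataclasses import dataclass

# Hypothetical task labels; the split mirrors the paragraph above,
# not any confirmed product architecture.
ON_DEVICE_TASKS = {"hotword_detection", "scene_triage", "redaction"}


@dataclass
class Request:
    task: str                      # e.g. "redaction" or "transcription"
    user_consented_to_cloud: bool  # assumed per-user privacy setting


def route(request: Request) -> str:
    """Return 'device', 'cloud', or 'blocked' for a single request."""
    if request.task in ON_DEVICE_TASKS:
        return "device"            # sensitive triage never leaves the device
    if request.user_consented_to_cloud:
        return "cloud"             # heavier tasks go out only with consent
    return "blocked"               # no consent, no upload


print(route(Request("redaction", False)))       # handled locally
print(route(Request("transcription", True)))    # sent to the cloud
print(route(Request("transcription", False)))   # refused
```

The point of the sketch is that consent and minimization sit in the routing path itself rather than in a settings page bolted on afterward.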

Compute, battery, and cost pressures challenge viability

Modern AI inference is economically brutal. Analyses from a16z and others have shown that serving large multimodal models can cost several cents per thousand tokens, so daily real-time voice sessions add up quickly for each heavy user if the computing is done in the cloud. At scale, that’s not tenable without aggressive model distillation, caching, or new silicon.
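A back-of-the-envelope calculation shows how quickly this compounds. The sketch below uses purely illustrative inputs: the minutes of use, the token rate, and the 3-cent price are assumptions chosen to sit within “several cents per thousand tokens,” not figures from any report.

```python
def daily_cloud_cost_usd(minutes_per_day: float,
                         tokens_per_minute: float,
                         usd_per_1k_tokens: float) -> float:
    """Estimate one user's daily cloud inference cost in US dollars."""
    tokens = minutes_per_day * tokens_per_minute
    return tokens / 1000 * usd_per_1k_tokens


# All three inputs are hypothetical assumptions for illustration only.
cost = daily_cloud_cost_usd(minutes_per_day=60,
                            tokens_per_minute=500,
                            usd_per_1k_tokens=0.03)
print(f"per day: ${cost:.2f}, per 30-day month: ${cost * 30:.2f}")
```

Under these assumed inputs, a single heavy user costs about $0.90 a day, or roughly $27 a month, before any hardware margin is considered.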

On-device acceleration trims cloud bills but stresses battery and thermals. A pocket-sized device has little room for a battery or for sustained cooling. Even a small sustained compute load can generate perceptible warmth and drain the battery quickly. Qualcomm’s and Apple’s low-power AI engines point to a path forward, but matching the responsiveness of state-of-the-art chat models within an ultra-compact thermal budget is a frontier problem.
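The battery trade-off can be sketched with simple duty-cycle arithmetic. The capacity and power draws below are hypothetical placeholders, not specifications of any real or rumored device.

```python
def runtime_hours(battery_wh: float, idle_w: float,
                  active_w: float, active_fraction: float) -> float:
    """Battery life given the share of time spent on active inference."""
    average_w = idle_w * (1 - active_fraction) + active_w * active_fraction
    return battery_wh / average_w


# Assumed values: a 4 Wh cell, 0.1 W while listening, 2 W while inferring.
for fraction in (0.05, 0.10, 0.50):
    hours = runtime_hours(4.0, 0.1, 2.0, fraction)
    print(f"active {fraction:.0%} of the time: {hours:.1f} h")
```

With these assumed numbers, moving from occasional to frequent on-device inference cuts runtime from most of a day to a few hours, which is why how often the assistant “thinks” is as much a battery decision as a design one.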

How Do You Engineer A Personality That Users Will Tolerate?

The Financial Times report also notes an internal debate over the “personality”: how the assistant sounds, when it takes initiative, and how it admits uncertainty. Consumer studies have indicated that users penalize overconfidence and appreciate concise, context-aware assistance. And Voicebot’s consumer surveys have tracked the relative stagnation in smart speaker engagement, a warning that people tire of novelty if assistants do not keep proving their value.

A credible approach would put humility and transparency front and center: brief, helpful interjections; clear handoffs to phone or desktop for complicated tasks; and visible, physical privacy controls. Tactile design details, such as mute switches with mechanical feedback, an LED light language, and haptics, can make unseen computation feel legible and respectful.

Recent lessons from AI hardware efforts and launches

Recent releases offer cautionary tales about the category’s pitfalls. A screenless wearable that launched with cloud-heavy processing suffered from lag, battery-life issues, and uncertain real-world value, and was met with lukewarm adoption. An AI gadget pitched as a pocket sidekick and phone alternative generated early buzz but had fundamental reliability issues. Meta’s camera glasses, however imperfect, found a more focused use case by leaning into hands-free capture and lightweight assistance without promising to replace the phone.

The takeaway is pragmatic scope. Begin with a few jobs-to-be-done—memory aids, hands-free capture, or proactive reminders based on location and calendar—then earn the right to do more. Overpromising AGI on a little device sets you up for disappointment.

What To Watch For As The Project Evolves

Key signals will be whether the team commits to a hybrid compute model, how explicit its privacy controls are, and whether the product narrative narrows to a few high-frequency behaviors.

Should Bloomberg’s 2026 timeline be accurate, expect a year of behind-the-scenes experiments to dial in focus, latency, and battery life before anything public-facing appears.

The partnership marries one of the most prominent AI companies in the world with a designer who created several iconic consumer hardware products. The ambition is real. So are the constraints. The battle to turn ambient AI from a disembodied marketing term into a beloved, screenless product will likely depend on thousands of small, disciplined choices rather than any single breakthrough, and that appears to be precisely where the struggle is playing out.