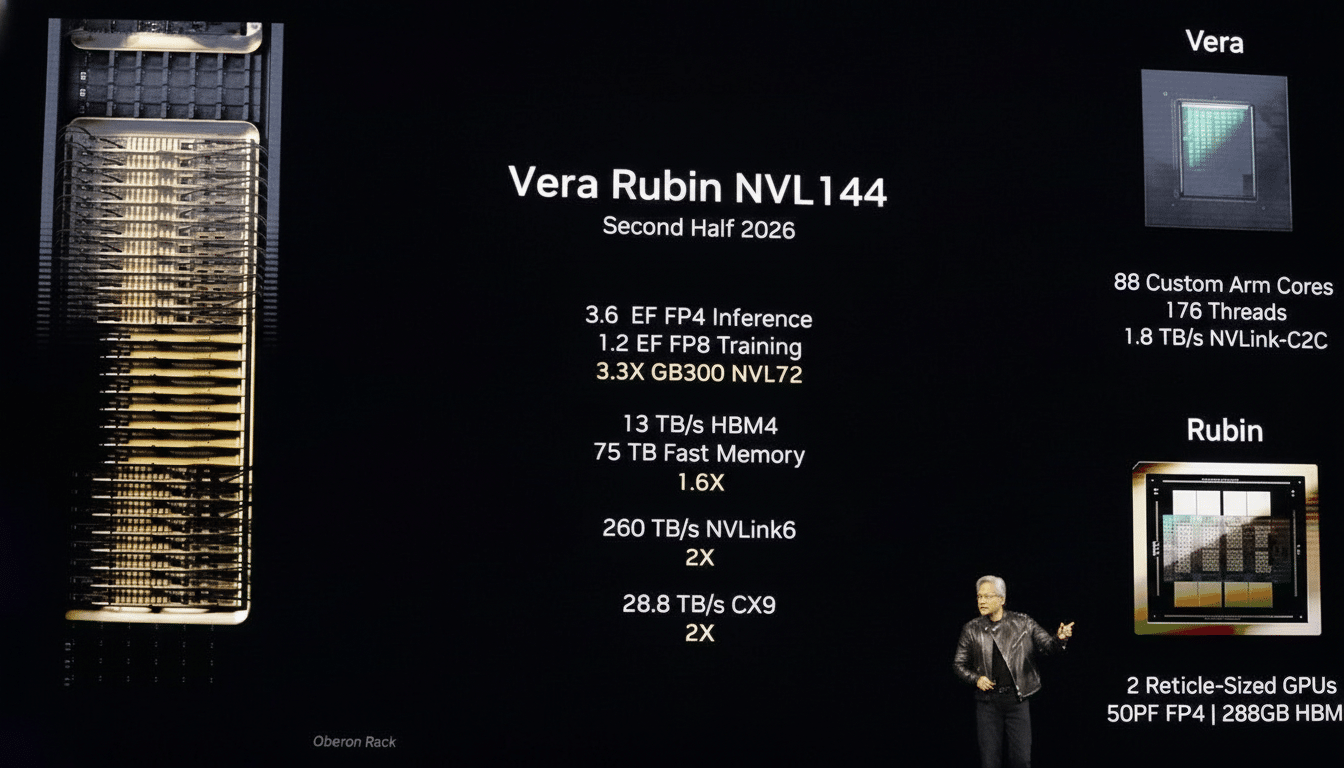

Nvidia additionally utilized CES to announce that its next AI platform, Vera Rubin, was on schedule for release later this year, sharpening the company’s standing in hyperscalers’ search for building greater and more efficient AI systems. Here are four things that matter most about the announcement — and why enterprise buyers, researchers and cloud providers are scanning it for clues.

What Vera Rubin Is: Nvidia’s Next Six-Chip AI Platform

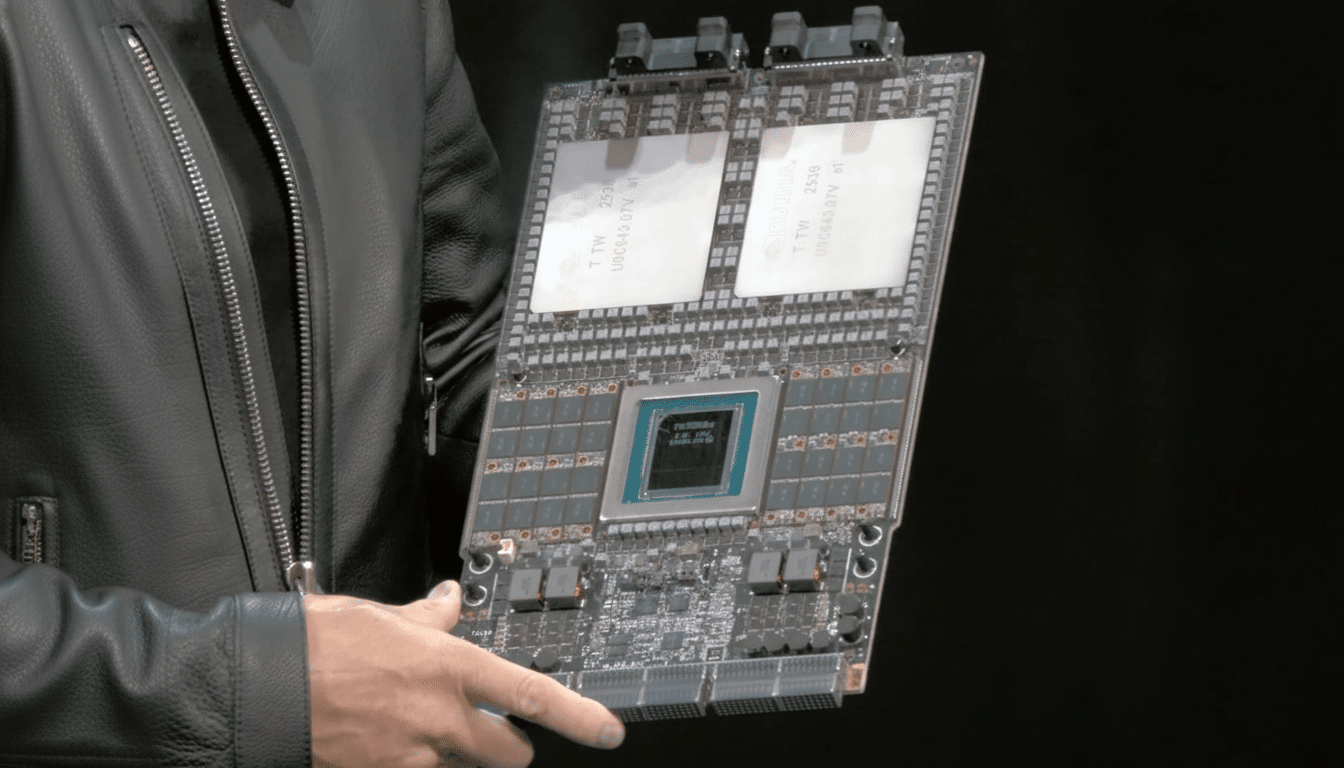

Vera Rubin is a six-chip platform based on what AMD calls a new “superchip” marrying one Vera CPU and two Rubin GPUs onto a single module. The design is textbook Nvidia co-design: pair CPU and GPU silicon, optimize interconnects and memory, package it up as a building block for AI factories. Practically, that translates to fewer integration pain points and greater utilization for workloads that have a mix of training and inference.

Superchips enabled hyperscalers to build colossal clusters more quickly, an urgent priority for companies like Microsoft, Google, Amazon and Meta that together have invested tens of billions of dollars in AI infrastructure. Yahoo Finance observes that these operators are migrating to turnkey systems that shorten time to value — an area where Nvidia has become a major player with the shipping of complete compute, networking and software stacks.

Why It Matters for AI Scale: Training and Inference

The demand curves for both training and inference are thickening at once. Foundation models continue to increase the number of parameters and will soon support an expanded context window. Inference through to production has also switched from pilot to always-on services. At Goldman Sachs, analysts projected that AI-related capex would eclipse $200B by the middle of the decade, with most spend for compute and advanced packaging. And a new platform showing up on an approximately one-year cycle allows buyers to plan multiyear rollouts without having to start and stop deployments.

Perhaps just as crucially, Rubin seems built for mixed loads. Enterprises want to retrain or fine-tune models overnight and serve them during the day on the same fleet. So if Rubin boosts scheduling and memory efficiency in these mixed scenarios, it can increase cluster throughput without increasing the footprint — key for data centers limited by power or space.

Performance and Efficiency Claims for Rubin Superchips

Nvidia claims Rubin can produce tokens — the fundamental unit of LLM output — up to 10 times faster. That alleged target is a sore spot: inference costs now comprise the lion’s share of many AI budgets. Cloud providers charge by the 1K or 1M tokens, so more tokens-per-watt and per-dollar directly mean lower serving costs, better margins for AI-native apps, or some combination of both.

You can expect optimizations to happen in multiple places: architectural improvements in the GPUs and CPU offload path, faster memory, better interconnect bandwidth, compiler/runtime wins in Nvidia’s software stack. Formal MLPerf scores for Rubin were not available, but preceding generations have demonstrated that software updates alone can deliver double-digit efficiency improvements over the life of a product. If Rubin can apply that recipe on new silicon, early adopters could realize significant TCO benefits before waiting for a hardware refresh.

Real-world example: Multilingual chat or agent workflows in enterprises can’t run inference at batch sizes that peak efficiency dictates. If efficiencies in scheduling and memory management techniques for Rubin can support a larger effective batching without causing spikes of high-latency requests, even a small reduction in response cost could have quite an impact at scale.

Availability and Supply Chain Watch: Timing and Capacity

Nvidia confirmed that Rubin is on track to deliver, and will “ship later this year,” but did not provide any other updates beyond the physical installed base. As Wired has covered in other launches, advanced chips usually move into low-volume production first for validation before ramping up. Nvidia remains dependent on TSMC to manufacture, with advanced packaging capacity being the swing factor in AI GPUs since 2023–24.

Two variables to watch:

- CoWoS packaging throughput

- How quickly hyperscalers validate new nodes across their fleets

TrendForce has previously noted that CoWoS is a bottleneck across the industry, and qualification cycles at cloud scale can take months — even in cases where demand is urgent. If Nvidia and TSMC have increased packaging capacity as we presume, Rubin’s ramp will be less lumpy than past generations; if not, there is a good chance that the allocation scheme prioritizes full-stack system buyers.

And now, in the meantime, integration planning begins. Teams will want to model token efficiency and power draw against their current inference mix, analyze networking topology requirements for scale-out training, and estimate the cost of software migration using new APIs to capture early gains in runtime. With Rubin in place to drive the next phase of AI performance-per-watt, the companies that queue up validation workloads early will be the first ones to most effectively transform silicon into business value.