Nvidia has signed a non-exclusive deal to license technology from Groq, one of its most closely watched AI chip foes, and is hiring Groq founder and CEO Jonathan Ross as well as president Sunny Madra and other staffers. The move is an indication of Nvidia’s desire to speed up low-latency inference and expand its silicon and software playbook, while allowing the door to remain open for Groq to still collaborate with other partners.

The deal comes as the demand for generative AI exceeds data center capacity, pushing buyers to scrutinize throughput, energy use, and total cost of ownership. Nvidia remains the leader in AI accelerators, but Groq has gotten attention for a different architecture that focuses on timing — its chips are designed specifically to stream language models fast and with known token latency.

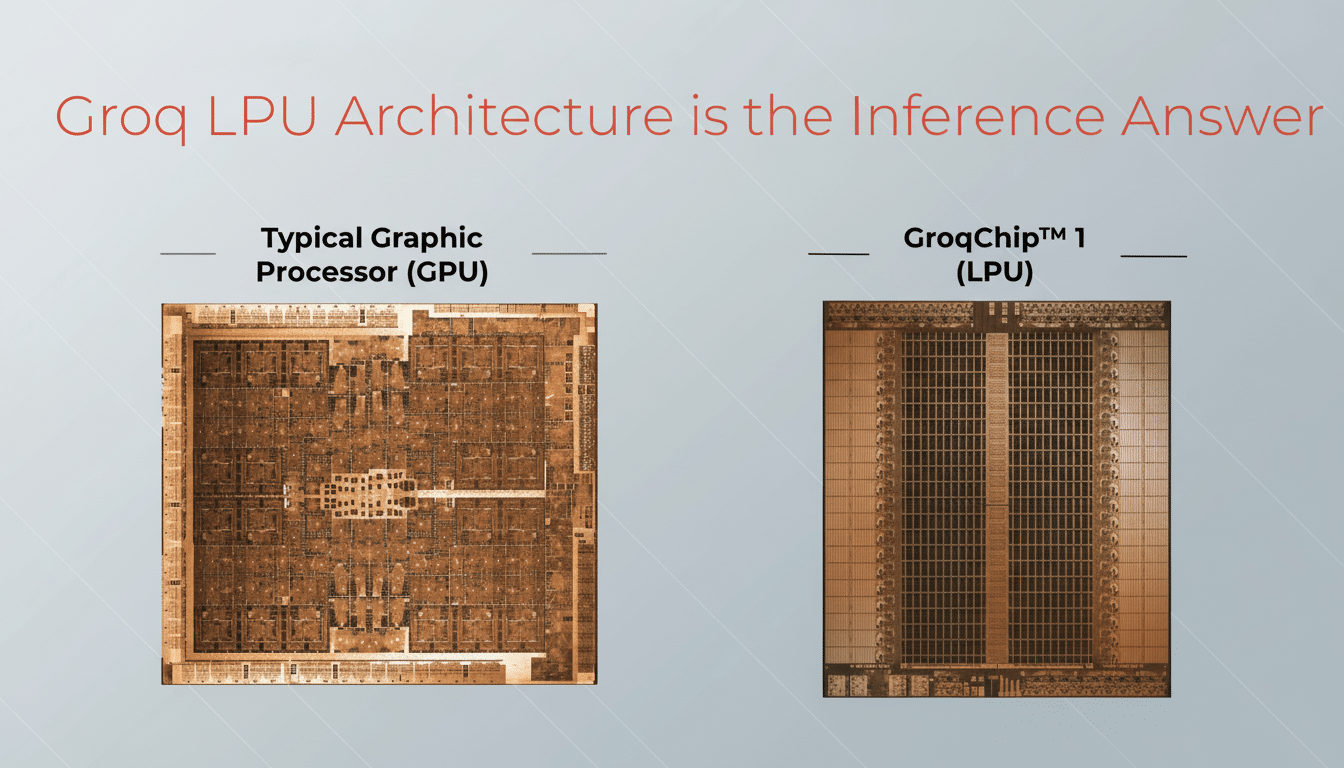

What Nvidia Gets From Groq’s LPU for Faster Inference

Groq’s key invention is the LPU, or language processing unit, which is tailored specifically to do inference once you have trained one of these massive language models. The firm has said its technology is capable of processing massive responses up to 10x faster, at about one-tenth the power consumption, compared to traditional techniques and with deterministic tokens-per-second performance. Groq has demonstrated, in public demos and on company materials, sustained high-throughput inference for real-time agents, coding assistance, and interactive search.

Jonathan Ross brings a deep bench of accelerator experience; at Google, he was part of the team that developed the TPU, a custom AI chip that rewrote training and inference economics in hyperscale environments. Absorbing Ross and Madra and several “key” leaders at Groq, along with a license to Groq IP, indicates that Nvidia may be looking to cross-pollinate around compiler design, scheduling, and latency-reducing inference techniques that could complement Nvidia’s custom CUDA ecosystem (Accelerated Computing & Jetson) with new insights learned from low-level optimizations made in its TensorRT library and Triton Inference Server.

Why Non-Exclusive Licensing Matters to Both Companies

Non-exclusive licensing affords Nvidia flexibility while not preventing Groq from forming its own partnerships elsewhere. That nuance could matter to enterprises that are leery of vendor lock-in: Groq can still sell its LPU systems and services, while Nvidia gets to fold licensed know-how into its software stack and platform guidance. It also minimizes antitrust friction by neither consolidating outright nor closing off optionality for cloud providers, model developers, and systems integrators.

Financial terms were not disclosed, nor was the range of the licensed IP. As a practical matter, keep your eyes out for software updates, reference designs, or benchmarking numbers which show lower-latency responses or better tokens-per-watt on Nvidia platforms as early calls that the Turing cards are integrating well.

Market Impact and the Shifting Competitive Landscape

Nvidia still controls AI accelerators; industry analysts, such as the ones at Omdia, estimate Nvidia’s market share to be north of 80%. No surprise: Nvidia systems top MLCommons’ MLPerf results in training and inference; Nvidia’s dominance persists across the two benchmarks. But the competitive landscape is spreading: AMD is scaling up MI300-class GPUs, Intel has Gaudi for inference, and cloud providers are pursuing their own custom silicon projects such as Trainium, Inferentia, and Maia in complement to Nvidia fleets.

It is a momentum that Groq has rapidly assembled. In September, the company raised $750 million at a value of $6.9 billion, and it says more than two million developers now rely on apps using its technology, far more than roughly 356,000 just a year ago. That growth underscores strong demand for predictable, low-latency inference — especially for chat, code completion, and agentic workflows where every millisecond can make a difference.

For the biggest buyers, power and space have become their chokepoints. In practice, real-world deployments include not only the quality of a model, but also end-to-end tokens per second and tokens per watt. If Nvidia can combine Groq’s approach with its own accelerators and software, companies looking to get more useful throughput out of a rack they’ve already built, or who want to delay significant new buildouts, could find ways to weigh the performance breaks against their energy budgets.

What It Means For Developers And Data Centers

Latency, cost, and reliability are what software developers care about. We chose the Groq LPU architecture as it can provide deterministic performance, which is important for streaming and real-time agents where jitter reduces user experience. Its software ecosystem, from CUDA to TensorRT and its inference server, is already the default for many teams. A licensing tie-in could also bring best practices — for example, optimized kernels, scheduling strategies, and model partitioning — more readily available across popular frameworks without a hardware rewrite.

It’s simple math for operators: the more tokens served up per dollar and per kilowatt, the better. If aspects of Groq’s approach contribute to lowering tail latency, increasing throughput in existing GPU clusters, there is a short-term payoff in utilization and unit economics. On the latter, it’s not inconceivable that we’d see cloud marketplaces and on-prem vendors claiming big improvements in end-to-end inference pipelines to show off, for example, retrieval-augmented generation systems where I/O, networking, and memory often swamp raw FLOPS.

The Road Ahead for Nvidia, Groq, and Inference at Scale

There are still many unknowns, such as the scope of IP licensed, how quickly this can be integrated by Nvidia, and whether roadmaps going forward will bring new inference-optimized products or just software-side enhancements. Yet the strategic intention is crystal-clear: Nvidia doesn’t just want to own peak training performance; it wants to own the lowest-latency, most efficient inference at scale.

Which means Groq will be able to pursue customers and partnerships without having to latch on as an accessory now, especially with its founder finally joining the industry’s pace-setter. If the collaboration produces actual tps-per-dollar and tps-per-watt benefits across popular deployments, it could redraw expectations for real-time AI while re-energizing competition up and down the accelerator stack starting in 2026.