A $30,000 Nvidia H100 GPU is bound for low Earth orbit aboard Starcloud-1, an experimental satellite being assembled in the US by startup Starcloud to test whether high-performance computing can be done reliably in space.

Nvidia announced the mission on its company blog and said the spacecraft is approximately the size of a small refrigerator and weighs about 130 pounds. Starcloud, the Redmond, Washington-based company formerly known as Lumen Orbit, says the payload is designed to provide orders of magnitude more GPU compute than previous on-orbit experiments.

- Why GPUs in Space Could Reduce Data Center Energy Use

- Inside the Starcloud Demonstrator for On-Orbit AI Compute

- Power, Cooling, and Connectivity Challenges in Orbit

- Surviving Space Radiation with Resilient GPU Systems

- Economics and Environmental Math for Space Data Centers

- What to Watch Next as Space-Based Compute Advances

Why GPUs in Space Could Reduce Data Center Energy Use

Ground-based data centers are straining under an explosion in energy use tied to AI workloads. The International Energy Agency estimates that data centers worldwide use roughly 460 terawatt-hours of electricity a year and projects that this figure could double by the mid-2020s as AI expands.

Space provides a large supply of solar power and a naturally cold radiative heat sink. Starcloud claims that, once launched, space facilities could cut lifecycle carbon emissions by a factor of ten relative to terrestrial centers and sharply reduce energy operating costs. The company has not provided initial construction cost estimates. Amazon founder Jeff Bezos has publicly endorsed the more general concept of relocating heavy industry and energy-hungry computing beyond our planet.

Inside the Starcloud Demonstrator for On-Orbit AI Compute

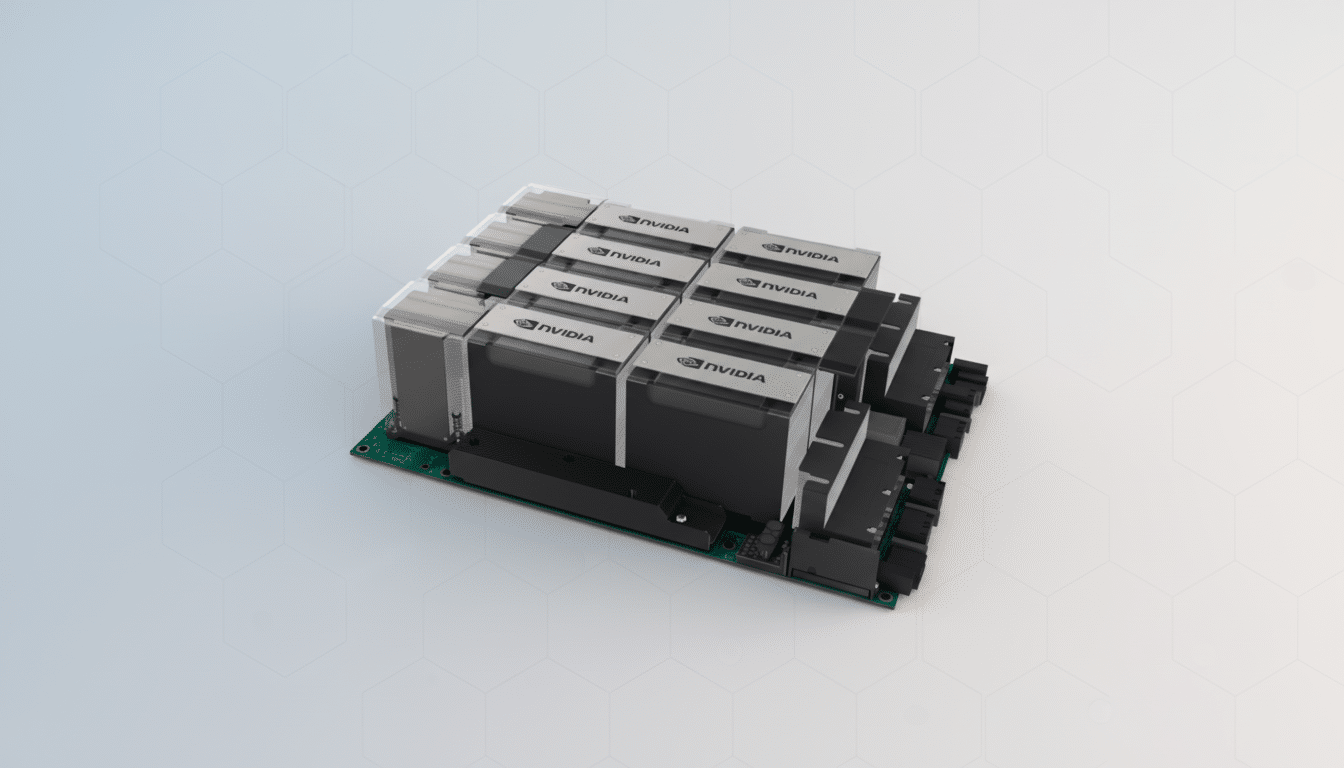

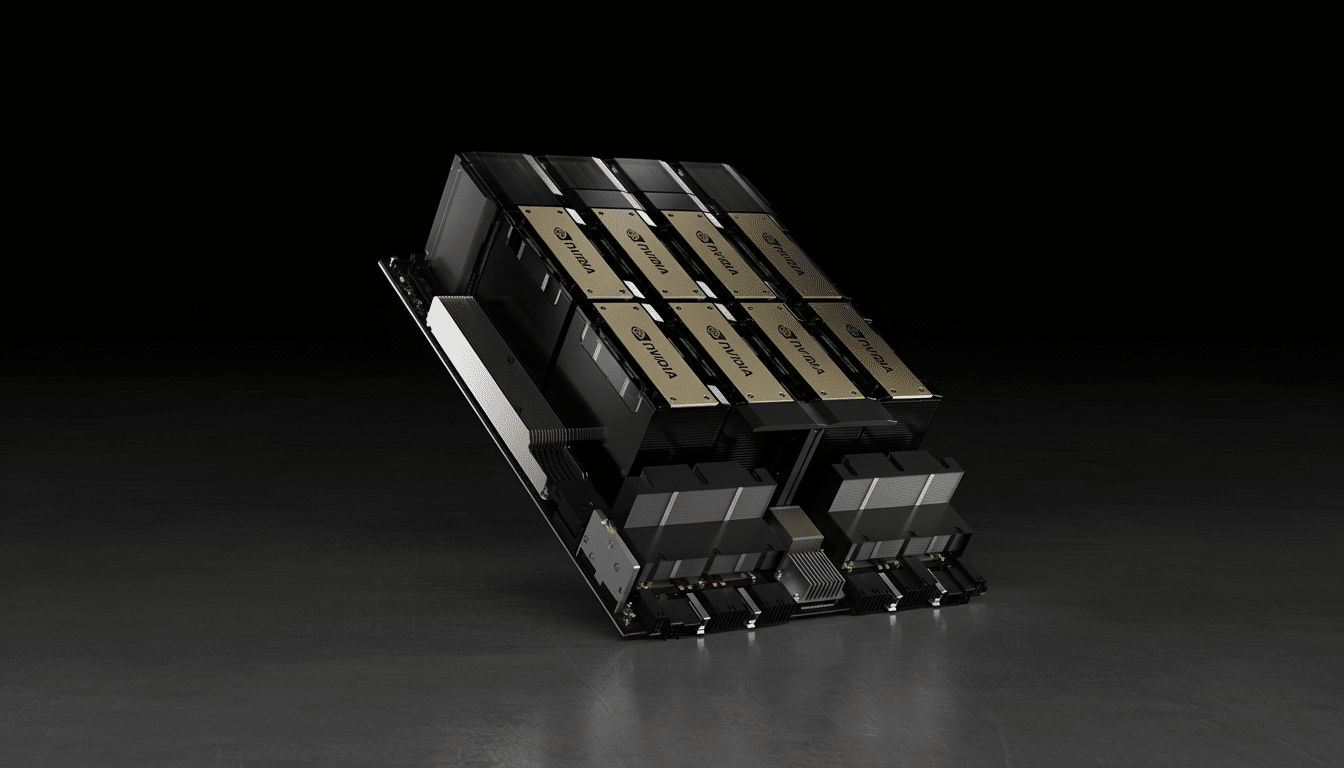

The Starcloud-1 platform will carry an Nvidia H100, the company’s flagship enterprise accelerator for AI training and inference, along with complementary compute and storage. During in-orbit operations, Starcloud will verify GPU performance, software stack stability, and the reliability of automated fault management.

The mission will concentrate on workloads that mirror actual customer demand, including model inference on imagery and video, compression and encryption at the edge of networks, and data triage to reduce downlink requirements. Starcloud has said it will use laser crosslinks to connect with other constellations, citing systems such as SpaceX’s Starlink and Amazon’s Project Kuiper for high-throughput backhaul.

Power, Cooling, and Connectivity Challenges in Orbit

In orbit, solar arrays convert sunlight directly to electricity, with no dependence on a terrestrial grid. A single H100 can draw hundreds of watts, up to around 700 W in some data center configurations, so scaled-up systems will need careful power budgeting and plenty of radiator surface area for heat rejection.

Contrary to popular belief, the vacuum of space does not absorb heat. With no air for conduction or convection, spacecraft can shed waste heat only by radiation. This means the upper limit of sustained compute is set by the size of the radiator. By relying on deployable radiators, the company’s design avoids the energy-hungry liquid chillers common on Earth.
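To make the radiator constraint concrete, the sketch below applies the Stefan-Boltzmann law to estimate how much radiating area a given heat load needs. The emissivity, radiator temperature, and sink temperature are illustrative assumptions, not Starcloud figures, and the estimate ignores solar and Earth infrared loading, which would increase the required area.

```python
# Sketch: minimum radiator area needed to reject a heat load by radiation alone.
# Assumptions (not from the article): emissivity 0.9, radiator at 300 K,
# one-sided radiation to deep space at ~3 K, no solar or Earth infrared loading.

STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_w, temp_k=300.0, emissivity=0.9, sink_k=3.0):
    """Area whose net radiated power equals heat_w (Stefan-Boltzmann law)."""
    if temp_k <= sink_k:
        raise ValueError("radiator must be warmer than its surroundings")
    flux = emissivity * STEFAN_BOLTZMANN * (temp_k**4 - sink_k**4)  # W per m^2
    return heat_w / flux


if __name__ == "__main__":
    for load_w in (700, 7_000, 70_000):  # roughly one, ten, and one hundred GPUs
        print(f"{load_w:>6} W -> {radiator_area_m2(load_w):.1f} m^2")
```

Under these assumptions a 700 W load needs roughly 1.7 square meters of radiator, and the area grows linearly with power. A hotter radiator helps considerably, since radiated power scales with the fourth power of temperature.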

For networking, optical links may offer tens to hundreds of gigabits per second between satellites (on the order of a terabit per second per spacecraft in aggregate), with ground links via Ka-band or optical terminals. Round-trip times to low Earth orbit are usually under 50 milliseconds. That is fine for batch inference, training checkpoints, and media processing, but not good enough for ultra-latency-sensitive trading or other highly interactive apps.
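As a rough check on those numbers, the sketch below computes the propagation-only round trip to a satellite directly overhead and the time to move a payload over a link. The 550 km altitude, 100 GB payload, and 10 Gbit/s link are illustrative assumptions rather than mission parameters.

```python
# Sketch: propagation-only latency floor and simple transfer-time arithmetic.
# Altitude, payload size, and link rate used below are assumptions for illustration.

SPEED_OF_LIGHT_M_S = 299_792_458


def min_round_trip_ms(altitude_km):
    """Round trip to a satellite at zenith, ignoring routing, queuing, and processing."""
    return 2 * altitude_km * 1_000 / SPEED_OF_LIGHT_M_S * 1_000


def transfer_seconds(size_gigabytes, link_gbit_s):
    """Time to send size_gigabytes over a link_gbit_s link, ignoring protocol overhead."""
    return size_gigabytes * 8 / link_gbit_s


if __name__ == "__main__":
    print(f"RTT floor at 550 km: {min_round_trip_ms(550):.2f} ms")
    print(f"100 GB over 10 Gbit/s: {transfer_seconds(100, 10):.0f} s")
```

At 550 km the propagation floor is under 4 ms, so most of a sub-50 ms round trip would come from slant range, ground-network routing, and processing rather than the vertical hop itself.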

Surviving Space Radiation with Resilient GPU Systems

Commercial GPUs are not radiation-hardened, so reliability has to come from shielding, error detection and correction, watchdog timers, and software-level resilience. Single-event upsets can induce bit flips in both memory and logic, so strong error protection, such as ECC for DRAM and checkpoint-restart mechanisms, is needed.
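The checkpoint-restart idea can be illustrated with a toy loop: save known-good state periodically, and when a fault is detected, roll back to the last checkpoint instead of starting over. This is a minimal sketch, not Starcloud's fault-management design. The `fault_at` parameter is a hypothetical stand-in for whatever detector (an uncorrectable ECC error, a watchdog timeout) would flag a fault in a real system.

```python
# Sketch: checkpoint-restart on a toy workload (summing 0..steps-1).
# fault_at is a hypothetical, injected fault used only for illustration.

def run_with_checkpoints(steps, checkpoint_every, fault_at=None):
    """Return (result, restarts), rolling back to the last checkpoint on a fault."""
    total, i = 0, 0
    saved = (total, i)  # last known-good state
    restarts = 0
    while i < steps:
        total += i
        i += 1
        if fault_at is not None and i == fault_at:
            fault_at = None      # the simulated fault happens only once
            total, i = saved     # discard work done since the last checkpoint
            restarts += 1
            continue
        if i % checkpoint_every == 0:
            saved = (total, i)   # record a new known-good state
    return total, restarts


if __name__ == "__main__":
    print(run_with_checkpoints(10, checkpoint_every=3, fault_at=5))
```

The checkpoint interval is the main design choice: frequent checkpoints cost time and storage but limit how much work is lost per fault, which matters more as the expected upset rate rises.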

Commercial off-the-shelf compute has been flown before. Hewlett Packard Enterprise’s Spaceborne Computer experiments on the International Space Station found that commodity servers could withstand extended-duration workloads with appropriate software hardening. The Nvidia H100 test extends this to accelerated AI compute aboard a free-flying spacecraft, where thermal and radiation swings are even harsher and autonomous recovery is a must.

Economics and Environmental Math for Space Data Centers

The price of a ride to orbit has plummeted while rideshare missions have become increasingly common. Today, the industry price for small payloads to low Earth orbit can be in the range of a few thousand dollars per kilogram. For a bus weighing some 60 kilograms, launch costs fall in the low hundreds of thousands of dollars: a material sum, but one that can be amortized over multi-year operations.
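The launch arithmetic is simple enough to sketch. The 60 kg mass comes from the figures above, while the $5,000 per kilogram price and five-year service life are assumptions chosen only to illustrate "a few thousand dollars per kilogram" and "multi-year operations".

```python
# Sketch: launch cost and straight-line amortization.
# The price per kg and service life used below are illustrative assumptions.

def launch_cost_usd(mass_kg, price_per_kg_usd):
    """Total launch cost for a payload at a flat per-kilogram price."""
    return mass_kg * price_per_kg_usd


def amortized_per_year_usd(total_usd, years):
    """Spread a one-time cost evenly over the operating life."""
    return total_usd / years


if __name__ == "__main__":
    cost = launch_cost_usd(60, 5_000)
    print(f"Launch: ${cost:,.0f}")
    print(f"Per year over 5 years: ${amortized_per_year_usd(cost, 5):,.0f}")
```

With these inputs the launch comes to $300,000, or $60,000 per year over five years. A per-kilogram price anywhere between $3,000 and $6,000 keeps the total within the low hundreds of thousands.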

Here on Earth, the electric bill for a single high-end GPU is modest, but at thousands of GPUs the cost of electricity and cooling becomes a significant share of operating costs. Starcloud’s thesis is that free solar power plus radiative cooling could flip the cost curve for sunlit satellites, even after accounting for launch emissions and the costs of orbital servicing. Some European Space Agency–sponsored work, such as the ASCEND concept led by Thales Alenia Space, has come to related conclusions for certain deployment scenarios.
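A back-of-the-envelope sketch shows how the terrestrial power bill scales with fleet size. The electricity price of $0.10 per kWh and the facility overhead factor (PUE) of 1.3 are assumptions for illustration. The 700 W draw is the upper figure cited earlier for an H100.

```python
# Sketch: annual electricity cost for a GPU fleet running continuously.
# usd_per_kwh and pue (cooling and facility overhead) are illustrative assumptions.

HOURS_PER_YEAR = 8_760


def annual_energy_cost_usd(gpus, watts_per_gpu=700, usd_per_kwh=0.10, pue=1.3):
    """Yearly electricity cost, including facility overhead via the PUE multiplier."""
    kwh = gpus * watts_per_gpu / 1_000 * HOURS_PER_YEAR * pue
    return kwh * usd_per_kwh


if __name__ == "__main__":
    for fleet in (1, 1_000, 10_000):
        print(f"{fleet:>6} GPUs -> ${annual_energy_cost_usd(fleet):,.0f} per year")
```

Under these assumptions one GPU costs about $800 a year to power and cool, while a 10,000-GPU fleet costs roughly $8 million a year, which is the scale at which the orbital argument starts to matter.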

What to Watch Next as Space-Based Compute Advances

Starcloud said a follow-on satellite, Starcloud-2, is being developed as the company’s first commercial platform, should the demo meet its objectives. Nvidia’s participation is another sign of how quickly AI vendors are exploring unconventional infrastructure to meet demand for compute.

Major milestones include stable H100 operation through radiation events, sustained thermal equilibrium at peak load, and high-throughput optical networking to the ground and to peer constellations. Regulators will also be monitoring compliance with debris mitigation and deorbit rules established by the FCC and the Office of Space Commerce.

If the mission is successful, space-based data centers could move from science project to a niche but useful tier in the global AI infrastructure stack, handling energy-intensive, latency-tolerant workloads and easing the demands on Earth’s grids.