Struggling to keep their heads above water, nonprofits are finally turning to AI as a force multiplier and beginning to experiment with automation.

The idea is nothing new: for years, startups have used algorithms and machine learning systems to stretch ad impressions and extend advertising reach while sharply cutting costs.

New survey data from Candid, Bonterra, and Fast Forward highlight growing interest and early pilots, as well as ongoing concerns about equity, privacy, and the responsible use of AI.

Candid reports that 65% of nonprofits are interested in AI, but only 9% feel prepared to use it responsibly. Bonterra’s polling indicates that more than 50% of its partners have tried AI to some extent, though primarily for internal operations. A Fast Forward scan of more than 200 AI-using nonprofits found that the smallest teams (those with fewer than 10 employees) are leaning in fastest, often developing lightweight chatbots or domain-specific models trained on publicly available materials.

Why Nonprofits Are Turning to AI for Greater Impact

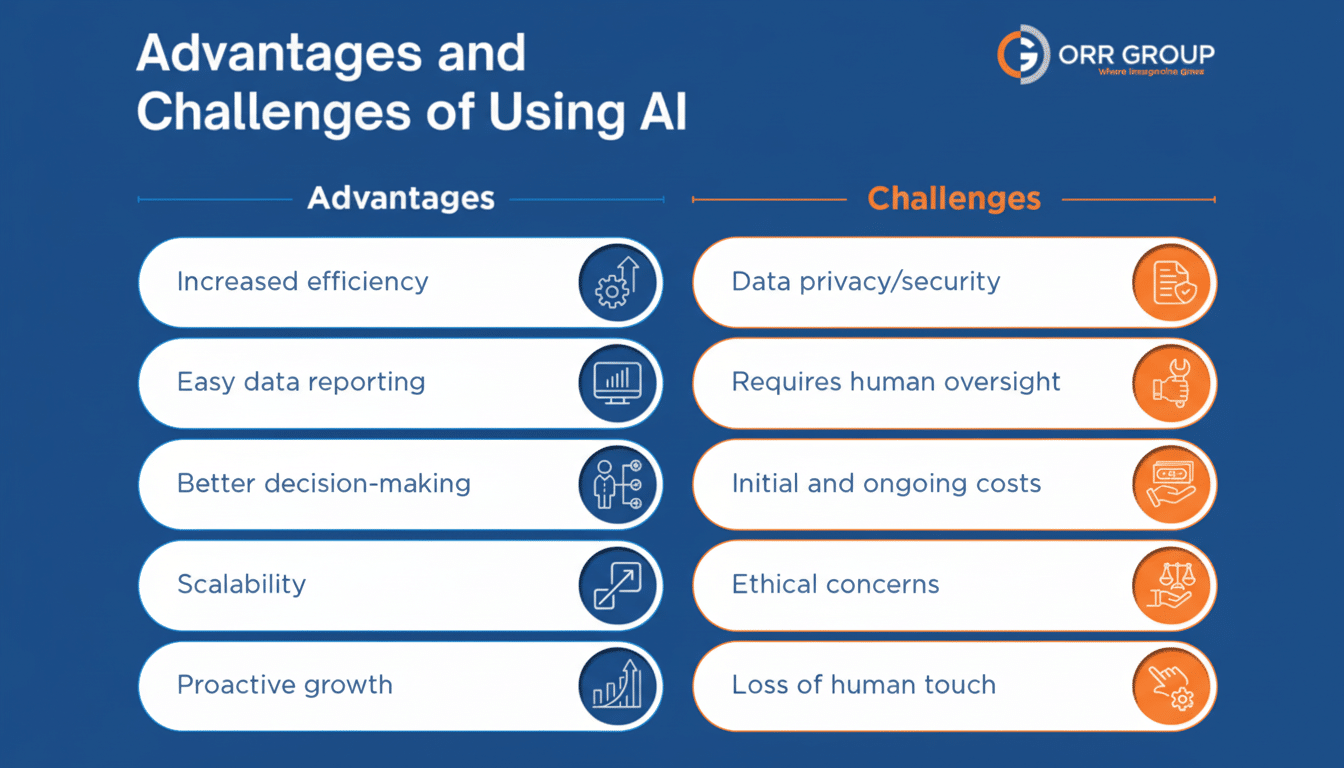

Nonprofits are caught in a familiar vise: increasing demand for services, flat unrestricted funding, and chronic overhead constraints. AI promises leverage. Used judiciously, it can cut down on redundant tasks, free up staff for community-facing work, and help programs make data-driven decisions.

Use cases are often pragmatic: summarizing research and policy papers, drafting grant narratives and donor thank-yous, segmenting outreach lists, triaging incoming email, scheduling volunteers, or flagging anomalies in finance or compliance reviews. For program teams, AI-supported analysis can surface patterns in case notes, hotline transcripts, or survey responses, identifying needs earlier and guiding interventions.

What AI Is Good at Today for Nonprofit Operations

Early adopters are concentrating on internal pilots where the risk is lower and the ROI immediate. Some midsize human services organizations have cut hours from weekly reporting by using AI to structure narrative data and produce first-draft summaries that staff members edit. Environmental and watchdog groups are training small models on public filings to speed up open records review. Community health nonprofits, meanwhile, are experimenting with chatbots that can respond to basic questions and direct more complicated queries to a human.

Fast Forward’s research shows that small teams are particularly resourceful: They use open-source models wherever possible, work only with public or de-identified data, and keep a person in the loop. Crucially, 70% of AI-powered nonprofits in that study incorporated community input into tool design and policy, a practical hedge against bias and a way to build trust in the absence of stronger regulation.

There are credible precedents. Crisis hotlines have applied machine learning to help triage messages, so counselors get to the highest-risk texters more quickly. Food banks and mutual-aid networks are deploying models to predict demand by neighborhood, which informs routes for mobile pantries and staffing. These are tools embedded in day-to-day workflows, not shiny demos.

Risks Nonprofits Can’t Ignore When Adopting AI

Interest is intense, but so are the red flags. Candid found that a third of nonprofits can’t explain how AI links to the outcomes they seek in their missions. The Bonterra survey suggests that almost all respondents worry about how vendors use their data, and a significant number have restricted experiments due to lingering concerns about bias, privacy, and security. Some of those fears are legitimate: Models can reproduce patterns of systemic inequality, mishandle sensitive data, or behave unpredictably outside their training data in high-stakes situations.

Capacity is the other bottleneck. Few organizations have money set aside for AI training, audits, or policy development. Without clear governance (what data is in scope, who reviews outputs, how incidents are handled), the load falls on already overtaxed staff. Experts at NTEN and the Stanford Digital Civil Society Lab have stressed that responsible adoption requires organizational policies, not just clever prompt workarounds.

How to Adopt AI Responsibly on a Limited Budget

Start with low-risk, high-volume tasks. Pilot AI on internal workflows (like document summarization, meeting notes, or de-duplication of records) with only non-sensitive or de-identified data. Require human review before anything goes live or affects client services.
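As one illustration of a low-risk internal task, the sketch below de-duplicates a contact list by normalized email address. It is a minimal example, not a recommended tool: the record layout (dictionaries with `name` and `email` keys), the function names, and the sample data are all assumptions made for illustration.

```python
def normalize_email(email: str) -> str:
    """Lowercase and trim whitespace so trivially different spellings match."""
    return email.strip().lower()


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each normalized email address."""
    seen: set[str] = set()
    unique: list[dict] = []
    for record in records:
        key = normalize_email(record["email"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical, non-sensitive sample data.
contacts = [
    {"name": "Ana Ruiz", "email": "ana@example.org"},
    {"name": "Ana Ruiz", "email": " Ana@Example.org "},
    {"name": "Sam Lee", "email": "sam@example.org"},
]
cleaned = deduplicate(contacts)
print(f"{len(contacts) - len(cleaned)} duplicate(s) removed")
```

Exact matching on a normalized key is deliberately conservative. Fuzzier matching (for example on names) tends to need the human review step described above before records are merged.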

Create lightweight governance. Write a one-page policy covering acceptable use, privacy, disallowed data (such as health records or immigration status), retention, and human review. Log prompts and outputs for quality checks. Engage program staff and community advisers early; they will reveal blind spots.
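Two of the policy elements above, screening for disallowed data and logging prompts and outputs, can be sketched in a few lines. This is a rough sketch under stated assumptions: the deny-list terms, function names, and log format are hypothetical, and simple keyword matching will miss many sensitive disclosures, so it supplements human review rather than replacing it.

```python
import json
from datetime import datetime, timezone

# Hypothetical deny-list; a real one would come from the organization's policy.
DISALLOWED_TERMS = ("immigration status", "diagnosis", "medical record")


def violates_policy(prompt: str) -> bool:
    """Return True if the prompt mentions any disallowed term (case-insensitive)."""
    lowered = prompt.lower()
    return any(term in lowered for term in DISALLOWED_TERMS)


def log_interaction(prompt: str, output: str, reviewer: str, log: list[str]) -> None:
    """Append one JSON line recording the prompt, output, and human reviewer."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "output": output,
        "reviewer": reviewer,
    }
    log.append(json.dumps(entry))


audit_log: list[str] = []
prompt = "Summarize the minutes from Tuesday's board meeting."
if violates_policy(prompt):
    print("Blocked: prompt appears to contain disallowed data.")
else:
    # The output string stands in for whatever tool the team actually uses.
    log_interaction(prompt, "(draft summary)", reviewer="program-lead", log=audit_log)
```

The log here is an in-memory list to keep the example self-contained; in practice the same JSON lines could be appended to a file that falls under the retention rules in the policy.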

Select vendors with well-defined data protection. Prefer tools that let you opt out of model training, offer enterprise controls, and provide security attestations. If possible, process sensitive information in a secured environment or with local models rather than through public APIs.

Invest in people. A few hours of staff training can pay for itself quickly. Accessible curricula are available through organizations like TechSoup, NTEN, and community colleges. Keep a record of time saved and outcomes improved so that you can show funders why sustained support for these functions matters.

Engage funders. Fast Forward’s leadership observes that philanthropy can be the catalyst for safe adoption by underwriting capacity: governance, training, bias testing, and community engagement. Even setting aside 5–10% of a grant specifically for digital infrastructure can be transformational.

The Bottom Line on Responsible AI for Nonprofits

AI has the potential to help nonprofits achieve more with less, but only if impact, equity, and privacy are built in from the beginning. The winners will treat AI as a co-pilot, not a replacement: narrowly scoped and community-informed, with outcomes measured against mission. With targeted investment from funders and pragmatic governance, the sector can capture real efficiency gains while both valuing and protecting the people it’s there to serve.