A new multi-model workspace is shaking up the way people work with AI by letting users run a single prompt and view responses from more than 25 leading models on one screen. The pitch is simple but powerful: compare answers from ChatGPT, Gemini, Llama, DeepSeek, Perplexity, and others side by side, then pick the best output without bouncing across tabs and apps.

Why side-by-side AI comparisons matter for better results

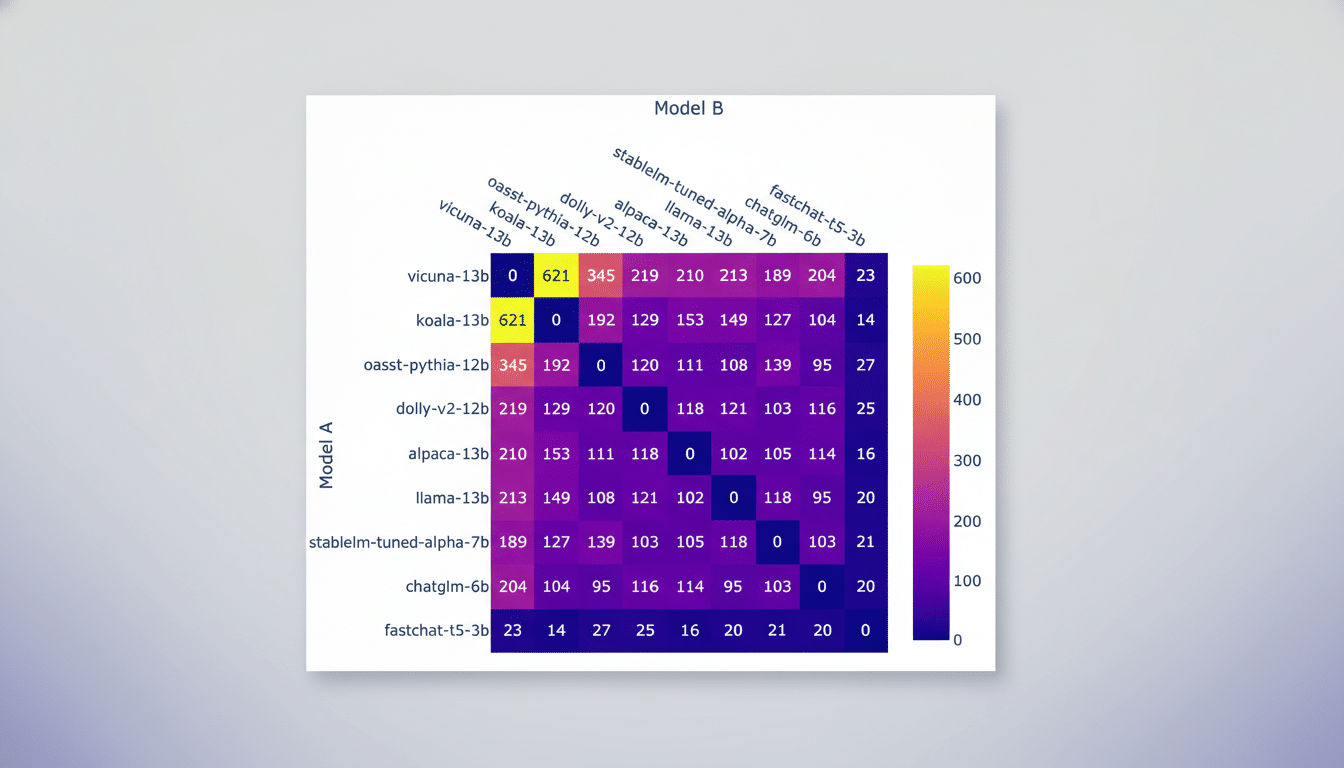

Not all large language models excel at the same tasks. Public leaderboards such as LMSYS’s Chatbot Arena routinely show rank shuffles as models improve or underperform on specific prompts. Stanford’s CRFM, through the HELM benchmark, has long argued that robust evaluation means testing across scenarios, not relying on a single score. Seeing multiple answers at once makes those differences obvious, turning model selection from guesswork into evidence-based choice.

- Why side-by-side AI comparisons matter for better results

- How the side-by-side AI workspace and extension works

- Where side-by-side model comparisons help teams the most

- Evidence and context from research and practitioners in the field

- Key caveats to consider when using multiple AI models

- The bottom line on adopting a side-by-side AI workflow

That transparency is practical. A model that’s brilliant at code synthesis may stumble on nuanced policy analysis; one that’s superb at long-context summarization might be slower or pricier. With side-by-side outputs, users can spot brittleness, measure tone, check citations, and gauge formatting quality before committing results to production or publication.

How the side-by-side AI workspace and extension works

Delivered as a Chrome extension, the workspace centralizes your prompt and fans out the request to a roster of models. Responses render in parallel panels so you can scan for clarity, factual grounding, reasoning steps, or creativity. It supports chat on images and PDFs, prompt libraries for quick reuse, and conversation history for auditing and repeatability—key needs for teams building repeat workflows.

The company positions the service as a cost-saver by consolidating access in one place with a lifetime license option and unlimited monthly messaging. For power users who currently juggle multiple subscriptions, consolidating tooling into a single interface can also simplify permissioning and basic governance, though enterprises should still apply their own review for data handling and compliance.

Where side-by-side model comparisons help teams the most

Content teams can craft a product description once and inspect variations across models to match brand voice, style, and reading level. If one model nails persuasive copy while another produces tighter SEO structure, you can blend the best of both in minutes rather than rewriting from scratch.

Developers can prompt for the same Python function and compare implementations for readability, library choices, and test coverage hints. Side-by-side views make it easy to spot off-by-one bugs, missing edge cases, or insecure patterns. Pair that with stored conversations and you’ve got a lightweight evaluation harness for everyday coding tasks.

Researchers and analysts can upload a PDF, ask for a structured summary with citations, and compare which model does the most faithful extraction. If one response cites incorrectly or hallucinates references, the contrast is immediately visible—vital for due diligence and risk mitigation.

Evidence and context from research and practitioners in the field

Surveys from Stack Overflow and GitHub underscore how routinely developers lean on AI assistants, while research from the Allen Institute for AI documents variability in reasoning and factuality across models. NIST’s AI Risk Management Framework also emphasizes evaluation discipline and context-specific testing. In other words, professionals already need multi-model checks; this tool packages that workflow into a single, accessible pane of glass.

The approach mirrors what many enterprise AI teams build internally: model routing and A/B evaluation to balance cost, latency, and quality. Here, those same principles reach individual users who want quick certainty about which model to trust for a given job.

Key caveats to consider when using multiple AI models

Side-by-side isn’t a substitute for rigorous benchmarking. Models update frequently, and what wins on a prompt today might lag tomorrow. Terms of service and data retention policies vary across providers; sensitive data should be handled carefully and in line with organizational policy. And while the extension surfaces many models in one interface, feature parity can lag behind native apps, especially for the newest modalities.

The bottom line on adopting a side-by-side AI workflow

For anyone who spends real time coaxing results from AI, instant multi-model comparison is a genuine productivity unlock. It cuts iteration cycles, reduces blind spots, and turns model selection into a visible, auditable choice. If your work touches writing, coding, research, or design, this side-by-side tool is a timely addition—bringing the rigor of evaluation labs to your everyday AI workflow.