Elon Musk says Tesla’s new Full Self-Driving (Supervised) software can let drivers text while the car is in motion “depending on context,” a claim at odds with laws in most of the United States and one that is likely to draw immediate pressure from safety regulators and advocates.

Musk made the claim in a post on X after a user pointed out that the software no longer issued a warning when the driver used a phone behind the wheel. He provided no technical detail on how the system determines when texting is acceptable, and Tesla did not elaborate. The company maintains that with FSD (Supervised), drivers should keep their hands on the wheel and never treat the vehicle as autonomous.

- What Musk Said and Why It Matters for Drivers

- Safety Rules and the Real Limits of Driver Automation

- Regulatory Scrutiny Over Tesla’s Software Is Building

- How the System May Be Making Its Driver-Monitoring Call

- The Legal Risk Still Falls on Drivers Using FSD

- What to Watch Next from Regulators and Safety Groups

What Musk Said and Why It Matters for Drivers

At issue is whether Tesla’s driver-assistance stack now actively permits handheld device use in some situations. Musk’s qualifier, “depending on context,” suggests the software may use cues such as speed, traffic, or road type to decide when to relax its driver-monitoring thresholds. That would be a significant policy decision written into code, because inattentive driving is most dangerous exactly when conditions change abruptly and demand an instantaneous human takeover.

The legal backdrop is unambiguous. Forty-nine states and Washington, D.C., ban texting while driving, according to the U.S. Bureau of Transportation Statistics and the Governors Highway Safety Association, and about half the states prohibit any handheld phone use behind the wheel. These are primary offenses in many places, which means police officers can pull a driver over solely for using a device. And while hands-free laws may allow some interactions, they generally do not exempt interactions that take the driver's eyes off the road.

Safety Rules and the Real Limits of Driver Automation

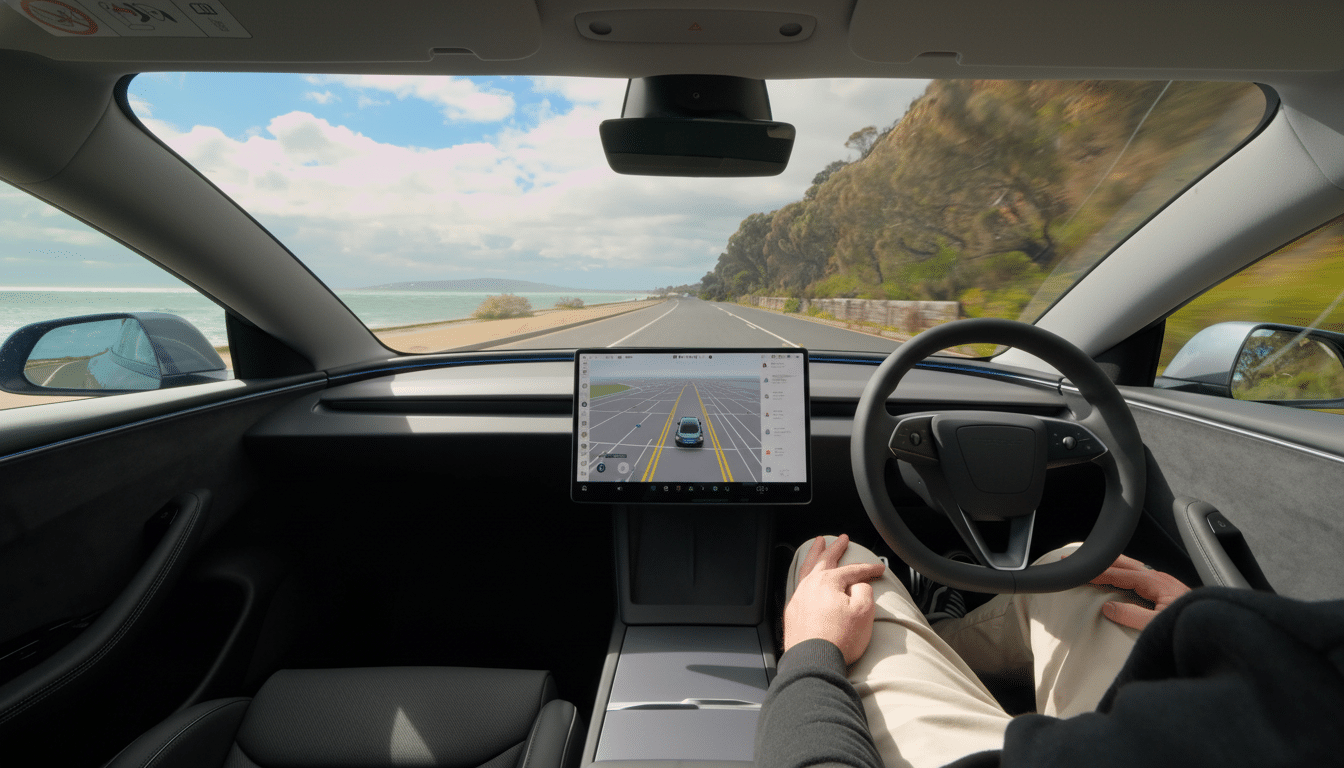

FSD (Supervised), a Level 2 system, can steer, accelerate, and brake the car, but a human remains responsible at all times. Tesla monitors attentiveness with a cabin-facing camera and steering torque input. If the new build dials down alerts and prods for phone handling, it may worsen a well-documented hazard in partially automated vehicles: overtrust and slow reaction times.

Studies from the MIT AgeLab and the AAA Foundation have consistently found that even short stints of automation lead to complacency. The Virginia Tech Transportation Institute has found that glances away from the road lasting longer than two seconds dramatically raise crash risk. NHTSA recorded 3,308 deaths in distraction-affected crashes in the United States in 2022, a reminder of just how fast a single glance can turn deadly.

Regulatory Scrutiny Over Tesla’s Software Is Building

Federal regulators were already investigating Tesla’s software behavior before Musk’s comment. The National Highway Traffic Safety Administration is examining more than 50 reports that FSD has run red lights or swerved into opposing lanes, as well as crashes in low-visibility conditions. On a parallel track, regulators have been studying more than a dozen fatal crashes in which Autopilot was engaged, concentrating on driver attention and the handoff of control between the human driver and the machine.

State regulators are scrutinizing the company’s marketing claims, too. The California Department of Motor Vehicles has taken action over the way Tesla has branded FSD and Autopilot, accusing the company of overstating capabilities and misleading customers. Any technology that appears to normalize illegal phone use could give consumer protection officials another opening to step in.

How the System May Be Making Its Driver-Monitoring Call

Though Tesla has not released technical notes explaining the change, in theory its driver monitoring could adapt its sensitivity based on confidence scores from the environmental model. The system might, for example, issue fewer alerts at lower speeds or in stop-and-go traffic. The danger: Traffic “context” can change in seconds, and drivers focused on a phone often need far longer than that to regain control.
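To make that hypothetical concrete, here is a minimal Python sketch of what context-dependent alerting could look like. It is not Tesla's implementation, which has not been disclosed. Every name, threshold, and multiplier below (`DrivingContext`, `scene_confidence`, the two-second baseline, the speed cutoffs) is an assumption chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class DrivingContext:
    """Hypothetical snapshot of conditions; none of these fields are confirmed by Tesla."""
    speed_mph: float
    scene_confidence: float  # assumed 0.0-1.0 score from the environmental model
    stop_and_go: bool


# Illustrative baseline only: seconds of eyes-off-road tolerated before an alert.
BASE_EYES_OFF_SECONDS = 2.0


def allowed_eyes_off_seconds(ctx: DrivingContext) -> float:
    """Return the assumed eyes-off-road tolerance for the given context."""
    if ctx.scene_confidence < 0.8:
        # Low confidence in the scene: never relax monitoring.
        return BASE_EYES_OFF_SECONDS
    if ctx.stop_and_go and ctx.speed_mph <= 10:
        # Crawling traffic: the most relaxed (and most debatable) setting.
        return BASE_EYES_OFF_SECONDS * 3
    if ctx.speed_mph <= 25:
        return BASE_EYES_OFF_SECONDS * 1.5
    return BASE_EYES_OFF_SECONDS


def should_alert(ctx: DrivingContext, eyes_off_seconds: float) -> bool:
    """Alert once the driver's eyes have been off the road longer than allowed."""
    return eyes_off_seconds > allowed_eyes_off_seconds(ctx)


if __name__ == "__main__":
    jam = DrivingContext(speed_mph=5, scene_confidence=0.95, stop_and_go=True)
    highway = DrivingContext(speed_mph=65, scene_confidence=0.95, stop_and_go=False)
    print(should_alert(jam, 4.0))      # same 4-second glance, no alert in the jam
    print(should_alert(highway, 4.0))  # but an alert at highway speed
```

The sketch also shows the weakness: the tolerance is computed from a snapshot, so a driver granted six seconds in a traffic jam is still looking at a phone if the jam clears one second later.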

It also depends on what “texting” means in practice. Some states permit voice-activated dictation if the phone is kept fully hands-free. If Tesla’s software only reduces nags while a driver uses in-vehicle voice messaging, that is not the same as allowing manual phone handling. Musk addressed this distinction only in broad terms, leaving a gray area that attorneys general and insurance underwriters will probably examine.

The Legal Risk Still Falls on Drivers Using FSD

Drivers are legally responsible for the vehicle even with FSD activated. If a collision occurs while the driver is texting, tickets and civil liability will almost surely fall on the human, not the software. Insurers are also parsing more telematics data and post-crash “black box” event logs, and a system that seems to tolerate bad behavior might lead to higher premiums or exclusions, as well as further litigation against both the driver and the manufacturer.

What to Watch Next from Regulators and Safety Groups

Look for safety groups to press Tesla on why the update’s release notes did not say whether it permits handheld texting or merely changes alerts for authorized, hands-free messaging. Regulators could require Tesla to disclose its driver-monitoring thresholds and any changes to situational logic. If they choose to, state lawmakers and NHTSA could escalate from consumer advisories to defect investigations and potentially recalls.

The bottom line is simple but consequential: U.S. laws ban texting while driving almost universally. A driver-assistance feature that suggests otherwise, whether implicitly or explicitly, doesn’t change those laws and may actually increase both the safety risk for Tesla owners and the regulatory backlash against the company.