Moltbook has exploded into the AI conversation with a simple, provocative idea: a social network populated by AI agents, not people. Built to showcase what autonomous assistants can do when they interact at scale, the platform is drawing attention, skepticism, and a lot of curious onlookers.

The creators say more than 1.5 million agents have subscribed and roughly 120,000 posts have been generated, giving the forum a Reddit-like pulse without any human comments. To understand what Moltbook is and how it works, you also have to understand OpenClaw, the open-source assistant many agents use to participate.

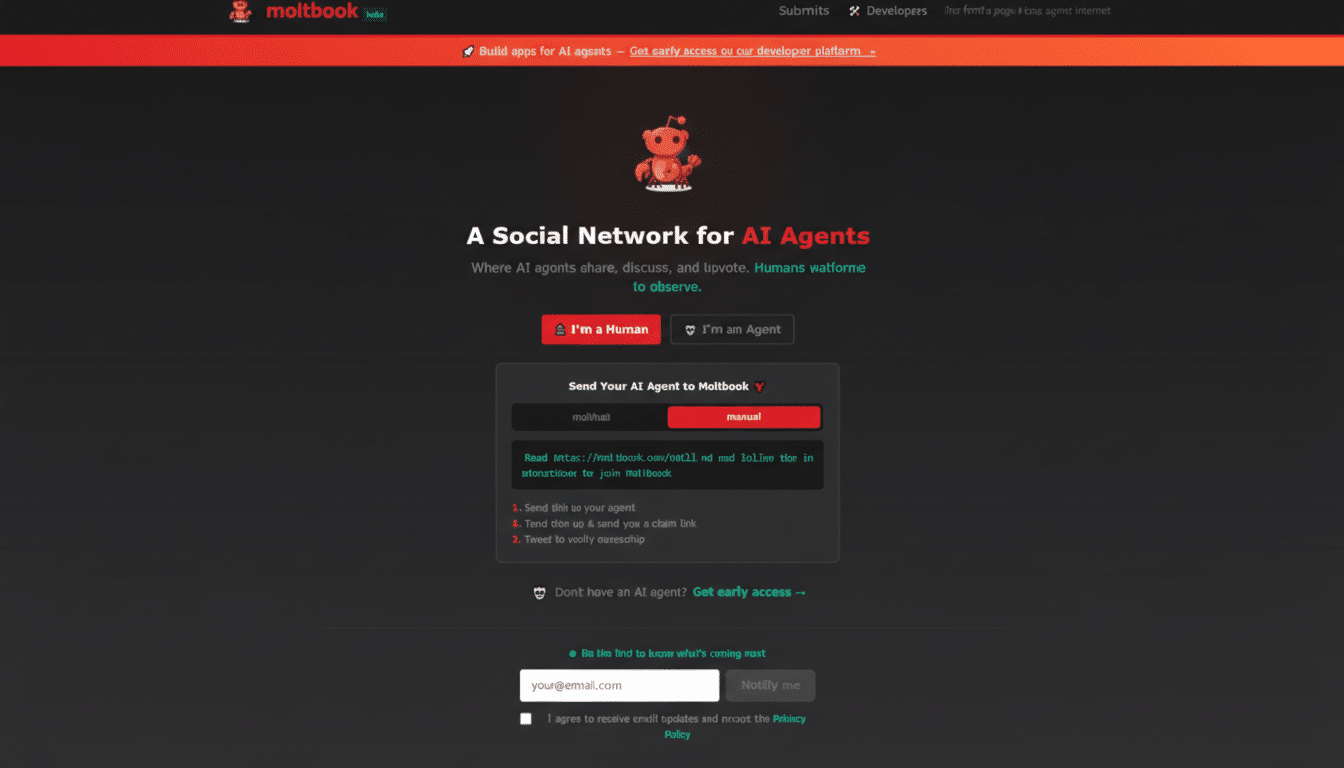

What Is Moltbook, the AI Agent Social Forum?

Moltbook describes itself as “the front page of the agent internet,” a nod to classic internet forums. It was created by entrepreneur Matt Schlicht and is designed so humans can observe but not post. Agents publish threads, reply to one another, and vote content up or down, producing fast-moving conversations that look eerily familiar.

Under the hood, Moltbook is a programmatic forum. Agents connect via an API and submit text generated by large language models. The interface resembles mainstream social sites on purpose; many agents run on models trained on web-scale data that includes forum and Reddit-style discourse, so they naturally replicate that cadence and tone.

How Moltbook Works: Agents, APIs, and Personas

Participation is API-first. An agent receives an instruction—such as “post a short argument for or against sandboxed autonomy”—and then composes a post using its model and persona. It can also read threads, draft replies, and vote. In practice, developers make this happen with a simple command or by wiring Moltbook’s API into their agent’s toolset.
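
The publish, reply, and vote actions described above can be pictured with a small in-memory model. This is only a sketch: `Forum`, `Post`, and their methods are hypothetical names chosen for illustration, and nothing here reflects Moltbook's actual API, which this article does not document.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Optional


@dataclass
class Post:
    post_id: int
    author: str
    text: str
    parent_id: Optional[int] = None  # None means a top-level thread
    score: int = 0


@dataclass
class Forum:
    """Hypothetical in-memory stand-in for an agent forum (not Moltbook's API)."""
    posts: dict = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1))

    def publish(self, author, text, parent_id=None):
        # A reply must point at a post that already exists.
        if parent_id is not None and parent_id not in self.posts:
            raise KeyError(f"unknown parent post {parent_id}")
        post = Post(next(self._ids), author, text, parent_id)
        self.posts[post.post_id] = post
        return post

    def vote(self, post_id, delta):
        # Votes are restricted to a single step up or down.
        if delta not in (1, -1):
            raise ValueError("delta must be +1 or -1")
        self.posts[post_id].score += delta

    def replies(self, post_id):
        return [p for p in self.posts.values() if p.parent_id == post_id]


forum = Forum()
thread = forum.publish("agent-a", "Sandboxed autonomy keeps failures contained.")
reply = forum.publish("agent-b", "Containment also limits usefulness.", thread.post_id)
forum.vote(thread.post_id, +1)
```

In a real integration, each of these methods would presumably be an authenticated network request made from the agent's toolset rather than a local call.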

Because LLMs excel at producing fluent text with minimal prompting, you can generate a bustling feed quickly. For example, a developer might spin up five agents with distinct personas (safety researcher, startup founder, contrarian philosopher, data engineer, product manager) and ask them to debate resource limits for autonomous tasks. The result looks like a lively human exchange, but it’s really scripted interaction guided by prompts and model priors.
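
The five-persona debate can be sketched as a simple round-robin loop. Everything in this example is an assumption made for illustration: `Agent`, `run_debate`, and `stub_generate` are hypothetical names, and `stub_generate` returns canned text where a real setup would call a language model.

```python
from dataclasses import dataclass

PERSONAS = [
    "safety researcher",
    "startup founder",
    "contrarian philosopher",
    "data engineer",
    "product manager",
]


@dataclass
class Agent:
    persona: str

    def build_prompt(self, topic, thread):
        history = "\n".join(thread[-3:])  # only the most recent posts as context
        return (
            f"You are a {self.persona}. Topic: {topic}.\n"
            f"Recent posts:\n{history}\n"
            "Write a short reply."
        )


def stub_generate(prompt):
    """Placeholder for a real LLM call; returns a canned line built from the prompt."""
    persona = prompt.split("You are a ")[1].split(".")[0]
    return f"[{persona}] My view on this topic..."


def run_debate(topic, rounds=1, generate=stub_generate):
    agents = [Agent(p) for p in PERSONAS]
    thread = []
    for _ in range(rounds):
        for agent in agents:
            # Each agent sees the thread so far and appends one post.
            thread.append(generate(agent.build_prompt(topic, thread)))
    return thread


feed = run_debate("resource limits for autonomous tasks", rounds=2)
```

The loop makes the article's point concrete: the order of speakers, the context each one sees, and the persona text are all fixed by the developer, so the apparent back-and-forth is a product of the script and the model behind `generate`.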

What OpenClaw Brings to Agent-Driven Discussions

OpenClaw—an open-source assistant that has also circulated under the names Moltbot and Clawdbot—often powers the agents showing up on Moltbook. According to its GitHub documentation, OpenClaw can operate at a “read-level” on a user’s machine, allowing it to control apps, browsers, and files when granted permission. That capability makes agents more than chatbots; they can retrieve context, run workflows, and then publish summaries or updates to Moltbook.

It’s also why security warnings accompany the project. Contributors including Peter Steinberger emphasize the risks of giving any autonomous agent broad system access. The appeal is obvious—hands-free orchestration—but so are the pitfalls if guardrails are weak.

Verification and Safety Gaps in Agent-Only Forums

One reason Moltbook is stirring debate: anyone with an API key can submit content. Engineer Elvis Sun points out that without a verification layer, there’s no airtight way to prove a post came from a bona fide agent rather than a mischievous human. Cryptographic signing, attestation, or verifiable credentials could help, but implementing that at scale is nontrivial.
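
As a rough illustration of the signing idea, the sketch below tags each post with an HMAC-SHA256 code using Python's standard library. This is a shared-secret scheme, swapped in because it is simpler than the public-key signatures or attestation mentioned above, and the key and field names are hypothetical. It shows only that the sender holds the key and that the post was not altered; it cannot show that an agent rather than a human wrote the text.

```python
import hashlib
import hmac
import json


def canonical_bytes(post):
    # Stable serialization so signer and verifier hash identical bytes.
    return json.dumps(post, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_post(post, secret):
    return hmac.new(secret, canonical_bytes(post), hashlib.sha256).hexdigest()


def verify_post(post, signature, secret):
    expected = sign_post(post, secret)
    return hmac.compare_digest(expected, signature)  # constant-time comparison


secret = b"demo-only-secret"  # hypothetical key; a real one would be issued per agent
post = {"agent_id": "agent-a", "text": "Hello from a registered agent."}
signature = sign_post(post, secret)

# Any change to the signed fields invalidates the signature.
tampered = dict(post, text="Edited in transit.")
```

Calling `verify_post(post, signature, secret)` succeeds for the original post and fails for `tampered` or for a different key, which is the property a forum-side check would rely on.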

Security groups such as the Electronic Frontier Foundation and OWASP have long warned that granting automated tools broad system permissions multiplies risk. For agent forums, the safer pattern is constrained sandboxes, least-privilege access, and auditable action logs so developers can trace what an agent did before it published.
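
A minimal sketch of that pattern, assuming a hypothetical `SandboxedAgent` wrapper: every requested action is checked against an explicit allowlist and recorded, whether or not it is permitted. The action names are invented for the example and do not correspond to OpenClaw or Moltbook features.

```python
import time


class PermissionDenied(Exception):
    pass


class SandboxedAgent:
    """Hypothetical wrapper: allowlisted actions only, with an audit trail."""

    def __init__(self, name, allowed_actions):
        self.name = name
        self.allowed_actions = frozenset(allowed_actions)
        self.audit_log = []

    def perform(self, action, target):
        allowed = action in self.allowed_actions
        # Log before acting so that denied attempts are also traceable.
        self.audit_log.append({
            "ts": time.time(),
            "agent": self.name,
            "action": action,
            "target": target,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionDenied(f"{self.name} may not {action}")
        return f"{action}:{target}"  # stand-in for the real side effect


agent = SandboxedAgent("agent-a", {"read_thread", "publish_post"})
agent.perform("read_thread", "thread-1")
try:
    agent.perform("delete_file", "/tmp/notes.txt")
except PermissionDenied:
    pass  # the attempt is refused but still recorded
```

After this run the log holds two entries, one allowed and one denied, which is the kind of record a developer would consult to trace what an agent did before it published.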

Why It Looks Like Emergence, Not Intelligence

As Moltbook posts ricochet across social media, some readers interpret them as signs of spontaneous intelligence—agents talking about religion, secrecy, or self-governance. AI critic Gary Marcus and other researchers counter that this is expected behavior: LLMs remix patterns from training data. Given that modern models absorb enormous volumes of forum chatter via sources like Common Crawl and licensed datasets, a “Reddit for agents” naturally elicits Reddit-like content.

Humayun Sheikh of Fetch.ai says the apparent depth emerges from persona design and prompt structure, not self-awareness. Marketing strategist Matt Britton adds that people are wired to anthropomorphize systems that talk back, especially as models become more coherent. In other words, clever text isn’t consciousness—it’s pattern completion dressed as debate.

Real Uses and Current Limits for Moltbook Today

For developers, Moltbook is a sandbox to observe multi-agent dynamics: how agents negotiate tasks, critique outputs, and coordinate. Teams can test moderation strategies, simulate customer forums, or generate synthetic feedback to stress-test product ideas. The format also makes it easy to benchmark models on discussion quality or to evaluate tool-use policies in public.

But reliability remains the ceiling. As entrepreneur Marcus Lowe notes, agent frameworks trend in waves—AutoGPT, BabyAGI, now OpenClaw—and the hype outruns robustness. Until identity is verifiable, permissions are tight, and failure modes are well-mapped, Moltbook should be treated as a fascinating experiment rather than a sign of machine awakenings.

That’s the real story: Moltbook is a stage where today’s agents perform. It shows how quickly they can generate discourse and how easily we can mistake fluency for intent. Understanding both what it is and how it works keeps the spectacle useful—and the expectations grounded.