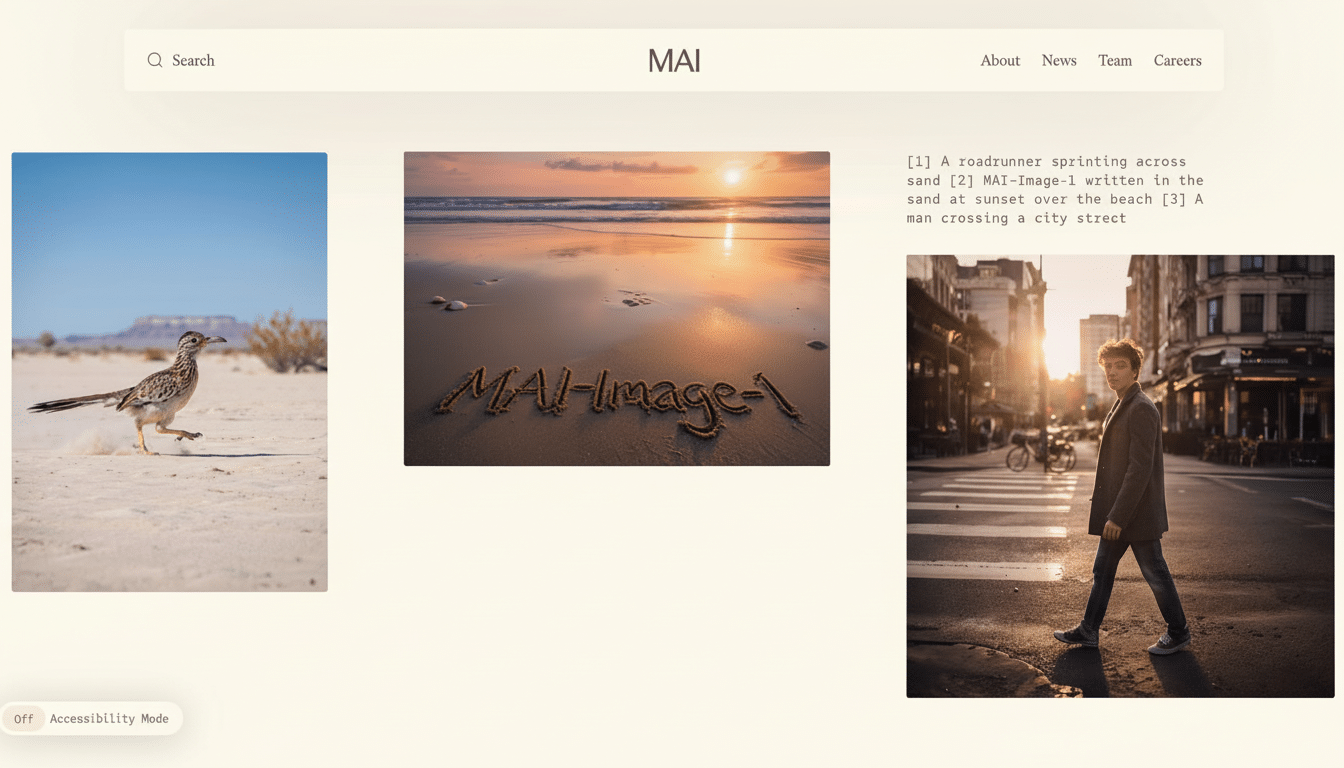

Microsoft has released MAI-Image-1, a large-scale image generation model developed in-house. It marks a strategic shift away from relying on external vendors and toward building core creative AI capabilities internally. The company says the model emphasizes photorealism, fast rendering and more deliberate style control, and that its early evaluation focused on how well it handles the kinds of briefs designers, marketers and product teams work with every day.

What MAI-Image-1 Intends to Solve for Creators and Teams

Microsoft says MAI-Image-1 was developed with direct input from creative professionals to avoid the cookie-cutter look that results when models overfit to trendy aesthetics. The goal is fewer generic, “default”-looking styles and more faithful adherence to prompts about lighting, materials and camera choices. The pitch is simple: realistic portraits that respect anatomy and skin texture, product shots that withstand scrutiny, and brand assets that stay consistent across iterations.

The company says it centered data curation and evaluation on studio-relevant tasks, such as generating a usable hero image from a mood board or iterating on a packaging design within brand constraints. In practice, those workflows will live where users already are: inside Copilot and Bing Image Creator, both of which Microsoft says will soon offer the new model.

Early Benchmarking and Rollout Across Copilot and Bing

MAI-Image-1 is being tested on LMSYS Arena, a community-driven platform that ranks models through anonymous pairwise comparisons. Microsoft says the model already places among the top 10 text-to-image models on the platform, though fuller public evaluations are not yet widely available. This kind of arena testing has become a de facto standard in AI; LMSYS popularized it for language models by ranking quality according to user preference rather than one-size-fits-all metrics.
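To make the pairwise-comparison idea concrete, here is a minimal Python sketch of how head-to-head votes can be turned into a ranking with a classic Elo update. It is an illustrative assumption, not LMSYS Arena’s actual scoring pipeline: the model names, the K-factor of 32 and the starting rating of 1000 are hypothetical, and Arena-style leaderboards have also used related statistical models such as Bradley-Terry.

```python
from collections import defaultdict
from typing import Iterable, Optional, Tuple

# A vote records two anonymized models and which one the user preferred.
# winner is None for a tie. Model names used below are hypothetical.
Vote = Tuple[str, str, Optional[str]]


def expected_score(r_a: float, r_b: float) -> float:
    """Elo's logistic estimate of the probability that A is preferred over B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_ratings(votes: Iterable[Vote], k: float = 32.0, initial: float = 1000.0) -> dict:
    """Replay pairwise votes in order and return each model's Elo rating.

    k and initial are illustrative defaults, not values used by any real leaderboard.
    """
    ratings = defaultdict(lambda: initial)
    for a, b, winner in votes:
        e_a = expected_score(ratings[a], ratings[b])
        # Actual score for A: 1 for a win, 0 for a loss, 0.5 for a tie.
        if winner is None:
            s_a = 0.5
        elif winner == a:
            s_a = 1.0
        elif winner == b:
            s_a = 0.0
        else:
            raise ValueError(f"winner {winner!r} is not one of {a!r}, {b!r}")
        delta = k * (s_a - e_a)
        # Zero-sum update: whatever A gains, B loses.
        ratings[a] += delta
        ratings[b] -= delta
    return dict(ratings)


if __name__ == "__main__":
    votes = [
        ("model-x", "model-y", "model-x"),
        ("model-x", "model-z", "model-x"),
        ("model-y", "model-z", "model-y"),
        ("model-x", "model-y", "model-x"),
    ]
    for name, rating in sorted(elo_ratings(votes).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

Because each vote moves the two ratings by equal and opposite amounts, the total rating pool stays constant. The resulting order reflects how often voters preferred each model, weighted by how strong the beaten opponents were, which is why upsets against highly rated models move the standings more than expected wins.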

Once the model is integrated, Copilot users should be able to select MAI-Image-1 from the available models, or have it applied automatically in new scenarios if it becomes the best default option, with Bing Image Creator likely following closely. At Microsoft’s scale, even small performance gains have an outsized impact: shaving a second or two off rendering can change how teams brainstorm in live sessions or iterate during fast marketing turnarounds.

Why It Matters for Microsoft That It Built In-House

Microsoft’s image AI has relied on external partners for years, most conspicuously OpenAI’s DALL·E models inside Bing Image Creator and Designer. MAI-Image-1 brings a core capability under Microsoft’s direct control, enabling tighter tuning, faster iteration and potentially lower serving costs on Azure. It also lets the company align its image generation roadmap with enterprise needs such as auditability and regional data controls.

The model is the third entry in a growing family of in-house models, following MAI-Voice-1 for audio generation and MAI-1-preview for text. That suggests Microsoft is building a vertically integrated suite spanning text, image and audio, a strategy also pursued by Google with Imagen and AudioLM, Adobe with Firefly, and Stability AI with its family of diffusion models. Owning the full stack should make it easier to push multimodal features into Copilot, such as producing a product concept with images, alt text and a narrated pitch in a single flow.

Safety, Attribution and Enterprise Capabilities in Focus

How models are trained and how their outputs are labeled are under intense scrutiny. Microsoft is a member of the Coalition for Content Provenance and Authenticity (C2PA), and its recent AI offerings support attaching content credentials, tamper-evident provenance signals, to generated media. The company hasn’t said what watermarking method MAI-Image-1 uses, but given enterprise customers’ demands for traceability, it’s reasonable to expect the model to rely on content credentials and Azure AI safety tooling.

On the policy front, enterprises are calling for stronger intellectual property assurances. Microsoft has previously offered an IP indemnification commitment covering some Copilot output, and many enterprise buyers will want similar protections for imagery generated by MAI-Image-1, especially as copyright litigation over generative models continues to unfold. According to a recent study from Stanford’s Human-Centered AI Institute, well-defined provenance and opt-out capabilities are now the top purchase criteria for creative AI in large organizations.

How It Stacks Up in a Crowded Field of AI Image Tools

Text-to-image has matured quickly. Midjourney is a current favorite for stylized illustration, Adobe’s Firefly is built into Creative Cloud with strong brand controls, and Google’s Imagen models have shown impressive prompt adherence in research demos. Microsoft’s differentiator will likely be enterprise deployment at scale, with strong governance integration and rapid iteration inside Copilot, where knowledge workers already spend their time.

Several real-world use cases suggest where MAI-Image-1 might shine: e-commerce teams turning spreadsheets into coherent product galleries; architects transforming room dimensions into photorealistic interiors; support teams producing localized visuals with a consistent tone and accessible alt text. If the model delivers photorealism without uncanny artifacts, and does so at predictable latency, it could earn a place in those daily workflows.

The Bigger Strategic Picture for Microsoft’s AI Plans

Analysts have tracked a shift from showcase demos to working AI, where the wins come from shaving minutes off common jobs at global scale. According to Gartner, most enterprises will access generative AI through APIs or models within the next couple of years, which reinforces the emphasis on reliability, governance and cost management rather than headline-grabbing samples. MAI-Image-1 fits that mold: tuned for realistic prompts, wired into Copilot and running on Azure infrastructure.

If Microsoft’s claims hold up, the move could reduce its reliance on third-party models for crucial creative work and strengthen Copilot’s value proposition as a multimodal workspace. The next things to watch: broader public benchmarking, specifics about safety and provenance defaults, and whether Microsoft extends enterprise-grade IP protection to images generated with MAI-Image-1.