Meta has hired Alan Dye, the Apple veteran who is widely acknowledged in industry reports as having led the development of Apple’s Liquid Glass design language, to lead its companywide design efforts. The hire is part of an aggressive campaign to polish the look, feel and user experience of Meta’s headsets, smart glasses and software as the company races to help define a new era of wearable computing.

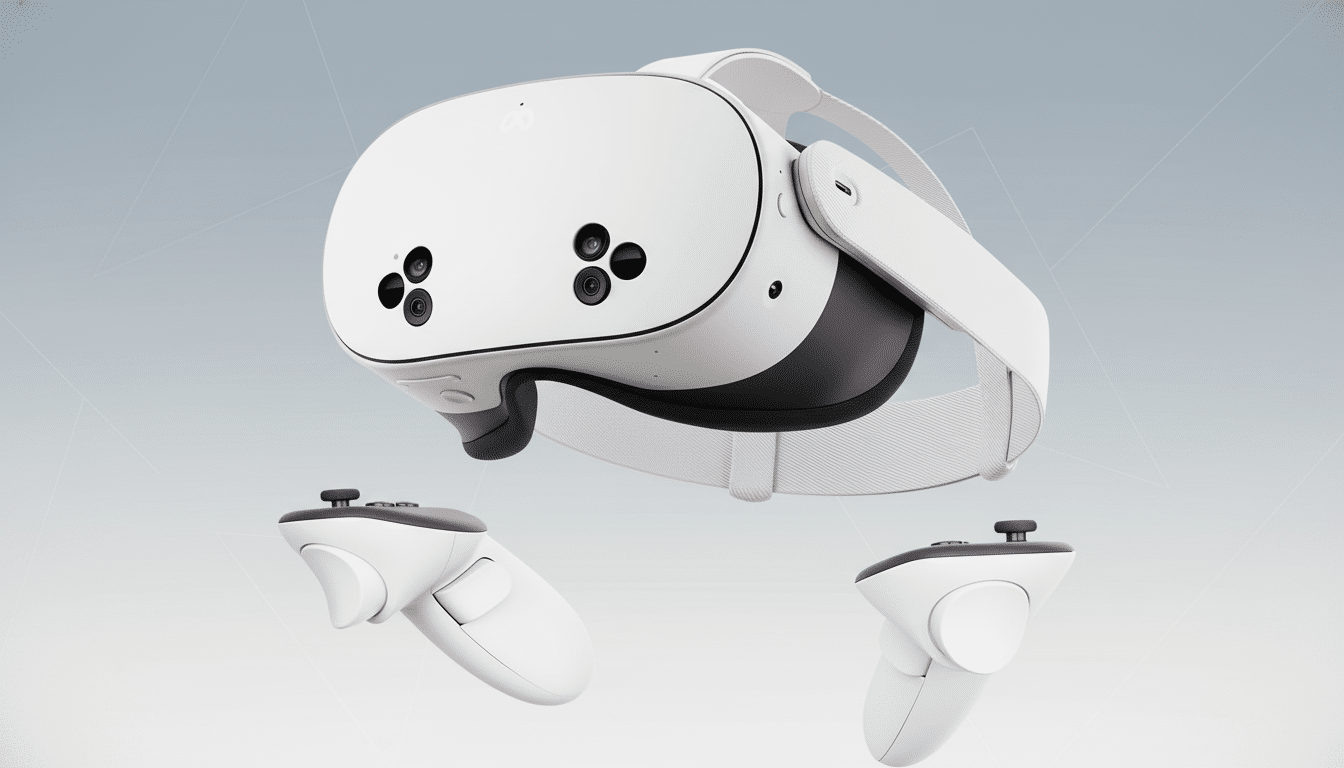

Dye will lead a new studio that combines hardware, software and AI design in one place and will report to Andrew Bosworth, Meta’s chief technology officer, who oversees Reality Labs, according to reporting by Bloomberg and people familiar with the matter. The remit is wide: to make Meta’s devices simpler, more powerful and unmistakably Meta, from Quest headsets running Horizon OS to Ray-Ban Meta glasses powered by on-device and cloud AI.

Why This Hire Matters for Meta’s Headsets and Glasses

Meta already has the largest unit share in the consumer mixed-reality market, with industry trackers such as IDC consistently estimating the company at more than 50% in recent years. But leadership is precarious when the category is still being defined. Google and Samsung have joined forces on Android XR and are pulling partners onto the platform, an effort to create pressure at the high end while crowding the mid-range with more options.

Dye’s mandate is to unify and optimize the experience across all devices so they feel consistent (but not necessarily identical), intuitive and enjoyable out of the box. That could mean clearer onboarding, discoverable gestures and interfaces that tell you what they do without needing a long tutorial. The role also sets the standard for how Meta blends AI helpers into people’s everyday lives (picture quick translations, relevant notifications and effortless hand-and-voice functions) without being in your face about it.

What Dye Brings From Apple to Meta’s Design Effort

At Apple, Dye’s work shaped human interface design over a transformational decade, including the debut of modern iOS and watchOS versions as well as visionOS with the company’s first spatial computer. Bloomberg has credited his team’s recent work for the new Liquid Glass direction, with its attention to materials, motion, depth and lighting that makes on-screen elements feel not just tactile but also calm.

That background is crucial when it comes to wearables, a domain where comfort and clarity are not optional. Spatial interfaces have to strike a balance between information density and legibility while taking battery and thermal constraints seriously, not to mention real-world ergonomics. Dye’s portfolio suggests he knows how to strike that balance at massive scale, translating design systems into production software millions of people can use without friction.

The Competitive Stakes for Meta in the Android XR Race

Meta’s Quest line runs Horizon OS, an Android-based fork optimized for spatial experiences. Google and Samsung, meanwhile, are driving a more formal Android XR platform with its own hardware roadmap, including headsets bearing the Galaxy badge, as well as an expanding partner ecosystem. The stakes are obvious: whoever defines the best practices for input, hand tracking and UI patterns will set the default in the category.

Meta also faces its own set of smart glasses challenges. According to Meta, the newest Ray-Ban models with the monocular display will not be available for purchase without an in-person demonstration, because they’re unlike anything most buyers have experienced before. That demo requirement underscores how daunting a first-time setup can be. A skilled design chief might be able to remove that friction and find ways to make features discoverable without gatekeeping access to them.

Key Design Priorities to Watch Across Meta Devices

Anticipate a more unified design system that brings together typography, motion and material cues across headsets and glasses. Spatial UI should also become more consistent from one app to another, with much better affordances for hand, gaze and voice. Meta has bet big on AI; a more humane design language would let features like telepresence feel useful without having to be an exact replica of reality, and get out of the way when they’re not needed.

Co-design of hardware and software will be critical. Expect lighter headsets that keep distant text legible, camera and sensor setups that yield more accurate hand tracking, and haptic touches so delicate you barely notice them as they confirm your movements. On the software side, look out for polished system gestures, faster setup flows and low-latency UI that maintains steady frame rates under heavy AI loads.

Meta’s Big Bets and the Bottom Line for Spatial Tech

According to the company’s filings, Meta’s Reality Labs has been taking multibillion-dollar losses each year to put together the stack for AR and VR. Bringing on one of the people who led Apple’s interfaces signals a next phase, one in which polish and product-market fit matter just as much as raw tech.

If Dye can weave Liquid Glass thinking into the fabric of Meta’s ecosystem, users should no longer need a demo to understand how to interact with its devices, and spatial computing should feel less like a site visit and more like second nature. That’s the very line between a niche and a platform.