Meta has paused access to its AI characters for teen accounts worldwide while it rebuilds the experience with tighter safeguards. The company signaled that a new version is in the works and said the pause applies to users who identify as teens and those it suspects are underage through age prediction technology.

The move amounts to a systemwide timeout for chatbots aimed at younger users. In a company note attributed to Instagram head Adam Mosseri and Meta’s AI leadership, Meta framed the step as a short-term interruption to prepare a more robust product rather than a retreat from the category. TechCrunch reported that the company is not abandoning AI characters.

Why Meta Is Rebooting Its Teen Bots for Safety

Teen safety is the pressure point. Large language models can produce persuasive, emotionally responsive dialog—and they can also veer into sensitive territory. Meta previously trained its characters to avoid engaging with minors on self-harm, suicide, disordered eating, and romantic content. But internal guardrails alone haven’t convinced critics that teen interactions are reliably safe under real-world conditions.

The wider industry has learned this the hard way. According to multiple news reports and court filings, Character.AI and Google recently settled lawsuits brought by parents alleging harmful chatbot interactions with minors. One case involved a bot roleplaying as a pop-culture persona that allegedly facilitated sexualized conversations with a 14-year-old. Character.AI subsequently shut down chats for users under 18, following a stinging assessment by online safety researchers.

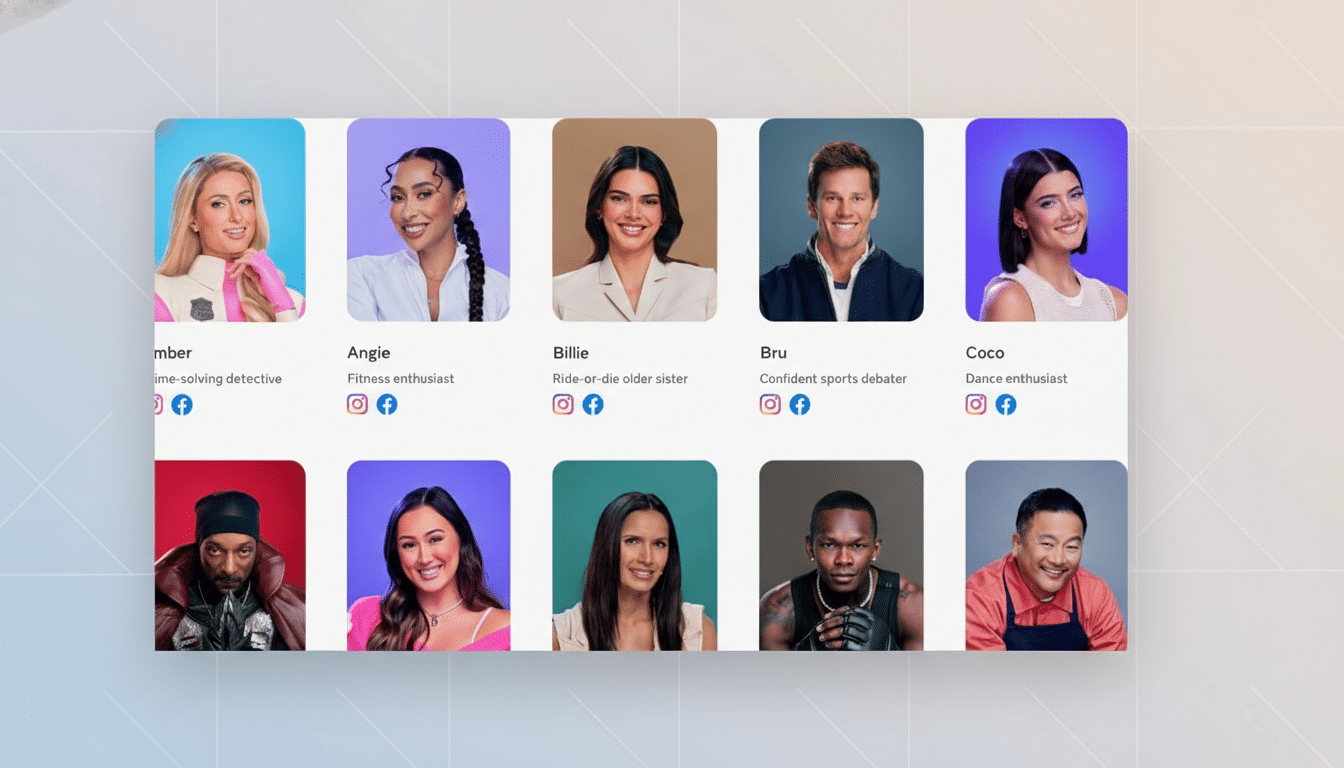

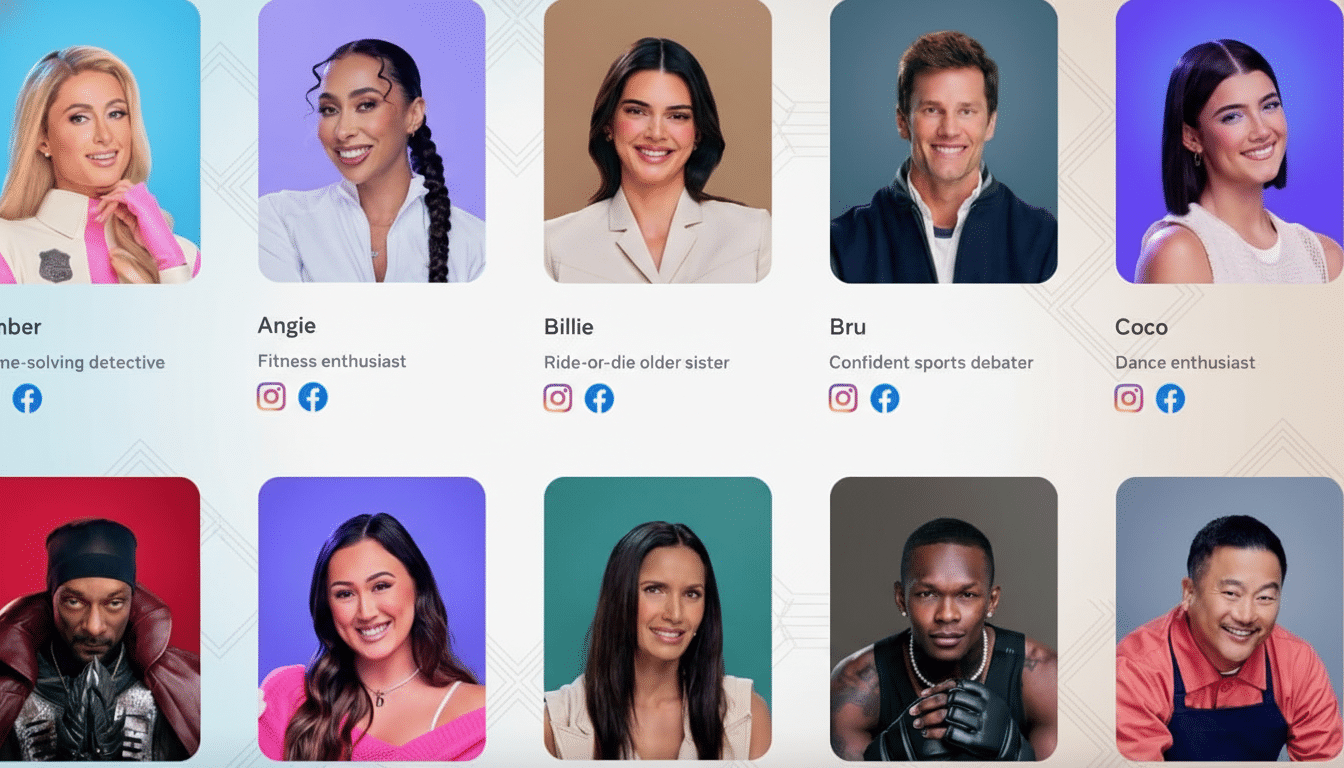

Meta has already had to recalibrate its character strategy more than once. It previously removed celebrity-inspired personas and took down character profiles after backlash over biased or inappropriate outputs. The company’s latest pause signals that incremental tuning isn’t enough; a deeper rebuild is underway.

How the Pause Will Work Across Meta’s Apps Worldwide

Meta says teen access will be temporarily disabled across its apps until the upgraded experience is ready. The restriction applies to accounts that list a teen birthday and to users flagged by machine learning age estimation—technology that looks at signals like usage patterns to reduce reliance on self-declared ages.

Age prediction is designed to catch underage users who claim to be adults, but it comes with risks: false positives that lock out legitimate adults and false negatives that miss teens. Expect the revamp to focus on improving that classifier, along with stricter content filters, more robust safety prompts, and expanded testing with youth-safety experts before relaunch.
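To make the gating rule and its trade-offs concrete, here is a minimal Python sketch of how such a check could work. It is an illustration under stated assumptions, not Meta’s implementation: the `Account` fields, the `underage_score` classifier output, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

ADULT_AGE = 18
# Hypothetical cutoff: scores at or above this count as "suspected underage".
UNDERAGE_SCORE_THRESHOLD = 0.8


@dataclass
class Account:
    birth_date: date                        # self-declared birthday
    underage_score: Optional[float] = None  # hypothetical classifier output in [0, 1]


def declared_age(birth_date: date, today: date) -> int:
    """Age in whole years implied by the self-declared birthday."""
    years = today.year - birth_date.year
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1  # birthday has not happened yet this year
    return years


def characters_paused(account: Account, today: date) -> bool:
    """Pause if the account declares a teen birthday OR the classifier flags it."""
    if declared_age(account.birth_date, today) < ADULT_AGE:
        return True
    score = account.underage_score
    return score is not None and score >= UNDERAGE_SCORE_THRESHOLD


def error_rates(flagged: list[bool], is_teen: list[bool]) -> tuple[float, float]:
    """Return (false positive rate, false negative rate) for the classifier flags."""
    false_pos = sum(f and not t for f, t in zip(flagged, is_teen))
    false_neg = sum(t and not f for f, t in zip(flagged, is_teen))
    adults = sum(not t for t in is_teen)
    teens = sum(is_teen)
    fpr = false_pos / adults if adults else 0.0
    fnr = false_neg / teens if teens else 0.0
    return fpr, fnr
```

In this sketch, raising the threshold locks out fewer adults but misses more teens, and lowering it does the reverse. That is the tension any improved classifier would have to manage.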

The scale of the market underscores the stakes. A recent Common Sense Media survey found that more than half of teens aged 13–17 use AI companions at least monthly. With demand high, the challenge is delivering utility and entertainment without enabling roleplay that slips into self-harm coaching, sexual content, or manipulative relationships.

The Safety and Compliance Backdrop for Teen AI

Regulators are sharpening expectations. In the United States, the Federal Trade Commission has warned that AI products targeting minors must meet heightened standards under existing consumer protection rules. In Europe, the Digital Services Act compels large platforms to assess systemic risks to minors and mitigate them. The UK’s Online Safety Act pushes “safety by design,” including stricter controls for under-18s.

Practically, that means companies are moving toward teen-specific modes: more conservative models, narrower topic support, opt-in features with parental controls, and real-time safety classifiers that can halt conversations and surface help resources. The Family Online Safety Institute and other groups have urged platforms to build human escalation paths when automated systems detect potential harm.

Meta’s age-aware approach will likely evolve in that direction. Expect clearer disclosures, guardrails that adapt to the user’s age, and stronger blocking of romantic or sexual roleplay with minors. The company also needs better adversarial testing—“red teaming” across languages, slang, and edge cases—to prevent easy workarounds that teens routinely discover.

What to Watch Next as Meta Rebuilds Teen AI

Competitors face similar scrutiny. Snap, OpenAI partners, and indie companion apps have all grappled with teen safety complaints and rapid policy shifts. An industry consensus is forming around slower rollouts for youth features and measurable safety benchmarks before those features scale.

For Meta, the pause is a rare acknowledgment that conversational AI for teens demands more than content policies—it requires architecture tailored to adolescent vulnerabilities. The company hasn’t offered a timeline, but when its teen bots return, the real test will be whether the new design can sustain engaging conversations without crossing lines. Until then, teens using Meta’s platforms will have to wait while the characters learn some new rules.