Meta is positioning itself as the software layer that could make humanoid robots widely useful. Andrew Bosworth, its chief technology officer, has said the goal is for Meta to be the backbone on which other manufacturers build. Rather than manufacturing arms and actuators, Meta wants to license a robotics software stack, one that leans on its AI research, vast compute infrastructure, and track record of open tooling, in hopes of seeding a broad ecosystem.

The software bottleneck slowing useful humanoid robots

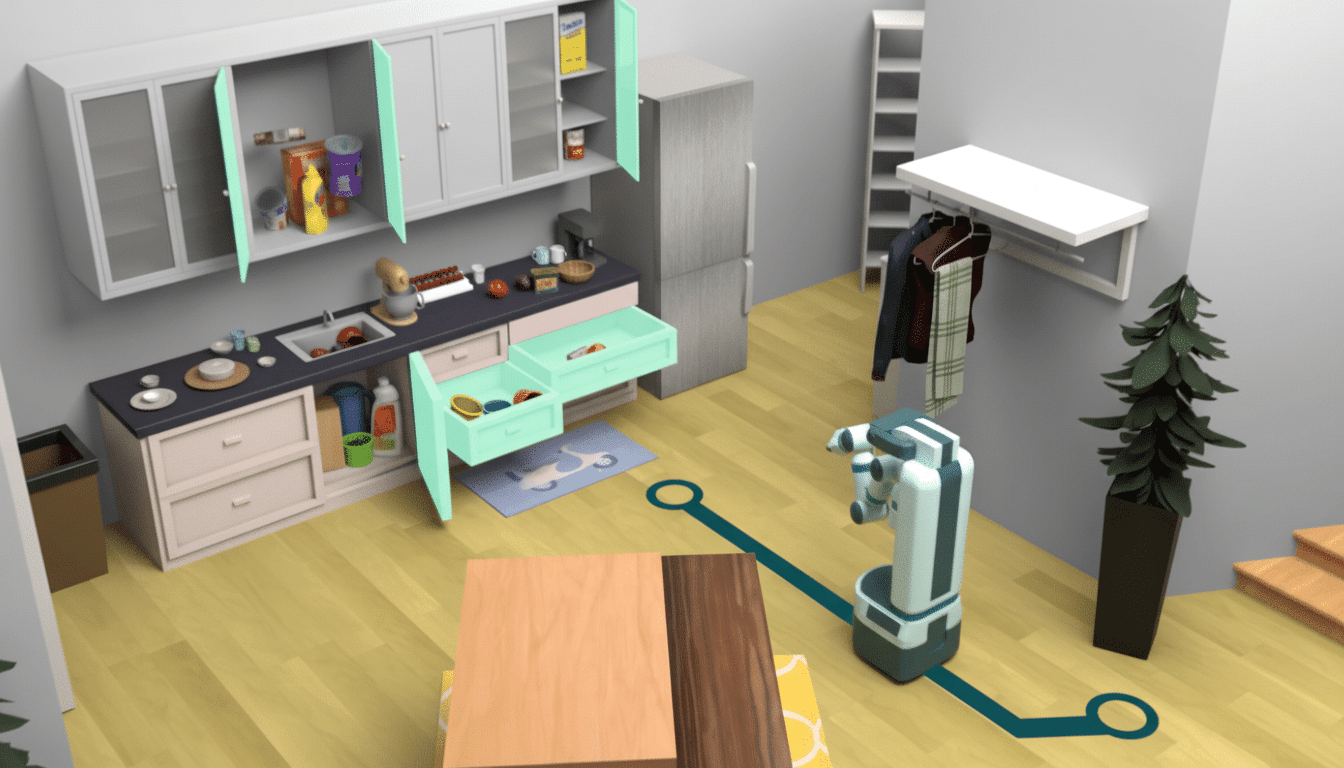

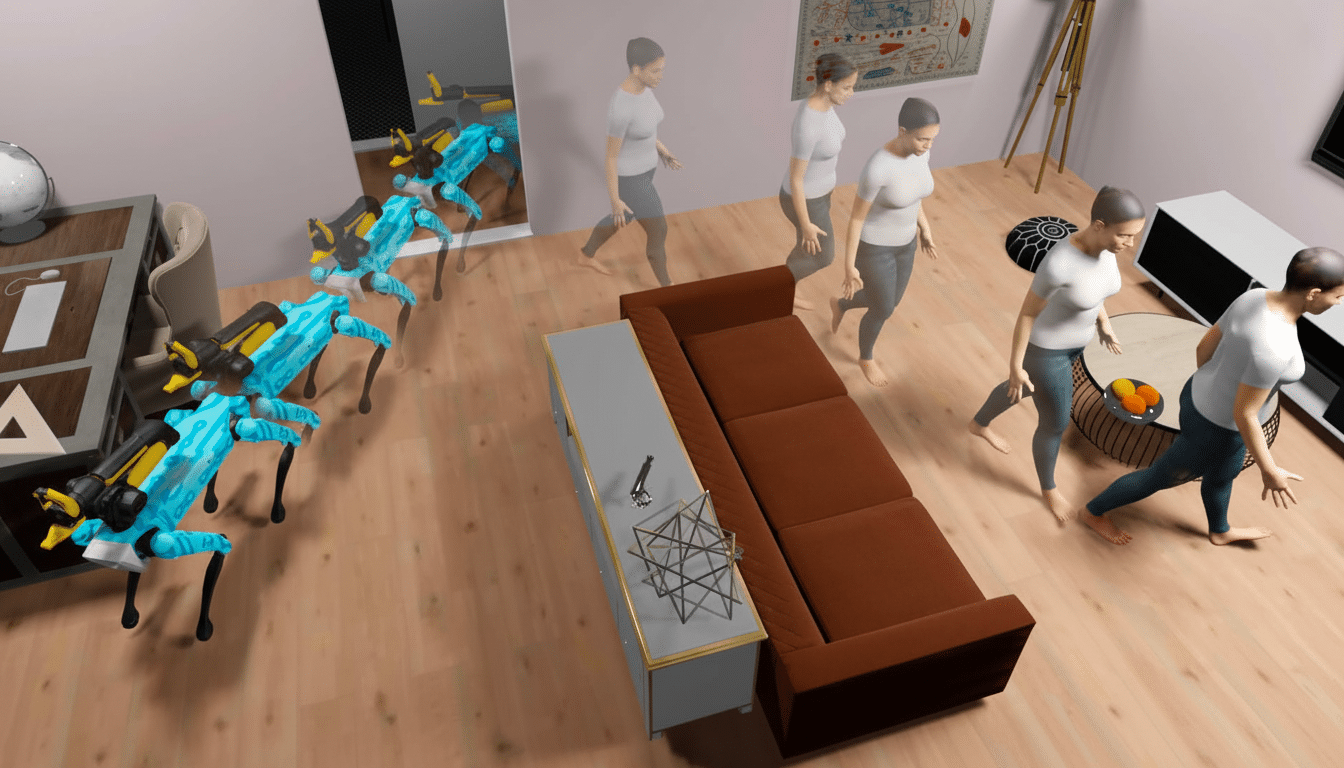

Designing a humanoid body is difficult, but making it perform everyday work is harder. The real bottleneck is software that can see the world, plan a course of action, and control dexterous hands without crushing a glass or dropping a key ring. That demands robust “world models” and rich simulations, so that policies trained in virtual environments transfer to real, messy homes and factories.

Meta’s AI teams have some credible building blocks here. FAIR created AI Habitat, a popular simulator for embodied AI (see the Perspective by Lake), and the company’s research on large multimodal models and egocentric datasets (including Ego4D) is aimed squarely at perception from human-like viewpoints. A common stack would also have to integrate those capabilities with low-latency control, tactile reasoning, and safety layers that can make decisions in the face of uncertainty.

An Android-style playbook, minus the phones

The strategy mimics Google’s Android playbook: dominate the software and let partners design, sell, and scale the hardware. Android runs on roughly seven in 10 smartphones worldwide by shipment share, according to major analyst firms, while Google’s own devices make up only a small sliver. Meta’s strategy would target robot makers ranging from startups to industrial incumbents, positioning its stack as the standard “OS” for humanoids.

If all goes according to plan, Meta wouldn’t have to sell robots. It would control the developer ecosystem, the data pipelines, and the upgrade loop, in which every deployment feeds back experience that improves the base model, which in turn lifts all boats. That compounding advantage is the flywheel that drives today’s best conversational and vision models.

What Meta’s robotics software stack should include

A credible backbone has to meet the robotics world where it is. That includes first-class support for ROS 2 and MoveIt, compatibility with Nvidia’s Isaac stack and Jetson modules at the edge, and connectors to popular simulators such as MuJoCo and Isaac Sim. It also requires a policy programming model designed for real-time constraints, not just cloud-scale batch jobs.

On-device inference will be pivotal. A humanoid can’t wait on the network to decide how much force to use when squeezing a carton. Expect a hybrid architecture: small controllers for reflexes and safety running locally, with heavier planning and self-improvement in the cloud. Meta’s recent foray into agentic AI and “world model” research matches that split, but turning lab demos into field-reliable stacks for transmission and control takes sustained, test-heavy engineering on latency, calibration, and fail-safes.
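To make the local/cloud split concrete, here is a minimal sketch of one tick of an on-device grip controller. It is an illustration under stated assumptions, not Meta's design: the names `Plan` and `local_grip_command`, the force limit, and the staleness bound are all hypothetical. The point it shows is that the local loop always produces a bounded command, and it falls back to a gentle default when the cloud plan is missing or stale.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical constants, chosen only for illustration.
MAX_GRIP_FORCE_N = 15.0  # hard safety ceiling enforced on the device
PLAN_MAX_AGE_S = 0.5     # cloud plans older than this are ignored


@dataclass
class Plan:
    """A target sent by a (hypothetical) cloud planner."""
    target_force_n: float
    issued_at_s: float


def local_grip_command(
    measured_force_n: float,
    now_s: float,
    plan: Optional[Plan],
    fallback_force_n: float = 2.0,
    gain: float = 0.5,
) -> float:
    """Return the next grip-force command without waiting on the network."""
    # Use the cloud target only if a plan exists and is fresh enough.
    fresh = plan is not None and (now_s - plan.issued_at_s) <= PLAN_MAX_AGE_S
    target = plan.target_force_n if fresh else fallback_force_n
    # The safety ceiling applies no matter what the planner asked for.
    target = min(target, MAX_GRIP_FORCE_N)
    # Simple proportional step toward the target.
    command = measured_force_n + gain * (target - measured_force_n)
    # Clamp the final command into the safe range.
    return max(0.0, min(command, MAX_GRIP_FORCE_N))
```

In a sketch like this, a network outage degrades behavior (the hand relaxes toward the fallback force) rather than stalling the control loop, which is the property the hybrid split is meant to provide.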

Data remains the moat. Teleoperated demonstrations, first-person video of human activities, traces of haptic interactions, and post-incident logs (data recorded after an event has taken place) should all be unified into a single training corpus. Meta has a history of coordinating distributed data and training pipelines, including PyTorch’s rapid rise and the company’s large-scale LLM work, which could carry over to a robotics platform if combined with serious evaluation benchmarks.
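One way to picture a unified corpus is a shared record type that every data source maps into. The sketch below is hypothetical throughout: the `Source` categories mirror the four data types named above, while `Record`, `unify`, and the field names are invented for illustration and do not describe any published Meta schema.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Dict, Iterable, List


class Source(Enum):
    """The four data sources named in the text (labels are hypothetical)."""
    TELEOP = "teleop"
    EGOCENTRIC_VIDEO = "egocentric_video"
    HAPTIC = "haptic"
    INCIDENT_LOG = "incident_log"


@dataclass
class Record:
    """A common envelope; the payload stays source-specific."""
    source: Source
    robot_id: str
    timestamp_s: float
    payload: Dict[str, Any] = field(default_factory=dict)


def unify(*streams: Iterable[Record]) -> List[Record]:
    """Merge several streams into one list ordered by robot, then time."""
    merged = [record for stream in streams for record in stream]
    merged.sort(key=lambda r: (r.robot_id, r.timestamp_s))
    return merged
```

Keeping the envelope small and the payload opaque is a design choice assumed here: it lets new sensor types join the corpus without changing the merge step.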

A crowded and increasingly competitive field

Meta won’t be alone. Nvidia is wooing robot makers with an end-to-end silicon and tools story, selling a vision of a future in which “general purpose” robots are a market worth tens of billions; its CEO has spoken publicly about the coming ChatGPT moment for robotics. Tesla is vertically integrating with Optimus, wagering that its autonomy know‑how transfers to factories. Startups like Figure, Agility, and Sanctuary are competing to prove real‑world utility and are forming partnerships with hyperscalers to streamline training.

The open-source community also matters.

- ROS 2 is used in thousands of labs and production pilots.

- Academic–industry collaborations such as Open X‑Embodiment have demonstrated that sharing demos across robots can improve generalization.

If Meta can be a generous, standards‑friendly contributor, much as it was in incubating PyTorch (now under the auspices of the Linux Foundation’s PyTorch Foundation), it has a way forward with roboticists who value openness and reproducibility.

Timelines, risks, and reality for Meta’s robotics plan

Even bullish insiders admit this is a long road. Industry reporting suggests that Meta’s platform may be years away from powering third‑party humanoids. That’s not surprising: besides deft manipulation, vendors need to solve for the uptime, battery density, and waterproofing that actual workplaces require, and to navigate a thicket of safety and liability standards that reach far beyond those of app stores.

Still, the prize is enormous. A successful backbone would give Meta long-term leverage in a market that has the potential to be as strategically important as the smartphone era. The company has already built up a large base of embodiment data and models for XR use cases. If it can unite that research with a practical, partner‑first platform, one that slots into existing toolchains and runs reliably on warehouse floors, Meta might shape how humanoids learn, grow, and work together.

Next proof points to monitor:

- A widely adopted public SDK.

- Reference robots from credible partners doing useful tasks in real environments without constant human supervision.

- Transparent evaluations that show progress in dexterity and safety.

Should those arrive, the idea of Meta as the spine of humanoid robots will have graduated from ambition to architecture.