Enterprises are racing to deploy AI agents alongside human teams, but the hard part isn’t spinning up a model; it’s deciding which work to hand it. The speed and cost savings are seductive, yet without management-grade decision rules, leaders risk doing the wrong things faster.

Across industries, a new consensus is forming: treat AI agents like an adjunct workforce and apply clear delegation tests before assigning them tasks. This echoes management scholars and practitioners who argue that deciding what to delegate is a managerial call, not a purely technical one.

- Why Delegation Requires Management Discipline

- Test 1: Using a Probability–Cost Fit to Guide Delegation

- Test 2: Balancing Task Risk and Reviewability Before Delegating

- Test 3: Ensuring Sufficient Context and Control for Agents

- Applying the Three Delegation Tests in Real-World Practice

- The Bottom Line: Treat AI Delegation as Managerial Rigor

Why Delegation Requires Management Discipline

AI can produce in minutes what takes humans hours, but its capability is uneven across task types. Ethan Mollick of the University of Pennsylvania notes that the real constraint is knowing what to ask for and how to verify the result. The Stanford HAI AI Index reports that model performance still varies widely by domain, with non-trivial hallucination rates on complex, open-ended prompts. In short, delegate to an agent as you would to junior staff: define outcomes, set constraints, and inspect the work.

Test 1: Using a Probability–Cost Fit to Guide Delegation

Start with expected value. Delegate when the AI’s probability of producing an acceptable draft, multiplied by the value of that outcome, outweighs the full cost of using it (prompting, reviewing, revising, and any rework), and when that net result beats the human baseline for the same task.
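
A minimal sketch of this test, under stated assumptions: if an acceptable outcome is worth the same whichever path produces it, the value term cancels and the comparison reduces to expected cost. The sketch assumes every draft is prompted and reviewed, and that an unacceptable draft (probability 1 − p) adds a fixed amount of rework. `TaskCosts`, its fields, and the example minutes are hypothetical, not measured figures.

```python
from dataclasses import dataclass


@dataclass
class TaskCosts:
    """Minutes of human effort per task (illustrative figures, not measurements)."""
    human_baseline: float  # a person doing the task unaided
    prompting: float       # briefing the agent
    review: float          # checking every draft
    rework: float          # extra effort when a draft is unacceptable


def expected_agent_cost(c: TaskCosts, p_success: float) -> float:
    """Expected minutes on the agent path: always prompt and review, rework with probability 1 - p."""
    return c.prompting + c.review + (1.0 - p_success) * c.rework


def break_even_probability(c: TaskCosts) -> float:
    """Lowest success probability at which the agent path costs no more than the human baseline."""
    slack = c.human_baseline - c.prompting - c.review
    if slack <= 0:
        return float("inf")  # prompting and review alone exceed the baseline: no p makes it pay
    if c.rework == 0:
        return 0.0  # failures cost nothing extra, so any success rate breaks even
    return max(0.0, 1.0 - slack / c.rework)


# Hypothetical 2-hour task: 5 minutes of prompting (assumed), 30 minutes of review,
# and failed drafts redone from scratch by a person.
costs = TaskCosts(human_baseline=120, prompting=5, review=30, rework=120)
for p in (0.5, 0.9):
    print(f"p={p}: {expected_agent_cost(costs, p):.0f} min vs {costs.human_baseline:.0f} min by hand")
print(f"break-even p = {break_even_probability(costs):.2f}")
```

With these assumed numbers the break-even probability is (5 + 30) / 120, roughly 0.29, which looks generous. The catch is the assumption that review reliably catches every failed draft; when it does not, the real cost of a miss is much higher than the rework term suggests.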

As a rule of thumb, if a task takes a human 2 hours, an AI can draft it in minutes, and review takes 15–30 minutes, delegation pays off when the odds of success are high and revision cycles are short. The evidence supports the upside, with caveats: in a field study of Boston Consulting Group consultants by researchers from Harvard, MIT, and Wharton, consultants using a large language model completed more tasks, faster, and at higher quality on creative ideation work, yet performed worse on an analytical task that fell outside the model’s capability “frontier.” McKinsey estimates that generative AI, combined with other technologies, could automate work activities that absorb 60–70% of employees’ time, but whether that potential pays off depends on this probability–cost balance, task by task.

Test 2: Balancing Task Risk and Reviewability Before Delegating

Delegate when the consequences of a mistake are low and the output is easy to check. For high-stakes tasks such as regulatory filings, financial disclosures, or medical advice, keep humans in the lead or design rigorous human-in-the-loop controls. The NIST AI Risk Management Framework lists validity, reliability, and transparency among the characteristics of trustworthy AI; your delegation threshold should tighten as impact risk rises.
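
One way to make a tightening threshold explicit is a small lookup from risk tier and reviewability to an oversight mode. The tiers, the three mode names, and the ceilings below are hypothetical choices for illustration, not values taken from the NIST framework.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1     # mistakes are cheap and reversible
    MEDIUM = 2
    HIGH = 3    # e.g. regulatory filings, financial disclosures, medical advice


class Reviewability(IntEnum):
    EASY = 1      # objective ground truth, quick to check
    MODERATE = 2
    HARD = 3      # subjective or novel judgment


# Hypothetical ceilings: the hardest-to-review work each risk tier tolerates per mode.
# Both ceilings drop as risk rises, so the bar for delegation tightens.
DELEGATE_CEILING = {Risk.LOW: Reviewability.MODERATE, Risk.MEDIUM: Reviewability.EASY}
HITL_CEILING = {
    Risk.LOW: Reviewability.HARD,
    Risk.MEDIUM: Reviewability.MODERATE,
    Risk.HIGH: Reviewability.EASY,
}


def delegation_mode(risk: Risk, review: Reviewability) -> str:
    """Return 'delegate', 'human_in_the_loop', or 'human_lead' for one task."""
    ceiling = DELEGATE_CEILING.get(risk)
    if ceiling is not None and review <= ceiling:
        return "delegate"           # agent completes the work; humans spot-check samples
    ceiling = HITL_CEILING.get(risk)
    if ceiling is not None and review <= ceiling:
        return "human_in_the_loop"  # agent drafts; a human approves every output
    return "human_lead"             # a human does the work; the agent assists at most


print(delegation_mode(Risk.HIGH, Reviewability.MODERATE))
```

Keeping the ceilings in data rather than in branching logic makes the policy easy to audit and to tighten later.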

Make verification explicit. Prefer tasks with objective ground truth (e.g., extracting fields from invoices, triaging support tickets) over subjective or novel judgments. The Stanford AI Index notes that even top-tier models can drift or confabulate under ambiguity, reinforcing the need for review gates and clear acceptance criteria.
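
For objective tasks like invoice extraction, acceptance criteria can be written as code that runs before any output is accepted. This sketch assumes a hypothetical `Invoice` schema produced by an extraction agent and routes any record that fails a check to a person. Passing the checks does not prove the extraction is correct (a wrong but internally consistent value would slip through), so sampled human review still applies.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass
class Invoice:
    """Fields an agent extracted from one invoice (hypothetical schema)."""
    invoice_number: str
    issue_date: str              # expected as ISO 8601, e.g. "2024-03-31"
    line_items: list[Decimal]
    total: Decimal


def acceptance_failures(inv: Invoice) -> list[str]:
    """Objective acceptance checks; an empty list means the record passes the gate."""
    failures = []
    if not inv.invoice_number.strip():
        failures.append("missing invoice number")
    try:
        date.fromisoformat(inv.issue_date)
    except ValueError:
        failures.append(f"unparseable issue date: {inv.issue_date!r}")
    if not inv.line_items:
        failures.append("no line items")
    elif sum(inv.line_items) != inv.total:
        # Decimal keeps currency arithmetic exact, so this comparison is safe
        failures.append(f"line items sum to {sum(inv.line_items)}, but total is {inv.total}")
    return failures


def route(inv: Invoice) -> str:
    """Pass clean records onward; send anything that fails a check to a human reviewer."""
    return "auto_accept" if not acceptance_failures(inv) else "human_review"


sample = Invoice("INV-1001", "2024-03-31", [Decimal("10.00"), Decimal("5.50")], Decimal("15.50"))
print(route(sample))
```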

Test 3: Ensuring Sufficient Context and Control for Agents

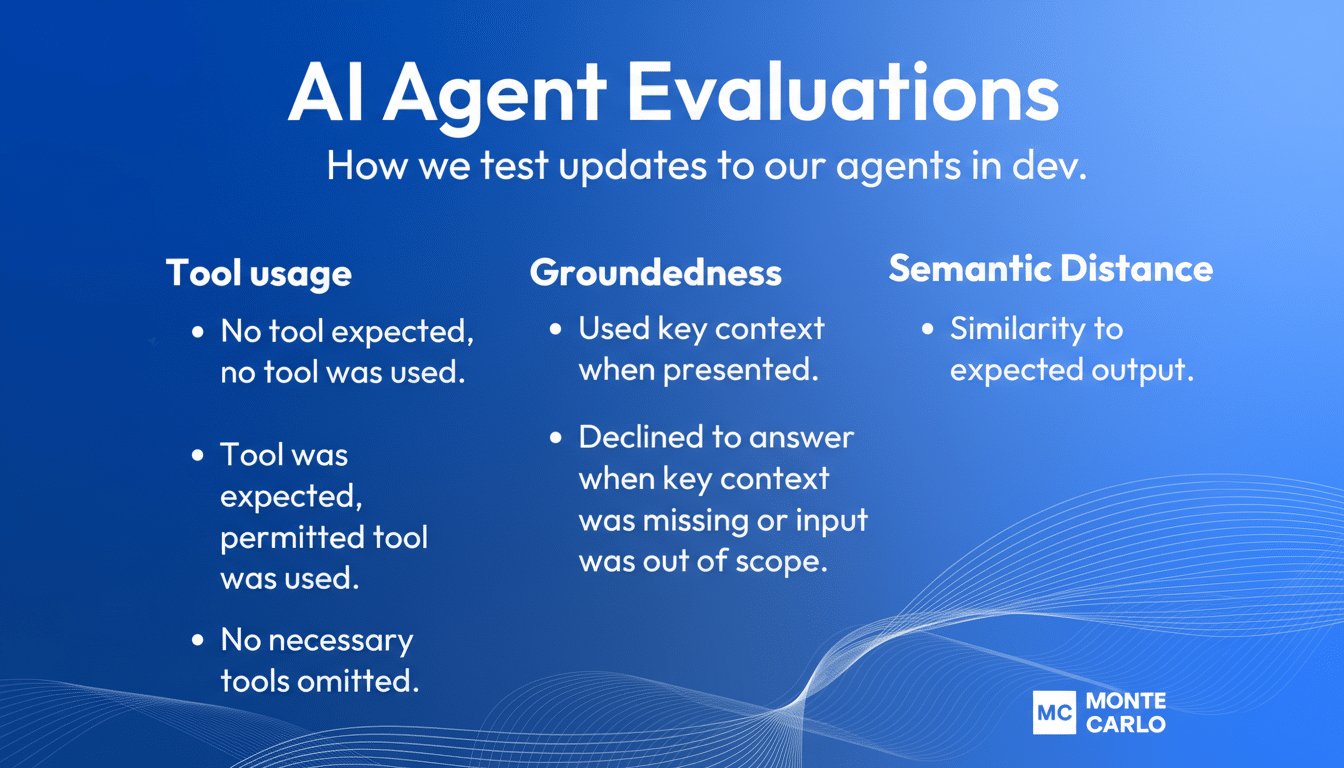

AI agents excel when you can supply the right context and guardrails. Delegate when the task can be grounded in authoritative data, policies, and tools, and when you can constrain behavior. Retrieval-augmented generation, function calling, and policy checks turn a general model into a domain apprentice.
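
A policy check can sit between the model’s proposed function call and its execution, and a grounding check can reject answers that cite nothing the retrieval step returned. The sketch below is framework-agnostic and hypothetical: `ToolCall`, the tool names, the refund limit, and the citation-ID convention are assumptions, not any particular vendor’s API.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ToolCall:
    """A function call the agent proposes (hypothetical structure)."""
    name: str
    args: dict[str, Any] = field(default_factory=dict)


# Hypothetical policy: the only tools this agent may call, with per-tool limits.
ALLOWED_TOOLS: dict[str, dict[str, float]] = {
    "search_knowledge_base": {},
    "issue_refund": {"max_amount": 100.0},
}


def check_tool_call(call: ToolCall) -> tuple[bool, str]:
    """Policy check run before executing any tool call; returns (allowed, reason)."""
    if call.name not in ALLOWED_TOOLS:
        return False, f"tool {call.name!r} is not on the allowlist"
    limits = ALLOWED_TOOLS[call.name]
    if "max_amount" in limits:
        amount = call.args.get("amount")
        # bool is a subclass of int in Python, so reject it explicitly
        if isinstance(amount, bool) or not isinstance(amount, (int, float)):
            return False, "amount must be a number"
        if not 0 < amount <= limits["max_amount"]:
            return False, f"amount {amount} is outside (0, {limits['max_amount']}]"
    return True, "ok"


def is_grounded(cited_ids: set[str], retrieved_ids: set[str]) -> bool:
    """Accept an answer only if it cites at least one source and every citation was retrieved."""
    return bool(cited_ids) and cited_ids <= retrieved_ids


print(check_tool_call(ToolCall("issue_refund", {"amount": 250})))
```

Denying by default (anything not on the allowlist is refused) keeps a misbehaving agent inside its guardrails even when it proposes a tool nobody anticipated.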

When the job relies on tacit knowledge, shifting requirements, or sensitive data that cannot be provisioned safely, hold back or redesign the workflow. Standards such as ISO/IEC 42001, which specifies requirements for an AI management system, together with sound data governance practices, help ensure that context stays current, access is controlled, and outputs are auditable.

Applying the Three Delegation Tests in Real-World Practice

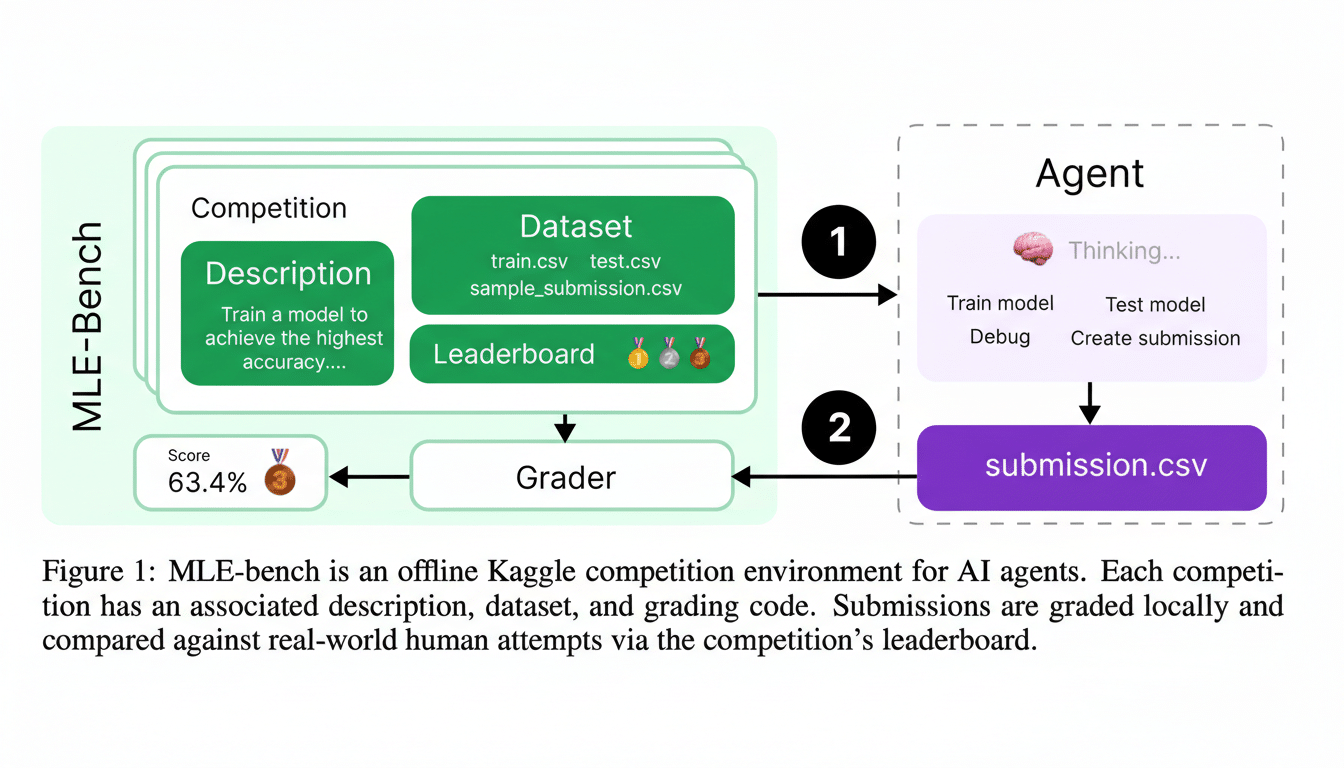

Run small pilots and measure. Define a human baseline for time, quality, and error rate; then track AI-assisted performance on the same metrics, plus the ones specific to delegation: time saved per task, review time, rework rate, escalation rate, and cost per output. A study of customer-support staff by Stanford and MIT researchers found that AI assistance raised productivity by 14% on average, with the largest gains among less-experienced reps, in work where risk is moderate and review is straightforward.
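
A pilot log can be reduced to these metrics with a few lines of code. The `PilotTask` fields, and the choice to fold model spend and labor into a single `cost` figure, are assumptions for illustration; the human baseline should come from comparable tasks measured before the pilot.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PilotTask:
    """One AI-assisted task from a pilot log (hypothetical fields)."""
    prompt_minutes: float
    review_minutes: float
    rework_minutes: float  # fixing the draft or resolving an escalation; 0 if accepted as-is
    escalated: bool        # handed to a human to resolve
    cost: float            # model spend plus labor cost for this output


def pilot_metrics(tasks: list[PilotTask], human_baseline_minutes: float) -> dict[str, float]:
    """Summarize a pilot against a human baseline measured on comparable tasks."""
    if not tasks:
        raise ValueError("no pilot tasks recorded")
    n = len(tasks)
    hands_on = mean(t.prompt_minutes + t.review_minutes + t.rework_minutes for t in tasks)
    return {
        "time_saved_per_task": human_baseline_minutes - hands_on,
        "review_minutes_per_task": mean(t.review_minutes for t in tasks),
        "rework_rate": sum(t.rework_minutes > 0 for t in tasks) / n,
        "escalation_rate": sum(t.escalated for t in tasks) / n,
        "cost_per_output": sum(t.cost for t in tasks) / n,
    }


# Two illustrative log entries, not real pilot data.
log = [PilotTask(5.0, 20.0, 0.0, False, 3.0), PilotTask(5.0, 25.0, 30.0, True, 6.0)]
print(pilot_metrics(log, human_baseline_minutes=120.0))
```

Counting rework and escalation time as hands-on time matters: a pilot that reports only drafting speed will overstate the savings.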

Consider contact centers: AI now drafts responses to routine questions, while humans resolve edge cases. The arrangement passes all three tests: a strong probability–cost fit, low risk with easy review, and rich context from knowledge bases. Marketing teams report similar wins by having AI produce first drafts of briefs or subject lines that humans refine, while legal and compliance teams keep final authority on anything binding.
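
The three tests can be combined into a single checklist that a team fills in per task type. This is a hypothetical sketch: the field names are assumptions, and the yes/no inputs stand in for judgments a team would document, not scores defined by any standard. The example numbers are illustrative placeholders.

```python
from dataclasses import dataclass


@dataclass
class DelegationAssessment:
    """A team's recorded answers for one task type (hypothetical fields)."""
    expected_agent_minutes: float  # prompting + review + expected rework
    human_baseline_minutes: float
    high_impact: bool              # e.g. binding legal or regulatory output
    easy_to_verify: bool           # objective ground truth or quick review
    grounded_in_sources: bool      # knowledge base, policies, and tools available
    guardrails_in_place: bool      # allowlisted actions, policy checks, audit logs


def passes_three_tests(a: DelegationAssessment) -> dict[str, bool]:
    """Evaluate each delegation test separately so failures are visible."""
    return {
        "probability_cost_fit": a.expected_agent_minutes < a.human_baseline_minutes,
        "risk_and_reviewability": (not a.high_impact) and a.easy_to_verify,
        "context_and_control": a.grounded_in_sources and a.guardrails_in_place,
    }


def should_delegate(a: DelegationAssessment) -> bool:
    """Delegate only when every test passes."""
    return all(passes_three_tests(a).values())


# Illustrative inputs only: routine support replies vs. binding contract language.
routine_reply = DelegationAssessment(4, 12, high_impact=False, easy_to_verify=True,
                                     grounded_in_sources=True, guardrails_in_place=True)
contract_clause = DelegationAssessment(30, 90, high_impact=True, easy_to_verify=False,
                                       grounded_in_sources=True, guardrails_in_place=True)
print(should_delegate(routine_reply), should_delegate(contract_clause))
```

Requiring all three tests to pass is the strictest reading; a team might instead treat a failed risk test as a signal to add human-in-the-loop review rather than to stop using the agent altogether.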

The Bottom Line: Treat AI Delegation as Managerial Rigor

Delegating to AI agents isn’t about novelty; it’s about managerial rigor. Use the three tests: probability–cost fit to confirm there is a time and quality payoff, risk and reviewability to keep failures safe and fixable, and context and control to ensure the system knows enough and stays within its guardrails. Leaders who operationalize these checks will capture the gains and avoid the traps, turning AI agents into reliable teammates rather than unpredictable shortcuts.