Luma has released Ray3 Modify, an AI video model that lets creators generate a shot between the start and end frames of a clip while keeping the human performance recorded in-camera intact. The release gives editors and studios more creative control by combining frame-accurate direction with character-aware transformation, so productions can replace clothing items or sets without reshooting.

How Ray3 Modify Works to Preserve Performance and Control

At its heart, Ray3 Modify treats the live-action performance as the source of truth. Luma says the model preserves motion, timing, eyeline, and emotional delivery while applying transformations drawn from character reference imagery. That means a performer's identity and continuity (same hat, wardrobe, makeup) can be maintained consistently across shots even as the look of the scene changes.

The headline feature is start–end frame conditioning. Creators can feed the model two reference frames and ask it to produce the connective sequence that transitions between them, essentially keyframing at the level of an individual shot. In practice, this could mean directing a character from a neutral position to a predetermined mark, turning a day plate into a painted, stylized night, or blending practical footage with CG elements while locking off an actor's performance.
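To make the inputs concrete, here is a minimal Python sketch of what a start–end conditioned shot might look like as data. It is purely illustrative: `ShotSpec`, its field names, and the file names are hypothetical and are not Luma's API or Dream Machine's actual request format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class ShotSpec:
    """Hypothetical description of one start-end conditioned shot (not Luma's API)."""
    performance_clip: str   # captured live-action footage whose performance is preserved
    start_frame: str        # reference image the shot should begin on
    end_frame: str          # reference image the shot should end on
    character_refs: List[str] = field(default_factory=list)  # optional look references

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the spec is usable."""
        problems = []
        if not self.performance_clip:
            problems.append("performance clip is required")
        if not self.start_frame or not self.end_frame:
            problems.append("both start and end frames are required")
        elif self.start_frame == self.end_frame:
            problems.append("start and end frames are identical; nothing to transition")
        return problems


# Example: a day-to-night transition over a single captured walk (assumed file names).
day_to_night = ShotSpec(
    performance_clip="walk_take3.mov",
    start_frame="plate_day.png",
    end_frame="plate_night_stylized.png",
    character_refs=["lead_wardrobe.png"],
)
print(day_to_night.validate())
```

The point of the sketch is the shape of the request: one captured performance, two bounding frames, and optional character references, with the model left to fill in the frames between.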

Luma is offering Ray3 Modify on its Dream Machine platform, which already hosts video processing workflows. The company frames the new model as a control-first upgrade for teams that want AI-level speed while preserving directorial intent.

Why Start and End Frame Control Matters in Production

Generative video has advanced fast, but creative teams have been frustrated by its unreliability: slight prompt changes swing the composition, faces drift between frames, and timing can feel synthetic.

By anchoring generation to two fixed keyframes and a captured performance, Ray3 Modify brings the language of editorial keyframes to AI video and reduces guesswork around continuity.

This structure mirrors how productions organize shoots. Directors and editors think in beats and transitions. With Ray3 Modify, they can mark those beats visually (start here, end there) and leave the model to interpolate in between. It is most useful for transitions, scene matches, blocking changes, and smoothing the handoff between live-action and enhanced footage.

Implications for Studios and Creators Using Ray3 Modify

For commercial or branded content, the ability to keep a performance while changing the location addresses an expensive edge case: the creative is right, but the location isn't. Instead of rebooking talent and crew, teams can reimagine the environment through AI while keeping the approved performance exactly as clients signed off on it. Independent creators get the same advantage on smaller budgets, using reference frames to lock in continuity between shots captured on different days or with different cameras.

Rights and workflow issues remain. Studios will still need explicit consent for identity and likeness usage, along with solid versioning as scenes are adjusted. While SAG-AFTRA and similar groups focus on consent and compensation for digital replicas, technical standards from bodies such as the C2PA and SMPTE are evolving to support provenance signals and watermarks in AI-assisted media.

Competitive Landscape and the Supporting Infrastructure

Luma is one of many deep-pocketed entrants in AI video, competing with well-funded players such as Runway and Kuaishou's Kling, as well as research-led systems that have demonstrated long-form generation. Many generic tools offer little more than text prompts, basic camera controls, or style transfer that runs on a phone. What sets Ray3 Modify apart is that its workflow, built on start–end frame guidance and support for up to nine characters with no performance loss, is aimed squarely at production, combining character awareness, usability, and reliability.

The launch comes on the heels of Luma's $900 million funding round, led by Humain (an AI company backed by Saudi Arabia's Public Investment Fund) with participation from a16z, Amplify Partners, and Matrix Ventures. Luma and Humain are also behind a 2GW AI compute cluster in Saudi Arabia, a hint at the scale needed to train and serve video models, which require high-throughput GPUs to process multi-second 1080p clips and support quick iteration in post pipelines.

Early Use Cases for Ray3 Modify and Important Caveats

Expect to see Ray3 Modify in previz, pick-up "reshoots" without the set, cross-platform ad localization, and stylized music videos.

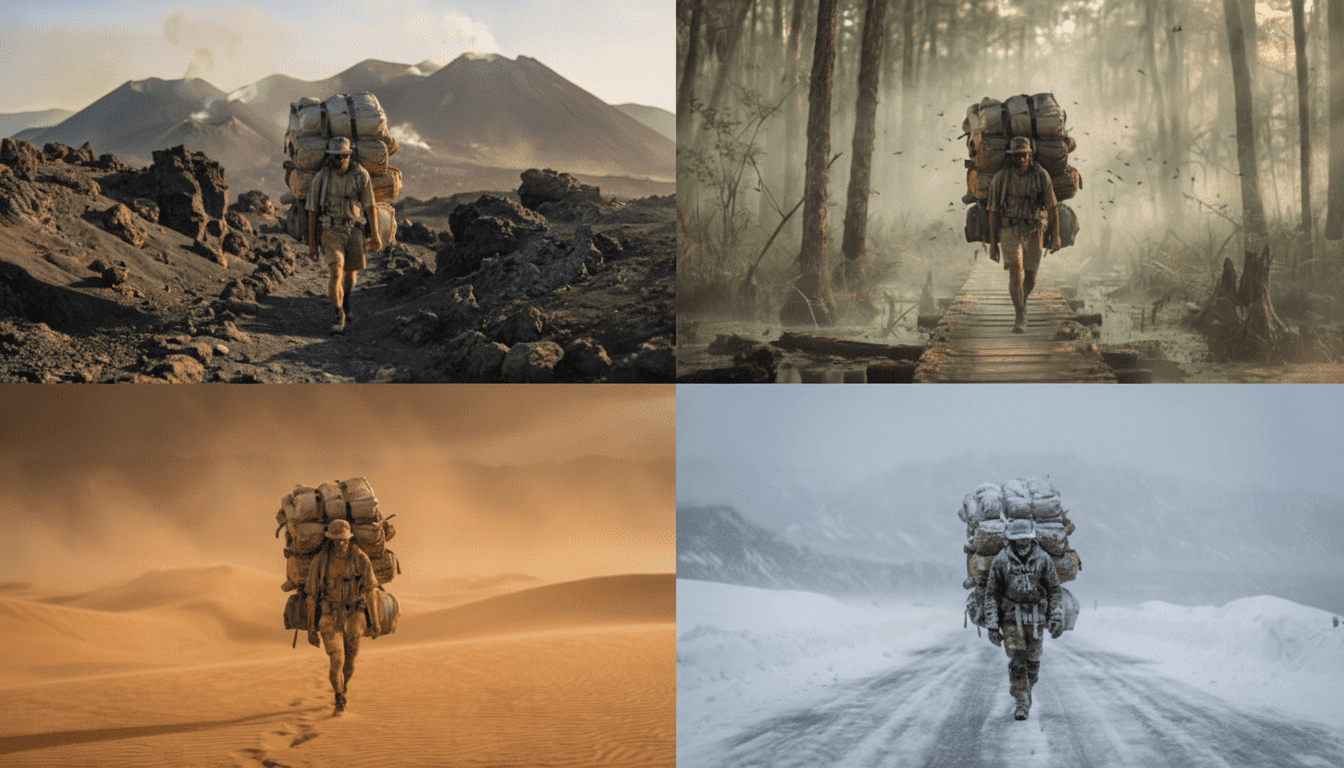

One practical example: a fashion brand might shoot a model walking once, then generate location variations (studio, city street, sci-fi corridor) by swapping references while retaining the walk cycle and eyeline.

Like all generative systems, Ray3 Modify has limitations. Fast motion, large occlusions, or complex handheld camera movement may make temporal consistency hard to maintain. Character identity can still drift in outlier cases, and photoreal composites still require precise grading and VFX compositing. For most teams, however, start–end frame conditioning combined with performance preservation should reduce retakes and make AI a more dependable collaborator rather than a wild card.

If the model delivers as promised, Ray3 Modify nudges AI video into the grammar of modern production: block the shot, set down beats, protect performance, and let the machine handle the in-between. That’s a significant move away from prompt-led novelty and toward director-led craft.