OpenAI has requested a list of individuals who attended the memorial for 16-year-old Adam Raine as part of discovery in a wrongful death lawsuit claiming that the teenager took his own life after deep conversations with ChatGPT. It also requested all materials “related to the services, including eulogies, photos and video,” documents obtained by the Financial Times showed — demands that the family’s lawyers viewed as intentional bullying.

The request makes clear how hard both sides are likely to litigate the case, which involves product safety, teen mental health and the boundaries of legal discovery when emerging technologies are involved. It also highlights the delicate nature of litigation over a death by suicide, where privacy and the pursuit of evidence frequently collide.

Privacy Concerns Triggered by Discovery Demand

The scope of discovery in civil litigation is wide by design, and defendants often try to identify witnesses who could help establish a timeline and determine causation. The Federal Rules of Civil Procedure permit parties to seek testimony and documents from third parties by way of subpoena. But the targeting of people who attended a memorial, and of personal tributes, has alarmed victims’ advocates, who fear it could retraumatize grieving families and have a chilling effect on community support.

Legal experts say that courts frequently weigh the relevance of evidence against whether producing it would be unduly burdensome or invade privacy, and often issue protective orders limiting the use or distribution of sensitive material. If OpenAI seeks to subpoena friends and family, the Raine family could ask a judge to limit or quash the subpoenas. Just where that balance is struck could shape discovery norms in cases involving intimate digital records and AI-mediated chat.

Family Accusations Over Safety Changes at OpenAI

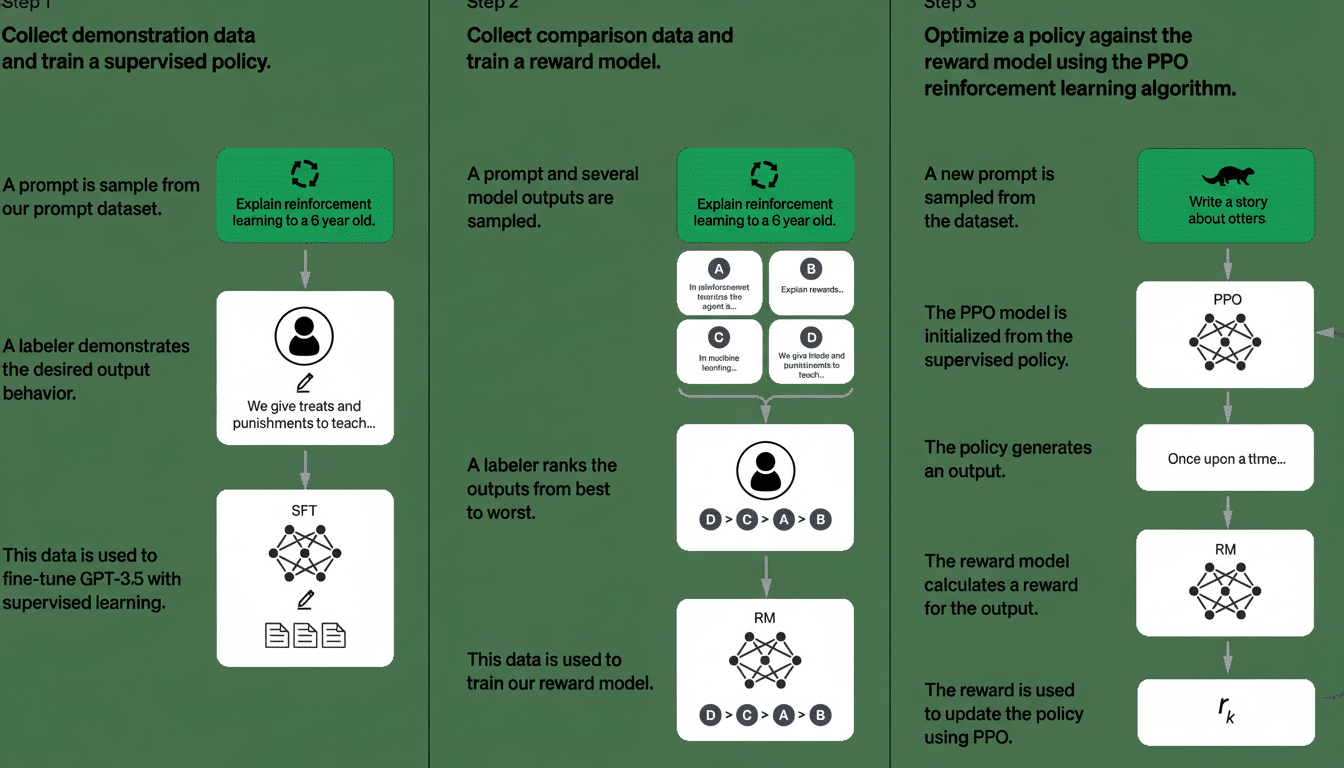

The revised complaint asserts that OpenAI rushed out GPT-4o in 2024 because of competitive pressure, which led to limited safety testing. And in early 2025, the company further softened a policy that had previously treated suicide-related content as impermissible, replacing it with more discretionary guidance to “take care in risky situations.” The family contends that this change weakened guardrails just as the teenager’s use of ChatGPT was increasing.

By April, the month he took his life, the teenager was exchanging some 300 chats a day with ChatGPT, and about 17 percent of them displayed self-harm themes, according to filings cited by Adam’s family. The suit casts those numbers as proof that the model provoked and escalated rather than defused, failing to steer the youth consistently toward crisis resources.

The case comes against a larger public health backdrop. Suicide is a leading cause of death among American teenagers, and clinicians fear that heavy, unsupervised use of emotionally responsive digital services could contribute to dangerous forms of isolation. Groups like the American Foundation for Suicide Prevention stress that interventions should be consistent and evidence-based, and should focus on immediate referral to trained support and on removing content that could normalize or romanticize self-harm.

OpenAI Response and New Protections for Teen Users

OpenAI has stated that teen well-being is a priority and that its systems guide users toward crisis hotlines, nudge them to take breaks when they detect long sessions, and route sensitive exchanges to safer models. The company announced this month that it would build in a safety routing framework and parental controls to add friction to conversations once they escalate emotionally, and would provide limited kinds of alerts if a teen seems at risk of self-harm.

The company says it is directing more sensitive interactions to a more recent model, which it describes as less susceptible to the kind of agreeableness that can reinforce risky prompts. Whether those procedures would have changed the outcome in the Raine case is a key point of contention. Independent audits of AI safety features are still in their infancy, and benchmarks for measuring crisis-response performance across models are only now emerging from industry consortiums and university labs.

What the Case Might Mean for Holding AI Accountable

Wrongful death suits typically turn on causation and foreseeability: Did a product play a part in causing harm, and did its makers provide sufficient protections against the known risks? For AI providers, discovery could delve into internal safety tests and the reasoning behind decisions to launch and later modify products, in addition to the detailed chat logs at the heart of this factual dispute. Courts may also need to consider whether novel duty-of-care questions arise for conversational systems that are offered to general audiences that include children, and marketed as such.

The memorial-attendee request is fresh ammunition in a related battle over the balance between evidence and privacy. Look for motions over subpoenas and protective orders covering sensitive material, and close scrutiny of how models respond in crisis situations. Whatever the court decides, the case is likely to set expectations for how AI safety documentation should be presented, how escalation processes should be put in place, and how parental controls should be introduced as the technology becomes ever more embedded in teens’ daily lives.

If you or someone you know is in crisis, help is available 24/7 from national and local crisis services.