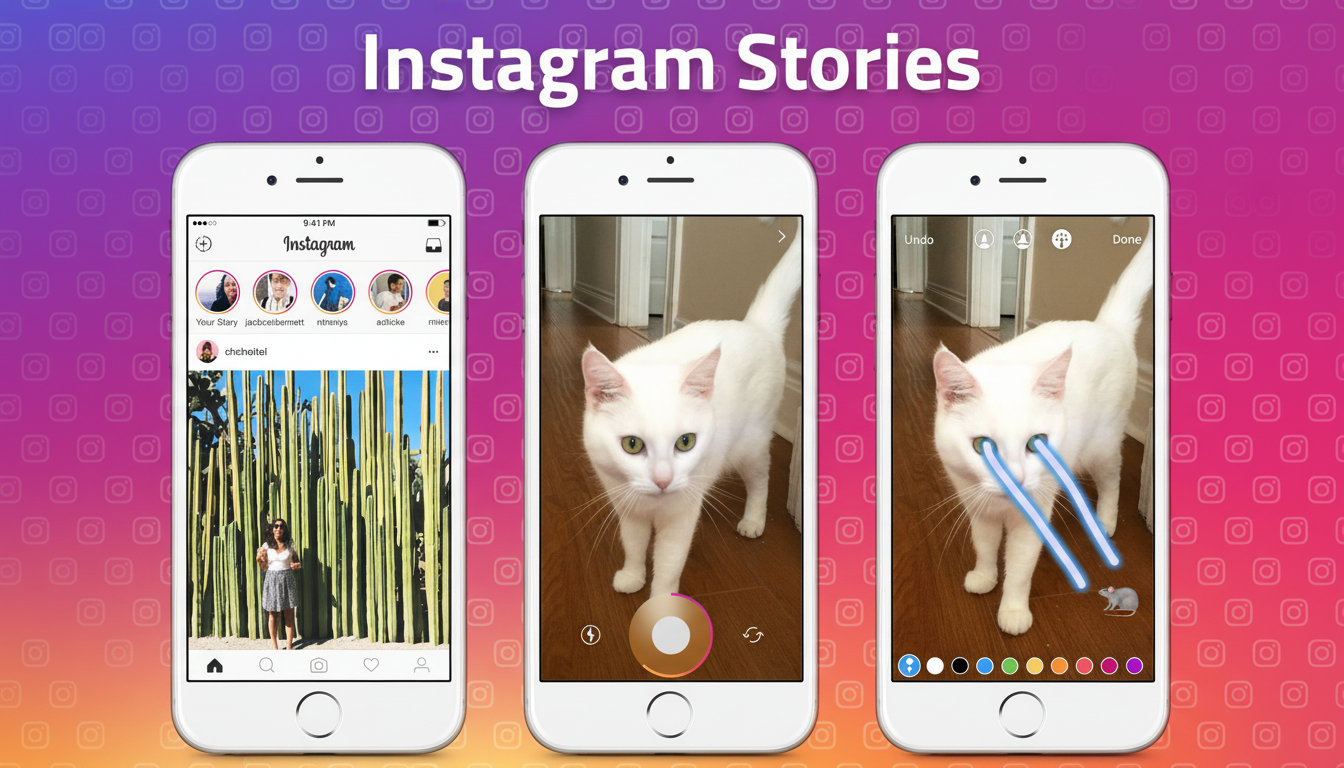

Instagram is bringing Meta’s generative AI editing directly into Stories. Users can describe the changes they want in plain-text prompts and see the results in seconds. The update moves powerful AI effects out of a chatbot and into a creative surface people already use, which makes it easier to edit photos and videos before posting them.

How AI Editing Works Within Instagram Stories

The tools live under the Restyle menu in the Stories composer. Tap the paintbrush icon, open Restyle, and type what you want to add, remove, or change. You might ask it to change your hair color, put a crown on your head, swap in a sunset background, or remove a distracting object. For videos, you can add effects such as simulated snow or fire.

Alongside custom prompts, Restyle offers preset styles that change clothing or the overall look of a shot. A preset might add sunglasses and a biker jacket, for example, or give an outfit a watercolor look. Because these features are built into the capture flow, creators can iterate quickly without exporting their media to other apps.

Why It Matters for Creators and Brands on Instagram

Stories is one of Instagram’s most heavily used surfaces. Instagram has said more than 500 million accounts use Stories every day, so bringing AI editing to that canvas could change how often people post and what their posts look like. It also lowers the cost of experimentation for creators and social media teams: a single photo can be restyled into several on-brand variants in minutes for A/B testing.

The feature also puts within everyone’s reach effects that used to require professional tools, such as object removal, color grading, and atmospheric effects. That can mean faster turnarounds for product teasers, behind-the-scenes reels, and limited-time promotions, especially for small businesses that operate almost entirely within Instagram’s ecosystem.

Safety Labels and Data Use with Meta AI on Instagram

Using Meta AI in Instagram brings Meta’s AI Terms of Service into effect. Those terms allow uploaded media, including facial features, to be analyzed so the system can summarize its content, alter images, and generate new material based on what it detects. In practice, the system examines your photo or video to identify what objects are present and where they are before it applies any edits.

According to Meta, AI-edited content is labeled in the app and carries embedded metadata showing that it was altered with AI. This approach follows emerging provenance standards such as those from the Coalition for Content Provenance and Authenticity (C2PA). For families, Meta has added parental controls that let parents block their teens’ chats with AI characters and see the general categories of topics teens discuss with the Meta AI assistant.

Part of a Bigger AI Push Across Meta Platforms

The Stories integration is the latest in a steady stream of AI features across Meta’s platforms. These already include a “Write with Meta AI” prompt that helps you draft comments, as well as Vibes, an AI-generated video feed inside the Meta AI app. According to Similarweb, daily active users of the Meta AI app on iOS and Android rose from about 775,000 to about 2.7 million over four weeks. That jump suggests lightweight, playful formats can drive adoption.

The move also reflects a broader industry trend. Snapchat is doubling down on AI lenses, TikTok is promoting generative tools for advertisers, and Google and Adobe are making consumer-grade generative edits routine. Embedding these edits in Instagram’s high-frequency posting flow could help Meta stay competitive while collecting real-world usage data to improve its models.

Early Tips and Known Limitations for Instagram Stories

Good prompts are specific and refer to things visible in the frame (“put a gold crown on my head,” “change the sky to a purple sunset behind that palm tree”). Complex requests involving overlapping objects or reflections can produce visual artifacts, particularly in fast-moving video. Shorter clips tend to produce more reliable results. It’s also wise to preview edits full-screen before publishing, since a label will tell viewers that AI was involved.

For creators, the practical playbook is simple: draft a Story, try two or three Restyle options, and publish the version that gets the best response. For brands, it makes sense to build a library of prompts that follows the brand’s visual guidelines, including its color palettes, textures, and accessories. Teams can then reproduce on-brand effects quickly.

With AI built into Stories, Instagram can turn the editing step into a creative prompt in its own right. That prompt may nudge people to post more often, raise the production quality of their posts, and set a new baseline for what “unpolished” video looks like on social media.