Instagram chief Adam Mosseri argues that the smartest way to keep social feeds trustworthy is to label authentic content at the moment it is created, rather than playing whack-a-mole with every piece of AI-fabricated media after it has already been shared. In a recent exchange on Threads, he wrote that cryptographic “fingerprints” applied by cameras and apps could prove that content is authentic along a digital chain of custody as it moves from capture through editing to sharing.

The stance is the latest sign of a broad consensus among technologists and news leaders that detection technology alone won’t solve misinformation as AI-generated imagery and audio grow more realistic. If provenance can be maintained from shutter to screen, Mosseri argues, platforms can help people decide what to trust instead of leaving them to guess.

Why It Might Be Better to Label Reality Than Chase Fakes

AI detectors are locked in an arms race with the generators they are trying to catch.

Watermarks can be erased, metadata is routinely stripped when files are resaved, and open-source models iterate quickly enough to evade platform rules. In contrast, authenticity labels applied in hardware or in the capture app use public-key cryptography to sign an image or video at creation and carry that signed record through later edits, so its provenance survives.

The trust gap is widening. In the Reuters Institute’s Digital News Report, 59% of respondents across the surveyed markets said they were concerned about what is real and what is fake on the internet when it comes to news. The problem is even more acute on visually led platforms, where manipulated media spreads quickly and can look convincing at first glance.

How Cryptographic Provenance Would Function

In the approach Mosseri prefers, cameras and capture apps would cryptographically sign the captured pixels, attaching tamper-evident metadata that records when, where and how the media was captured. The Coalition for Content Provenance and Authenticity (C2PA), founded by Adobe, Intel, Microsoft, the BBC and others, publishes an open standard that defines these signatures along with a way to display “Content Credentials” to viewers.
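To make the mechanism concrete, here is a minimal Python sketch of a signed chain of custody. It assumes a simplified model in which each capture or edit step appends a record holding a hash of the media and a link to the previous record, then signs that record with the actor’s private key. It is not the C2PA manifest format or any vendor’s API: the names `make_key`, `add_step` and `verify_chain` are hypothetical, and the toy RSA keys built from well-known Mersenne primes stand in for real device keys and certificates.

```python
import hashlib
import json

# TOY textbook RSA for illustration only. NOT SECURE: no padding, and the
# primes used below are well-known Mersenne primes. Real systems use vetted
# signature schemes plus certificates that tie keys to devices and apps.
E = 65537


def make_key(p: int, q: int) -> dict:
    """Build a toy RSA key pair from two primes (hypothetical helper)."""
    n = p * q
    d = pow(E, -1, (p - 1) * (q - 1))  # modular inverse, Python 3.8+
    return {"public": (n, E), "private": (n, d)}


def _digest(data: bytes, n: int) -> int:
    # Hash first, then reduce the hash into the RSA group before signing.
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def sign(data: bytes, private: tuple) -> int:
    n, d = private
    return pow(_digest(data, n), d, n)


def verify(data: bytes, sig: int, public: tuple) -> bool:
    n, e = public
    return pow(sig, e, n) == _digest(data, n)


def _body(entry: dict) -> bytes:
    # Deterministic serialization of everything except the signature.
    unsigned = {k: v for k, v in entry.items() if k != "signature"}
    return json.dumps(unsigned, sort_keys=True).encode()


def _entry_hash(entry: dict) -> str:
    # Hash of a full record, signature included, used to link the next step.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()


def add_step(chain: list, actor: str, action: str, media: bytes, key: dict) -> list:
    """Append a signed record of one capture or edit step."""
    entry = {
        "actor": actor,
        "action": action,
        "content_hash": hashlib.sha256(media).hexdigest(),
        "prev": _entry_hash(chain[-1]) if chain else None,  # link to prior step
    }
    entry["signature"] = sign(_body(entry), key["private"])
    return chain + [entry]


def verify_chain(media: bytes, chain: list, trusted: dict) -> bool:
    """Check every signature and link, then that the last hash matches the media."""
    prev = None
    for entry in chain:
        public = trusted.get(entry["actor"])
        if public is None or entry["prev"] != prev:
            return False
        if not verify(_body(entry), entry["signature"], public):
            return False
        prev = _entry_hash(entry)
    return bool(chain) and chain[-1]["content_hash"] == hashlib.sha256(media).hexdigest()


camera = make_key(2**127 - 1, 2**89 - 1)
editor = make_key(2**107 - 1, 2**61 - 1)
trusted = {"camera": camera["public"], "editor": editor["public"]}

raw = b"raw sensor pixels"
chain = add_step([], "camera", "captured", raw, camera)
cropped = raw[4:]
chain = add_step(chain, "editor", "cropped", cropped, editor)

print(verify_chain(cropped, chain, trusted))            # True
print(verify_chain(b"swapped pixels", chain, trusted))  # False: pixels replaced
print(verify_chain(cropped, chain[1:], trusted))        # False: capture record stripped
```

The last check mirrors the pipeline problem: dropping a single record, as happens when a tool strips metadata, is enough to break verification even though the remaining signatures are valid.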

Camera makers are already moving. Leica has added Content Credentials to a production camera, Sony has field-tested in-camera signing with major news organizations, and Nikon has announced similar capabilities. Newsrooms such as The Associated Press and Reuters have joined provenance initiatives so that editors and audiences can see a photo’s history before it reaches the front page or a feed.

On the software side, companies are building provenance into creation tools. Adobe’s apps can attach Content Credentials to files as they move through editing workflows, while Google DeepMind and others are researching more durable watermarks for AI output. The goal is not to outlaw synthetic media but to give viewers a reliable signal when a piece of media is confirmed to have been captured in the real world.

How Platform Policies Are Evolving on AI and Authenticity

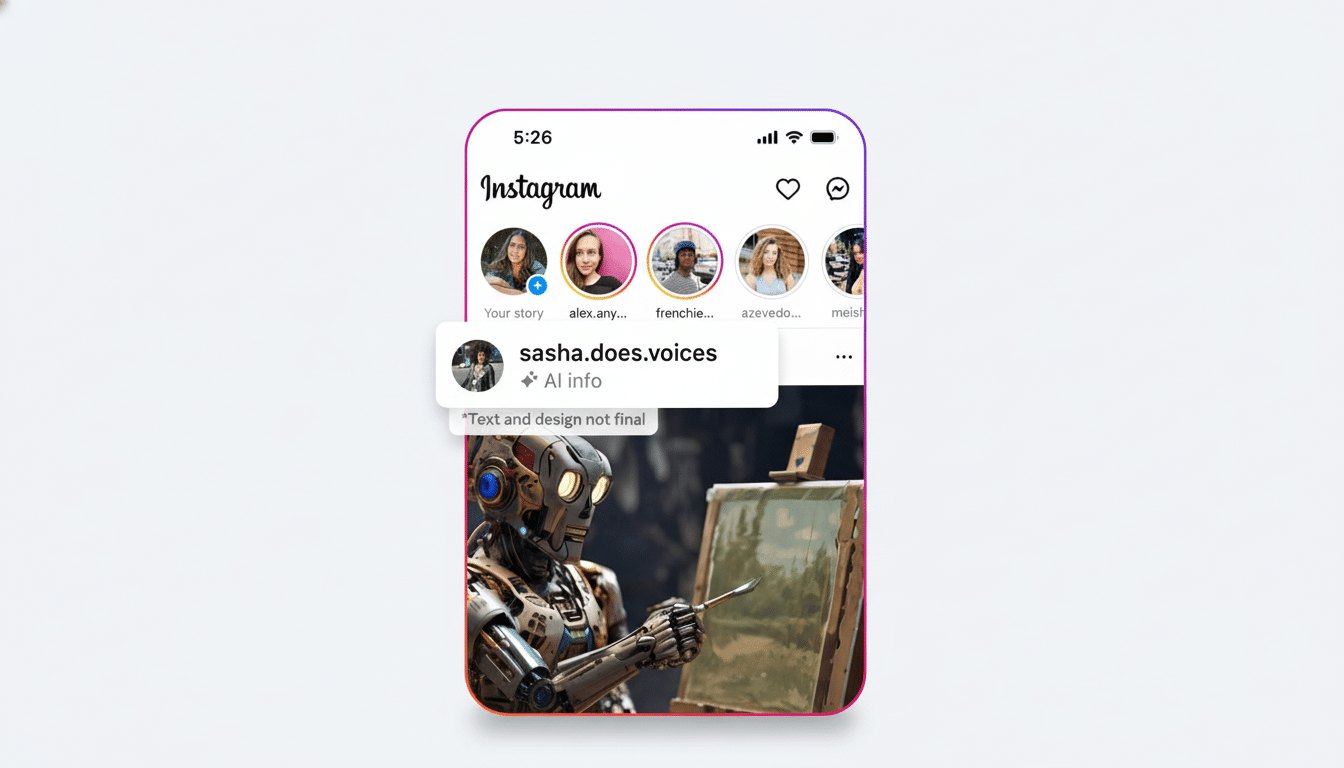

Major platforms have added labels for AI-generated content, and several now require creators to disclose when they use synthetic media. YouTube has added creator disclosures, with more visible labels on sensitive topics; TikTok requires labels on AI-generated deepfakes; and Meta has said it will label AI-generated images that it detects or that creators flag. But detection is still imperfect, and disclosures are easy to skip or misuse.

Regulators are also weighing in. The EU’s emerging rules push platforms to identify deepfakes, and governments have urged industry to adopt provenance standards. Mosseri’s suggestion fits that trajectory: it focuses on verifiable signals that persist even as generative models improve and their output slips past existing detection methods.

The Trade-Offs and Risks of Cryptographic Provenance Labels

Provenance is not a cure-all. Adoption has to span devices, from smartphones to professional cameras, and the full pipeline: editing, compression and reposting. If any step strips the metadata, the authenticity signal breaks. Video is especially hard at scale because it passes through many tools and formats before it is published.

Privacy is another concern. Activists and journalists working under threat, as well as everyday users, may not want their location or device identifiers exposed. Standards groups are working on ways to show that a file is genuine without revealing sensitive details, but the user experience must make that trade-off clear. There is also a risk of “realness privilege,” in which accounts that use signed capture gain algorithmic advantages over creators who can’t or won’t adopt the technology.
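One generic way to prove a file is genuine without exposing every detail is a salted hash commitment: the capture device signs a hash of each metadata field, and the creator later reveals only the fields they choose. The Python sketch below assumes that scheme; it is an illustration, not a description of what C2PA or any standards body has adopted. The helper names, field names and values are hypothetical, and the signing step is omitted (assume the commitments sit inside a signed record).

```python
import hashlib
import secrets


def _hash(salt: str, value: str) -> str:
    # The salt is fixed-length hex with no ":", so "salt:value" is unambiguous.
    return hashlib.sha256(f"{salt}:{value}".encode()).hexdigest()


def commit(fields: dict) -> tuple:
    """Return (commitments, openings) for a set of metadata fields.

    Hypothetical helper: the commitments are what a capture device would sign
    and publish; the openings (salt, value) stay private with the creator.
    """
    commitments, openings = {}, {}
    for name, value in fields.items():
        salt = secrets.token_hex(16)  # 128 random bits keep the value hidden
        commitments[name] = _hash(salt, str(value))
        openings[name] = (salt, str(value))
    return commitments, openings


def check_disclosed(commitments: dict, disclosed: dict) -> bool:
    """Verify only the fields the creator chose to reveal."""
    return all(
        commitments.get(name) == _hash(salt, value)
        for name, (salt, value) in disclosed.items()
    )


# Hypothetical capture metadata.
commitments, openings = commit({
    "captured_at": "2024-05-01T09:30:00Z",
    "gps": "48.8584,2.2945",
    "device_serial": "HYPOTHETICAL-0001",
})

# Reveal the timestamp; keep the location and device serial private.
shared = {"captured_at": openings["captured_at"]}
print(check_disclosed(commitments, shared))  # True
```

A verifier sees only the commitments for the withheld fields, which reveal nothing useful without their salts, yet any altered disclosed value fails the check.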

Crucially, authenticity labels say nothing about context or intent. A real video can still mislead when it is clipped or miscaptioned. That is why Mosseri has also emphasized “credibility signals” about who is posting (account history, affiliations and past corrections), so audiences get more than a green checkmark on a photo.

What To Watch Next as Provenance Tech Rolls Out Broadly

Expect more firmware updates from camera makers and growing pressure on smartphone platforms to make provenance the default at capture. Look for clearer, easier-to-read Content Credentials on edited or remixed media across Instagram, Facebook and other apps. News organizations are likely to publish more assets with verifiable histories as the damage from manipulation around elections and major events grows.

Mosseri’s gamble is practical: as AI output becomes indistinguishable from reality, we’ll need a receipt for the truth. If industry coalitions and platforms can rally around signing real media at capture, users may never have to spot a deepfake themselves; they can simply ask content to prove it’s real.