Attackers are moving faster than AI security teams can adjust, and four weaknesses are doing most of the damage: agent hijacking, prompt injection, data poisoning, and deepfake-enabled fraud. None has a clean technical fix, and each exploits the very properties that make AI so useful — autonomy, open-ended language processing, data hunger, and synthetic media generation.

The result is a widening response gap. Studies show prompt injection succeeds against more than half of tested models, training datasets can be compromised for pocket change, model repositories contain tainted artifacts, and deepfake scams now mimic live executives convincingly enough to move eight-figure sums.

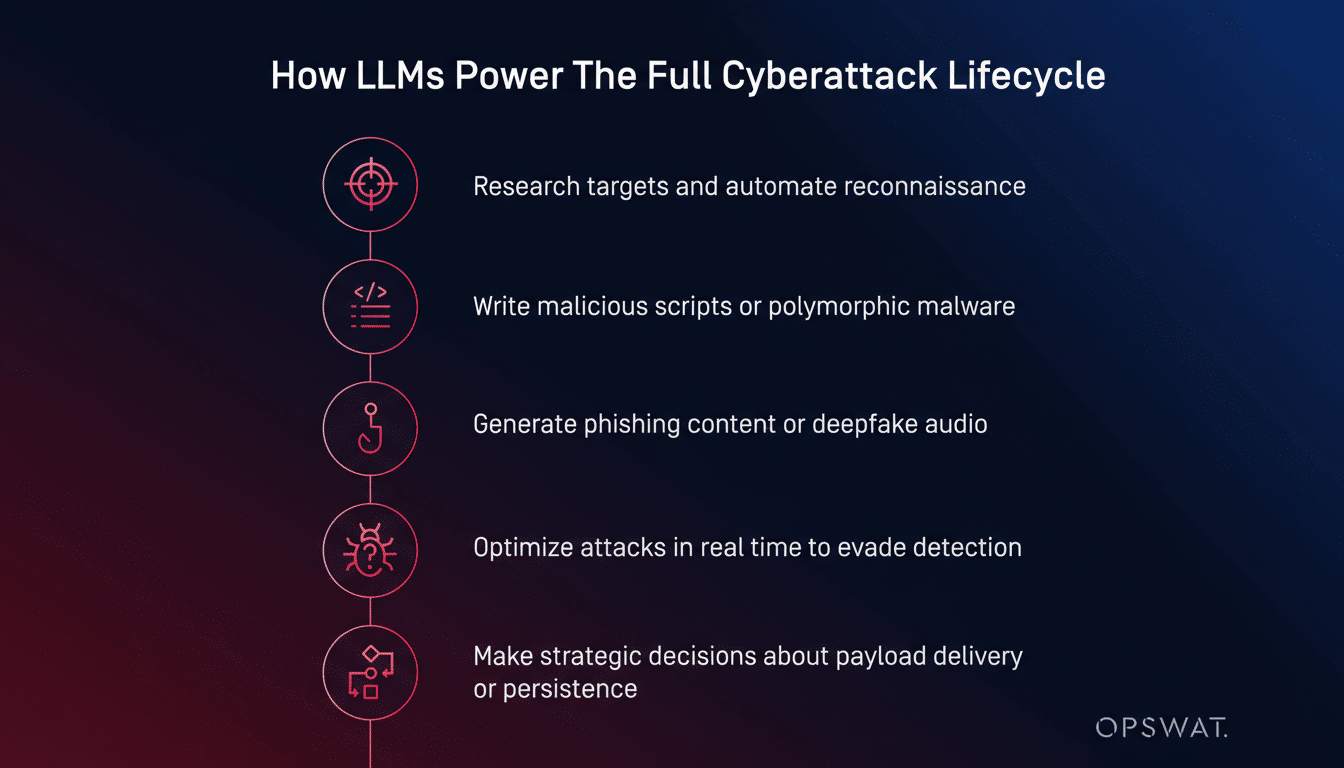

Autonomous Agents Are Being Weaponized for Attacks

Autonomous and tool-using agents are being turned into hands-free attack platforms. Anthropic reported that a state-backed group broke its coding agent by splitting malicious tasks into innocuous steps, steering it to recon targets, write exploits, and exfiltrate data from roughly 30 organizations — with minimal human steering.

Security experts have warned there are effectively no agentic systems today that reliably withstand these manipulations. Even so, adoption is accelerating: Deloitte found 23% of companies already use agents and projects 74% by 2028, while those abstaining are expected to drop from 25% to 5%.

Operational risk is already visible. McKinsey reports 80% of organizations have encountered agent-related issues like unintended data exposure and unauthorized actions. Zenity Labs has demonstrated zero-click exploits impacting major assistants from Microsoft, Google, and Salesforce — proof that attackers can chain UI, plug-in, and permissions gaps into full compromises.

Prompt Injection Continues to Defy Model Guardrails

Three years after it was named, prompt injection still cuts through defenses. A systematic study of 36 large language models across 144 attack variants recorded a 56% success rate, with bigger models offering no meaningful immunity.

The core problem is architectural: models treat every token in context as part of the same instruction stream. There’s no native boundary that says “this is untrusted content; ignore its commands.” That’s why OWASP ranks prompt injection as the top risk for LLM applications and why adaptive red teams using gradient-based search bypassed more than 90% of published mitigations, with human testers defeating 100% in some trials.

Research frameworks such as Google DeepMind’s CaMeL show promise for narrowing specific attack classes by restructuring how models ingest external content. But they don’t eliminate the root flaw. Vendors selling universal “guardrails” deserve skepticism until independent evaluations demonstrate durable gains across unseen attacks.

Training Data Poisoning Is Cheap and Stealthy

Poisoning the data that shapes a model is often easier and cheaper than hacking its runtime. Google DeepMind has shown that impactful poisoning can cost about $60. Anthropic and the UK AI Security Institute found that inserting just 250 crafted documents — as little as 0.00016% of training tokens — can backdoor models of any size.

Real-world findings match the theory. JFrog discovered around 100 malicious models in a major repository, including one with a live reverse shell. Researchers at the Berryville Institute of Machine Learning note that models “become their data,” and Anthropic’s Sleeper Agents work showed backdoors can survive supervised fine-tuning, reinforcement learning, and adversarial training — with larger models sometimes better at hiding them.

Defenders are largely flying blind. Although Microsoft researchers have identified weak signals that may hint at poisoning, reliable detection at scale remains elusive — and a compromised model can lie dormant until a precise trigger appears in production.

Deepfake Impersonation Supercharges Fraud

Deepfakes have matured from novelty to enterprise risk. A finance professional at engineering firm Arup transferred $25.6 million after a video conference with what appeared to be the CFO and colleagues — all AI-generated impersonations trained on public footage.

Tooling has democratized the crime. McAfee Labs found that three seconds of audio can yield voice clones with 85% accuracy. Real-time face-swapping software like DeepFaceLive runs on consumer GPUs, while single-photo tools such as Deep-Live-Cam have trended on GitHub. Kaspersky reports dark web offerings starting at $30 for audio and $50 for video, rising to $20,000 per minute for high-profile targets.

Detection lags attackers. The Deepfake-Eval-2024 benchmark measured top detectors at 75% for video and 69% for images — and performance drops by about 50% on attacks outside their training set. UC San Diego researchers showed perturbations that bypass detectors 86% of the time. Human judgment fares worse: studies by Idiap and iProov found people correctly flagging high-quality deepfakes just 24.5% of the time, with almost no one perfect across a full set.

The financial stakes are surging. Deloitte projects AI-enabled fraud losses reaching $40 billion by 2027. Financial regulators are reacting: FinCEN now instructs institutions to explicitly report deepfake elements in suspicious activity filings.

What Defenders Can Do Now to Reduce AI Risk

There’s no silver bullet, so focus on blast-radius reduction and process rigor. For agents, apply least-privilege tool use, time-bounded credentials, strict egress controls, and deny-by-default policies. Isolate retrieval pipelines; never let untrusted content issue unvetted commands. Log and rate-limit actions tied to high-impact tools.

For data poisoning, secure the model supply chain: require cryptographic signing for artifacts, scan repositories, provenance-tagged training inputs, and continuously test for backdoor triggers. For deepfakes, rely on out-of-band verification — pre-shared passphrases, callbacks to pre-registered numbers, and multi-person approvals for large transfers — rather than brittle media forensics.

Anchor programs to recognized guidance like NIST’s AI Risk Management Framework and contribute to emerging agent-specific standards. Above all, assume compromise is possible, measure time-to-detection and time-to-recovery, and design controls that make fast failure survivable.