I ran pairwise comparison tests of OpenAI’s GPT-5.2 and Google’s Gemini 3 in everyday and expert workflows, and the verdict is clear: GPT-5.2 feels like a minor refresh, while Gemini 3 feels like an update you would actually want to install. OpenAI has delivered general enhancements, though in practice they are subtle and often hard to tell apart from GPT-5.1. By contrast, Gemini 3 provides quicker and cleaner responses, along with clear improvements in vision and creative tasks that you will notice even if you are not a power user.

What’s actually new in GPT-5.2 and Google’s Gemini 3

OpenAI’s release notes position GPT-5.2 as more capable at long-context understanding, multi-step projects, spreadsheet operations, image processing, and tool use. Google packages Gemini 3 as a family: 3 Pro for high-end reasoning, 3 Flash for speed and efficiency, and Nano 3 Pro for generating and editing images. Both companies cite lower latency and better grounding, while Google also says that it uses more renewable energy to power its servers. On paper, it’s a near draw. In use, it isn’t.

Hands-on results consistently outperform the hype

Start with structured tasks. I asked GPT-5.2 and GPT-5.1 to build a reference chart of the last five Prime Warframes and the relics each one requires. GPT-5.2 avoided an accuracy error that GPT-5.1 made on its first attempt, but the older model corrected its answer as soon as it was prompted. In more general testing (presentations, math breakdowns, multi-step planning), the gap between 5.1 and 5.2 was practically imperceptible. I often found myself double-checking the model selector to confirm which one I was using.

Vision was equally telling. From a picture of a PC build, GPT-5.2’s component recognition and specificity were not substantially better than 5.1’s. Gemini 3, by contrast, was a clear step up from its 2.5 generation: component identification improved and wild guessing stopped, and it was roughly on par with or better than GPT-5.2 depending on the prompt.

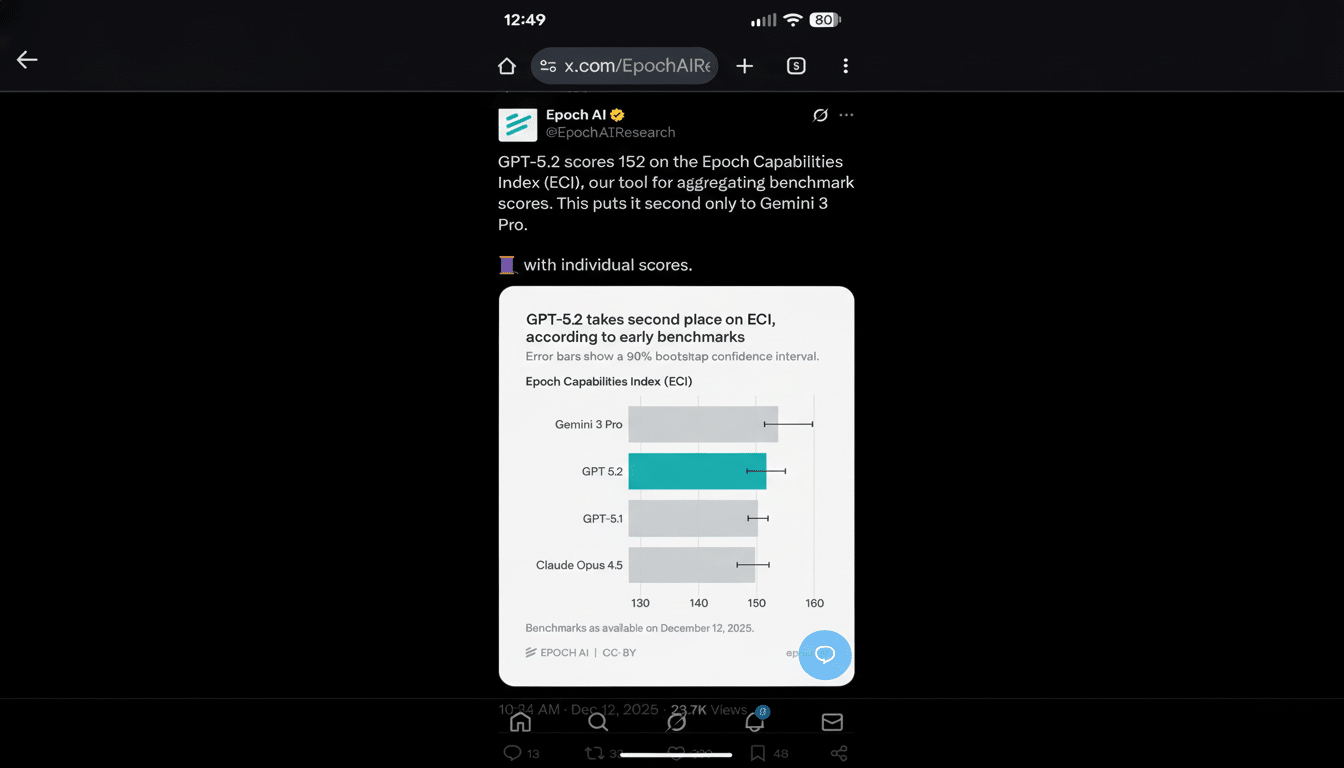

With deep research and complex reasoning, Gemini 3 Pro seemed more decisive: fewer tangents, tighter synthesis, and a quicker route to an answer. Google’s published benchmarks highlight improvements across computer science, math, and physics, and my own prompts reflected that. OpenAI’s own GPT-5.2 Thinking results indicate Gemini 3 Pro lags only a few points behind on some tests, which matches my experience of receiving broadly similar answers, except that Gemini usually reaches them faster and with less fluff. Independent eval hubs such as LMSYS Chatbot Arena and research from groups like Stanford’s CRFM and MLCommons have shown that small benchmark deltas can feel large when they affect speed and relevance, and that is the case with Gemini 3.

Why images and visuals are Gemini 3’s standout strength

The headline act is Gemini 3’s Nano 3 Pro. It is great for quick responses, style-control experiments, and iteration, with less artifacting than older models when infilling or doing layout-dependent composition. OpenAI’s GPT Image 1.5 is a real improvement for ChatGPT’s visuals, with crisper detail and better typography, but in side-by-side creative briefs Nano 3 Pro consistently produced results closer to the brief on the first generation, with no regeneration required.

Creative writing saw similar movement. When generating poems and short-form copy, Gemini 3 Flash adopts a less AI-predictable cadence and punctuation than 2.5 Flash, leaving behind the sing-song symmetry I’ve come to associate with questionable LLM text. GPT-5.2 can write solid drafts, but I found Gemini more willing to take stylistic chances that still managed to cohere.

Agentic coding performance and the use of developer tools

There is one area where GPT-5.2 can shine: agentic coding and tool calling. People like Jeff Wang of Windsurf and AJ Orbach of Triple Whale praised GPT-5.2 for needing minimal prompting and for lower perceived response latency. These claims are featured on OpenAI’s blog, and they seem to match scattered social posts from developers reporting cleaner function execution. Those gains matter in niche use cases, but the general user will struggle to see the day-to-day benefit.
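
For readers unfamiliar with tool calling, here is a minimal sketch of what "clean function execution" means in practice: the model emits a structured call, and the application checks it against a fixed schema before running anything. The tool name `get_weather`, its fields, and the `validate_tool_call` helper are hypothetical illustrations, not part of OpenAI’s or Google’s APIs.

```python
import json

# Hypothetical tool definition: a name plus the exact argument names and types
# the application is willing to accept. Not taken from any vendor's API.
GET_WEATHER_TOOL = {
    "name": "get_weather",
    "required": {"city": str, "days": int},
}


def validate_tool_call(raw_call: str, tool: dict) -> dict:
    """Parse a model-emitted tool call (JSON text) and check it against the schema.

    Returns the validated arguments, or raises ValueError if the call does not
    match the schema exactly.
    """
    call = json.loads(raw_call)
    if call.get("name") != tool["name"]:
        raise ValueError(f"unexpected tool name: {call.get('name')!r}")

    args = call.get("arguments", {})
    expected = tool["required"]

    # A rigid schema rejects both missing and unexpected arguments.
    missing = expected.keys() - args.keys()
    extra = args.keys() - expected.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")

    for key, expected_type in expected.items():
        if not isinstance(args[key], expected_type):
            raise ValueError(f"argument {key!r} should be {expected_type.__name__}")
    return args


# A well-formed call passes; a call missing "days" would raise ValueError.
good_call = '{"name": "get_weather", "arguments": {"city": "Oslo", "days": 3}}'
print(validate_tool_call(good_call, GET_WEATHER_TOOL))
```

A model that is better at tool calling simply produces calls that pass this kind of check more often on the first try, which is why the benefit shows up mainly in automated pipelines rather than in chat.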

Costs, pricing changes, and real-world practicality compared

Developers face a clear trade-off. OpenAI raised GPT-5.2 API pricing by about 40% per million tokens compared with GPT-5.1. Google’s Gemini 3 pricing differs by tier but is lower than GPT-5.2 in most cases for comparable workloads, and that counts when you’re scaling up. The practical concern for most consumers is availability: both companies have done their best to steer you toward the newest models and have phased out access to older ones.
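
To see why per-million-token pricing matters at scale, here is a rough sketch of the arithmetic. The baseline prices and the monthly token volumes are placeholder assumptions chosen for easy math, not actual OpenAI or Google rates; the 40% figure above is treated here as an increase.

```python
def monthly_cost(input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float) -> float:
    """Cost in dollars, with prices quoted per one million tokens."""
    return (input_tokens / 1_000_000) * input_price \
        + (output_tokens / 1_000_000) * output_price


def with_change(price: float, percent: float) -> float:
    """Apply a percentage price change (positive for an increase)."""
    return price * (1 + percent / 100)


# Placeholder baseline prices in dollars per million tokens (NOT real rates).
BASE_INPUT_PRICE = 1.00
BASE_OUTPUT_PRICE = 8.00

# Placeholder monthly workload.
INPUT_TOKENS = 500_000_000
OUTPUT_TOKENS = 50_000_000

old_cost = monthly_cost(INPUT_TOKENS, OUTPUT_TOKENS,
                        BASE_INPUT_PRICE, BASE_OUTPUT_PRICE)
new_cost = monthly_cost(INPUT_TOKENS, OUTPUT_TOKENS,
                        with_change(BASE_INPUT_PRICE, 40),
                        with_change(BASE_OUTPUT_PRICE, 40))

print(f"before: ${old_cost:,.2f}  after: ${new_cost:,.2f}")
```

Because the same percentage applies to both input and output prices, the total bill moves by that same percentage regardless of the input/output mix; the absolute difference is what grows with volume.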

Bottom line: choosing between GPT-5.2 and Google’s Gemini 3

GPT-5.2 is solid, respectable, and safe, but it hardly ever feels distinct. Gemini 3, for its part, genuinely feels faster and more vibrant, and it is ahead in research and in vision and creative tasks. OpenAI’s 5-series was already dominant, which may account for the incremental feel here, but Google’s upgrades are both more obvious and easier to appreciate this time around.

If you’re deciding based on daily performance (writing, research, image work, and so forth), Gemini 3 is the bigger improvement. If your stack leans heavily on agentic coding through rigid tool schemas, GPT-5.2 may still be the better fit. Watch official releases from OpenAI and Google Research, and check community benchmarks from academic groups and LMSYS; the margins are slim, but for the time being at least, Gemini 3 edges ahead where it matters most.