Fitness wearables are terrific at tracking what you burn but terrible at measuring what you eat. That disconnect is why a growing number of users want the next generation of smart glasses to serve as an automated food logbook. With Google parading real-time, glasses-ready AI and the category inching toward mainstream, the time is right for a nutrition-first push.

The Unaddressed Half of Fitness Tracking

Steps, runs and sleep are now captured passively. Meals are not. Manual logging remains the No. 1 reason people abandon diet apps, a pattern described repeatedly in studies published in the Journal of Medical Internet Research and Obesity. And it’s not a trivial gap: The C.D.C. estimates that 41.9 percent of American adults have obesity, and the Global Burden of Disease project, published in The Lancet, has linked dietary risks to some 11 million deaths each year. If tech wants to make more than a marginal difference in people’s lives, it needs to make tracking nutrition as effortless as tracking workouts.

Apps have tried to ease the grind with barcode scans, saved meals and AI photo recognition. These features help, but only if you remember to open the app at the right moment, and they stumble on partial dishes, shared plates and complex cuisines. The result is patchy data that undermines everything else your wearables do so well.

Why Glasses Are the Best Form Factor for Nutrition

Smart glasses see what you see and hear what you say, which gives them context your wrist can’t provide. Google has already unveiled Project Astra, a real-time multimodal assistant that it has demonstrated running on a glasses-like wearable. Elsewhere, Ray-Ban’s camera-equipped smart glasses have shown that everyday voice-and-vision eyewear can be socially acceptable, as long as the use case is obvious and privacy is well protected.

Nutrition is a natural fit. Instead of hunching over a phone to take a picture, glasses could quietly observe the meal, log the venue via location, parse the visible ingredients in an entree and estimate portion sizes without being prompted. A quick voice correction (“split the fries three ways,” “with extra olive oil”) would refine the entry on the spot.

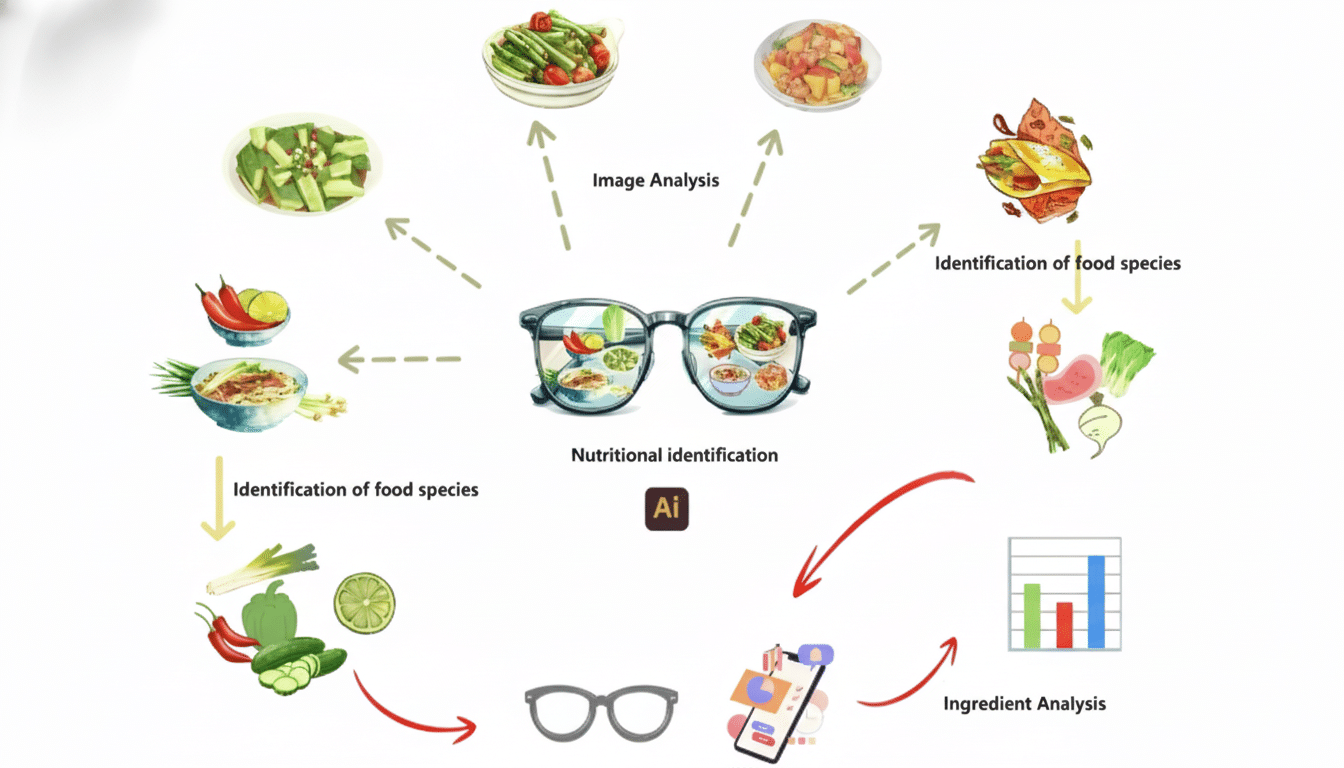

How Automated Food Logging on Glasses Could Work

That pipeline is technically feasible today. Computer vision can use segmentation to identify individual food items, depth cues to estimate volume and menu data to ground likely recipes. Benchmark datasets such as Food-101 have already helped push recognition accuracy higher, and contemporary LLMs can connect visual cues with context such as restaurant style, time of day or prior habits.
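
To make the last step of that pipeline concrete, here is a minimal Python sketch of how segmented items and depth-based volume estimates might be turned into a calorie log. It assumes an upstream vision model has already produced labels, volumes and confidence scores. The `DetectedItem` type, the function names and every number in `HYPOTHETICAL_FOOD_TABLE` are illustrative placeholders, not real nutrition data or any vendor's API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Illustrative placeholder values, NOT real nutrition data:
# label -> (grams per millilitre, kilocalories per gram)
HYPOTHETICAL_FOOD_TABLE = {
    "rice": (0.8, 1.3),
    "grilled_chicken": (1.0, 1.6),
}


@dataclass
class DetectedItem:
    label: str         # class name a segmentation model might emit
    volume_ml: float   # volume estimated from depth cues
    confidence: float  # model confidence in [0, 1]


def estimate_calories(item: DetectedItem) -> Optional[float]:
    """Convert an estimated volume into kilocalories, or None if the food is unknown."""
    entry = HYPOTHETICAL_FOOD_TABLE.get(item.label)
    if entry is None:
        return None
    grams_per_ml, kcal_per_gram = entry
    return item.volume_ml * grams_per_ml * kcal_per_gram


def log_meal(items: List[DetectedItem], min_confidence: float = 0.5) -> Tuple[float, List[str]]:
    """Return (total kilocalories, labels that need user confirmation)."""
    total = 0.0
    needs_review = []
    for item in items:
        kcal = estimate_calories(item)
        # Unknown foods and low-confidence detections are deferred to the user.
        if kcal is None or item.confidence < min_confidence:
            needs_review.append(item.label)
            continue
        total += kcal
    return total, needs_review
```

Anything the sketch cannot resolve is returned for review rather than guessed, which matches the article's emphasis on quick corrections over perfect accuracy.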

For the platform, Android has Health Connect, which can serve as the data backbone syncing your nutritional entries to Google Fit, Fitbit and third-party apps like Cronometer, Lose It or MyFitnessPal. For those using continuous glucose monitors from Dexcom or Abbott, glasses-driven food logs could automatically match meals to glucose responses, instantly converting disparate numbers into a coherent narrative.
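
As a rough illustration of matching meals to glucose responses, the Python sketch below computes the rise from the last pre-meal reading to the peak reading in a window after the meal. The two-hour window, the function name and the `(timestamp, mg/dL)` tuple format are assumptions made for this example. They are not drawn from Dexcom, Abbott or Health Connect interfaces, and the result is not a clinical metric.

```python
from datetime import datetime, timedelta


def glucose_rise_after_meal(meal_time, readings, window=timedelta(hours=2)):
    """Return peak post-meal glucose minus the last pre-meal reading, or None.

    readings: list of (timestamp, mg_dl) tuples, in any order.
    """
    before = [(t, v) for t, v in readings if t <= meal_time]
    after = [v for t, v in readings if meal_time < t <= meal_time + window]
    if not before or not after:
        return None  # not enough data on one side of the meal
    # Baseline is the most recent reading at or before the meal.
    baseline = max(before, key=lambda r: r[0])[1]
    return max(after) - baseline
```

A log that pairs each meal entry with this kind of number is what would let an app show which meals tend to precede the largest spikes.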

Picture yourself walking into a familiar cafe. Your glasses recognize the plate, match it to a menu item, infer the portion size and write a log entry before you take your first bite. You glance at it to confirm, say “no mayo, add cheese,” and the entry updates. Later, your watch nudges you toward a little more protein at dinner because the day’s intake is running low. That’s the sort of closed loop that makes nutrition tracking finally stick.
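
The correction step in that scenario can be sketched in a few lines of Python. This is a toy example that assumes speech has already been transcribed and that commands follow a simple "no X" / "add X" pattern separated by commas. A real assistant would rely on a language model instead of this hypothetical grammar, and the function name is invented for illustration.

```python
def apply_voice_correction(ingredients, utterance):
    """Return a new ingredient list after applying 'no X' / 'add X' phrases."""
    updated = list(ingredients)  # copy so the original entry is left untouched
    for phrase in utterance.lower().split(","):
        words = phrase.split()
        if len(words) < 2:
            continue  # ignore fragments without a command and an item
        command, item = words[0], " ".join(words[1:])
        if command == "no" and item in updated:
            updated.remove(item)
        elif command == "add" and item not in updated:
            updated.append(item)
    return updated
```

For example, calling `apply_voice_correction(["bun", "patty", "mayo"], "no mayo, add cheese")` yields `["bun", "patty", "cheese"]`.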

The Difficult Problems to Solve for Food Logging

Food is culturally specific and infuriatingly variable. Portion estimates are noisy, home recipes do not come with labels and cooking techniques alter macros. Accuracy will never be perfect, but a good estimate is better than no entry at all. The trick is quick, low-friction capture with easy voice or gesture corrections.

Privacy is non-negotiable. Always-on capture of this kind should be opt-in, clearly signaled and designed for on-device processing of ephemeral data. Visual data that never leaves the glasses, visible recording indicators and granular controls over footage captured in shared spaces can maintain both social and regulatory trust. Any health claims also have to be carefully positioned to avoid running afoul of the rules around medical devices.

Hardware matters, too. The glasses need a lightweight frame, all-day battery life, secure microphones and depth-capable cameras. On-device AI should cut latency while keeping data private, with the cloud stepping in only when required.

What Google Should Do Next to Enable Nutrition Tracking

First, run Astra-class multimodal AI reliably on a glasses reference design, with a food recognition mode that processes on-device by default. Second, extend Health Connect’s nutrition schema to include portions, cooking methods and confidence scores, and create robust APIs that allow partners to update entries over time.

Third, partner with Maps and Search to ingest restaurant menus, regional cuisines and common ingredient lists. Fourth, ship a developer toolkit that lets nutrition platforms plug into the glasses’ capture flow and provide premium verification or coaching layers. Finally, run pilots with volunteers who eat a diverse range of cuisines, not just Western menus, to train models where current apps are weakest.
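
The schema extension proposed above, with portions, cooking methods and confidence scores, might look something like the following Python sketch. It is purely hypothetical: `NutritionEntry`, its fields and `update_entry` are invented here to illustrate the idea and do not reflect Health Connect's actual data types or APIs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NutritionEntry:
    food: str
    portion_grams: float
    cooking_method: Optional[str]  # e.g. "grilled"; None when unknown
    confidence: float              # 0.0 to 1.0, set by whoever wrote the entry
    source: str                    # e.g. "glasses" or "partner_app"


def update_entry(current: NutritionEntry, proposed: NutritionEntry) -> NutritionEntry:
    """Keep whichever version of an entry carries the higher confidence."""
    if proposed.food != current.food:
        raise ValueError("entries describe different foods")
    return proposed if proposed.confidence > current.confidence else current
```

Under this assumed rule, a partner app that verifies a meal could overwrite a low-confidence automatic guess, while a later, weaker guess could not overwrite a verified entry.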

Wearables solved output. Glasses can solve input. If Google turns smart eyewear into an almost invisible food logbook, it will have done more than build a feature for another gadget. It will have closed the loop in digital health, making the most difficult habit in wellness easier to keep than to skip.